Bug

Resolution: Done-Errata

Critical

None

Incidents & Support

False

True

Critical

Description of problem:

The destination VM shows XFS filesystem corruption after a warm migration of a VMware VM to OpenShift Virtualization using MTV.

Followed the below test steps:

[1] The VM has 2 disks; the 2nd disk is mounted on /test.

Started fio with target /test on the VMware VM:

fio --rw=randrw --ioengine=libaio --directory=/test/ --size=10G --bs=4k --name=vm_perf --runtime=12000 --time_based --iodepth=512 --group_reporting

The runtime is kept high so that it always runs during the migration.

[2] Reduced the “controller_precopy_interval” to 10 minutes for faster precopy.

[3] Started a warm migration.

After the initial process of the full download of the virtual disks, when the DVs were in paused state, I manually tried to map and mount the rbd device from the worker node and it was successful:

[root@openshift-worker-deneb-0 ~]# mount /dev/rbd3 /mnt/
[root@openshift-worker-deneb-0 ~]# ls /mnt/
test.out test2.out vm_perf.0.0

[4] Then waited for the first incremental copy to complete and tried again to mount the disk while the DV was in paused state. It failed with the following error:

[root@openshift-worker-deneb-0 ~]# mount /dev/rbd3 /mnt/

mount: /var/mnt: wrong fs type, bad option, bad superblock on /dev/rbd3, missing codepage or helper program, or other error.

Journalctl:

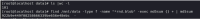

Nov 10 07:16:50 openshift-worker-deneb-0 kernel: XFS (rbd3): Mounting V5 Filesystem e1483691-a7e0-489a-aa73-fd469a7fcdd6
Nov 10 07:16:50 openshift-worker-deneb-0 kernel: XFS (rbd3): Corruption warning: Metadata has LSN (1:5851) ahead of current LSN (1:5823). Please unmoun>
Nov 10 07:16:50 openshift-worker-deneb-0 kernel: XFS (rbd3): log mount/recovery failed: error -22
Nov 10 07:16:50 openshift-worker-deneb-0 kernel: XFS (rbd3): log mount failed

So the problem is during the incremental copy.
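To compare the corruption warnings from the different stages, the LSN pairs can be pulled out of the kernel messages. The following is a minimal sketch, not part of MTV or any XFS tooling; the function names are hypothetical, and it assumes the kernel prints each LSN as (cycle:block), as in the messages quoted in this report.

```python
import re

# Matches the XFS warning quoted in this report; each LSN is printed as (cycle:block).
LSN_WARNING = re.compile(
    r"Metadata has LSN \((\d+):(\d+)\) ahead of current LSN \((\d+):(\d+)\)"
)


def parse_lsn_warning(line):
    """Return ((meta_cycle, meta_block), (cur_cycle, cur_block)), or None if no match."""
    m = LSN_WARNING.search(line)
    if m is None:
        return None
    meta_cycle, meta_block, cur_cycle, cur_block = (int(g) for g in m.groups())
    return (meta_cycle, meta_block), (cur_cycle, cur_block)


def blocks_ahead(meta, cur):
    """Log blocks by which the metadata LSN leads; None when the cycles differ."""
    if meta[0] != cur[0]:
        return None
    return meta[1] - cur[1]


# Warning seen on the worker node after the first incremental copy.
worker_line = (
    "XFS (rbd3): Corruption warning: Metadata has LSN (1:5851) "
    "ahead of current LSN (1:5823). Please unmoun>"
)
# Warning seen by virt-v2v after the cutover.
v2v_line = (
    "XFS (sdb): Corruption warning: Metadata has LSN (1:5905) "
    "ahead of current LSN (1:5823). Please unmount and run xfs_repair (>= v4.3) to resolve."
)

for label, line in (("worker", worker_line), ("virt-v2v", v2v_line)):
    meta, cur = parse_lsn_warning(line)
    print(label, "metadata", meta, "current", cur, "ahead by", blocks_ahead(meta, cur))
```

In both messages the current LSN is 1:5823, while the metadata LSN differs (1:5851 after the first incremental copy, 1:5905 after the cutover).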

[5] Performed the cutover; virt-v2v hit the same error.

command: mount '-o' 'ro' '/dev/sdb' '/sysroot//'
[ 7.904799] SGI XFS with ACLs, security attributes, scrub, quota, no debug enabled
[ 7.914852] XFS (sdb): Mounting V5 Filesystem e1483691-a7e0-489a-aa73-fd469a7fcdd6
[ 8.027063] XFS (sdb): Corruption warning: Metadata has LSN (1:5905) ahead of current LSN (1:5823). Please unmount and run xfs_repair (>= v4.3) to resolve.
[ 8.031136] XFS (sdb): log mount/recovery failed: error -22
[ 8.033444] XFS (sdb): log mount failed

VM boots into maintenance mode because it’s unable to mount the second disk.

Note that steps [4] and [5] were only meant to identify the stage at which the disk becomes corrupted; I made sure the DV was paused while doing them. The issue is reproducible without those steps.

One thing I noticed during the test is that forklift is deleting the snapshot immediately after it sets checkpoints on the DVs. So when the importer pod spawns and does QueryChangedDiskAreas, it is waiting for the snapshot delete operation to finish.

I1110 07:20:54.685177 1 vddk-datasource_amd64.go:298] Current VM power state: poweredOff
<-- Blocked during snapshot delete -->
I1110 07:24:22.450431 1 vddk-datasource_amd64.go:900] Set disk file name from current snapshot: [nfs-dell-per7525-02] rhel9/rhel9_2.vmdk

According to https://docs.vmware.com/en/VMware-vSphere/8.0/vsphere-vddk-programming-guide/GUID-C9D1177E-009E-4366-AB11-6CD99EF82B5B.html , shouldn’t we delete the previous snapshot before creating another one and starting the download? I am not sure whether this ordering is what causes the problem.
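To quantify how long the importer was blocked, the gap between the two importer log lines above can be computed from their klog-style timestamps. This is a minimal sketch: `klog_time` is a hypothetical helper, and the year is an assumption because the log header carries only month and day (it does not affect the difference here, since both lines are from the same day).

```python
import re
from datetime import datetime

# klog header: severity letter, MMDD, then HH:MM:SS.microseconds
KLOG_PREFIX = re.compile(r"^[IWEF](\d{4}) (\d{2}:\d{2}:\d{2}\.\d{6})")


def klog_time(line, year=2024):
    """Parse the timestamp at the start of a klog line (year is assumed, not logged)."""
    m = KLOG_PREFIX.match(line)
    if m is None:
        raise ValueError("not a klog-formatted line: %r" % line)
    stamp = "%d%s %s" % (year, m.group(1), m.group(2))
    return datetime.strptime(stamp, "%Y%m%d %H:%M:%S.%f")


before = "I1110 07:20:54.685177 1 vddk-datasource_amd64.go:298] Current VM power state: poweredOff"
after = (
    "I1110 07:24:22.450431 1 vddk-datasource_amd64.go:900] "
    "Set disk file name from current snapshot: [nfs-dell-per7525-02] rhel9/rhel9_2.vmdk"
)

gap = klog_time(after) - klog_time(before)
print("importer blocked for", gap)
```

For the two lines quoted in this report, the gap is about 3 minutes 28 seconds.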

Version-Release number of selected component (if applicable):

Migration Toolkit for Virtualization Operator 2.7.3

OpenShift Virtualization 4.17.0

Guest is RHEL 9.4

How reproducible:

I can consistently reproduce the problem in my test environment with fio running.

Actual results:

XFS filesystem corruption after warm migration of VM from VMware

Expected results:

No corruption

- is related to: MTV-1681 [SPIKE] Investigate disabled snapshot consolidation (New)

- relates to: MTV-1679 XFS filesystem corruption after warm migration of VM from VMware (too many changed blocks) (Closed)

- split to: MTV-1679 XFS filesystem corruption after warm migration of VM from VMware (too many changed blocks) (Closed)

- split to: MTV-1664 Warm migration with pre-existing snapshot will not work (Closed)

- links to: RHBA-2024:142279 MTV 2.7.4 Images