- Bug
- Resolution: Done
- Blocker
- RHODS_1.5.0_GA

- 1.6.0-8

-

No

-

-

No

-

Yes

-

None

-

Description of problem:

During a RHODS upgrade from 1.3 to 1.5, we noticed that some of the images are not updated correctly. We have confirmed this behaviour for Standard Data Science, PyTorch and TensorFlow, but it likely applies to all images.

During an upgrade, the ImageStreams are updated correctly: the OCP console shows newer timestamps, the ImageStream metadata is updated to the latest version, and the spawner tooltip and description are updated. However, if an image is already cached on the worker node where a new server is spawned after the RHODS upgrade, the new image is not pulled; the locally cached copy is used instead.

As a result, what the spawner shows differs from what is actually installed in the image. In this specific case, tensorflow and tensorboard were installed at version 2.4.1 instead of 2.7 and 2.6 respectively, and jupyterlab and notebook were still at the RHODS 1.3 versions (3.0.16 and 6.4.4), which are affected by a known CVE.

Removing the locally cached image forces RHODS to pull the newer one, which fixes this issue. I believe this happens because the image pull policy is set to `IfNotPresent` and the images in question keep the same tag even when the images themselves have changed. This is similar to the issue observed in RHODS-2162.
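The suspected mechanism can be illustrated with a small model. This is a simplified sketch, not kubelet or RHODS code: `Registry`, `Node` and `resolve_image` are hypothetical names introduced for illustration, and the digests are placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class Registry:
    """Hypothetical registry: maps a tag reference to its current digest."""
    tags: dict = field(default_factory=dict)


@dataclass
class Node:
    """Hypothetical worker node: maps a cached tag reference to a digest."""
    cache: dict = field(default_factory=dict)


def resolve_image(node, registry, ref, pull_policy):
    """Return the digest a newly spawned container would run with."""
    if pull_policy == "IfNotPresent" and ref in node.cache:
        # The tag is already cached locally, so the registry is not consulted.
        return node.cache[ref]
    # "Always", or the image is missing: resolve the tag against the registry.
    digest = registry.tags[ref]
    node.cache[ref] = digest
    return digest


ref = "tensorflow:py3.8-cuda-11.0.3"
registry = Registry({ref: "sha256:old"})
node = Node()

# Before the upgrade: the first spawn caches the old image on the node.
resolve_image(node, registry, ref, "IfNotPresent")

# Upgrade: the same tag now points at a rebuilt image.
registry.tags[ref] = "sha256:new"

stale = resolve_image(node, registry, ref, "IfNotPresent")  # cached copy wins
fresh = resolve_image(node, registry, ref, "Always")        # tag is re-resolved
```

In this model the tag never changes, so `IfNotPresent` keeps returning the cached digest until the cache entry is removed, which matches the effect of the manual workaround.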

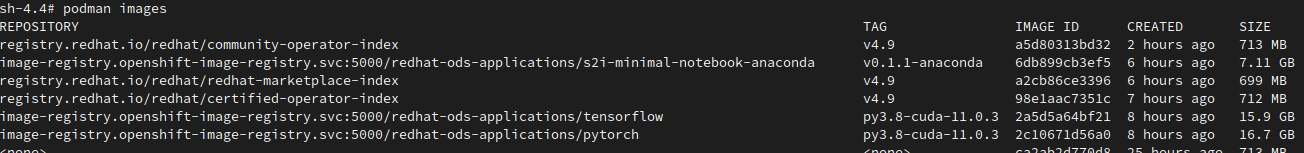

This is an example of what we are seeing, node cache:

ImageStream data:

    $ oc get is -n redhat-ods-applications
    11.0.3-cuda-s2i-base-ubi8 default-route-openshift-image-registry.apps.ods-qe-upgr.4ujn.s1.devshift.org/redhat-ods-applications/11.0.3-cuda-s2i-base-ubi8 latest 4 hours ago
    11.0.3-cuda-s2i-core-ubi8 default-route-openshift-image-registry.apps.ods-qe-upgr.4ujn.s1.devshift.org/redhat-ods-applications/11.0.3-cuda-s2i-core-ubi8 latest 4 hours ago
    11.0.3-cuda-s2i-py38-ubi8 default-route-openshift-image-registry.apps.ods-qe-upgr.4ujn.s1.devshift.org/redhat-ods-applications/11.0.3-cuda-s2i-py38-ubi8 latest 4 hours ago
    11.0.3-cuda-s2i-thoth-ubi8-py38 default-route-openshift-image-registry.apps.ods-qe-upgr.4ujn.s1.devshift.org/redhat-ods-applications/11.0.3-cuda-s2i-thoth-ubi8-py38 latest 4 hours ago
    minimal-gpu default-route-openshift-image-registry.apps.ods-qe-upgr.4ujn.s1.devshift.org/redhat-ods-applications/minimal-gpu py3.8-cuda-11.0.3 4 hours ago
    nvidia-cuda-11.0.3 default-route-openshift-image-registry.apps.ods-qe-upgr.4ujn.s1.devshift.org/redhat-ods-applications/nvidia-cuda-11.0.3 latest 5 hours ago
    pytorch default-route-openshift-image-registry.apps.ods-qe-upgr.4ujn.s1.devshift.org/redhat-ods-applications/pytorch py3.8-cuda-11.0.3 4 hours ago
    s2i-generic-data-science-notebook default-route-openshift-image-registry.apps.ods-qe-upgr.4ujn.s1.devshift.org/redhat-ods-applications/s2i-generic-data-science-notebook py3.8 4 hours ago
    s2i-minimal-notebook default-route-openshift-image-registry.apps.ods-qe-upgr.4ujn.s1.devshift.org/redhat-ods-applications/s2i-minimal-notebook py3.8 4 hours ago
    tensorflow default-route-openshift-image-registry.apps.ods-qe-upgr.4ujn.s1.devshift.org/redhat-ods-applications/tensorflow py3.8-cuda-11.0.3 4 hours ago

Prerequisites (if any, like setup, operators/versions):

RHODS 1.3 with locally cached images, upgraded to RHODS 1.5

Steps to Reproduce

- Install RHODS 1.3

- Spawn server with all images to locally cache them

- Upgrade RHODS to 1.5

- Confirm that ImageStreams have been correctly updated

- Try spawning a server with one of the cached images on the same worker node

- Verify that the spawner is not pulling the new image but using the cached copy
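The last verification step can be sketched as a digest comparison. This is a minimal sketch under stated assumptions: it assumes the pod's `status.containerStatuses[].imageID` and the ImageStream tag's newest `status.tags[].items[0].image` have already been fetched (for example with `oc get ... -o json`) and that both refer to the same kind of digest. `digest_of` and `uses_stale_image` are hypothetical helper names, and the digests below are placeholders, not values from this cluster.

```python
def digest_of(image_ref):
    """Extract 'sha256:...' from a reference such as 'repo/name@sha256:abc'."""
    if "@" in image_ref:
        return image_ref.rsplit("@", 1)[1]
    # A bare digest is accepted as-is; anything else carries no digest.
    return image_ref if image_ref.startswith("sha256:") else None


def uses_stale_image(pod_image_id, imagestream_digest):
    """True when the running container's digest differs from the ImageStream's."""
    pod_digest = digest_of(pod_image_id)
    if pod_digest is None:
        raise ValueError(f"no digest found in {pod_image_id!r}")
    return pod_digest != digest_of(imagestream_digest)


# Placeholder values for illustration only.
old_digest = "sha256:" + "a" * 64
new_digest = "sha256:" + "b" * 64
pod_image_id = "example-registry/redhat-ods-applications/tensorflow@" + old_digest

stale = uses_stale_image(pod_image_id, new_digest)    # cached RHODS 1.3 image
current = uses_stale_image(pod_image_id, old_digest)  # digests match
```

A `True` result for a freshly spawned server after the upgrade would indicate that the cached copy was used instead of the updated image.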

Actual results:

The servers that are being spawned still use the images provided with RHODS 1.3 rather than the updated ones for RHODS 1.5

Expected results:

Updated images are used

Reproducibility (Always/Intermittent/Only Once):

Always when upgrading from RHODS 1.3 to 1.5 with old images cached

Build Details:

RHODS 1.5.0-7 on OSD, OCP 4.8/4.9

Workaround:

Manually remove the cached images from the worker nodes

Additional info:

- duplicates: RHODS-2364 Rebuilt Notebook images not picked up, due to image caching on nodes (Closed)
- relates to: RHODS-2748 Change Image versioning with Intel oneApi AiKit (Backlog)
- relates to: RHODS-1961 Image versioning issues with OpenVino (Review)