Bug

Resolution: Done

Minor

AI265 - RHOAI2.13-en-2-20250124

en-US (English)

Please fill in the following information:

| URL: | https://role.rhu.redhat.com/rol-rhu/app/courses/ai265-2.13/pages/ch02s02 |

| Reporter RHNID: | rhn-support-ablum |

| Section Title: | Guided Exercise: Using Model Servers to Deploy Models |

Issue description

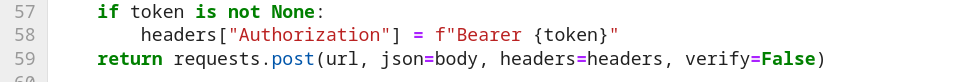

Line 58 of the provided utils.py, inside the send_inference_request() function, has a small bug that produces a malformed Authorization: Bearer header when token-based authentication is used.

Although the exercise includes a step to NOT enable authentication for the externally exposed model, learners hit errors if authentication is enabled and the token is correctly supplied in validate_model_servers.ipynb.
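For reference, here is a minimal sketch of how a well-formed bearer header is usually built in Python. The actual line 58 of utils.py is not reproduced in this report, so `build_headers` is a hypothetical helper for illustration, not the course code.

```python
from typing import Dict, Optional


def build_headers(token: Optional[str] = None) -> Dict[str, str]:
    """Build request headers, adding a bearer token only when one is given.

    Hypothetical helper: the real utils.py may structure this differently.
    """
    headers = {"Content-Type": "application/json"}
    if token:
        # The header value is the literal word "Bearer", one space, then the token.
        headers["Authorization"] = f"Bearer {token}"
    return headers


# Placeholder token value, used only to show the resulting header.
print(build_headers("example-token"))
print(build_headers())
```

Printing the headers before sending the request is a quick way to confirm that the Authorization entry is present and has the expected shape.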

Steps to reproduce:

1 - Ensure require token is checked on the multimodel server

2 - Store the generated token value in a variable in validate_model_servers.ipynb:

```
token = "eyJhbGc...SNIP...6LPnFo"
```

3 - Add the token when calling send_inference_request in validate_model_servers.ipynb:

```
print("\nValidating diabetes model...\n")
diabetes_request = utils.prepare_diabetes_request()
print(f"Diabetes request:\n {diabetes_request}")

# Pass the token as the third argument so the request is authenticated
response = utils.send_inference_request(diabetes_url, diabetes_request, token)
print("\nResponse: \n")
response.json()
```

Running the cell results in:

--> 978 raise RequestsJSONDecodeError(e.msg, e.doc, e.pos)
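A RequestsJSONDecodeError at this point generally means the response body was not JSON. The report does not show the body or status code, so the following is only a sketch of how the notebook could surface them before parsing. `parse_inference_response` is a hypothetical helper, and the stand-in objects mimic the `status_code` and `text` attributes of a requests response so the example runs without a model server.

```python
import json
from types import SimpleNamespace


def parse_inference_response(response):
    """Return the decoded JSON body, or raise an error that shows what came back."""
    if response.status_code != 200:
        raise RuntimeError(
            f"Inference request failed with HTTP {response.status_code}: "
            f"{response.text[:200]!r}"
        )
    try:
        return json.loads(response.text)
    except json.JSONDecodeError as err:
        raise RuntimeError(
            f"Response body is not JSON: {response.text[:200]!r}"
        ) from err


# Stand-in responses (assumed values, not captured from the exercise).
ok = SimpleNamespace(status_code=200, text='{"outputs": []}')
rejected = SimpleNamespace(status_code=401, text="Unauthorized")

print(parse_inference_response(ok))
try:
    parse_inference_response(rejected)
except RuntimeError as err:
    print(err)
```

Checking the status first turns an opaque decode failure into a message that points at the authentication step.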

Workaround:

Modify line 58 of utils.py, replacing the : with =.

This is a minor issue because the instructions say to disable token authentication on the external route, but that is a questionable content requirement: should we be disabling authentication for external consumption of the model? Fixing this bug is a prerequisite for supporting authentication in the exercise. As additional improvements, I'd also like to see the token stored in a secret and TLS enabled.