Scalable storage calculation consumption and quota enforcement

Type: Epic

Priority: Blocker

Resolution: Done

Versions: quay-v3.7.0, quay-v3.7.1

Progress: 0% To Do, 0% In Progress, 100% Done

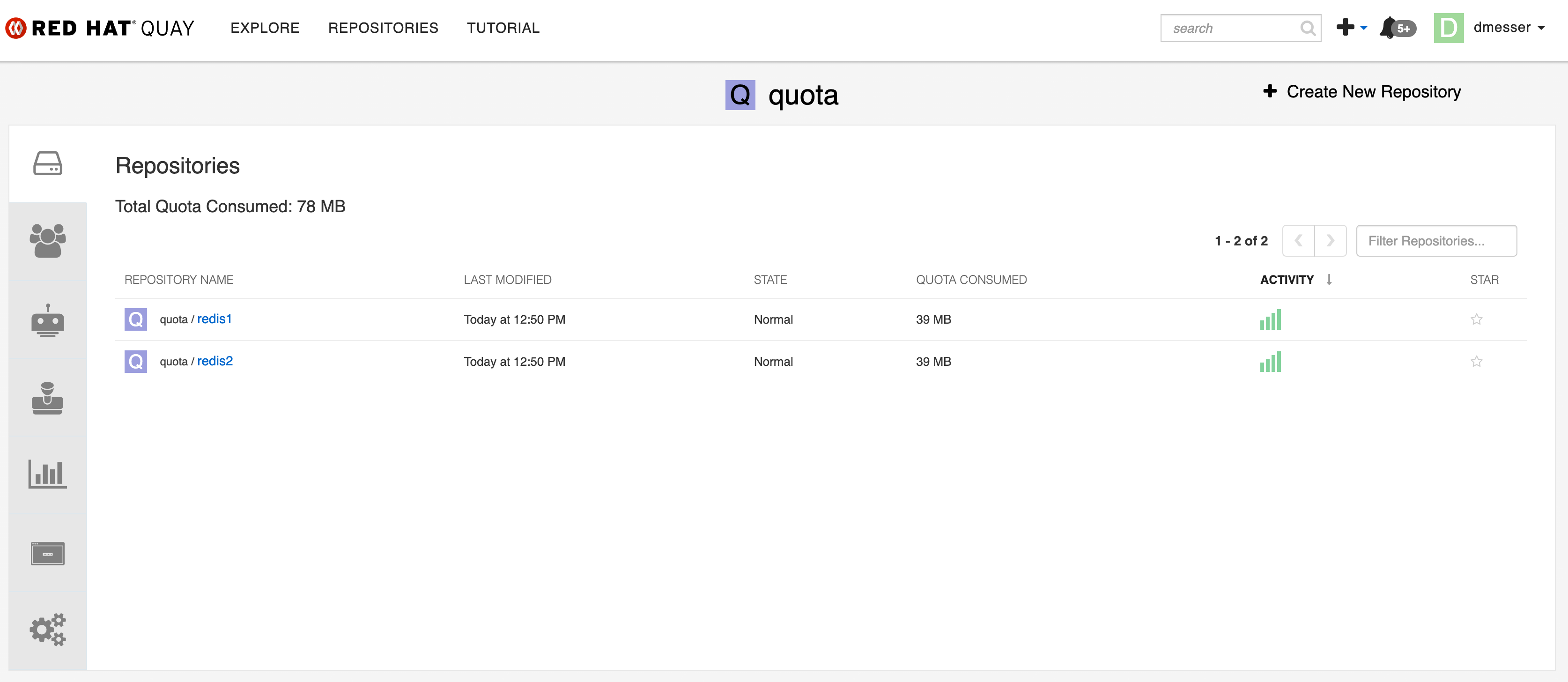

Current situation: When the same image manifest is pushed twice into an organization, but into different repositories, the organization reports double the storage consumption. In addition, calculating quota consumption is slow for organizations with many layer blobs.

How to reproduce double counting:

```
➜ ~ docker tag redis:latest quay.dmesser.io/quota/redis1:latest
➜ ~ docker tag redis:latest quay.dmesser.io/quota/redis2:latest
➜ ~ docker push quay.dmesser.io/quota/redis1:latest
The push refers to repository [quay.dmesser.io/quota/redis1]
5339227c591d: Pushed
236c7b868cd4: Pushed
8a4e5813c8ef: Pushed
df83e68211e9: Pushed
915605e59115: Pushed
4d31756873fb: Pushed
latest: digest: sha256:0ea9c1fc8b222ac3d6859c7f81392f166a749e8ddca8937885ba9f23e209a19a size: 1572
➜ ~ docker push quay.dmesser.io/quota/redis2:latest
The push refers to repository [quay.dmesser.io/quota/redis2]
5339227c591d: Mounted from quota/redis1
236c7b868cd4: Mounted from quota/redis1
8a4e5813c8ef: Mounted from quota/redis1
df83e68211e9: Mounted from quota/redis1
915605e59115: Mounted from quota/redis1
4d31756873fb: Mounted from quota/redis1
latest: digest: sha256:0ea9c1fc8b222ac3d6859c7f81392f166a749e8ddca8937885ba9f23e209a19a size: 1572
```

Observed result:

This leads to the following reported storage consumption:

Expected result:

The reported storage consumption should be 39MB.

Goal #1: Fairer attribution of actual storage consumption at the organization and repository level. The current implementation penalizes users for adding tags to an existing manifest and for using the same base image across all their images, whether in the same or in different repositories. We want to encourage re-tagging existing manifests and using common base images such as UBI.

Proposed implementation:

Storage consumption at the organization level should be reported as the sum of the sizes of all unique layers referenced by manifests in all repositories of the organization. Storage consumption at the repository level should be reported as the sum of the sizes of all unique layers referenced by manifests in that repository.
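The proposed rule can be illustrated with a minimal Python sketch. It assumes a hypothetical in-memory model (repository name "org/repo" mapped to manifests, each manifest a list of `Layer` records) and deduplicates layers by digest before summing. The layer digests and sizes are made up, chosen only so that they add up to the 39MB expected for the reproducer; this is not Quay's data model or code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    digest: str
    size: int  # bytes


# Hypothetical data model: repository ("org/repo") -> manifest digest -> layers.
Registry = dict[str, dict[str, list[Layer]]]


def unique_layer_size(manifests) -> int:
    """Sum the sizes of the distinct layers (by digest) referenced by the manifests."""
    sizes: dict[str, int] = {}
    for layers in manifests:
        for layer in layers:
            sizes[layer.digest] = layer.size  # same digest => same blob, counted once
    return sum(sizes.values())


def repo_consumption(registry: Registry, repo: str) -> int:
    return unique_layer_size(registry[repo].values())


def org_consumption(registry: Registry, org: str) -> int:
    return unique_layer_size(
        layers
        for repo, manifests in registry.items()
        if repo.split("/", 1)[0] == org
        for layers in manifests.values()
    )


MB = 1024 * 1024
# Hypothetical layer sizes, chosen to add up to the 39MB expected in the reproducer.
redis_layers = [Layer("sha256:layer-1", 30 * MB), Layer("sha256:layer-2", 9 * MB)]
registry: Registry = {
    "quota/redis1": {"sha256:manifest-redis": redis_layers},
    "quota/redis2": {"sha256:manifest-redis": redis_layers},
}
print(org_consumption(registry, "quota") // MB)  # 39
```

Because the same manifest lives in both repositories, its layers appear only once in the organization total, while each repository still reports the full 39MB.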

Acceptance criteria:

Based on the following example:

- an image called UBI9 is 75MB

- the image is stored in an organization org1 as org1/image:latest

- the image is also stored in an organization org2 as org2/image:latest and org2/image:1.0

- the image is also stored in an organization org3 as org3/image:latest and org3/base:latest

The storage consumption should be reported as follows:

- total registry storage consumption is 75MB

- total consumption of org1 is 75MB, the same value as for org2 and org3

- total consumption of the repo org2/image is 75MB

- total consumption of the repo org3/image is 75MB; org3/base, in the same organization, is also 75MB
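The acceptance criteria can be checked against a small model. In this sketch the 75MB UBI9 image is represented by two hypothetical layers (the digests and the 60MB/15MB split are made up); every tag listed in the example points to that same manifest. It also shows the consequence discussed in the rationale: the per-organization values add up to more than the registry-wide value.

```python
MB = 1024 * 1024

# Hypothetical layer breakdown of the 75MB UBI9 image (digests and split are made up).
UBI9 = {"sha256:ubi9-layer-1": 60 * MB, "sha256:ubi9-layer-2": 15 * MB}

# Repository -> tag -> manifest; each manifest maps layer digest -> size.
repos = {
    "org1/image": {"latest": UBI9},
    "org2/image": {"latest": UBI9, "1.0": UBI9},
    "org3/image": {"latest": UBI9},
    "org3/base": {"latest": UBI9},
}


def unique_size(manifests) -> int:
    layers: dict[str, int] = {}
    for manifest in manifests:
        layers.update(manifest)  # same digest => counted once
    return sum(layers.values())


def registry_consumption() -> int:
    return unique_size(m for tags in repos.values() for m in tags.values())


def org_consumption(org: str) -> int:
    return unique_size(
        m for name, tags in repos.items() if name.split("/", 1)[0] == org for m in tags.values()
    )


def repo_consumption(repo: str) -> int:
    return unique_size(repos[repo].values())


for org in ("org1", "org2", "org3"):
    print(org, org_consumption(org) // MB)  # 75 for each organization
print("registry", registry_consumption() // MB)  # 75
print("sum of orgs", sum(org_consumption(o) for o in ("org1", "org2", "org3")) // MB)  # 225
```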

Goal #2: Storage consumption reporting needs to be fast and scalable so that it can be relied on for quay.io.
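Goal #2 suggests avoiding a full rescan of every manifest each time consumption is queried. One possible approach, shown here as a Python sketch with hypothetical names (`ScopeUsage`, `UsageTracker`, the `REGISTRY` key), is to keep a reference count per layer digest for every scope (registry, organization, repository) and to adjust a running total only when a layer's count moves between 0 and 1. This illustrates the technique; it is not Quay's implementation, and a real deployment would presumably persist these counters in the database and update them together with pushes and garbage collection.

```python
from collections import Counter
from dataclasses import dataclass, field

REGISTRY = ""  # hypothetical key for the registry-wide scope


@dataclass
class ScopeUsage:
    """Running unique-layer total for one scope: the registry, an organization or a repository."""

    refcounts: Counter = field(default_factory=Counter)
    total: int = 0

    def add(self, layers: dict[str, int]) -> None:
        for digest, size in layers.items():
            self.refcounts[digest] += 1
            if self.refcounts[digest] == 1:  # first manifest in this scope using the layer
                self.total += size

    def remove(self, layers: dict[str, int]) -> None:
        for digest, size in layers.items():
            self.refcounts[digest] -= 1
            if self.refcounts[digest] == 0:  # last manifest in this scope using the layer
                del self.refcounts[digest]
                self.total -= size


class UsageTracker:
    """Hypothetical tracker updated on push and on garbage collection; reads are O(1)."""

    def __init__(self) -> None:
        self.scopes: dict[str, ScopeUsage] = {}
        # repository -> manifest digest -> {layer digest: size}
        self.manifests: dict[str, dict[str, dict[str, int]]] = {}

    def _scopes_for(self, repo: str) -> list[ScopeUsage]:
        org = repo.split("/", 1)[0]
        return [self.scopes.setdefault(key, ScopeUsage()) for key in (REGISTRY, org, repo)]

    def add_manifest(self, repo: str, manifest_digest: str, layers: dict[str, int]) -> None:
        stored = self.manifests.setdefault(repo, {})
        if manifest_digest in stored:
            return  # an extra tag on a manifest already in this repository adds no storage
        stored[manifest_digest] = layers
        for scope in self._scopes_for(repo):
            scope.add(layers)

    def remove_manifest(self, repo: str, manifest_digest: str) -> None:
        # Called when garbage collection permanently deletes the manifest.
        layers = self.manifests[repo].pop(manifest_digest)
        for scope in self._scopes_for(repo):
            scope.remove(layers)

    def consumption(self, key: str) -> int:
        usage = self.scopes.get(key)
        return usage.total if usage else 0


tracker = UsageTracker()
redis = {"sha256:layer-1": 30, "sha256:layer-2": 9}  # hypothetical sizes in MB
tracker.add_manifest("quota/redis1", "sha256:manifest-redis", redis)
tracker.add_manifest("quota/redis2", "sha256:manifest-redis", redis)
print(tracker.consumption("quota"))  # 39
tracker.remove_manifest("quota/redis1", "sha256:manifest-redis")
print(tracker.consumption("quota"), tracker.consumption("quota/redis1"))  # 39 0
```

Each push or garbage-collection event costs time proportional to the number of layers in the affected manifest (times three scopes), and reading a scope's consumption does not depend on how many tags or repositories it contains.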

Other criteria are:

- the consumption calculation needs to be scalable for large deployments:

  - repositories can have thousands of tags

  - organizations can have thousands of repositories

  - a Quay deployment can have thousands of organizations (quay.io)

- the Quay API should report storage consumption at the registry level and also separately at the organization level and separately at the repository level

- a graphical frontend or a customer should not be required to do client-side calculation when showing:

  - the total consumption of the entire registry

  - the total consumption of a given organization

  - the total consumption of a given repository


- the size of a manifest list needs to be calculated as the sum of the unique layers across its child manifests, and this value should be reported as the manifest list's size via the API and UI instead of the N/A shown today

- deleted tags lead to untagged manifests, which may still be kept in storage for Time Machine purposes

- these untagged manifests still count against the storage consumption of the repositories and organization they were part of until garbage collection deletes them for good

- at the organization level, the aggregate size of these untagged manifests is reported as "reclaimable storage space", both via the UI and API

- an organization administrator can reclaim this space by triggering a garbage collection run against the blobs of that particular organization, forcing the system to permanently delete the untagged manifests and their blobs to free up storage space, at the expense of losing the ability to restore those manifests via Time Machine
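Two of the derived values in the list above, the size of a manifest list and the reclaimable storage space, reuse the same unique-layer sum. The sketch below is illustrative only: all digests and sizes are hypothetical, and `reclaimable_size` assumes that "reclaimable" means the space garbage collection would actually free within the organization, so layers still referenced by tagged manifests are excluded. Summing only the untagged manifests' unique layers would instead report 15MB in this example, including layer `a` that a tagged manifest still needs.

```python
MB = 1024 * 1024


def unique_size(manifests) -> int:
    """Sum the sizes of the distinct layers referenced by the given manifests."""
    layers: dict[str, int] = {}
    for manifest in manifests:  # each manifest maps layer digest -> size
        layers.update(manifest)
    return sum(layers.values())


def manifest_list_size(children: list[dict[str, int]]) -> int:
    """Size of a manifest list: the unique layers across all of its child manifests."""
    return unique_size(children)


def reclaimable_size(tagged: list[dict[str, int]], untagged: list[dict[str, int]]) -> int:
    """Space GC would free in the organization: layers referenced only by untagged manifests."""
    return unique_size(tagged + untagged) - unique_size(tagged)


# Hypothetical multi-arch image whose two child manifests share one layer.
amd64 = {"sha256:base-amd64": 40 * MB, "sha256:config-files": 5 * MB}
arm64 = {"sha256:base-arm64": 38 * MB, "sha256:config-files": 5 * MB}
print(manifest_list_size([amd64, arm64]) // MB)  # 83, not 88

# Hypothetical organization with one untagged manifest left behind by a deleted tag.
tagged = [{"sha256:a": 10 * MB, "sha256:b": 20 * MB}]
untagged = [{"sha256:a": 10 * MB, "sha256:c": 5 * MB}]
print(reclaimable_size(tagged, untagged) // MB)  # 5
```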

Explanation / Rationale:

The bug above is caused by storage consumption tracking that counts the same layers more than once, thereby ignoring layer sharing. Storage consumption reporting is partially at odds with layer sharing within a registry. In Quay, layer sharing always happens at the global registry level, so attributing storage consumption correctly is tricky under Quay's tenancy model: organizations are encapsulated areas of collaboration, but layer content may actually be shared at the storage level. A natural approach would be to attribute the storage consumption of layers to the user who owns them. However, it is computationally difficult and conceptually challenging to report storage consumption accurately with respect to the actual ownership of the layers that end up consuming storage. A fair system would attribute that consumption to the tenant who first created a particular layer. However, as additional tenants bring in content that shares some of these layers, ownership becomes hard to track. The original tenant may decide to delete the manifests that originally brought in the now-shared layer, leaving the layer without a determined owner. One option would be to transfer ownership to the next tenant in history that uploaded a manifest reusing the shared layer, and so on. This is very likely not scalable, and it makes storage consumption hard to compute and hard to convey to users. After all, tenants storing and retrieving manifests that partially share layers from their organizations still benefit from the registry service in the same way the original owner of the shared layer does, so there is no fair model for attributing cost here. Hence, because of the tenancy model, layer sharing is unsuitable to take into account when computing a registry-level aggregate of storage consumption.

As an alternative, organizations should always report their storage consumption as an aggregation of all unique layers stored in the organization. This will cause the summed consumption of all organizations to be higher than the actual on-disk storage consumption of the entire registry, which is acceptable given that tenants (organization owners) benefit equally from shared layers. As a result, duplicate manifests in an organization will no longer cause duplicate storage consumption to be reported, as happens in the reproducer above. In turn, organization owners can effectively lower the storage consumption counted against their quota by deleting content inside their organization, a fair consequence of no longer being able to use the deleted content. Deleting content stages the untagged manifests for garbage collection, but Time Machine may withhold them from permanent deletion. In such cases, their storage consumption should still be counted against the respective organization.

Storage consumption reporting for repositories should be implemented in the same way: each repository reports an aggregation of all unique layers stored inside it. As a result, the sum of all repositories' reported consumption in an organization will be higher than the actual and reported storage consumption of that organization, which is acceptable since it exposes organization owners to the benefit of layer sharing. At the same time, it attributes storage consumption at the repository level to the owners of each repository, as they benefit equally from the stored layers. This will be important once a repository-level quota cap is implemented, which is likely, since organizations are in practice shared by several tenants, each responsible for a distinct set of repositories inside a single organization.

Likewise, the sum of all manifest sizes in a repository will be higher than the actual repository-level consumption, which is acceptable because it exposes the repository owner to the benefits of layer sharing. Repository owners who store software that uses a common base image such as UBI are not penalized for reusing that base image across repeated builds. As owners delete content in their repositories, they are fairly attributed the storage consumption of the remaining content, but the organization's overall storage consumption might not drop by the same amount if other repositories still share layers with the deleted content.
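The effect of deletion on repository and organization consumption can be illustrated with a short sketch. Two hypothetical repositories share a made-up 70MB base layer; the digests and sizes are invented for illustration. Deleting all content of one repository (and letting garbage collection remove it) drops that repository to zero, while the organization only loses the layer that was unique to it.

```python
MB = 1024 * 1024
BASE = {"sha256:ubi-base": 70 * MB}  # hypothetical shared base-image layer


def unique_size(manifests) -> int:
    layers: dict[str, int] = {}
    for manifest in manifests:  # manifest: layer digest -> size
        layers.update(manifest)
    return sum(layers.values())


def repo_usage(repos: dict[str, list[dict[str, int]]], repo: str) -> int:
    return unique_size(repos[repo])


def org_usage(repos: dict[str, list[dict[str, int]]]) -> int:
    return unique_size(m for manifests in repos.values() for m in manifests)


before = {
    "org/app-a": [{**BASE, "sha256:app-a": 5 * MB}],
    "org/app-b": [{**BASE, "sha256:app-b": 3 * MB}],
}
# All content of org/app-a deleted and permanently removed by garbage collection.
after = {**before, "org/app-a": []}

print(repo_usage(before, "org/app-a") // MB, repo_usage(before, "org/app-b") // MB)  # 75 73
print(org_usage(before) // MB)  # 78: less than 75 + 73 because the base layer is shared
print(repo_usage(after, "org/app-a") // MB, org_usage(after) // MB)  # 0 73
```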

As a consequence, the storage consumption values displayed for organizations and repositories in the UI should include hints that they are computed with layer sharing taken into account.

In addition, at the organization level, they should show the storage consumed by untagged manifests that the Time Machine feature retains pending garbage collection, and offer the ability to delete those permanently as well.

- account is impacted by: PROJQUAY-5230 Quota doesn't compute multi-arch images (Closed)

- is depended on by: PROJQUAY-4874 Enable quota on quay.io and report on storage consumption (showback) (Closed)

- is documented by: PROJQUAY-5059 Quota enforcement (Closed)
