Outcome

Resolution: Done

Market Problem

Red Hat has always tended to customer needs at every layer of the stack, from the OS to the application layer, and beyond that to developer experience, workflows, and productivity.

Today is no different. OpenShift abstracts and facilitates many of the manual and mundane day 2 operational tasks, such as monitoring, logging, updates/upgrades, deletion, versioning, and packaging. However, it is still a one-cluster universe, possibly shared by one or more users, that must cover a myriad of use-cases. That model, no matter how good it might be, is not enough to cover the fast-growing market need for managing and orchestrating a multiverse of clusters.

The question now is: how does one apply the same abstraction principles for improving and facilitating user experience to more than one cluster at a time? That question is defined and answered in [1], mainly to cover homogeneity and consistency in addressing common multi-cluster problems such as cluster lifecycle, policy enforcement, observability, inter-cluster networking, and storage.

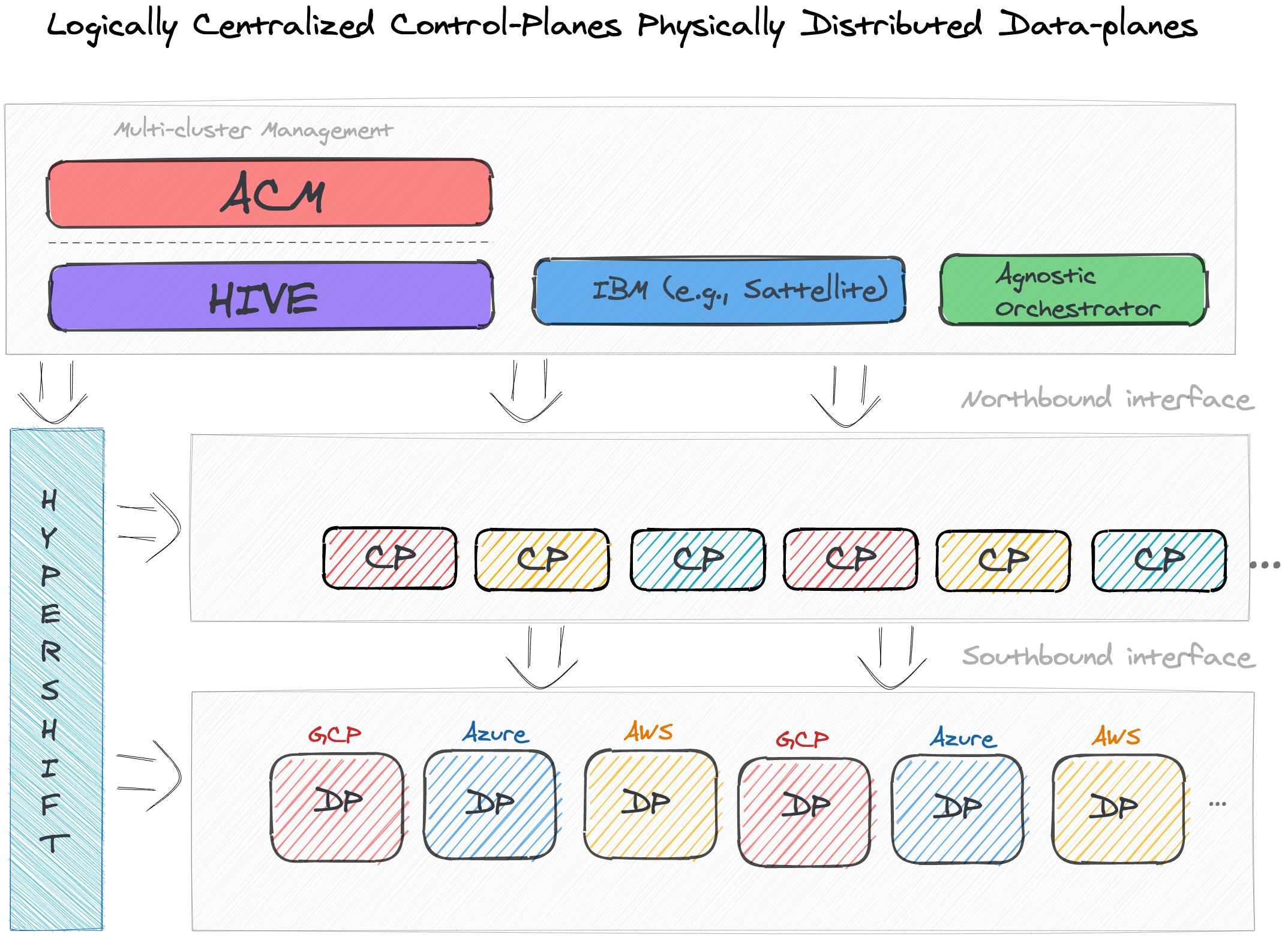

Now let's take one step back and define what a cluster is. A cluster is the composition of a Control-Plane (CP) and a Data-Plane (DP). The CP consists of an API endpoint, a storage endpoint, a workload scheduler, and an active actuator for ensuring state. The DP mainly covers the hot path: compute, storage, and networking. In today's OpenShift, the CP and the DP are coupled with respect to locality: the CP is represented by a dedicated set of nodes with a mandatory minimum number (to ensure quorum) and shares the same network as the DP. That might be desirable, and in fact it works very well, but as mentioned earlier, Red Hat always strives to go the extra mile with customer needs as a first-class concern. Thus, in addition to offering standalone (coupled CP and DP) OpenShift, Red Hat is considering another model that decouples the CP from the DP, which introduces many benefits.
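The cluster definition above can be sketched as a small data model. This is only an illustration of the vocabulary, not HyperShift's actual API: the class names, the `location` field, and labels such as `"management-cluster"` are hypothetical and chosen for this example.

```python
from dataclasses import dataclass, field
from enum import Enum


class Topology(Enum):
    STANDALONE = "standalone"  # CP and DP coupled in the same locality
    DECOUPLED = "decoupled"    # CP hosted apart from the DP


@dataclass
class ControlPlane:
    # The four CP responsibilities named in the text.
    components: tuple = ("api-endpoint", "storage-endpoint", "scheduler", "actuator")
    # Hypothetical label for where the CP runs.
    location: str = "cluster-nodes"


@dataclass
class DataPlane:
    # The hot-path resources named in the text.
    resources: tuple = ("compute", "storage", "networking")
    # Hypothetical label for where the DP runs.
    location: str = "cluster-nodes"


@dataclass
class Cluster:
    name: str
    control_plane: ControlPlane = field(default_factory=ControlPlane)
    data_plane: DataPlane = field(default_factory=DataPlane)

    @property
    def topology(self) -> Topology:
        # Coupled when CP and DP share a locality, decoupled otherwise.
        same_place = self.control_plane.location == self.data_plane.location
        return Topology.STANDALONE if same_place else Topology.DECOUPLED


standalone = Cluster("prod-a")
decoupled = Cluster("prod-b", control_plane=ControlPlane(location="management-cluster"))
print(standalone.name, standalone.topology.value)
print(decoupled.name, decoupled.topology.value)
```

In this sketch the only difference between the two flavors is where the control plane lives; the data plane is unchanged.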

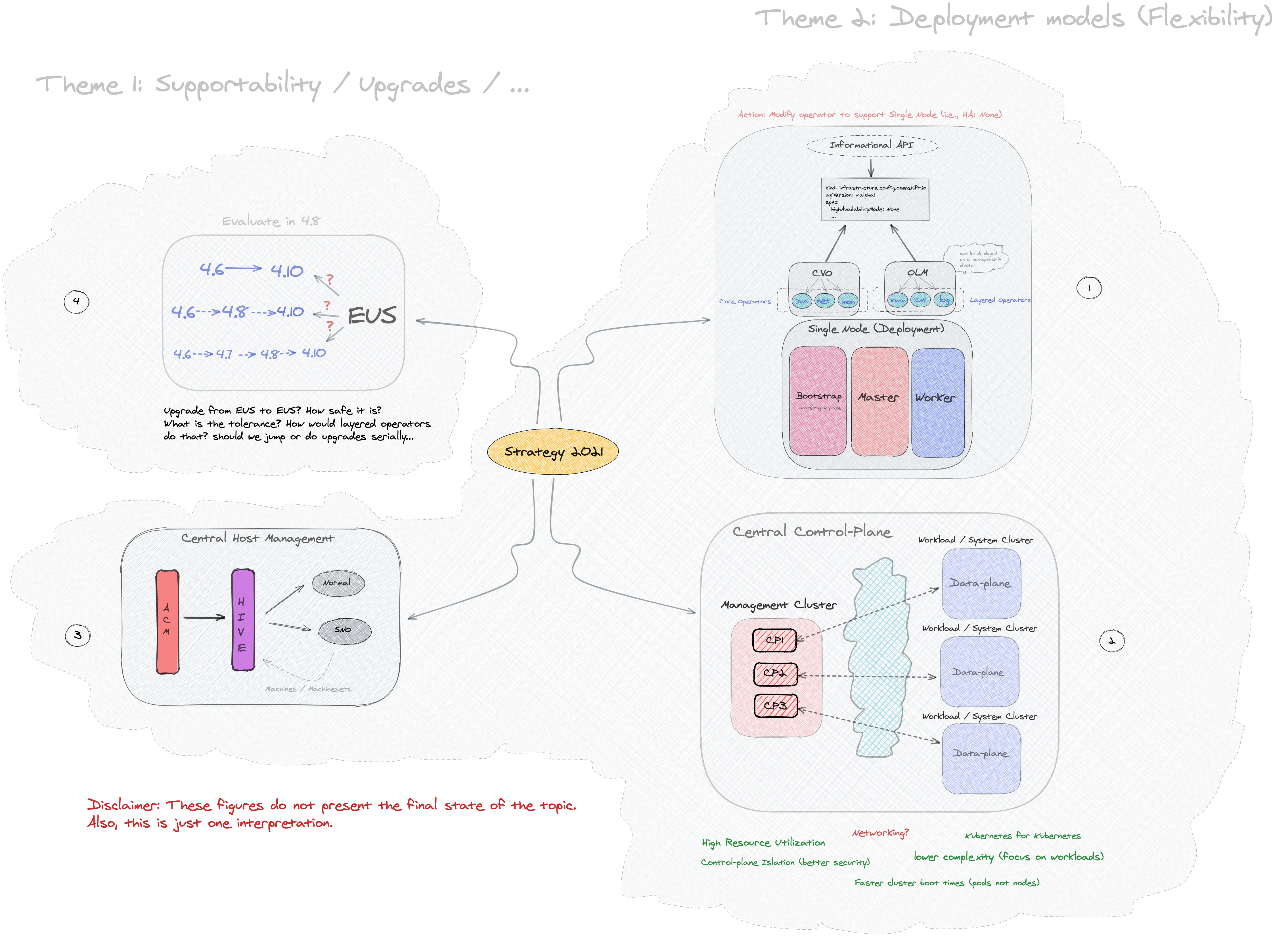

Among the benefits of such decoupling is the ability to centrally manage control-planes for more than one cluster. Central Control-Plane Management is one of the main Red Hat initiatives this year (the four target initiatives are shown in the figure below).

Why it Matters

Red Hat is building a story for addressing multi-cluster management and orchestration as an emerging customer need. With the decoupling of CP and DP, a cluster now comes in two flavors: 1) standalone and 2) decoupled. The standalone version has been the default; it is reliable, bullet-proof, and battle-tested. The decoupled version unlocks yet another feature for multi-cluster management: Central Control-Plane Management. Building for these two flavors expands Red Hat's portfolio to cater to more customer use-cases.

Features & Benefits

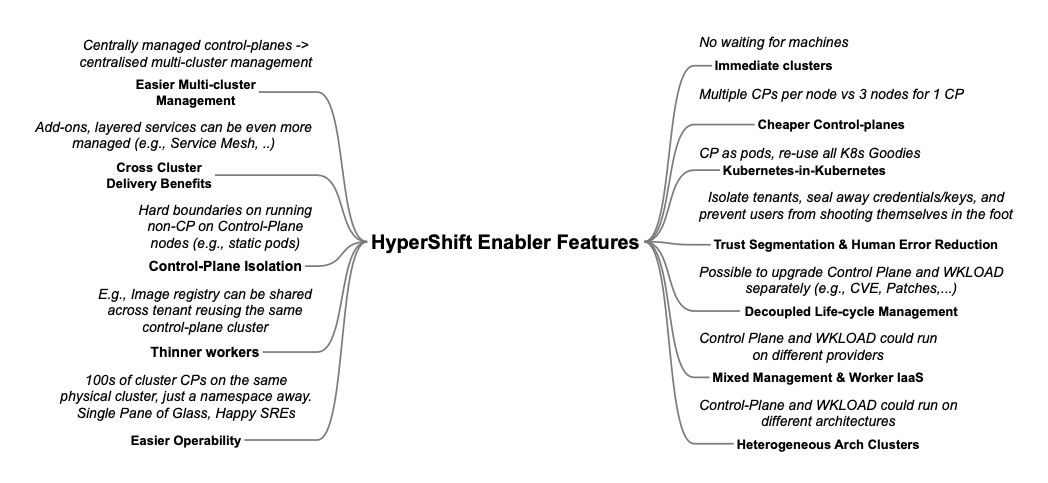

The decoupling of the CP from the DP enables more use-cases and introduces a lot of benefits, such as:

- Immediate Clusters: Since the control plane runs as pods launched on OpenShift, you are not waiting for machines to provision.

- Cheaper Control Planes: You can fit ~7-21 control planes into the same 3 machines you were using for 1 control plane, and run ~1000 control planes on 150 nodes. You therefore run more densely on existing hardware, which also makes HA clusters cheaper.

- Kubernetes Managing Kubernetes: Running the control-plane as Kubernetes workloads immediately unlocks, for free, Kubernetes features such as HPA/VPA, cheap HA in the form of replicas, and control-plane hibernation, now that the control-plane is represented as deployments, pods, etc.

- Trust Segmentation & Human Error Reduction: The management plane for control planes and cloud credentials is separate from the end-user cluster, and the management network is separate from the workload network. Furthermore, with the control-plane managed, it is harder for users to shoot themselves in the foot and destroy their own clusters.

- Infra Component Isolation: Registries, HAProxy, Cluster Monitoring, Storage Nodes, and other infra-type components can be pushed out to the tenant’s cloud provider account, thus isolating each tenant's usage of those components to just themselves.

- Increased Life Cycle Options: You can upgrade the consolidated control planes out of cycle from the segmented worker nodes, including for embargoed CVEs.

- Future Mixed Management & Workers IaaS: Although it is not in the solution today, we feel this architecture would get us faster to running the control plane on a different IaaS provider than the workers.

- Heterogeneous Arch Clusters: We can more easily run control planes on one CPU architecture (e.g., x86) and the workers on a different one (e.g., ARM).

- Easier Multi-Cluster Management: More centralized multi-cluster management, which results in fewer external factors influencing cluster status and consistency.

- Cross Cluster Delivery Benefits: As we look to have more and more layered offerings that span multiple clusters, such as service mesh, serverless, pipelines, and others, having a concept of externalized control planes may make delivering such solutions easier.

- Easy Operability: Think about SREs: instead of chasing down cluster control-planes, they would now have a central pane of glass from which they could debug and navigate their way even to the cluster data-plane. Centralized operations, lower Time To Resolution (TTR), and higher productivity become low-hanging fruit.
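The density figures quoted under "Cheaper Control Planes" can be turned into rough back-of-the-envelope arithmetic. The sketch below uses only the numbers from the text (3 machines per standalone control plane, ~7-21 hosted control planes on those 3 machines, ~1000 control planes on 150 nodes). It assumes all nodes are comparable in size and ignores worker nodes entirely; the function and constant names are illustrative.

```python
# Figures taken from the text above; everything else is an assumption.
MACHINES_PER_STANDALONE_CP = 3       # 3 machines for 1 standalone control plane
HOSTED_CPS_ON_3_MACHINES = (7, 21)   # ~7-21 hosted control planes on those 3 machines
LARGE_FLEET = (1000, 150)            # ~1000 control planes on 150 nodes


def standalone_machines(clusters: int) -> int:
    """Dedicated control-plane machines needed in the standalone model."""
    return clusters * MACHINES_PER_STANDALONE_CP


def hosted_density(control_planes: int, nodes: int) -> float:
    """Hosted control planes per node."""
    return control_planes / nodes


low, high = (hosted_density(n, 3) for n in HOSTED_CPS_ON_3_MACHINES)
fleet = hosted_density(*LARGE_FLEET)
fleet_cps, fleet_nodes = LARGE_FLEET
savings = standalone_machines(fleet_cps) / fleet_nodes

print(f"hosted density on 3 machines: {low:.2f} to {high:.2f} control planes per machine")
print(f"hosted density at fleet scale: {fleet:.2f} control planes per node")
print(f"standalone machines for {fleet_cps} clusters: {standalone_machines(fleet_cps)}")
print(f"node reduction factor at fleet scale: {savings:.0f}x")
```

Under these assumptions, 1000 standalone control planes would need 3000 dedicated machines, against the 150 nodes quoted for the hosted model: roughly a 20x reduction in control-plane hardware.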

Illustrative User Stories or Scenarios

Below is a sample of user requests that closely match the benefits provided by adopting the HyperShift architecture:

- As an infrastructure-admin, I would like to improve the utilization of metal to support multiple clusters.

- As a network-admin, I would like to have physical network separation between the cluster control-plane and data-plane, so that only application workloads exist on the data-plane.

- As a cluster-admin, I would like to improve the maintenance of the control plane versus workers.

- As a cluster-admin, I would like to optimize metal and OpenShift Virt utilization.

Expected Outcomes

- HyperShift, a tool for providing OpenShift clusters-as-a-service with logically centralized control-planes yet physically distributed data-planes on multiple hyperscalers.

- Integrations with north-bound multi-cluster orchestrators (e.g., ACM).

- HyperShift becomes the backend that powers our managed services (ROSA/ARO/OSD).

Current state for Providers (prioritized in descending order)

Cloud Providers:

- AWS (TP/Done) - Used for ROSA and for self-managed hosted control planes

- Bare metal (Preview) - via the Assisted Installer agent workflow

- OCP Virt (Dev-Preview) - only for self-managed

- Azure (Dev-Preview) - Will be used for managed and potentially self-managed if there is customer need.

- vSphere (not in scope for CY 2022)

- GCP (not in scope for CY 2022)

Current state for Cloud Services (prioritized in descending order)

Cloud Services:

- ROSA (Near-term)

- ARO (Long-term)

- OSD (Future)

References

- is related to: OPRUN-2170 Identify ways to reduce OLM resource usage at scale (Closed)