- Bug
- Resolution: Done
- Blocker
- 7.2.0.GA.CR1
- None

We observed a performance degradation in the clustering scenarios when comparing 7.2 with 7.1.

Some areas are worse than others.

The values reported are the peaks observed in the average response time calculation.

The scenarios cover very common use cases:

- HTTP Session Replication

- Stateful EJB Replication

- The combination of the two (I always used this myself when I was a developer, in a load-balanced but not clustered setup)

- replication on all nodes of the cluster

- replication on a subset of the cluster nodes

Here are the details:

| scenario | description | 7.1 (ms) | 7.2 (ms) | percentage | notes |

|---|---|---|---|---|---|

| eap-7x-stress-session-dist-sync-haproxy | http sessions replicated on all nodes, HaProxy load balancer | 180 | 220 | +22% | degradation starts with about 3000 clients; HaProxy is used in OpenShift |

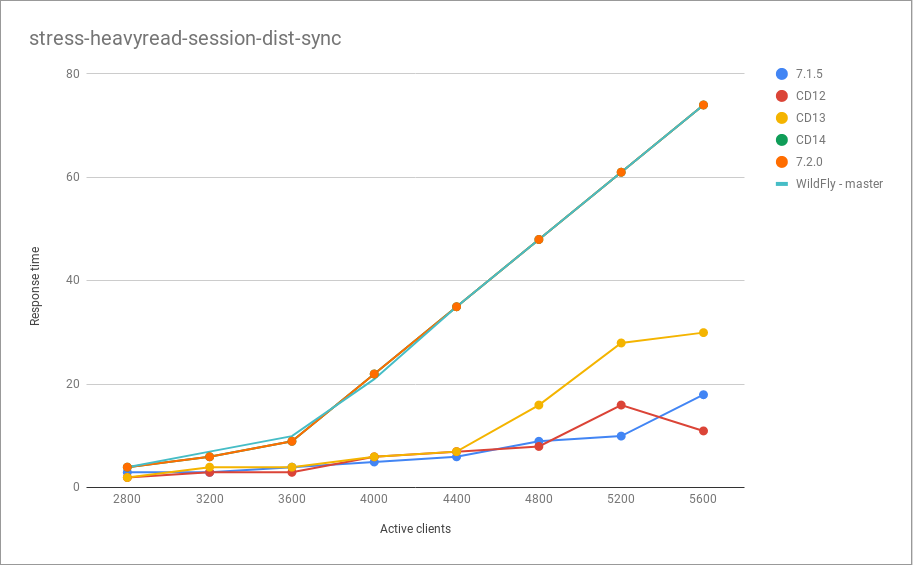

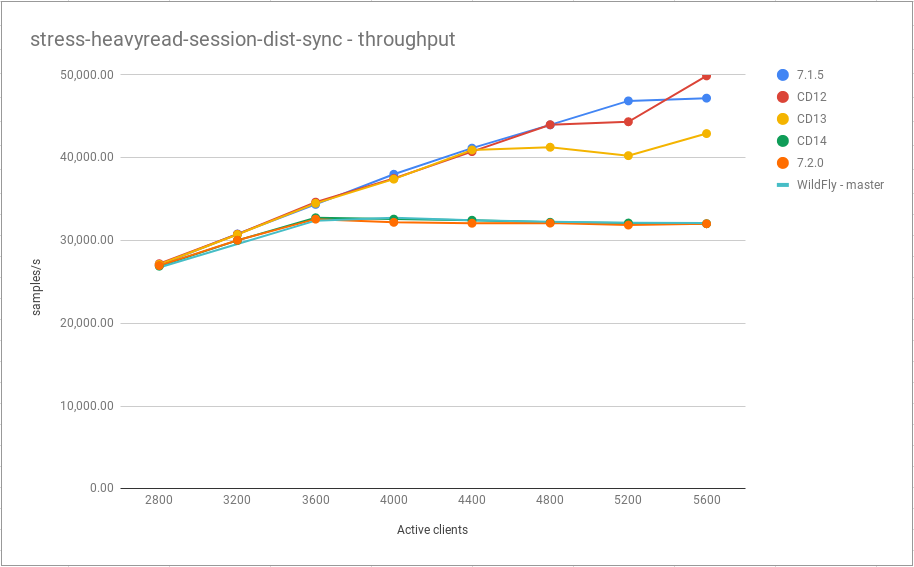

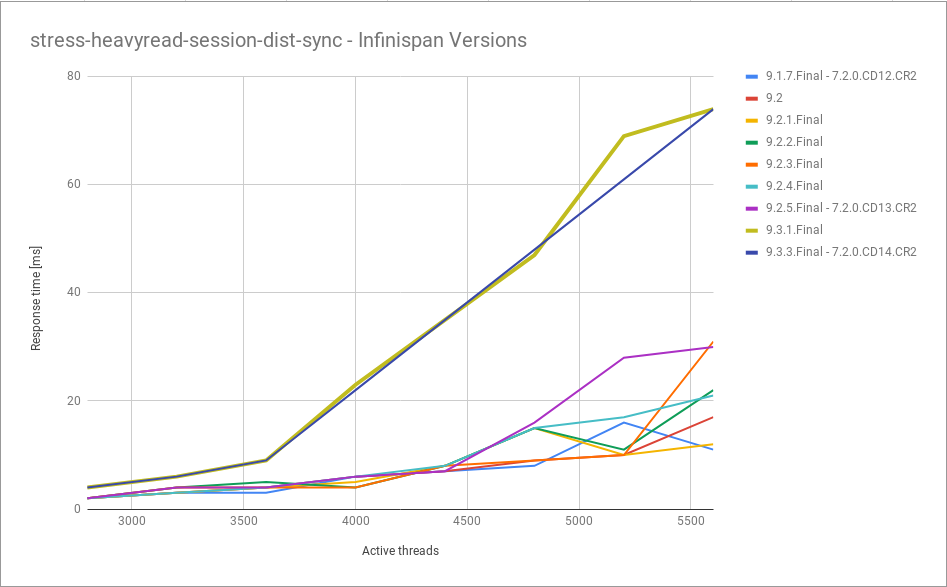

| eap-7x-stress-heavyread-session-dist-sync | http sessions replicated just on 2 nodes (of the total 4), prevalent read access | 20 | 90 | +350% | degradation starts with about 3500 clients (images below) |

| eap-7x-stress-ejbservlet-repl-sync | http sessions and Stateful EJBs replicated on all nodes | 425 | 550 | +29% | uniform degradation |

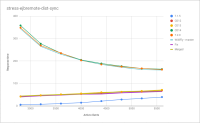

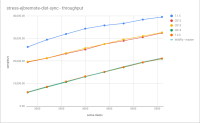

| eap-7x-stress-session-dist-sync | http sessions replicated just on 2 nodes (of the total 4) | 160 | 300 | +87% | degradation starts with about 3500 clients |

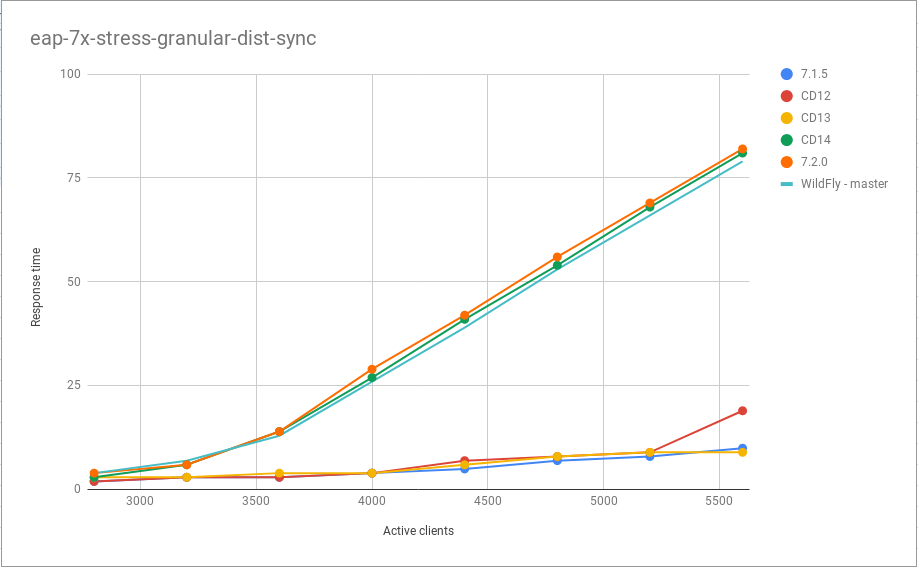

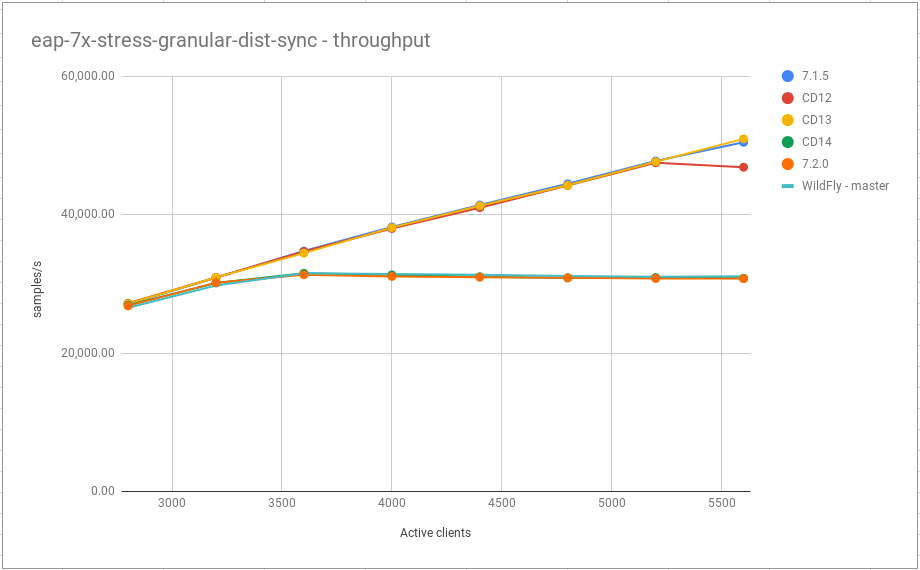

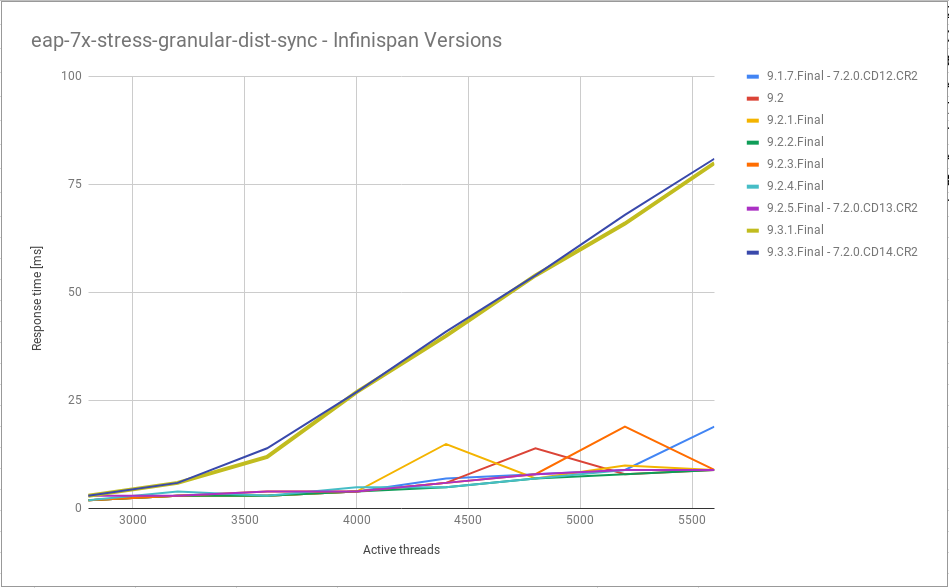

| eap-7x-stress-granular-dist-sync | http sessions attributes replicated individually just on 2 nodes (of the total 4) | 130 | 290 | +123% | degradation starts with about 3000 clients |

| eap-7x-stress-granular-repl-sync | http sessions attributes replicated individually on all nodes | 290 | 400 | +37% | degradation starts with about 3500 clients |

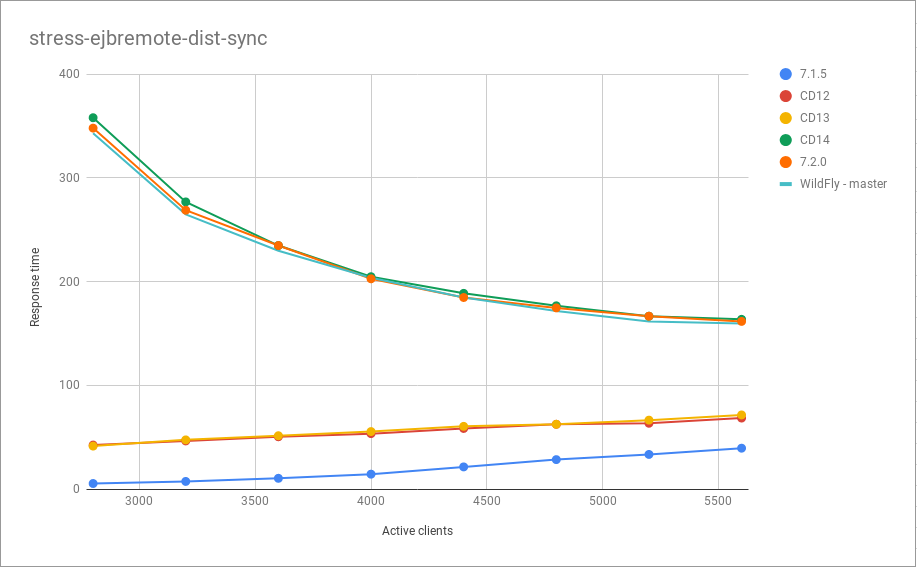

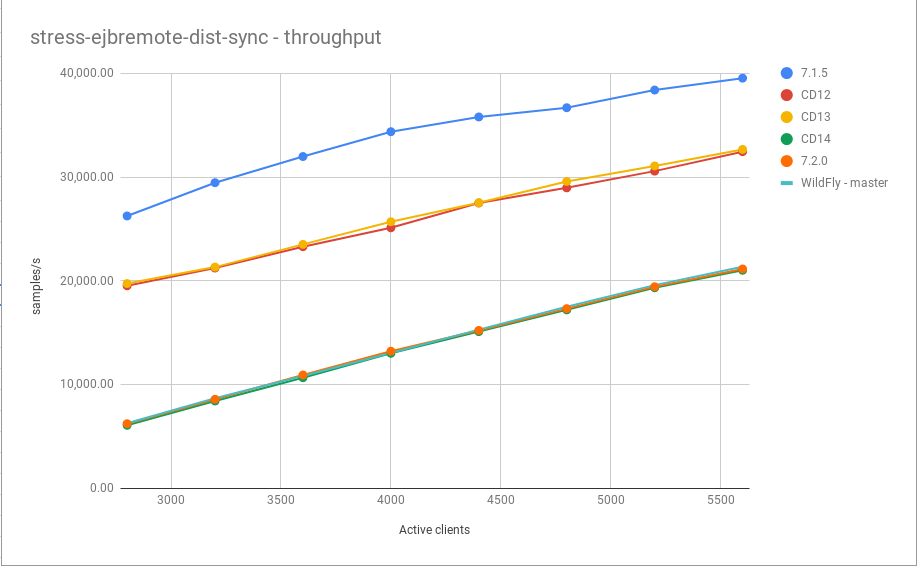

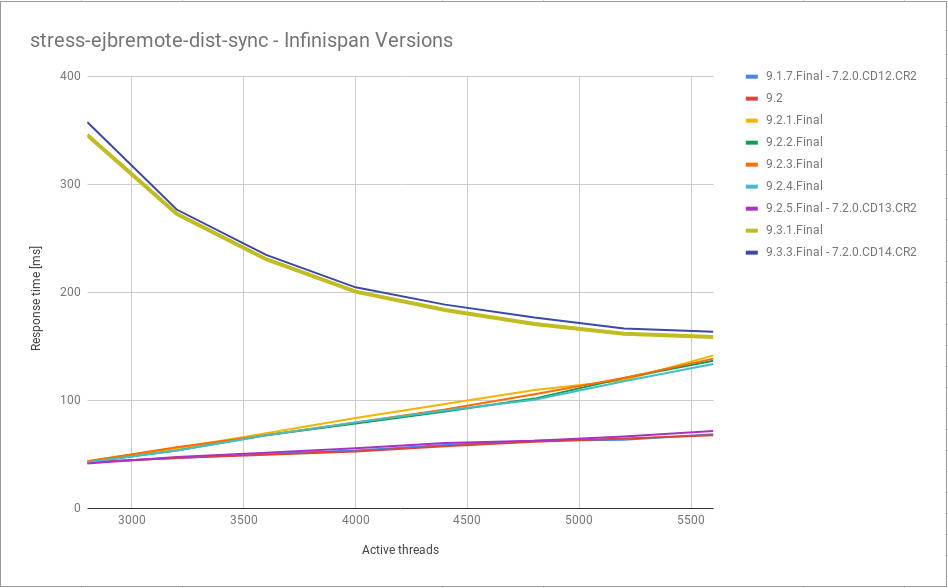

| eap-7x-stress-ejbremote-dist-sync | Sateful EJBs replicated just on 2 nodes (of the total 4) | 50 | 165 | +230% | degradation starts with about 2000 clients |

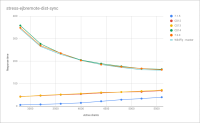

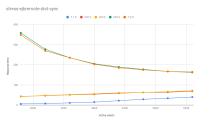

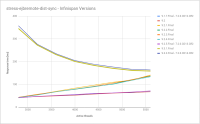

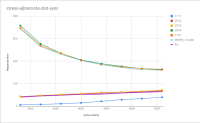

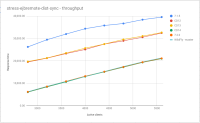
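The percentage column can be reproduced from the two response-time columns. The sketch below is only an illustration: the names `PEAKS_MS`, `percent_increase`, and `rank_by_degradation` are hypothetical, and it assumes the reported percentages are truncated to whole numbers, since the report does not state its rounding convention.

```python
# Peak average response times in ms, copied from the table: (7.1, 7.2).
PEAKS_MS = {
    "eap-7x-stress-session-dist-sync-haproxy": (180, 220),
    "eap-7x-stress-heavyread-session-dist-sync": (20, 90),
    "eap-7x-stress-ejbservlet-repl-sync": (425, 550),
    "eap-7x-stress-session-dist-sync": (160, 300),
    "eap-7x-stress-granular-dist-sync": (130, 290),
    "eap-7x-stress-granular-repl-sync": (290, 400),
    "eap-7x-stress-ejbremote-dist-sync": (50, 165),
}


def percent_increase(old_ms: int, new_ms: int) -> int:
    """Relative increase in percent, truncated to a whole number (assumed convention)."""
    return (new_ms - old_ms) * 100 // old_ms


def rank_by_degradation(peaks: dict) -> list:
    """Return (scenario, percent) pairs, most degraded first."""
    rows = [(name, percent_increase(old, new)) for name, (old, new) in peaks.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    for name, pct in rank_by_degradation(PEAKS_MS):
        print(f"{name}: +{pct}%")
```

Sorting by the computed percentage puts the heavy-read, remote EJB, and granular distributed scenarios at the top.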

The following are the response time and throughput graphs for the three most affected scenarios:

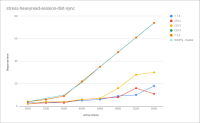

For the three most affected scenarios, here is a table with the Infinispan version used by each build (versions in bold are affected by the degradation):

| scenario | 7.2.0.GA.CR1 | 7.2.0.CD14.CR2 | 7.2.0.CD13.CR2 | 7.2.0.CD12.CR2 |
|---|---|---|---|---|
| eap-7x-stress-heavyread-session-dist-sync | **9.3.3.Final** | **9.3.3.Final** | 9.2.5.Final | 9.1.7.Final |
| eap-7x-stress-ejbremote-dist-sync | **9.3.3.Final** | **9.3.3.Final** | 9.2.5.Final | 9.1.7.Final |
| eap-7x-stress-granular-dist-sync | **9.3.3.Final** | **9.3.3.Final** | 9.2.5.Final | 9.1.7.Final |

Here is scenario eap-7x-stress-heavyread-session-dist-sync run with every Infinispan version since CD12; the degradation starts with version 9.3.1.Final.

Here is scenario eap-7x-stress-ejbremote-dist-sync run with every Infinispan version since CD12; the degradation starts with version 9.3.1.Final.

Here is scenario eap-7x-stress-granular-dist-sync run with every Infinispan version since CD12; the degradation starts with version 9.3.1.Final.

- is caused by WFLY-11395 Use synchronous command execution for local dispatcher (Closed)