- Story
- Resolution: Done
- Major
- Incidents & Support

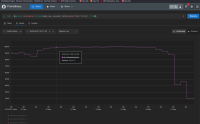

Note that the high disruption originates from `periodic-ci-openshift-release-master-nightly-4.20-e2e-agent-ha-dualstack-conformance`, possibly on every run.

It appears to be a total, simultaneous loss of egress from a single node during e2e testing. A monitortest that fails when we see mass in-cluster disruption from one node would be ideal; I have a partial PR in flight but haven't gotten it working well enough to post yet.
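The detection idea behind such a monitortest can be sketched as follows. This is a minimal, hypothetical illustration, not the origin monitortest framework or its API: the `DisruptionInterval` record, the backend names, and the threshold of 3 simultaneously disrupted backends are all assumptions. For each source node it sweeps over interval start/end events and records the largest number of *distinct* backends that were down at the same instant, then flags nodes at or above the threshold.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DisruptionInterval:
    """Hypothetical record: one observed outage of one backend, seen from one node."""
    source_node: str
    backend: str
    start: float  # seconds since test start (assumed unit)
    end: float


def max_concurrent_backends(intervals):
    """Return {node: max number of distinct backends disrupted at the same instant}."""
    by_node = defaultdict(list)
    for iv in intervals:
        if iv.end <= iv.start:
            continue  # ignore empty or malformed intervals
        by_node[iv.source_node].append(iv)

    result = {}
    for node, ivs in by_node.items():
        events = []
        for iv in ivs:
            # Kind 0 (end) sorts before kind 1 (start) at the same timestamp,
            # so intervals that merely touch are not counted as overlapping.
            events.append((iv.end, 0, iv.backend))
            events.append((iv.start, 1, iv.backend))
        events.sort()

        active = defaultdict(int)  # backend -> number of currently open intervals
        best = 0
        for _, kind, backend in events:
            if kind == 1:
                active[backend] += 1
            else:
                active[backend] -= 1
                if active[backend] == 0:
                    del active[backend]
            best = max(best, len(active))
        result[node] = best
    return result


def nodes_with_mass_disruption(intervals, min_backends=3):
    """Nodes that lost at least `min_backends` distinct backends at once (threshold is an assumption)."""
    counts = max_concurrent_backends(intervals)
    return sorted(node for node, c in counts.items() if c >= min_backends)


# Usage with made-up data: worker-0 loses three backends together, worker-1 has one blip.
intervals = [
    DisruptionInterval("worker-0", "kube-api", 100, 160),
    DisruptionInterval("worker-0", "openshift-api", 101, 158),
    DisruptionInterval("worker-0", "ingress", 102, 159),
    DisruptionInterval("worker-1", "kube-api", 300, 302),
]
print(nodes_with_mass_disruption(intervals))
```

Counting distinct backends rather than raw intervals is a deliberate choice in this sketch: a single flapping backend should not look like a node-wide egress loss, whereas several unrelated backends failing together from one node is the pattern described above.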

The goal for this card is to formalize a bug for the agent installer and potentially networking.
