- Bug
- Resolution: Done
- 4.20.0
- Incidents & Support

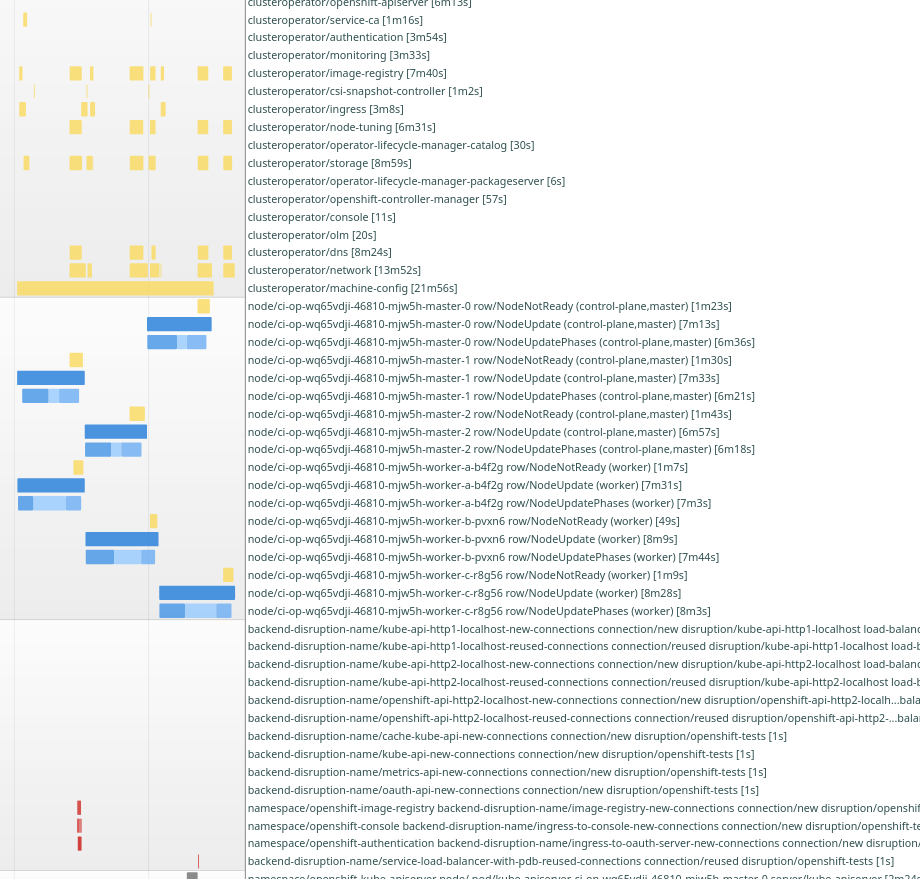

Starting with payload 4.20.0-0.nightly-2025-08-07-023221, we began seeing failures in aggregated-gcp-ovn-rt-upgrade-4.20-minor-release caused by ingress disruption. The failing tests are:

- ingress-to-console-new-connections disruption P70 should not be worse
- ingress-to-oauth-server-new-connections disruption P70 should not be worse
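Judging by their names, these tests check that the 70th-percentile (P70) disruption for each backend is not worse than a baseline. The sketch below assumes the check takes a nearest-rank P70 over per-run disruption seconds and fails when it exceeds a historical P70 plus an optional tolerance. The real test's baseline source, percentile method, and tolerance are not described in this ticket, and `percentile` and `p70_not_worse` are hypothetical names used only for illustration.

```python
def percentile(values, pct):
    """Nearest-rank percentile for an integer pct in [0, 100].

    Returns the smallest sample such that at least pct% of samples are <= it.
    Integer arithmetic avoids floating-point rounding in the rank calculation.
    """
    if not values:
        raise ValueError("need at least one sample")
    ordered = sorted(values)
    # ceil(pct * n / 100) computed with integers
    rank = (pct * len(ordered) + 99) // 100
    return ordered[max(rank, 1) - 1]


def p70_not_worse(current_runs, historical_p70, tolerance=0.0):
    """Hypothetical check: pass if the current P70 does not exceed baseline + tolerance."""
    return percentile(current_runs, 70) <= historical_p70 + tolerance
```

With ten runs of 1 through 10 seconds of disruption, the nearest-rank P70 is 7 s, so a baseline of 7 s passes while a baseline of 6 s fails unless a tolerance of at least 1 s is allowed.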

The increased disruption occurs during the master node updates.

Reviewing GCP disruption for these two backends shows a noticeable increase around 8/4.

The jobs list further down the dashboard shows per-job disruption increasing starting 8/7.

Further down that list, the first significant disruption for job periodic-ci-openshift-release-master-ci-4.20-upgrade-from-stable-4.19-e2e-gcp-runc-upgrade appears on 8/2, in payload 4.20.0-0.ci-2025-08-02-135417.

The earliest run showing increased disruption for periodic-ci-openshift-release-master-ci-4.20-upgrade-from-stable-4.19-e2e-gcp-ovn-rt-upgrade appears to be on 7/30. Note: the intervals for that job do not show in Prow; they come from Sippy. This job is an outlier in the timeline and may need more review, because it occurs before any of the payloads noted below that contain ingress changes.

cluster-ingress-operator#1246 first appears in 4.20.0-0.ci-2025-08-02-135417. The same PR first shows up in a nightly payload in 4.20.0-0.nightly-2025-08-04-154809, together with cluster-ingress-operator#1245.

4.20.0-0.nightly-2025-08-05-173854 appears to contain some of the first GCP runs in which the ingress disruption lines up with the ingress operator being updated.