Type: Task

Resolution: Done

Priority: Major


Sprint: RHOAM Sprint 57, RHOAM Sprint 58

WHAT

Replicate the performance testing done for 2.8 as closely as possible, so that the results can be compared with 2.15.

HOW

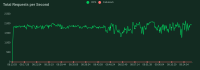

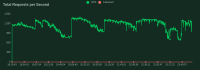

- Run a 6-hour sustained-rate test at 1157 rps with the `standard` profile, using the standard APIManager.

- Run a 6-hour sustained-rate test at 1157 rps with the `simple` profile, using the simple APIManager.

- Run a 1-hour peak-rate test at 1736 rps with the `standard` profile, using the standard APIManager.

- Run a 1-hour peak-rate test at 1736 rps with the `simple` profile, using the simple APIManager.

- Run a 1-hour peak-rate test at 4628 rps with the `simple` profile, using the simple APIManager.

In these tests, rps refers to `users_per_second`.
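The test matrix can be captured as data, which makes the expected request volume of each run explicit before it starts. This is a minimal sketch, assuming each Hyperfoil user session issues exactly one request, so that the request rate equals `users_per_second`; the `PerfTest` type and its `requests_per_session` field are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PerfTest:
    """One row of the test matrix (hypothetical helper type)."""
    kind: str                      # "sustained" or "peak"
    profile: str                   # APIManager profile: "standard" or "simple"
    users_per_second: int          # Hyperfoil arrival rate of new user sessions
    duration_hours: int
    requests_per_session: int = 1  # assumption: one request per user session

    @property
    def rps(self) -> int:
        # Arrival-rate model: requests/s = new sessions/s * requests per session.
        return self.users_per_second * self.requests_per_session

    @property
    def total_requests(self) -> int:
        # Requests issued over the whole run.
        return self.rps * self.duration_hours * 3600

    @property
    def daily_equivalent(self) -> int:
        # Requests per day if this rate were held for 24 hours.
        return self.rps * 86400


TESTS = [
    PerfTest("sustained", "standard", 1157, 6),
    PerfTest("sustained", "simple", 1157, 6),
    PerfTest("peak", "standard", 1736, 1),
    PerfTest("peak", "simple", 1736, 1),
    PerfTest("peak", "simple", 4628, 1),
]

if __name__ == "__main__":
    for t in TESTS:
        print(f"{t.kind:9} {t.profile:8} {t.rps:5} rps x {t.duration_hours}h "
              f"= {t.total_requests:,} requests (~{t.daily_equivalent:,}/day)")
```

Under the one-request-per-session assumption, each 6-hour sustained run at 1157 rps issues 24,991,200 requests, and 1157 rps held for a full day corresponds to roughly 100 million requests.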

Record the parameters used in Hyperfoil, and attach the HTML report to the JIRA issue.
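Saving the parameters of each run as a small JSON file next to the Hyperfoil HTML report keeps the runs comparable later. A minimal sketch; the `run_record` and `save_record` helpers and their field names are a hypothetical schema, not a Hyperfoil output format.

```python
import json
from datetime import datetime, timezone


def run_record(test_name, profile, users_per_second, duration, extra_params=None):
    """Collect the parameters of one Hyperfoil run into a JSON-serialisable dict.

    The field names are a hypothetical schema, not a Hyperfoil format.
    """
    return {
        "test": test_name,
        "apimanager_profile": profile,         # "standard" or "simple"
        "users_per_second": users_per_second,
        "duration": duration,                  # e.g. "6h" or "1h"
        # Any other Hyperfoil settings used for the run, copied as-is.
        "extra_params": dict(extra_params or {}),
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


def save_record(record, path):
    """Write the record to disk so it can be attached to JIRA with the HTML report."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(record, f, indent=2)
```

For example, `save_record(run_record("sustained-standard", "standard", 1157, "6h"), "sustained-standard.json")` writes one file per run.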

Switching to Locust can also be considered if there are strong enough reasons to do so.

VERIFY / OBSERVE

For each test ensure that:

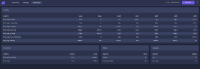

- Resources allocated are in line with the SKU/Core/Daily API Requests recommendations and with previous test runs.

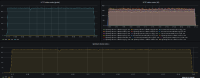

- Observe total CPU and memory usage across the three scaling pods: backend worker, backend listener, and apicast production.

- Compare these totals with the 2.8 totals.

- Ensure that 3scale remains healthy throughout the tests.

- No alerts firing, no pods crashing, and no errors reported by 3scale (3scale product analytics are more accurate than the testing tool).
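The comparison with 2.8 and the health criteria in the checklist can be expressed as a few small functions. This is a minimal sketch under stated assumptions: usage is supplied per component as `(cpu_cores, memory_mib)` tuples collected by whatever monitoring is in place, the dictionary keys are assumed to follow the 3scale deployment names, and all helper names are hypothetical.

```python
# Assumed keys for the three scaling components named in the checklist.
COMPONENTS = ("backend-worker", "backend-listener", "apicast-production")


def totals(usage):
    """Sum CPU (cores) and memory (MiB) across the three scaling components.

    `usage` maps component name -> (cpu_cores, memory_mib); units are an assumption.
    """
    cpu = sum(usage[c][0] for c in COMPONENTS)
    mem = sum(usage[c][1] for c in COMPONENTS)
    return cpu, mem


def percent_change(new, baseline):
    """Relative change of `new` against `baseline`, in percent."""
    return (new - baseline) / baseline * 100.0


def compare(current, baseline_28):
    """Compare 2.15 totals with 2.8 totals for the same test."""
    cur_cpu, cur_mem = totals(current)
    base_cpu, base_mem = totals(baseline_28)
    return {
        "cpu_change_pct": percent_change(cur_cpu, base_cpu),
        "memory_change_pct": percent_change(cur_mem, base_mem),
    }


def healthy(alerts_firing, pod_restarts, reported_errors):
    """3scale counts as healthy only if nothing alerted, crashed, or reported errors."""
    return alerts_firing == 0 and pod_restarts == 0 and reported_errors == 0
```

For instance, if the three components total 5.0 cores in a 2.15 run against 4.0 cores for the same 2.8 test, `compare` reports a 25% CPU increase.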

References

See the test results here, along with the extrapolated spreadsheet of resource usage.

Clones:

- THREESCALE-11024 Perf test 2.15 - "large" 50M SKU - multi profile (Closed)