-

Story

-

Resolution: Done

-

Critical

-

None

-

None

-

5

-

False

-

-

True

-

-

We're using Kafka Streams in the swatch billing service to group usages within an hour that then will be sent to either swatch-producer-aws or swatch-producer-azure to submit it.

However, we're seeing lots of inconvenients of using Kafka Streams for this grouping, to number some:

- Missing billing usages SWATCH-2908: we think that this is caused because it internally uses some in memory data structure that is gone when the service is restarted.

- kafka and database transaction issue: We have several places in our code where we need to write to the database and then send a kafka message in a single transaction. This isn't currently handled in quarkus so when one operation fails the other may still succeed, resulting in a mismatch between the database and kafka.

In order to improve swatch-billable-usage's resiliency and observability we need to replace the kstreams implementation that aggregates billable usages. Currently kstreams reads messages from the billable-usage topic and groups messages together that are received within an hour of each other and sends one billable-usage-hourly-aggregate message out. Instead we need to create a query that will fetch all usages for a given billingAccountId/product/metric and create the billable-usage-hourly-aggregate message.

Moreover, to address the second issue, we need to follow the transactional outbox pattern: https://microservices.io/patterns/data/transactional-outbox.html which requires Database changes.

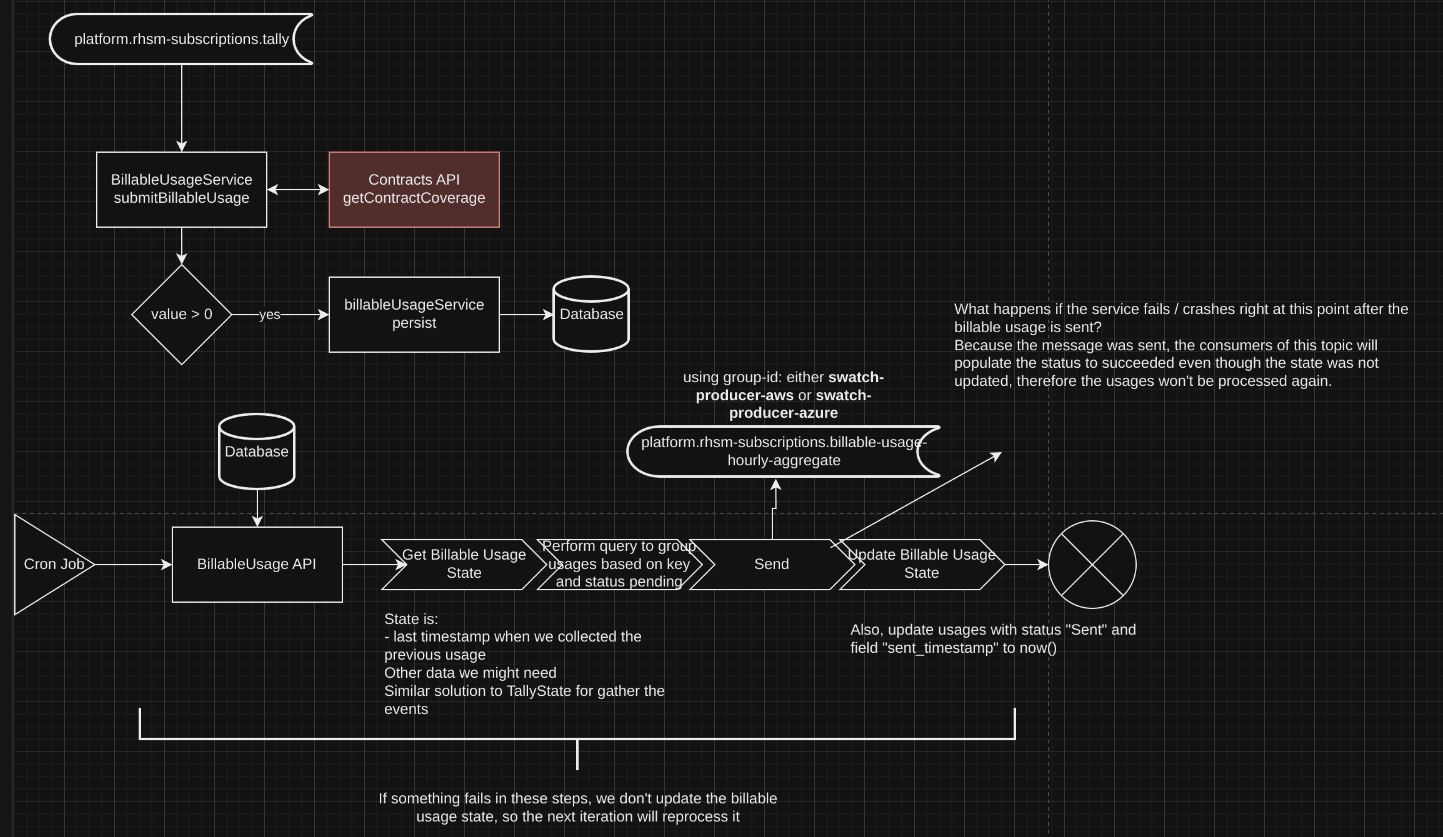

Diagram (above is the existing solution, below is the proposed solution): https://drive.google.com/file/d/1myDaC74bzA8DO6QX9IF_qUdkc6pL0qQv/view

Slack discussion: https://redhat-internal.slack.com/archives/C01F7QFNATC/p1727775760775579

Things to consider:

- How will we make sure each aggregate has a unique key and timestamp?(discussed batch_timestamp field)

- New columns or table for tracking aggregation status and timestamps

- What happens during a failure of swatch-billable-usage if hourly-aggregate message sends and database is not update? (duplicate messages should not be processed by producers)

- What happens if the hourly-aggregate message gets sent and the azure/aws producers fail during processing?(status is not updated but a usage was sent to the marketplace)

Database changes:

- New table: "billable_usage_state" with fields ("latest_usage_date")

- New field in "billable_usage_remittance" with field "sent_timestamp"

New logic for hourly cronjob that groups usages:

Diagram:

Workflow:

1. Cron Job is executed hourly to invoke a BillableUsage API

2. Billable Usage API will retrieve the "latest_usage_date" from "latest_usage_date" table

2.a. Gather all the records "billable_usage_remittance" with "remittance_pending_date" > "latest_usage_date" and status is pending

2.b. Group these records based on the existing "BillableUsageAggregateKey" class and send it to swatch-producer-azure or swatch-producer-aws, using the group-id of "aws" or "azure". This needs to be done in a single transaction.

2.b.1. If the above transaction fails, update the records to "pending" status

2.b.2. If the above transaction works, update the "latest_usage_date" from "latest_usage_date" table with "now()" to prevent the same usages being re-processed again AND update all the records with the status "Sent" and timestamp "sent_timestamp" with now()

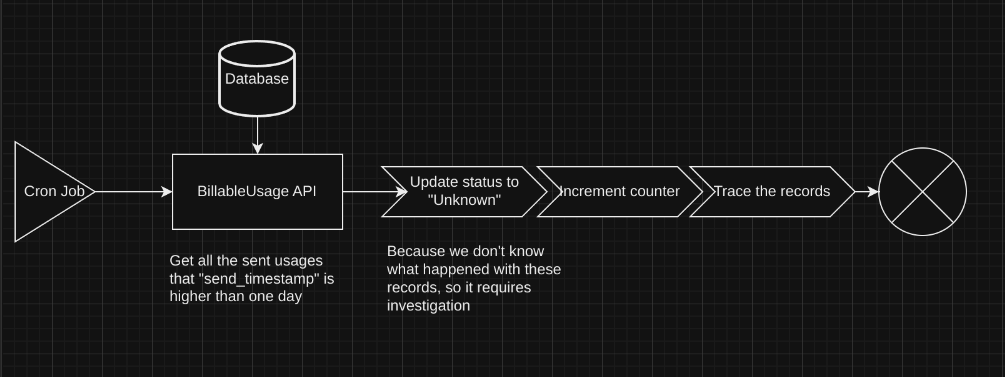

New logic for daily cronjob that monitors usages stuck at "Sent" status:

Diagram:

Workflow:

1. Cron Job is executed hourly to invoke a BillableUsage API

2. Gather all the records "billable_usage_remittance" with "sent_timestamp" > 1 day (configurable) and status is Sent

3. Add a log statement, so we can monitor this in Splunk

4. Increment a counter, so we can diagnostic the number of stuck usages

5. Update all the records with the status "Unknown"

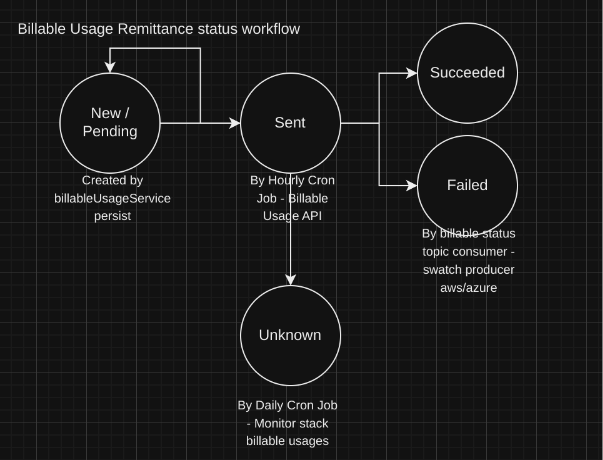

Usage state diagram

Done:

- Documentation of new plan

- Including database schema

- Meeting with team to review document/schema

- Incorporate feedback from team review

- relates to

-

SWATCH-2909 Propagate the Opentelemetry headers when consuming/producing messages with Kafka Streams

-

- Closed

-