Type: Bug
Resolution: Not a Bug
Priority: Major

Description of problem:

Follow up / related to: https://issues.redhat.com/browse/RHODS-8796

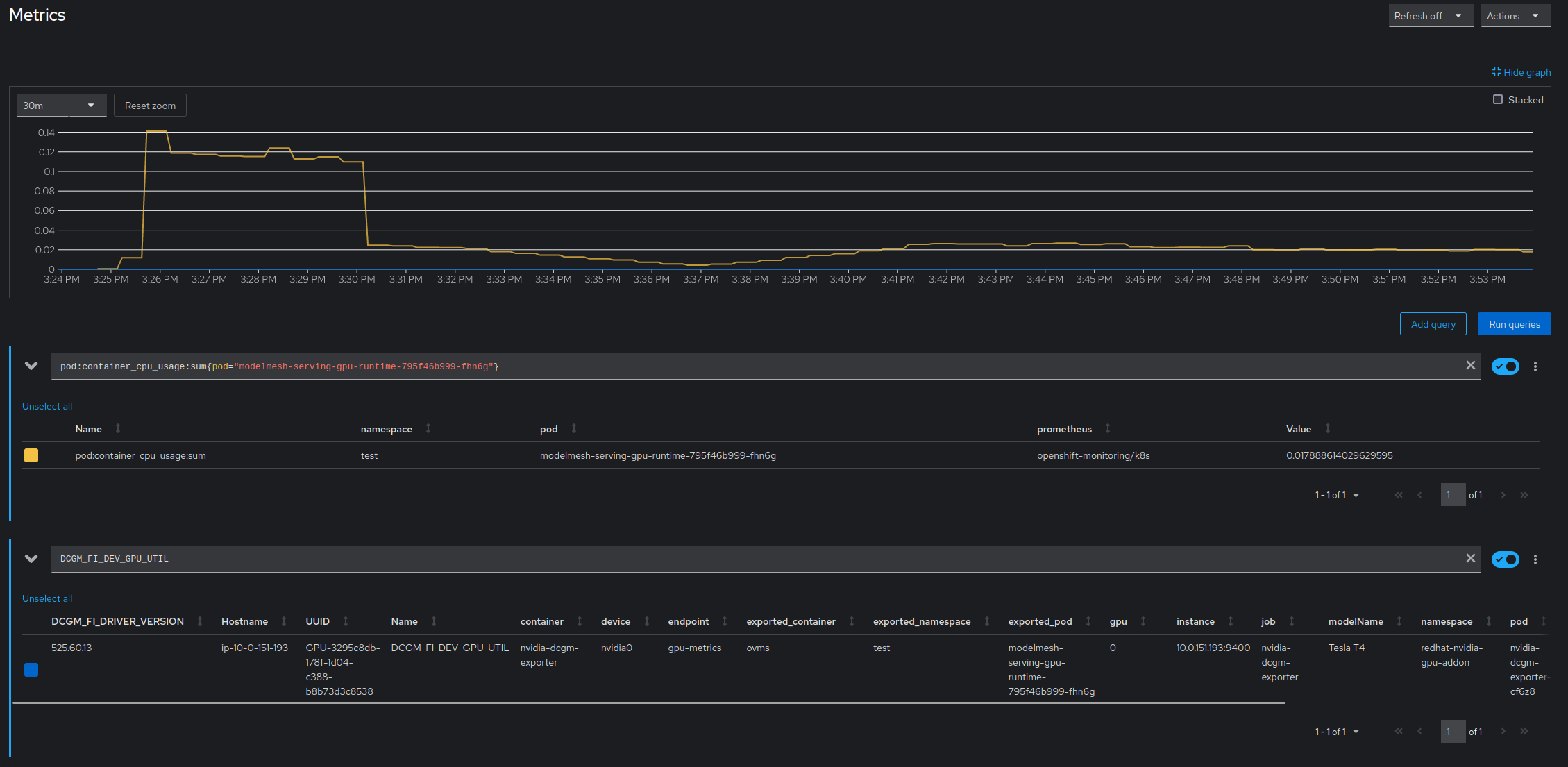

If a user deploys a model with Model Serving and requests/forces GPU usage, the metrics reported by the cluster appear to show that the GPU is not being used and instead inference is performed on the CPU:

These screenshots were taken after roughly 6k requests had been sent to the inference endpoint. I have since tested up to 20k requests and the results are unchanged.

spryor@redhat.com suspects that the MNIST model used for this test is small enough that its GPU utilization does not register in the OpenShift metrics. However, when we tried deploying a larger model (YOLO), the deployment failed. A better way to gauge GPU utilization, or a model that could be used to confirm these findings, would be extremely helpful.
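One possible sharper gauge than a dashboard graph is to ask Prometheus for the peak of DCGM_FI_DEV_GPU_UTIL over the test window, since short inference bursts on a small model can be smoothed away in averaged graphs. The sketch below is an illustration only: it assumes the cluster's Thanos Querier route (`thanos-querier` in `openshift-monitoring`), a bearer token such as the one printed by `oc whoami -t`, that GPUs are distinguished by a `gpu` label, and a 10-minute window. The exporter itself samples the GPU at a fixed interval, so work shorter than that interval may still read as 0.

```python
import json
import urllib.parse
import urllib.request

# Assumption: peak GPU utilization (percent) per GPU over the last 10 minutes.
PEAK_UTIL_QUERY = "max_over_time(DCGM_FI_DEV_GPU_UTIL[10m])"


def build_query_url(base_url: str, promql: str) -> str:
    """Build a Prometheus HTTP API instant-query URL (/api/v1/query)."""
    return base_url.rstrip("/") + "/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def fetch(url: str, token: str, timeout: float = 30.0) -> dict:
    """GET the query URL with a bearer token and decode the JSON response body."""
    req = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)


def peak_util_by_gpu(response: dict) -> dict:
    """Map each GPU (keyed by its 'gpu' label, an assumption) to its peak utilization."""
    if response.get("status") != "success":
        raise RuntimeError(f"query failed: {response}")
    peaks = {}
    for sample in response["data"]["result"]:
        gpu = sample["metric"].get("gpu", "unknown")
        # Instant-vector samples carry their value as [unix_timestamp, "string value"].
        peaks[gpu] = float(sample["value"][1])
    return peaks
```

For example, `peak_util_by_gpu(fetch(build_query_url(thanos_url, PEAK_UTIL_QUERY), token))`, run right after a load test, would show whether any GPU rose above 0% at any sampled point in the window, where `thanos_url` and `token` are placeholders for the cluster's values.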

Prerequisites (if any, like setup, operators/versions):

RHODS 1.27 RC

Steps to Reproduce

- Provision a GPU node

- Install the NVIDIA GPU Add-On

- Deploy a model in a model serving runtime that uses GPUs (i.e. GPU requested through the dashboard and, due to RHODS-8796, a force flag added manually to the ServingRuntime spec)

- Send requests to the inference endpoint (several thousand)

- Monitor GPU usage (e.g. the DCGM_FI_DEV_GPU_UTIL metric)
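The request step can be scripted so the load is reproducible between runs. This is a sketch that assumes the serving runtime accepts the KServe v2 REST inference protocol (`POST /v2/models/<name>/infer`); the endpoint URL, model name, input tensor name, and the 1x784 FP32 MNIST input shape are hypothetical placeholders that must be replaced with the deployed model's actual values.

```python
import json
import time
import urllib.request
from typing import Optional


def build_v2_infer_payload(input_name: str, shape: list, data: list, datatype: str = "FP32") -> dict:
    """Build a KServe v2 inference request body with a single input tensor."""
    expected = 1
    for dim in shape:
        expected *= dim
    # The flattened data must contain exactly prod(shape) elements.
    if len(data) != expected:
        raise ValueError(f"data has {len(data)} elements, shape {shape} needs {expected}")
    return {"inputs": [{"name": input_name, "shape": shape, "datatype": datatype, "data": data}]}


def send_requests(infer_url: str, payload: dict, count: int, token: Optional[str] = None) -> dict:
    """POST the same payload `count` times; return success/error counts and elapsed seconds."""
    body = json.dumps(payload).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if token:
        headers["Authorization"] = f"Bearer {token}"
    ok = errors = 0
    start = time.monotonic()
    for _ in range(count):
        req = urllib.request.Request(infer_url, data=body, headers=headers, method="POST")
        try:
            with urllib.request.urlopen(req, timeout=30) as resp:
                resp.read()
                ok += 1
        except OSError:
            # URLError, HTTPError and socket timeouts are all OSError subclasses.
            errors += 1
    return {"ok": ok, "errors": errors, "seconds": time.monotonic() - start}
```

For example, `send_requests("https://<route>/v2/models/mnist/infer", build_v2_infer_payload("input", [1, 784], [0.0] * 784), 6000)` would replay a load comparable to the ~6k requests mentioned above while the GPU metric is being watched.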

Actual results:

GPU usage is pinned at 0

Expected results:

GPU usage increases while inference requests are processed

Reproducibility (Always/Intermittent/Only Once):

Always