- Bug

- Resolution: Done

- Blocker

- RHODS_1.7.0_GA

- 1.7.0-4

- The CUDA version in RHODS has been updated to v11.4.2 to provide support for TensorFlow v2.7.0.

- MODH Sprint 1.7

Description of problem:

TensorFlow cannot use GPUs in RHODS.

After enabling GPUs via the GPU operator and the live build (http://quay.io/modh/rhods-operator-live-catalog:1.7.0-rhods-2315), I can run GPU workloads with PyTorch, both on the PyTorch image itself and on the CUDA and TF images after installing PyTorch manually.

TensorFlow, by contrast, cannot see any GPU attached to the server and runs all operations on the CPU (same behaviour on all three images).

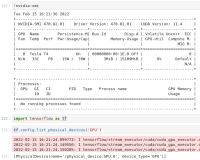

I confirmed that the GPU is attached to the server pod with `nvidia-smi`. For the TensorFlow checks I followed https://www.tensorflow.org/guide/gpu; for PyTorch, https://pytorch.org/tutorials/beginner/basics/quickstart_tutorial.html
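A scripted version of the `nvidia-smi` check might look like the minimal sketch below. It assumes `nvidia-smi` is on the `PATH` inside the notebook pod; the helper name `list_attached_gpus` is hypothetical, and the `--query-gpu`/`--format` flags are standard `nvidia-smi` options, not anything RHODS-specific.

```python
import shutil
import subprocess
from typing import List, Optional


def list_attached_gpus() -> Optional[List[str]]:
    """Return one 'name, memory' line per visible GPU.

    Returns None if nvidia-smi is not installed, and an empty list if it is
    installed but could not report any GPU (e.g. no device in the pod).
    """
    if shutil.which("nvidia-smi") is None:
        return None
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            check=True,
            timeout=30,
        )
    except (subprocess.CalledProcessError, subprocess.TimeoutExpired, OSError):
        # The binary exists but failed to talk to the driver or a device.
        return []
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    gpus = list_attached_gpus()
    if gpus is None:
        print("nvidia-smi not found in this container")
    elif not gpus:
        print("nvidia-smi found, but no GPU is visible")
    else:
        for gpu in gpus:
            print("GPU attached:", gpu)
```

This only proves the device is attached to the pod; whether a given framework can use it is a separate check.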

Prerequisites (if any, like setup, operators/versions):

RHODS live build http://quay.io/modh/rhods-operator-live-catalog:1.7.0-rhods-2315

GPU operator installed in the cluster

GPU node provisioned

Steps to Reproduce

- Install the live build

- Install the GPU operator

- Provision a GPU node

- Spawn a CUDA-enabled image (CUDA, TF, PyTorch)

- Confirm that the GPU is attached (`nvidia-smi` or similar)

- Confirm that the library can see the GPU:

  - PyTorch:

        import torch
        device = "cuda" if torch.cuda.is_available() else "cpu"
        print(f"Using {device} device")

  - TF:

        import tensorflow as tf
        tf.config.list_physical_devices('GPU')

- Confirm that the library can use the GPU: check `nvidia-smi` while the code is running, or enable device-placement logging, e.g. `tf.debugging.set_log_device_placement(True)`
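The two library checks in the steps above could be combined into one hypothetical helper, `framework_gpu_status`, so the same script runs unchanged on the CUDA, TF and PyTorch images. This is a sketch: it reports `None` for a framework that is not installed in the image instead of failing on the import.

```python
import importlib.util
from typing import Dict, Optional


def framework_gpu_status() -> Dict[str, Optional[bool]]:
    """Report whether PyTorch and TensorFlow can see a GPU.

    True/False means the framework is installed and does/does not see a GPU;
    None means the framework is not installed in the current image.
    """
    status: Dict[str, Optional[bool]] = {"torch": None, "tensorflow": None}

    if importlib.util.find_spec("torch") is not None:
        import torch
        status["torch"] = torch.cuda.is_available()

    if importlib.util.find_spec("tensorflow") is not None:
        import tensorflow as tf
        # An empty device list here is the failure described in this issue.
        status["tensorflow"] = len(tf.config.list_physical_devices("GPU")) > 0

    return status


if __name__ == "__main__":
    for name, ok in framework_gpu_status().items():
        label = "not installed" if ok is None else ("GPU visible" if ok else "CPU only")
        print(f"{name}: {label}")
```

On an affected image with both frameworks installed, the expected pattern would be `torch: GPU visible` alongside `tensorflow: CPU only`.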

Actual results:

TensorFlow cannot see or use the GPU. When it tries to access a GPU, it prints the following log output:

    2022-02-08 14:54:35.193223: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:936] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
    2022-02-08 14:54:35.244755: W tensorflow/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libcusolver.so.11'; dlerror: libcusolver.so.11: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: /usr/local/nvidia/lib:/usr/local/nvidia/lib64
    2022-02-08 14:54:35.250776: W tensorflow/core/common_runtime/gpu/gpu_device.cc:1850] Cannot dlopen some GPU libraries. Please make sure the missing libraries mentioned above are installed properly if you would like to use GPU. Follow the guide at https://www.tensorflow.org/install/gpu for how to download and setup the required libraries for your platform. Skipping registering GPU devices...
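The key line in this log is the `dso_loader` warning: TensorFlow could not `dlopen` `libcusolver.so.11`, so it skipped registering GPU devices. The minimal sketch below pulls the missing library names out of such a log and retries loading them from Python via `ctypes`. The helper names are hypothetical, and `ctypes.CDLL` only approximates TensorFlow's own loader; both go through the system dynamic loader, so the `LD_LIBRARY_PATH` in effect when the process started applies.

```python
import ctypes
import re
from typing import Dict, List

# Matches the dso_loader warning, e.g.
# "Could not load dynamic library 'libcusolver.so.11'; dlerror: ..."
_MISSING_LIB = re.compile(r"Could not load dynamic library '([^']+)'")


def missing_libraries(log_text: str) -> List[str]:
    """Return the sonames reported as unloadable, in order, without duplicates."""
    seen: List[str] = []
    for name in _MISSING_LIB.findall(log_text):
        if name not in seen:
            seen.append(name)
    return seen


def can_dlopen(sonames: List[str]) -> Dict[str, bool]:
    """Try to load each shared library; False means dlopen failed."""
    result: Dict[str, bool] = {}
    for soname in sonames:
        try:
            ctypes.CDLL(soname)
            result[soname] = True
        except OSError:
            result[soname] = False
    return result


if __name__ == "__main__":
    log = (
        "W tensorflow/stream_executor/platform/default/dso_loader.cc:64] "
        "Could not load dynamic library 'libcusolver.so.11'; dlerror: "
        "libcusolver.so.11: cannot open shared object file: No such file or directory"
    )
    libs = missing_libraries(log)
    print(libs)
    print(can_dlopen(libs))
```

Run inside the affected pod, `can_dlopen(['libcusolver.so.11'])` returning `False` would be consistent with the library simply being absent from the image, rather than a problem with GPU attachment.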

Expected results:

TensorFlow can see and use the GPU.

Reproducibility (Always/Intermittent/Only Once):

Always (on the one OSD cluster tested)

Build Details:

RHODS live build http://quay.io/modh/rhods-operator-live-catalog:1.7.0-rhods-2315 on OCP 4.9

Workaround:

No known workaround at this time

Additional info:

- Blocks: RHODS-2131 GPU support (Closed)