-

Bug

-

Resolution: Obsolete

-

Normal

-

None

-

rhelai-1.4.3

-

False

-

-

False

-

-

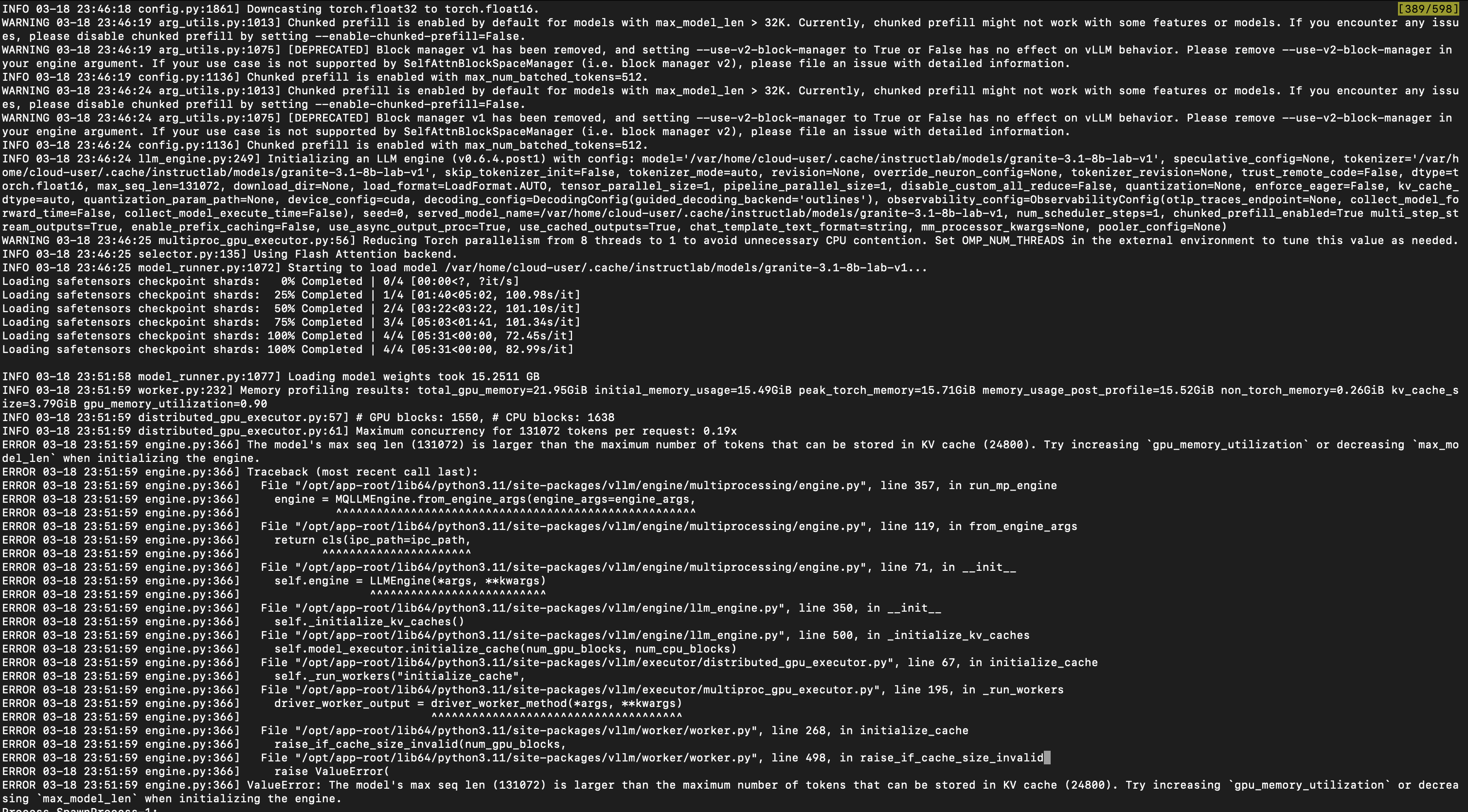

To Reproduce Steps to reproduce the behavior:

Using 1xL4 GPU setup, changed all GPU values in the config file to 1.

IBM Cloud Instance

Expected behavior

- Error while running ilab model serve

Screenshots

Device Info (please complete the following information):

- Hardware Specs: Apple M3 Pro 36GB Memory

- OS Version: Mac 0S 15.3.1

- InstructLab Version:

ilab, version 0.23.3

- registry.stage.redhat.io/rhelai1/bootc-nvidia-rhel9:1.4.3-1741712137

-

ilab system info- ggml_cuda_init: GGML_CUDA_FORCE_MMQ: no

ggml_cuda_init: GGML_CUDA_FORCE_CUBLAS: no

ggml_cuda_init: found 1 CUDA devices:

Device 0: NVIDIA L4, compute capability 8.9, VMM: yes

Platform:

sys.version: 3.11.7 (main, Jan 8 2025, 00:00:00) [GCC 11.4.1 20231218 (Red Hat 11.4.1-3)]

sys.platform: linux

os.name: posix

platform.release: 5.14.0-427.55.1.el9_4.x86_64

platform.machine: x86_64

platform.node: ecosystem-qe-us-east3-l4

platform.python_version: 3.11.7

os-release.ID: rhel

os-release.VERSION_ID: 9.4

os-release.PRETTY_NAME: Red Hat Enterprise Linux 9.4 (Plow)

memory.total: 78.54 GB

memory.available: 77.18 GB

memory.used: 0.58 GB

InstructLab:

instructlab.version: 0.23.3

instructlab-dolomite.version: 0.2.0

instructlab-eval.version: 0.5.1

instructlab-quantize.version: 0.1.0

instructlab-schema.version: 0.4.2

instructlab-sdg.version: 0.7.1

instructlab-training.version: 0.7.0

Torch:

torch.version: 2.5.1

torch.backends.cpu.capability: AVX512

torch.version.cuda: 12.4

torch.version.hip: None

torch.cuda.available: True

torch.backends.cuda.is_built: True

torch.backends.mps.is_built: False

torch.backends.mps.is_available: False

torch.cuda.bf16: True

torch.cuda.current.device: 0

torch.cuda.0.name: NVIDIA L4

torch.cuda.0.free: 21.8 GB

torch.cuda.0.total: 22.0 GB

torch.cuda.0.capability: 8.9 (see https://developer.nvidia.com/cuda-gpus#compute)

llama_cpp_python:

llama_cpp_python.version: 0.3.2

llama_cpp_python.supports_gpu_offload: True

Additional context

- config.yaml