- Bug
- Resolution: Done
- Undefined
- None
- 1.8.0
- None
- False
- False
- Known Issue
- Proposed
- RHDH AI Sprint 3284
- Moderate

Description of problem:

While trying out the MCP integration, I completed the configuration successfully and was able to configure Cursor to use it (Cursor recognized and used the catalog MCP tool). However, when I used Lightspeed with MCP, the UI showed a 'No response from model' message, and the llamastack container log shows a failure related to tool calling:

INFO 2025-11-12 20:20:00,063 uvicorn.access:473 uncategorized: ::1:34596 - "GET /v1/vector-dbs HTTP/1.1" 200

INFO 2025-11-12 20:20:00,067 uvicorn.access:473 uncategorized: ::1:34596 - "POST /v1/agents/abd8dd6d-b9ed-48be-83b2-6dc790018a9f/session/0dfd18b7-dd20-4278-826f-43d5ec18e538/turn HTTP/1.1" 200

INFO 2025-11-12 20:20:15,228 llama_stack.providers.inline.agents.meta_reference.agent_instance:892 agents: executing tool call: fetch-catalog-entities with args: {'kind': 'Template', 'type': 'service', 'name': '', 'owner': '', 'lifecycle': '', 'tags': '', 'verbose': False}

INFO 2025-11-12 20:20:15,254 mcp.client.streamable_http:146 uncategorized: Negotiated protocol version: 2025-06-18

ERROR 2025-11-12 20:20:16,277 llama_stack.core.server.server:194 server: Error in sse_generator

╭──────────────────────────────────────────────────── Traceback (most recent call last) ────────────────────────────────────────────────────╮

│ /app-root/.venv/lib64/python3.12/site-packages/llama_stack/core/server/server.py:187 in sse_generator │

│ │

│ 184 │ event_gen = None │

│ 185 │ try: │

│ 186 │ │ event_gen = await event_gen_coroutine │

│ ❱ 187 │ │ async for item in event_gen: │

.... lots of stack trace omitted ....

│ /app-root/.venv/lib64/python3.12/site-packages/openai/_base_client.py:1594 in request │

│ │

│ 1591 │ │ │ │ │ await err.response.aread() │

│ 1592 │ │ │ │ │

│ 1593 │ │ │ │ log.debug("Re-raising status error") │

│ ❱ 1594 │ │ │ │ raise self._make_status_error_from_response(err.response) from None │

│ 1595 │ │ │ │

│ 1596 │ │ │ break │

│ 1597 │

╰───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

BadRequestError: Error code: 400 - {'error': {'message': "Missing parameter 'tool_call_id': messages with role 'tool' must have a 'tool_call_id'.", 'type': 'invalid_request_error', 'param': 'messages.[5].tool_call_id', 'code': None}}
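For reference, the 400 above is the OpenAI Chat Completions rule that every message with role 'tool' must carry a 'tool_call_id' matching the 'id' of a tool call in a preceding assistant message. The sketch below is only an illustration of that rule, not the llamastack code path: the helper name `find_tool_messages_missing_id`, the conversation contents, and the id `call_123` are all hypothetical, chosen so that the offending message sits at index 5 as in the error's 'param' field.

```python
from typing import Any


def find_tool_messages_missing_id(messages: list[dict[str, Any]]) -> list[int]:
    """Return the indices of role='tool' messages without a non-empty tool_call_id."""
    return [
        i
        for i, m in enumerate(messages)
        if m.get("role") == "tool" and not m.get("tool_call_id")
    ]


# Hypothetical conversation: the contents are illustrative and are NOT taken
# from the failing request in this report.
messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "hello"},
    {"role": "assistant", "content": "Hi, how can I help?"},
    {"role": "user", "content": "can you tell me about all of the catalog templates of type 'service' ?"},
    {
        "role": "assistant",
        "content": None,
        "tool_calls": [
            {
                "id": "call_123",  # hypothetical id
                "type": "function",
                "function": {
                    "name": "fetch-catalog-entities",
                    "arguments": '{"kind": "Template", "type": "service"}',
                },
            }
        ],
    },
    # Index 5: a tool result with no tool_call_id, the shape the API rejects.
    {"role": "tool", "content": "[]"},
]

bad = find_tool_messages_missing_id(messages)
print("tool messages missing tool_call_id:", bad)

# A well-formed request echoes the id of the assistant's tool call.
messages[5]["tool_call_id"] = messages[4]["tool_calls"][0]["id"]
print("after fix:", find_tool_messages_missing_id(messages))
```

A check like this could be run against the outgoing request payload (if it can be captured) to confirm which message is missing the field.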

Prerequisites (if any, like setup, operators/versions):

Follow the MCP configuration for the catalog and techdocs MCP tools, and configure Lightspeed to use them, following the docs at https://docs.redhat.com/en/documentation/red_hat_developer_hub/1.8/html/interacting_with_model_context_protocol_tools_for_red_hat_developer_hub/index

Steps to Reproduce

Select the OpenAI gpt-4.1 model (the same failure occurs with several other GPT variants):

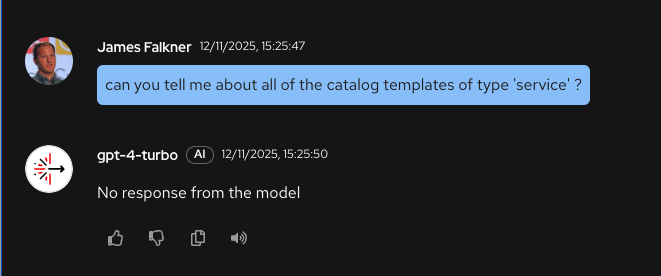

Issue a prompt such as: "can you tell me about all of the catalog templates of type 'service' ?"

Actual results:

The UI shows a 'No response from model' message, and the llamastack sidecar log contains the stack trace shown above.

Expected results:

No error.

Reproducibility (Always/Intermittent/Only Once):

Always

Build Details:

RHDH 1.8.0 GA