- Bug
- Resolution: Done
- Major
- 4.4.1.Final, 3.9.3.Final
- None
- None

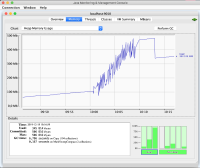

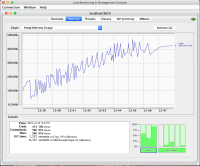

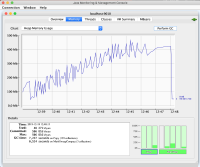

A `RESTEasy`-based `SSE` application (running on `Quarkus`) exhausts the entire heap space quite quickly. A quick Google search shows that this is a fairly common problem, especially when running on limited resources. Since we cannot afford to increase the JVM heap to 8GB for our SSE solution (as LinkedIn did: https://engineering.linkedin.com/blog/2016/10/instant-messaging-at-linkedin--scaling-to-hundreds-of-thousands-), I spent some time trying to identify the source of the leak.

Heap dump analysis pointed me to many byte arrays that were still retained after a major GC. Following their references led me to the `contextDataMap` field of `SseEventOutputImpl`, which is not cleaned up on close. I found a suitable `clearContextData` method in `org.jboss.resteasy.core.SynchronousDispatcher`, copied it into `SseEventOutputImpl`, and called it from the `close` method. After that change, a major GC is able to collect all of the leftover data.
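The fix boils down to releasing per-connection context data when the SSE output is closed. The sketch below illustrates that pattern in plain Java. It is a simplified, hypothetical stand-in: the class `SseOutputSketch` and its methods are invented for illustration and are not the actual `SseEventOutputImpl` or `SynchronousDispatcher` source. The assumption is that something (for example a broadcaster's subscriber list) may keep a reference to the output object after close, so anything its map still points to stays reachable unless the map is cleared.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Hypothetical, simplified stand-in for an SSE event output that captures
 * request-scoped context data when the connection is opened.
 * NOT the real RESTEasy SseEventOutputImpl; all names are illustrative.
 */
public class SseOutputSketch implements AutoCloseable {

    // Context data captured per connection. In a real server these values
    // could reference request/response objects holding large byte[] buffers.
    private final Map<Class<?>, Object> contextDataMap = new HashMap<>();
    private boolean closed;

    public synchronized <T> void putContextData(Class<T> type, T value) {
        if (closed) {
            throw new IllegalStateException("output already closed");
        }
        contextDataMap.put(type, value);
    }

    public synchronized int contextDataSize() {
        return contextDataMap.size();
    }

    public synchronized boolean isClosed() {
        return closed;
    }

    @Override
    public synchronized void close() {
        if (closed) {
            return; // closing twice is a no-op
        }
        closed = true;
        // Drop references to the captured context so that a lingering
        // reference to this object no longer keeps the context data
        // (and any buffers it points to) reachable for the GC.
        clearContextData();
    }

    // Hypothetical helper mirroring the idea of clearing context on close.
    private void clearContextData() {
        contextDataMap.clear();
    }

    public static void main(String[] args) {
        SseOutputSketch out = new SseOutputSketch();
        out.putContextData(byte[].class, new byte[1024 * 1024]);
        System.out.println("before close: " + out.contextDataSize());
        out.close();
        System.out.println("after close: " + out.contextDataSize());
    }
}
```

Running `main` prints `before close: 1` followed by `after close: 0`. Making `close` idempotent is a design choice in this sketch, since SSE connections can be closed both by the client disconnecting and by the server.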