-

Bug

-

Resolution: Obsolete

-

Major

-

quay-v3.6.0

-

False

-

False

-

Quay Enterprise

-

Description:

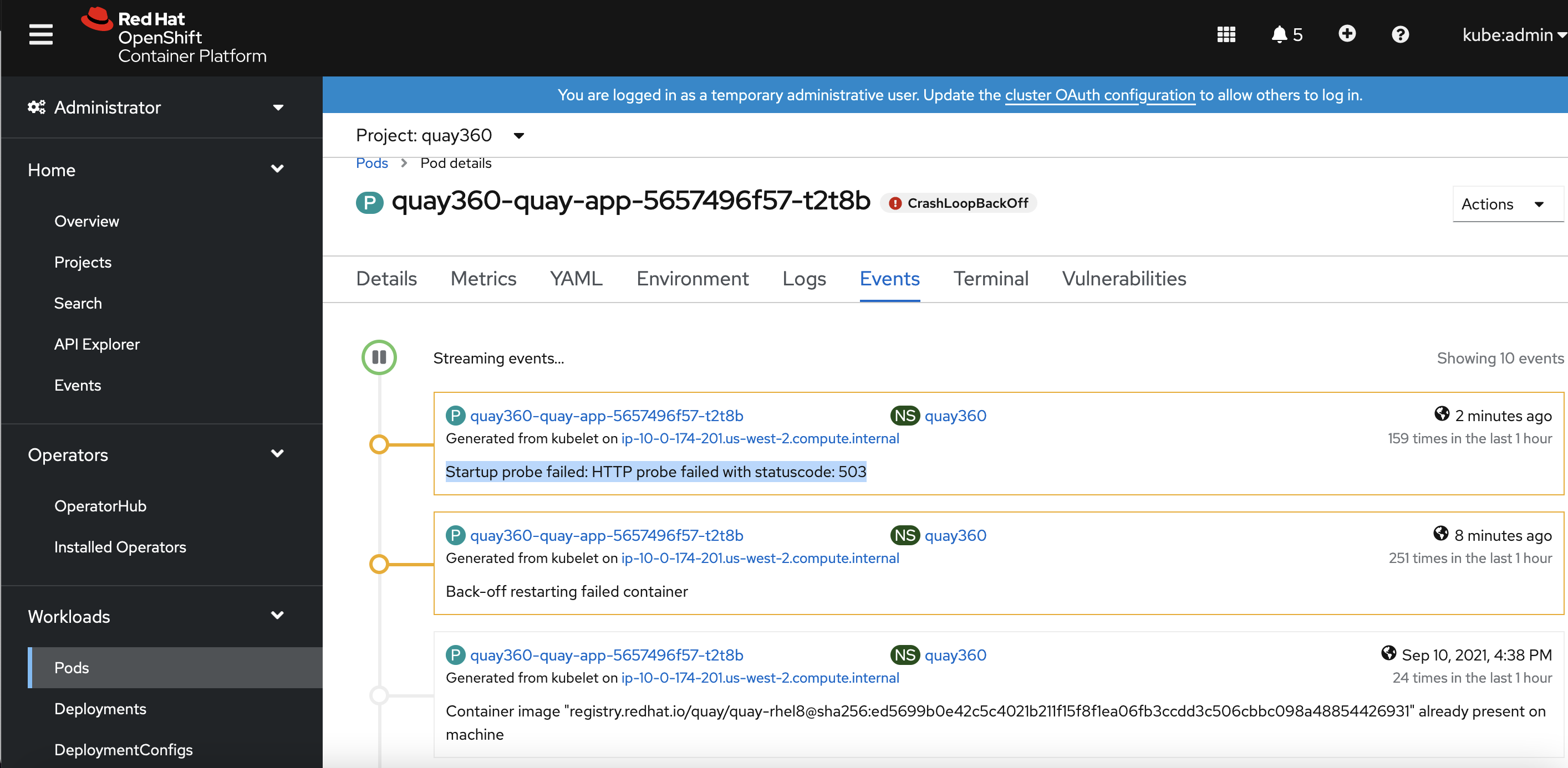

This issue was found while using the config editor to configure Quay to use AWS RDS MySQL 8.0 with SSL enforced. After the Quay Operator reconciled the change, the new Quay app pod failed to start because its startupProbe check failed. The target MySQL database and the Quay upgrade pod logs show that all 93 tables were created correctly, and the Database Validation check in the Quay app pod is green. The detailed Quay app pod logs are attached as quay_360_app_mysql_pod.logs.
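For context, a Kubernetes `httpGet` probe counts as successful when the endpoint returns a status code of at least 200 and below 400. Any other status, a refused connection, or a timeout counts as a failure. The minimal Python sketch below approximates one such check so that `/health/instance` can be probed by hand. It is not how the kubelet is implemented, and the function names are hypothetical.

```python
import urllib.error
import urllib.request


def http_probe_succeeded(status_code: int) -> bool:
    """Kubernetes treats an HTTP probe as successful for 200 <= code < 400."""
    return 200 <= status_code < 400


def probe_once(url: str, timeout_seconds: float) -> bool:
    """Issue one GET request, roughly like a single kubelet httpGet probe (sketch only)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout_seconds) as resp:
            return http_probe_succeeded(resp.status)
    except urllib.error.HTTPError as err:
        # urllib raises HTTPError for 4xx/5xx responses, so classify by its status code.
        return http_probe_succeeded(err.code)
    except (urllib.error.URLError, OSError):
        # A refused connection, DNS failure, or timeout all count as probe failures.
        return False
```

For example, `probe_once("http://10.131.1.180:8080/health/instance", timeout_seconds=20)` would roughly mirror the startupProbe of the failing pod. The pod IP, port, path, and timeout are taken from the pod YAML in this report. The call assumes you run it from somewhere that can reach the pod network.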

Note: The Quay image is "quay-operator-bundle-container-v3.6.0-30".

Note: This issue does not occur with Quay 3.5.6.

    oc get pod
    NAME                                          READY   STATUS              RESTARTS   AGE
    quay-operator.v3.6.0-6d8c55c69c-rf4xm         1/1     Running             0          5h15m
    quay360-clair-app-85fffbff44-88tvz            1/1     Running             0          73m
    quay360-clair-app-85fffbff44-zp7s2            1/1     Running             0          73m
    quay360-clair-postgres-6785f8db5d-k2flw       1/1     Running             0          72m
    quay360-quay-app-5657496f57-65cf7             0/1     CrashLoopBackOff    17         73m
    quay360-quay-app-5657496f57-t2t8b             0/1     Running             18         73m
    quay360-quay-app-5fcf45f9bd-bc8s7             1/1     Running             0          5h8m
    quay360-quay-app-upgrade-45k9j                0/1     Completed           0          73m
    quay360-quay-config-editor-546f7cc75f-g7m5f   1/1     Running             0          73m
    quay360-quay-database-5578464b47-8lwn2        0/1     ContainerCreating   0          5h8m
    quay360-quay-database-6f564989f9-xcvzk        1/1     Running             0          5h9m
    quay360-quay-mirror-8548866666-67rrd          1/1     Running             0          72m
    quay360-quay-mirror-8548866666-gt9jx          1/1     Running             0          72m
    quay360-quay-postgres-init-9ctdp              1/1     Running             0          5h8m
    quay360-quay-redis-9b98c6ff7-995jq            1/1     Running             0          73m

    oc get pod quay-operator.v3.6.0-6d8c55c69c-rf4xm -o json | jq '.spec.containers[0].image'
    "registry.redhat.io/quay/quay-operator-rhel8@sha256:c31485bc404255f94a5ef10bc83dbc5df971d92a3c499fe20316a34fde12018c"

    oc get pod quay360-quay-app-5657496f57-65cf7 -o json | jq '.spec.containers[0].image'
    "registry.redhat.io/quay/quay-rhel8@sha256:ed5699b0e42c5c4021b211f15f8f1ea06fb3ccdd3c506cbbc098a48854426931"

YAML of the new Quay 3.6.0 app pod:

    apiVersion: v1
    kind: Pod
    metadata:
      annotations:
        k8s.v1.cni.cncf.io/network-status: |-
          [{
              "name": "openshift-sdn",
              "interface": "eth0",
              "ips": [
                  "10.131.1.180"
              ],
              "default": true,
              "dns": {}
          }]
        k8s.v1.cni.cncf.io/networks-status: |-
          [{
              "name": "openshift-sdn",
              "interface": "eth0",
              "ips": [
                  "10.131.1.180"
              ],
              "default": true,
              "dns": {}
          }]
        openshift.io/scc: restricted
        quay-buildmanager-hostname: ""
        quay-managed-fieldgroups: SecurityScanner,Redis,HostSettings,RepoMirror
        quay-operator-service-endpoint: http://quay-operator.quay360:7071
        quay-registry-hostname: quayv360.apps.quay-perf-738.perfscale.devcluster.openshift.com
      creationTimestamp: "2021-09-10T06:58:02Z"
      generateName: quay360-quay-app-5657496f57-
      labels:
        app: quay
        pod-template-hash: 5657496f57
        quay-component: quay-app
        quay-operator/quayregistry: quay360
      managedFields:
      - apiVersion: v1
        fieldsType: FieldsV1
        fieldsV1:
          f:metadata:
            f:annotations:
              .: {}
              f:quay-buildmanager-hostname: {}
              f:quay-managed-fieldgroups: {}
              f:quay-operator-service-endpoint: {}
              f:quay-registry-hostname: {}
            f:generateName: {}
            f:labels:
              .: {}
              f:app: {}
              f:pod-template-hash: {}
              f:quay-component: {}
              f:quay-operator/quayregistry: {}
            f:ownerReferences:
              .: {}
              k:{"uid":"091325ec-c748-4619-a0b4-bcb758f7886c"}:
                .: {}
                f:apiVersion: {}
                f:blockOwnerDeletion: {}
                f:controller: {}
                f:kind: {}
                f:name: {}
                f:uid: {}
          f:spec:
            f:containers:
              k:{"name":"quay-app"}:
                .: {}
                f:args: {}
                f:env:
                  .: {}
                  k:{"name":"DEBUGLOG"}:
                    .: {}
                    f:name: {}
                    f:value: {}
                  k:{"name":"QE_K8S_CONFIG_SECRET"}:
                    .: {}
                    f:name: {}
                    f:value: {}
                  k:{"name":"QE_K8S_NAMESPACE"}:
                    .: {}
                    f:name: {}
                    f:valueFrom:
                      .: {}
                      f:fieldRef:
                        .: {}
                        f:apiVersion: {}
                        f:fieldPath: {}
                  k:{"name":"WORKER_COUNT_REGISTRY"}:
                    .: {}
                    f:name: {}
                    f:value: {}
                  k:{"name":"WORKER_COUNT_SECSCAN"}:
                    .: {}
                    f:name: {}
                    f:value: {}
                  k:{"name":"WORKER_COUNT_WEB"}:
                    .: {}
                    f:name: {}
                    f:value: {}
                f:image: {}
                f:imagePullPolicy: {}
                f:name: {}
                f:ports:
                  .: {}
                  k:{"containerPort":8080,"protocol":"TCP"}:
                    .: {}
                    f:containerPort: {}
                    f:protocol: {}
                  k:{"containerPort":8081,"protocol":"TCP"}:
                    .: {}
                    f:containerPort: {}
                    f:protocol: {}
                  k:{"containerPort":8443,"protocol":"TCP"}:
                    .: {}
                    f:containerPort: {}
                    f:protocol: {}
                  k:{"containerPort":9091,"protocol":"TCP"}:
                    .: {}
                    f:containerPort: {}
                    f:protocol: {}
                f:readinessProbe:
                  .: {}
                  f:failureThreshold: {}
                  f:httpGet:
                    .: {}
                    f:path: {}
                    f:port: {}
                    f:scheme: {}
                  f:periodSeconds: {}
                  f:successThreshold: {}
                  f:timeoutSeconds: {}
                f:resources:
                  .: {}
                  f:limits:
                    .: {}
                    f:cpu: {}
                    f:memory: {}
                  f:requests:
                    .: {}
                    f:cpu: {}
                    f:memory: {}
                f:startupProbe:
                  .: {}
                  f:failureThreshold: {}
                  f:httpGet:
                    .: {}
                    f:path: {}
                    f:port: {}
                    f:scheme: {}
                  f:periodSeconds: {}
                  f:successThreshold: {}
                  f:timeoutSeconds: {}
                f:terminationMessagePath: {}
                f:terminationMessagePolicy: {}
                f:volumeMounts:
                  .: {}
                  k:{"mountPath":"/conf/stack"}:
                    .: {}
                    f:mountPath: {}
                    f:name: {}
                  k:{"mountPath":"/conf/stack/extra_ca_certs"}:
                    .: {}
                    f:mountPath: {}
                    f:name: {}
                    f:readOnly: {}
            f:dnsPolicy: {}
            f:enableServiceLinks: {}
            f:restartPolicy: {}
            f:schedulerName: {}
            f:securityContext:
              .: {}
              f:fsGroup: {}
              f:seLinuxOptions:
                f:level: {}
            f:serviceAccount: {}
            f:serviceAccountName: {}
            f:terminationGracePeriodSeconds: {}
            f:volumes:
              .: {}
              k:{"name":"config"}:
                .: {}
                f:name: {}
                f:secret:
                  .: {}
                  f:defaultMode: {}
                  f:secretName: {}
              k:{"name":"extra-ca-certs"}:
                .: {}
                f:configMap:
                  .: {}
                  f:defaultMode: {}
                  f:name: {}
                f:name: {}
        manager: kube-controller-manager
        operation: Update
        time: "2021-09-10T06:58:02Z"
      - apiVersion: v1
        fieldsType: FieldsV1
        fieldsV1:
          f:status:
            f:conditions:
              k:{"type":"ContainersReady"}:
                .: {}
                f:lastProbeTime: {}
                f:lastTransitionTime: {}
                f:message: {}
                f:reason: {}
                f:status: {}
                f:type: {}
              k:{"type":"Initialized"}:
                .: {}
                f:lastProbeTime: {}
                f:lastTransitionTime: {}
                f:status: {}
                f:type: {}
              k:{"type":"Ready"}:
                .: {}
                f:lastProbeTime: {}
                f:lastTransitionTime: {}
                f:message: {}
                f:reason: {}
                f:status: {}
                f:type: {}
            f:containerStatuses: {}
            f:hostIP: {}
            f:phase: {}
            f:podIP: {}
            f:podIPs:
              .: {}
              k:{"ip":"10.131.1.180"}:
                .: {}
                f:ip: {}
            f:startTime: {}
        manager: kubelet
        operation: Update
        time: "2021-09-10T06:58:05Z"
      - apiVersion: v1
        fieldsType: FieldsV1
        fieldsV1:
          f:metadata:
            f:annotations:
              f:k8s.v1.cni.cncf.io/network-status: {}
              f:k8s.v1.cni.cncf.io/networks-status: {}
        manager: multus
        operation: Update
        time: "2021-09-10T06:58:05Z"
      name: quay360-quay-app-5657496f57-65cf7
      namespace: quay360
      ownerReferences:
      - apiVersion: apps/v1
        blockOwnerDeletion: true
        controller: true
        kind: ReplicaSet
        name: quay360-quay-app-5657496f57
        uid: 091325ec-c748-4619-a0b4-bcb758f7886c
      resourceVersion: "19834611"
      uid: 1094ad7f-064f-4bbf-92a8-ae601c7f7983
    spec:
      containers:
      - args:
        - registry-nomigrate
        env:
        - name: QE_K8S_CONFIG_SECRET
          value: quay360-quay-config-secret-cg67d2bf58
        - name: QE_K8S_NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        - name: DEBUGLOG
          value: "false"
        - name: WORKER_COUNT_WEB
          value: "4"
        - name: WORKER_COUNT_SECSCAN
          value: "2"
        - name: WORKER_COUNT_REGISTRY
          value: "8"
        image: registry.redhat.io/quay/quay-rhel8@sha256:ed5699b0e42c5c4021b211f15f8f1ea06fb3ccdd3c506cbbc098a48854426931
        imagePullPolicy: IfNotPresent
        name: quay-app
        ports:
        - containerPort: 8443
          protocol: TCP
        - containerPort: 8080
          protocol: TCP
        - containerPort: 8081
          protocol: TCP
        - containerPort: 9091
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /health/instance
            port: 8080
            scheme: HTTP
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        resources:
          limits:
            cpu: "2"
            memory: 8Gi
          requests:
            cpu: "2"
            memory: 8Gi
        securityContext:
          capabilities:
            drop:
            - KILL
            - MKNOD
            - SETGID
            - SETUID
          runAsUser: 1000660000
        startupProbe:
          failureThreshold: 10
          httpGet:
            path: /health/instance
            port: 8080
            scheme: HTTP
          periodSeconds: 15
          successThreshold: 1
          timeoutSeconds: 20
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /conf/stack
          name: config
        - mountPath: /conf/stack/extra_ca_certs
          name: extra-ca-certs
          readOnly: true
        - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
          name: kube-api-access-mk2p4
          readOnly: true
      dnsPolicy: ClusterFirst
      enableServiceLinks: true
      imagePullSecrets:
      - name: quay360-quay-app-dockercfg-l75qb
      nodeName: ip-10-0-139-92.us-west-2.compute.internal
      preemptionPolicy: PreemptLowerPriority
      priority: 0
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext:
        fsGroup: 1000660000
        seLinuxOptions:
          level: s0:c26,c5
      serviceAccount: quay360-quay-app
      serviceAccountName: quay360-quay-app
      terminationGracePeriodSeconds: 30
      tolerations:
      - effect: NoExecute
        key: node.kubernetes.io/not-ready
        operator: Exists
        tolerationSeconds: 300
      - effect: NoExecute
        key: node.kubernetes.io/unreachable
        operator: Exists
        tolerationSeconds: 300
      - effect: NoSchedule
        key: node.kubernetes.io/memory-pressure
        operator: Exists
      volumes:
      - name: config
        secret:
          defaultMode: 420
          secretName: quay360-quay-config-secret-cg67d2bf58
      - configMap:
          defaultMode: 420
          name: quay360-cluster-service-ca
        name: extra-ca-certs
      - name: kube-api-access-mk2p4
        projected:
          defaultMode: 420
          sources:
          - serviceAccountToken:
              expirationSeconds: 3607
              path: token
          - configMap:
              items:
              - key: ca.crt
                path: ca.crt
              name: kube-root-ca.crt
          - downwardAPI:
              items:
              - fieldRef:
                  apiVersion: v1
                  fieldPath: metadata.namespace
                path: namespace
          - configMap:
              items:
              - key: service-ca.crt
                path: service-ca.crt
              name: openshift-service-ca.crt
    status:
      conditions:
      - lastProbeTime: null
        lastTransitionTime: "2021-09-10T06:58:02Z"
        status: "True"
        type: Initialized
      - lastProbeTime: null
        lastTransitionTime: "2021-09-10T06:58:02Z"
        message: 'containers with unready status: [quay-app]'
        reason: ContainersNotReady
        status: "False"
        type: Ready
      - lastProbeTime: null
        lastTransitionTime: "2021-09-10T06:58:02Z"
        message: 'containers with unready status: [quay-app]'
        reason: ContainersNotReady
        status: "False"
        type: ContainersReady
      - lastProbeTime: null
        lastTransitionTime: "2021-09-10T06:58:02Z"
        status: "True"
        type: PodScheduled
      containerStatuses:
      - containerID: cri-o://0562287b2060838753352884f9c610be100c2155f91e65790f6db6d0f79eac82
        image: registry.redhat.io/quay/quay-rhel8@sha256:ed5699b0e42c5c4021b211f15f8f1ea06fb3ccdd3c506cbbc098a48854426931
        imageID: registry.redhat.io/quay/quay-rhel8@sha256:5da615fca43ceeb478626fb9b7ec646a1dd3cd0c626b2769265d31ea04d937f9
        lastState:
          terminated:
            containerID: cri-o://0562287b2060838753352884f9c610be100c2155f91e65790f6db6d0f79eac82
            exitCode: 0
            finishedAt: "2021-09-10T08:58:46Z"
            reason: Completed
            startedAt: "2021-09-10T08:56:13Z"
        name: quay-app
        ready: false
        restartCount: 27
        started: false
        state:
          waiting:
            message: back-off 5m0s restarting failed container=quay-app pod=quay360-quay-app-5657496f57-65cf7_quay360(1094ad7f-064f-4bbf-92a8-ae601c7f7983)
            reason: CrashLoopBackOff
      hostIP: 10.0.139.92
      phase: Running
      podIP: 10.131.1.180
      podIPs:
      - ip: 10.131.1.180
      qosClass: Guaranteed
      startTime: "2021-09-10T06:58:02Z"
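The Kubernetes documentation gives `failureThreshold * periodSeconds` as the time a container has to pass its startup probe before the kubelet kills and restarts it. The startupProbe above sets failureThreshold 10 and periodSeconds 15 and leaves initialDelaySeconds unset, which gives about 150 seconds. That figure is consistent with the 153 seconds between `startedAt` and `finishedAt` in `lastState.terminated`. The Python sketch below only does that arithmetic, and the helper names are hypothetical.

```python
from datetime import datetime

# Timestamp layout used in the pod status above (UTC, second precision).
TIMESTAMP_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def startup_budget_seconds(failure_threshold, period_seconds, initial_delay_seconds=0):
    """Approximate time a container gets to pass its startupProbe (rule of thumb from the K8s docs)."""
    return initial_delay_seconds + failure_threshold * period_seconds


def run_seconds(started_at, finished_at):
    """Seconds between two pod-status timestamps."""
    start = datetime.strptime(started_at, TIMESTAMP_FORMAT)
    end = datetime.strptime(finished_at, TIMESTAMP_FORMAT)
    return (end - start).total_seconds()


# Values copied from the quay-app startupProbe and lastState.terminated in the pod YAML.
budget = startup_budget_seconds(failure_threshold=10, period_seconds=15)
elapsed = run_seconds("2021-09-10T08:56:13Z", "2021-09-10T08:58:46Z")
print(budget, elapsed)  # 150 153.0
```

This only shows that the container was stopped at roughly the end of the startup window. It does not by itself explain why `/health/instance` kept failing.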

Quay app upgrade pod logs:

    oc logs quay360-quay-app-upgrade-45k9j
    Entering migration mode to version: head
    /quay-registry/data/secscan_model/__init__.py:28: DeprecationWarning: Call to deprecated class V2SecurityScanner. (Will be replaced by a V4 API security scanner soon)
      self._legacy_model = V2SecurityScanner(app, instance_keys, storage)
    Failed to validate security scanner V2 configuration
    /quay-registry/data/secscan_model/__init__.py:30: DeprecationWarning: Call to deprecated class NoopV2SecurityScanner. (Will be replaced by a V4 API security scanner soon)
      self._legacy_model = NoopV2SecurityScanner()
    06:58:05 INFO [alembic.runtime.migration] Context impl MySQLImpl.
    06:58:05 INFO [alembic.runtime.migration] Will assume non-transactional DDL.
    06:58:05 INFO [alembic.runtime.migration] Running upgrade -> c156deb8845d, Reset our migrations with a required update.
    06:58:11 INFO [alembic.runtime.migration] Running upgrade c156deb8845d -> 6c7014e84a5e, Add user prompt support.
    06:58:12 INFO [alembic.runtime.migration] Running upgrade 6c7014e84a5e -> faf752bd2e0a, Add user metadata fields.
    06:58:12 INFO [alembic.runtime.migration] Running upgrade faf752bd2e0a -> 94836b099894, Create new notification type.
    06:58:12 INFO [alembic.runtime.migration] Running upgrade 94836b099894 -> 45fd8b9869d4, add_notification_type.
    06:58:12 INFO [alembic.runtime.migration] Running upgrade 45fd8b9869d4 -> f5167870dd66, update queue item table indices.
    06:58:12 INFO [alembic.runtime.migration] Running upgrade f5167870dd66 -> fc47c1ec019f, Add state_id field to QueueItem.
    06:58:12 INFO [alembic.runtime.migration] Running upgrade fc47c1ec019f -> 3e8cc74a1e7b, Add severity and media_type to global messages.
    06:58:12 INFO [alembic.runtime.migration] Running upgrade 3e8cc74a1e7b -> d42c175b439a, Backfill state_id and make it unique.
    06:58:12 INFO [alembic.runtime.migration] Running upgrade d42c175b439a -> e2894a3a3c19, Add full text search indexing for repo name and description.
    /usr/local/lib/python3.8/site-packages/pymysql/cursors.py:170: Warning: (124, 'InnoDB rebuilding table to add column FTS_DOC_ID')
      result = self._query(query)
    06:58:13 INFO [alembic.runtime.migration] Running upgrade e2894a3a3c19 -> 7a525c68eb13, Add OCI/App models.
    06:58:15 INFO [alembic.runtime.migration] Running upgrade 7a525c68eb13 -> b8ae68ad3e52, Change BlobUpload fields to BigIntegers to allow layers > 8GB.
    06:58:15 INFO [alembic.runtime.migration] Running upgrade b8ae68ad3e52 -> b4df55dea4b3, add repository kind.
    06:58:16 INFO [alembic.runtime.migration] Running upgrade b4df55dea4b3 -> a6c463dfb9fe, back fill build expand_config.
    06:58:16 INFO [alembic.runtime.migration] Running upgrade a6c463dfb9fe -> be8d1c402ce0, Add TeamSync table.
    06:58:16 INFO [alembic.runtime.migration] Running upgrade be8d1c402ce0 -> f30984525c86, Add RepositorySearchScore table.
    06:58:16 INFO [alembic.runtime.migration] Running upgrade f30984525c86 -> c3d4b7ebcdf7, Backfill RepositorySearchScore table.
    06:58:16 INFO [alembic.runtime.migration] Running upgrade c3d4b7ebcdf7 -> ed01e313d3cb, Add trust_enabled to repository.
    06:58:17 INFO [alembic.runtime.migration] Running upgrade ed01e313d3cb -> 53e2ac668296, Remove reference to subdir.
    06:58:17 INFO [alembic.runtime.migration] Running upgrade 53e2ac668296 -> dc4af11a5f90, add notification number of failures column.
    06:58:17 INFO [alembic.runtime.migration] Running upgrade dc4af11a5f90 -> d8989249f8f6, Add change_tag_expiration log type.
    06:58:17 INFO [alembic.runtime.migration] Running upgrade d8989249f8f6 -> 7367229b38d9, Add support for app specific tokens.
    06:58:17 INFO [alembic.runtime.migration] Running upgrade 7367229b38d9 -> cbc8177760d9, Add user location field.
    06:58:17 INFO [alembic.runtime.migration] Running upgrade cbc8177760d9 -> 152bb29a1bb3, Add maximum build queue count setting to user table.
    06:58:17 INFO [alembic.runtime.migration] Running upgrade 152bb29a1bb3 -> c91c564aad34, Drop checksum on ImageStorage.
    06:58:17 INFO [alembic.runtime.migration] Running upgrade c91c564aad34 -> 152edccba18c, Make BlodUpload byte_count not nullable.
    06:58:17 INFO [alembic.runtime.migration] Running upgrade 152edccba18c -> b4c2d45bc132, Add deleted namespace table.
    06:58:18 INFO [alembic.runtime.migration] Running upgrade b4c2d45bc132 -> 61cadbacb9fc, Add ability for build triggers to be disabled.
    06:58:18 INFO [alembic.runtime.migration] Running upgrade 61cadbacb9fc -> 17aff2e1354e, Add automatic disable of build triggers.
    06:58:18 INFO [alembic.runtime.migration] Running upgrade 17aff2e1354e -> 87fbbc224f10, Add disabled datetime to trigger.
    06:58:18 INFO [alembic.runtime.migration] Running upgrade 87fbbc224f10 -> 0cf50323c78b, Add creation date to User table.
    06:58:18 INFO [alembic.runtime.migration] Running upgrade 0cf50323c78b -> b547bc139ad8, Add RobotAccountMetadata table.
    06:58:18 INFO [alembic.runtime.migration] Running upgrade b547bc139ad8 -> 224ce4c72c2f, Add last_accessed field to User table.
    06:58:18 INFO [alembic.runtime.migration] Running upgrade 224ce4c72c2f -> 5b7503aada1b, Cleanup old robots.
    06:58:18 INFO [util.migrate.cleanup_old_robots] Found 0 robots to delete
    06:58:18 INFO [alembic.runtime.migration] Running upgrade 5b7503aada1b -> 1783530bee68, Add LogEntry2 table - QUAY.IO ONLY
    06:58:19 INFO [alembic.runtime.migration] Running upgrade 1783530bee68 -> 5cbbfc95bac7, Remove 'oci' tables not used by CNR. The rest will be migrated and renamed.
    06:58:19 INFO [alembic.runtime.migration] Running upgrade 5cbbfc95bac7 -> 610320e9dacf, Add new Appr-specific tables.
    06:58:20 INFO [alembic.runtime.migration] Running upgrade 610320e9dacf -> 5d463ea1e8a8, Backfill new appr tables.
    06:58:20 INFO [alembic.runtime.migration] Running upgrade 5d463ea1e8a8 -> d17c695859ea, Delete old Appr tables.
    06:58:20 INFO [alembic.runtime.migration] Running upgrade d17c695859ea -> 6c21e2cfb8b6, Change LogEntry to use a BigInteger as its primary key.
    06:58:20 INFO [alembic.runtime.migration] Running upgrade 6c21e2cfb8b6 -> 9093adccc784, Add V2_2 data models for Manifest, ManifestBlob and ManifestLegacyImage.
    06:58:22 INFO [alembic.runtime.migration] Running upgrade 9093adccc784 -> eafdeadcebc7, Remove blob_index from ManifestBlob table.
    06:58:22 INFO [alembic.runtime.migration] Running upgrade eafdeadcebc7 -> 654e6df88b71, Change manifest_bytes to a UTF8 text field.
    06:58:22 INFO [alembic.runtime.migration] Running upgrade 654e6df88b71 -> 13411de1c0ff, Remove unique from TagManifestToManifest.
    06:58:22 INFO [alembic.runtime.migration] Running upgrade 13411de1c0ff -> 10f45ee2310b, Add Tag, TagKind and ManifestChild tables.
    06:58:23 INFO [alembic.runtime.migration] Running upgrade 10f45ee2310b -> 67f0abd172ae, Add TagToRepositoryTag table.
    06:58:23 INFO [alembic.runtime.migration] Running upgrade 67f0abd172ae -> c00a1f15968b
    06:58:23 INFO [alembic.runtime.migration] Running upgrade c00a1f15968b -> 54492a68a3cf, Add NamespaceGeoRestriction table.
    06:58:23 INFO [alembic.runtime.migration] Running upgrade 54492a68a3cf -> 6ec8726c0ace, Add LogEntry3 table.
    06:58:23 INFO [alembic.runtime.migration] Running upgrade 6ec8726c0ace -> e184af42242d, Add missing index on UUID fields.
    06:58:23 INFO [alembic.runtime.migration] Running upgrade e184af42242d -> b9045731c4de, Add lifetime end indexes to tag tables.
    06:58:23 INFO [alembic.runtime.migration] Running upgrade b9045731c4de -> 481623ba00ba, Add index on logs_archived on repositorybuild.
    06:58:23 INFO [alembic.runtime.migration] Running upgrade 481623ba00ba -> b918abdbee43, Run full tag backfill.
    06:58:23 INFO [alembic.runtime.migration] Running upgrade b918abdbee43 -> 5248ddf35167, Repository Mirror.
    06:58:25 INFO [alembic.runtime.migration] Running upgrade 5248ddf35167 -> c13c8052f7a6, Add new fields and tables for encrypted tokens.
    06:58:26 INFO [alembic.runtime.migration] Running upgrade c13c8052f7a6 -> 703298a825c2, Backfill new encrypted fields.
    06:58:26 INFO [703298a825c2_backfill_new_encrypted_fields_py] Backfilling encrypted credentials for access tokens
    06:58:26 INFO [703298a825c2_backfill_new_encrypted_fields_py] Backfilling encrypted credentials for robots
    06:58:26 INFO [703298a825c2_backfill_new_encrypted_fields_py] Backfilling encrypted credentials for repo build triggers
    06:58:26 INFO [703298a825c2_backfill_new_encrypted_fields_py] Backfilling encrypted credentials for app specific auth tokens
    06:58:26 INFO [703298a825c2_backfill_new_encrypted_fields_py] Backfilling credentials for OAuth access tokens
    06:58:26 INFO [703298a825c2_backfill_new_encrypted_fields_py] Backfilling credentials for OAuth auth code
    06:58:26 INFO [703298a825c2_backfill_new_encrypted_fields_py] Backfilling secret for OAuth applications
    06:58:26 INFO [alembic.runtime.migration] Running upgrade 703298a825c2 -> 49e1138ed12d, Change token column types for encrypted columns.
    06:58:27 INFO [alembic.runtime.migration] Running upgrade 49e1138ed12d -> c059b952ed76, Remove unencrypted fields and data.
    06:58:28 INFO [alembic.runtime.migration] Running upgrade c059b952ed76 -> cc6778199cdb, repository mirror notification.
    06:58:28 INFO [alembic.runtime.migration] Running upgrade cc6778199cdb -> 34c8ef052ec9, repo mirror columns.
    06:58:28 INFO [34c8ef052ec9_repo_mirror_columns_py] Migrating to external_reference from existing columns
    06:58:28 INFO [34c8ef052ec9_repo_mirror_columns_py] Reencrypting existing columns
    06:58:28 INFO [alembic.runtime.migration] Running upgrade 34c8ef052ec9 -> 4fd6b8463eb2, Add new DeletedRepository tracking table.
    06:58:28 INFO [alembic.runtime.migration] Running upgrade 4fd6b8463eb2 -> 8e6a363784bb, add manifestsecuritystatus table
    06:58:29 INFO [alembic.runtime.migration] Running upgrade 8e6a363784bb -> 04b9d2191450
    06:58:29 INFO [alembic.runtime.migration] Running upgrade 04b9d2191450 -> 3383aad1e992, Add UploadedBlob table
    06:58:29 INFO [alembic.runtime.migration] Running upgrade 3383aad1e992 -> 88e64904d000, Add new metadata columns to Manifest table
    06:58:29 INFO [alembic.runtime.migration] Running upgrade 88e64904d000 -> 909d725887d3, Add composite index on manifestblob
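You can parse the `Running upgrade <from> -> <to>` lines to confirm that the upgrade pod followed an unbroken Alembic revision chain and to see where it stopped. The Python sketch below does this with a regular expression. The pattern is an assumption based on the log lines shown above, and the function name is hypothetical.

```python
import re
from typing import List, Optional, Tuple

# Matches "Running upgrade <from> -> <to>"; <from> is empty for the very first migration.
UPGRADE_RE = re.compile(r"Running upgrade[ \t]+(\w*)[ \t]*->[ \t]*(\w+)")


def final_revision(log_text: str) -> Optional[str]:
    """Return the last revision applied, checking each step continues from the previous one."""
    steps: List[Tuple[str, str]] = [m.groups() for m in UPGRADE_RE.finditer(log_text)]
    for (_, prev_to), (cur_from, _) in zip(steps, steps[1:]):
        if cur_from != prev_to:
            raise ValueError(f"gap in migration chain: {prev_to} -> {cur_from}")
    return steps[-1][1] if steps else None
```

For the log above, the chain should end at `909d725887d3`. You can then compare that result with the revision stored in the database, for example with `SELECT version_num FROM alembic_version;`. This assumes Quay keeps Alembic's default version table.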

config.yaml used by the new Quay app pod:

    ALLOW_PULLS_WITHOUT_STRICT_LOGGING: false
    ALLOWED_OCI_ARTIFACT_TYPES:
      application/vnd.cncf.helm.config.v1+json:
      - application/tar+gzip
      application/vnd.oci.image.layer.v1.tar+gzip+encrypted:
      - application/vnd.oci.image.layer.v1.tar+gzip+encrypted
    AUTHENTICATION_TYPE: Database
    AVATAR_KIND: local
    BROWSER_API_CALLS_XHR_ONLY: false
    BUILDLOGS_REDIS:
      host: quay360-quay-redis
      port: 6379
    CREATE_REPOSITORY_ON_PUSH_PUBLIC: true
    DATABASE_SECRET_KEY: VO7oiSxeqyja0zSDhEQV1nxV98KJzOshxRUL29y4ihu3iarQwql893PhV4dNa652XpHIE4WpxsFA51G1
    DB_CONNECTION_ARGS:
      autorollback: true
      ssl:
        ca: conf/stack/database.pem
      threadlocals: true
    DB_URI: mysql+pymysql://quayrdsdb:quayrdsdb@terraform-20210910024029170100000001.c2dhnkrh69gr.us-west-2.rds.amazonaws.com:3306/quay360
    DEFAULT_TAG_EXPIRATION: 4w
    DISTRIBUTED_STORAGE_CONFIG:
      default:
      - S3Storage
      - host: s3.us-east-2.amazonaws.com
        s3_access_key: AKIAUMQAHCJON275SXFZ
        s3_bucket: quay360
        s3_secret_key: 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
        storage_path: /quay360
    DISTRIBUTED_STORAGE_DEFAULT_LOCATIONS:
    - default
    DISTRIBUTED_STORAGE_PREFERENCE:
    - default
    ENTERPRISE_LOGO_URL: /static/img/quay-horizontal-color.svg
    EXTERNAL_TLS_TERMINATION: true
    FEATURE_ACTION_LOG_ROTATION: false
    FEATURE_ANONYMOUS_ACCESS: true
    FEATURE_APP_SPECIFIC_TOKENS: true
    FEATURE_BITBUCKET_BUILD: false
    FEATURE_BLACKLISTED_EMAILS: false
    FEATURE_BUILD_SUPPORT: false
    FEATURE_CHANGE_TAG_EXPIRATION: true
    FEATURE_DIRECT_LOGIN: true
    FEATURE_EXTENDED_REPOSITORY_NAMES: true
    FEATURE_FIPS: false
    FEATURE_GENERAL_OCI_SUPPORT: true
    FEATURE_GITHUB_BUILD: false
    FEATURE_GITHUB_LOGIN: false
    FEATURE_GITLAB_BUILD: false
    FEATURE_GOOGLE_LOGIN: false
    FEATURE_HELM_OCI_SUPPORT: false
    FEATURE_INVITE_ONLY_USER_CREATION: false
    FEATURE_MAILING: false
    FEATURE_NONSUPERUSER_TEAM_SYNCING_SETUP: false
    FEATURE_PARTIAL_USER_AUTOCOMPLETE: true
    FEATURE_PROXY_STORAGE: false
    FEATURE_REPO_MIRROR: true
    FEATURE_SECURITY_NOTIFICATIONS: true
    FEATURE_SECURITY_SCANNER: true
    FEATURE_SIGNING: false
    FEATURE_STORAGE_REPLICATION: false
    FEATURE_TEAM_SYNCING: false
    FEATURE_USER_CREATION: true
    FEATURE_USER_INITIALIZE: true
    FEATURE_USER_LAST_ACCESSED: true
    FEATURE_USER_LOG_ACCESS: false
    FEATURE_USER_METADATA: false
    FEATURE_USER_RENAME: false
    FEATURE_USERNAME_CONFIRMATION: true
    FRESH_LOGIN_TIMEOUT: 10m
    GITHUB_LOGIN_CONFIG: {}
    GITHUB_TRIGGER_CONFIG: {}
    GITLAB_TRIGGER_KIND: {}
    GPG2_PRIVATE_KEY_FILENAME: signing-private.gpg
    GPG2_PUBLIC_KEY_FILENAME: signing-public.gpg
    LDAP_ALLOW_INSECURE_FALLBACK: false
    LDAP_EMAIL_ATTR: mail
    LDAP_UID_ATTR: uid
    LDAP_URI: ldap://localhost
    LOGS_MODEL: database
    LOGS_MODEL_CONFIG: {}
    MAIL_DEFAULT_SENDER: support@quay.io
    MAIL_PORT: 587
    MAIL_USE_AUTH: false
    MAIL_USE_TLS: false
    PREFERRED_URL_SCHEME: https
    REGISTRY_TITLE: Quay
    REGISTRY_TITLE_SHORT: Quay
    REPO_MIRROR_INTERVAL: 30
    REPO_MIRROR_TLS_VERIFY: true
    SEARCH_MAX_RESULT_PAGE_COUNT: 10
    SEARCH_RESULTS_PER_PAGE: 10
    SECRET_KEY: Fhybvqjq-FCghOcU2SO7gNadvuGFsS13ZYlRGHbyFafT3FB219qeg0be471HmsZ0xp48TVdraiE79nFI
    SECURITY_SCANNER_INDEXING_INTERVAL: 30
    SECURITY_SCANNER_V4_ENDPOINT: http://quay360-clair-app:80
    SECURITY_SCANNER_V4_NAMESPACE_WHITELIST:
    - admin
    SECURITY_SCANNER_V4_PSK: OEgwQWhDSGh5YVd5N2hLQm1DcDE4RTB2TkNOdTZuZk0=
    SERVER_HOSTNAME: quayv360.apps.quay-perf-738.perfscale.devcluster.openshift.com
    SETUP_COMPLETE: true
    SUPER_USERS:
    - quay
    - admin
    TAG_EXPIRATION_OPTIONS:
    - 2w
    - 4w
    - 8w
    TEAM_RESYNC_STALE_TIME: 60m
    TESTING: false
    USER_EVENTS_REDIS:
      host: quay360-quay-redis
      port: 6379
    USER_RECOVERY_TOKEN_LIFETIME: 30m
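The `DB_URI` and `DB_CONNECTION_ARGS.ssl` entries above describe a TLS connection that PyMySQL can open directly. PyMySQL is the driver named by the `mysql+pymysql` scheme. This makes it possible to test the same credentials and CA bundle outside Quay. The Python sketch below turns those two settings into `pymysql.connect()` keyword arguments using only `urllib.parse`. It assumes that `autorollback` and `threadlocals` are consumed by Quay's own database layer rather than by the driver, so it leaves them out. The helper name is hypothetical, and Quay's real parsing path may differ.

```python
from urllib.parse import unquote, urlsplit


def pymysql_kwargs(db_uri, connection_args):
    """Build pymysql.connect() keyword arguments from Quay-style DB settings (sketch)."""
    parts = urlsplit(db_uri)
    if parts.scheme != "mysql+pymysql":
        raise ValueError(f"unexpected scheme: {parts.scheme}")
    kwargs = {
        "host": parts.hostname,
        "port": parts.port or 3306,  # MySQL's default port when the URI omits one
        "user": unquote(parts.username or ""),
        "password": unquote(parts.password or ""),
        "database": parts.path.lstrip("/"),
    }
    # Only the TLS options are forwarded; PyMySQL accepts an `ssl` dict with a "ca" path.
    ssl_opts = connection_args.get("ssl")
    if ssl_opts:
        kwargs["ssl"] = dict(ssl_opts)
    return kwargs
```

If PyMySQL is installed, you can pass the result to `pymysql.connect(**kwargs)` to attempt the same TLS handshake, with `ssl.ca` pointing at a local copy of the RDS CA bundle. Note that `conf/stack/database.pem` is a relative path. It resolves against the working directory of whichever process opens the connection.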

How to create the new MySQL 8.0 databases:

    create database quay360 character set latin1;
    create database quay356 character set latin1;
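To confirm that the databases were created with the intended `latin1` default character set, you can read the schema default back from `information_schema.SCHEMATA`. The Python sketch below assumes a DB-API cursor that uses `%s` placeholders, such as one from PyMySQL. The function name is hypothetical.

```python
# Reads a schema's default character set; %s is the PyMySQL placeholder style.
CHARSET_QUERY = (
    "SELECT DEFAULT_CHARACTER_SET_NAME FROM information_schema.SCHEMATA "
    "WHERE SCHEMA_NAME = %s"
)


def database_charset(cursor, schema_name):
    """Return the default character set of `schema_name`, or None if the schema does not exist."""
    cursor.execute(CHARSET_QUERY, (schema_name,))
    row = cursor.fetchone()
    return row[0] if row else None
```

With a PyMySQL connection `conn`, `database_charset(conn.cursor(), "quay360")` should return `"latin1"` if the statements above took effect.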

How to enforce SSL for AWS RDS MySQL 8.0:

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/CHAP_MySQL.html#MySQL.Concepts.SSLSupport

ALTER USER 'quayrdsdb'@'%' REQUIRE SSL;
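Once `REQUIRE SSL` is set, MySQL rejects unencrypted logins for that account. A connected session can confirm that it is encrypted by reading the `Ssl_cipher` status variable, which is empty for plaintext sessions. The Python sketch below assumes a DB-API cursor from a connection opened with the RDS CA bundle. The function name is hypothetical.

```python
def session_tls_cipher(cursor):
    """Return the negotiated TLS cipher for this session, or None if it is not encrypted."""
    cursor.execute("SHOW SESSION STATUS LIKE 'Ssl_cipher'")
    row = cursor.fetchone()  # expected shape: (Variable_name, Value)
    if row is None or not row[1]:
        return None
    return row[1]
```

A non-empty result means the connection used by the check is encrypted. It says nothing about whether Quay's own connections succeeded.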

Steps:

- Deploy Quay with the Quay Operator, setting the objectstorage and monitoring components to unmanaged.

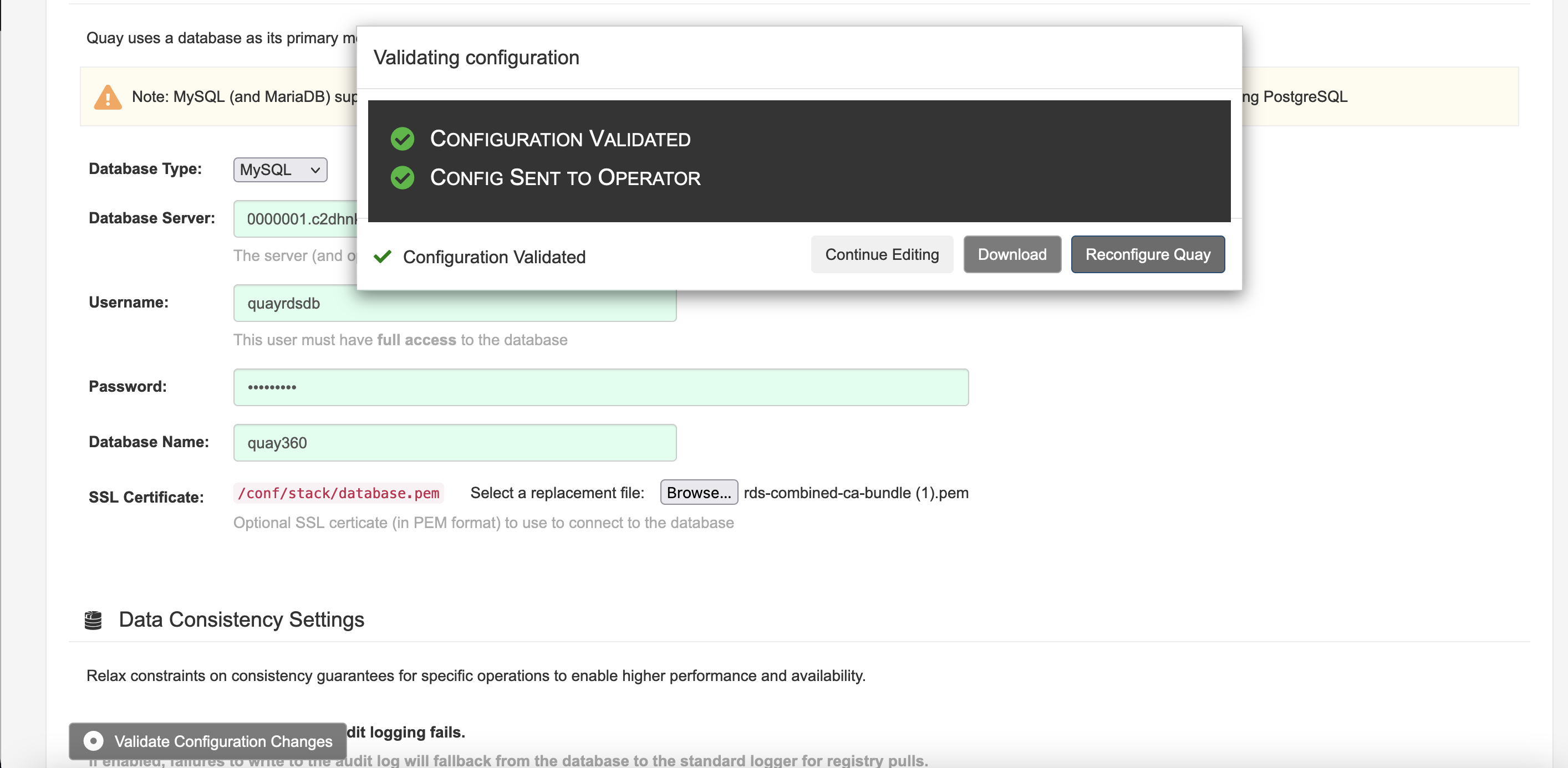

- Log in to the Quay config editor, choose an external MySQL database, enter a valid database server, username, password, and database name, and upload the AWS RDS CA SSL certificate (rds-combined-ca-bundle (1).pem).

- Click Validate configuration.

- Click Reconfigure Quay

- After the Operator reconciles the change, check the status of the new Quay app pod.

- Check the logs of the Quay upgrade pod.
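You can also script the pod status check by reading the JSON from `oc get pod <name> -o json`. The Python sketch below pulls the fields relevant to this report out of `status.containerStatuses`: ready, started, restart count, and waiting reason. The function names are hypothetical, and only the standard library is used.

```python
import json


def summarize_pod(pod):
    """Map each container name to its readiness, restart count, and waiting reason."""
    summary = {}
    for cs in pod.get("status", {}).get("containerStatuses", []):
        waiting = cs.get("state", {}).get("waiting") or {}
        summary[cs["name"]] = {
            "ready": cs.get("ready", False),
            "started": cs.get("started", False),
            "restarts": cs.get("restartCount", 0),
            "waiting_reason": waiting.get("reason"),
        }
    return summary


def summarize_pod_json(text):
    """Same as summarize_pod, but takes the raw `oc get pod -o json` output."""
    return summarize_pod(json.loads(text))
```

For the pod `quay360-quay-app-5657496f57-65cf7` in this report, the status shown above corresponds to `ready: False`, `started: False`, `restarts: 27`, and `waiting_reason: CrashLoopBackOff`.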

Expected Results:

The new Quay app pod becomes ready.

Actual Results:

The new Quay app pod fails to start: its startupProbe fails and the container ends up in CrashLoopBackOff.