Bug

Resolution: Unresolved

Logging 5.9, Logging Plugin 6.0, Logging Plugin 6.1

Quality / Stability / Reliability

Moderate

Description

In "OCP Console > Observe > Logs", logs can be filtered by:

- Content

- Namespaces

- Pods

- Containers

The last three filter options (Namespaces, Pods and Containers) are based on the default "labelKeys". For more details about these default "labelKeys", run:

$ oc explain clusterlogforwarder.spec.outputs.lokiStack.labelKeys

These default "labelKeys" can be:

1. Modified. This is a feature implemented in Logging 6 through the RFE https://issues.redhat.com/browse/OBSDA-808

2. Pruned, as shown in the prune filter example under "Second way" below

For instance, suppose the ClusterLogForwarder is modified by removing the "labelKey: kubernetes.container_name" and adding the "labelKey: openshift.cluster_id", as in the following example:

    $ cat clf-instance.yaml
    apiVersion: observability.openshift.io/v1
    kind: ClusterLogForwarder
    metadata:
      name: collector
      namespace: openshift-logging
    spec:
      serviceAccount:
        name: collector
      outputs:
      - name: default-lokistack
        type: lokiStack
        lokiStack:
          target:
            name: logging-loki
            namespace: openshift-logging
          authentication:
            token:
              from: serviceAccount
          labelKeys:
            global:
            - log_type
            - kubernetes.namespace_name
            - kubernetes.pod_name
            - openshift.cluster_id
        tls:
          ca:
            key: service-ca.crt
            configMapName: openshift-service-ca.crt
      pipelines:
      - name: default-logstore
        inputRefs:
        - application
        - infrastructure
        outputRefs:
        - default-lokistack

The resulting list of labels in Loki is:

    $ route=$(oc get route logging-loki -n openshift-logging -o jsonpath='{.spec.host}')
    $ logcli -o raw --tls-skip-verify --bearer-token="$(oc whoami -t)" --addr "https://${route}/api/logs/v1/application" series --analyze-labels '{log_type="application"}' --retries=2 --since=15m
    2024/07/02 12:14:15 https://logging-loki-openshift-logging.apps.worker.example.com/api/logs/v1/application/loki/api/v1/series?end=1719915255211078148&match=%7Blog_type%3D%22application%22%7D&start=1719911655211078148
    Total Streams: 2
    Unique Labels: 5

    Label Name                 Unique Values  Found In Streams
    kubernetes_host            2              2
    kubernetes_pod_name        2              2
    log_type                   1              2
    kubernetes_namespace_name  1              2
    openshift_cluster_id       1              2

From the UI perspective/user experience two things not expected will happen:

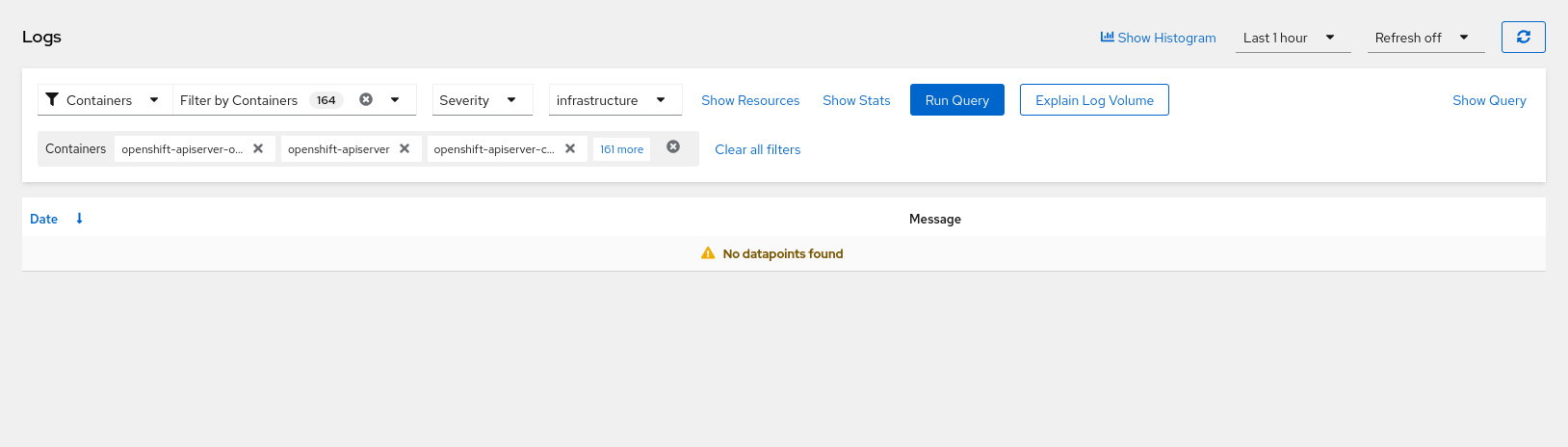

The first not expected output, it's that if selected the filter by "Containers" and selected any or all the containers as the "labelKey: kubernetes.container_name" doesn't exist, then, the result will be always empty. See screenshot below:

The second not output expected is that if a new "labelKey" is introduced as it it's done when using the previous clusterLogForwarder example where introduce the "labelKey: openshift.cluster_id", it should be expected to have it available in the filters list. This second point could also be understood as a RFE, but it's really related to the first issue. The "filters" based in the "labelKeys" are static and not dynamically loaded leading to:

- Failing to get results for a labelKey that doesn't exist; in the example above, "kubernetes.container_name" (Bug)

- Not showing a newly introduced labelKey as a filter option; in the example above, "openshift.cluster_id"
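
The mismatch between the static filters and the configured labelKeys can be illustrated with a small sketch. It assumes, based only on the logcli output in this report, that a labelKey becomes a Loki label name by replacing dots with underscores, and that the three console filters rely on the namespace, pod and container labels. The names `UI_FILTER_LABEL_KEYS`, `to_loki_label` and `check_filters` are hypothetical and are not part of the Logging plugin.

```python
# Hypothetical mapping of console filters to the labelKeys they rely on.
UI_FILTER_LABEL_KEYS = {
    "Namespaces": "kubernetes.namespace_name",
    "Pods": "kubernetes.pod_name",
    "Containers": "kubernetes.container_name",
}


def to_loki_label(label_key: str) -> str:
    """Assumed conversion: dots become underscores, as seen in the logcli output."""
    return label_key.replace(".", "_")


def check_filters(configured_label_keys):
    """Return (filters that cannot match any stream, labels that have no filter)."""
    available = {to_loki_label(k) for k in configured_label_keys}
    broken = [
        name
        for name, key in UI_FILTER_LABEL_KEYS.items()
        if to_loki_label(key) not in available
    ]
    used = {to_loki_label(k) for k in UI_FILTER_LABEL_KEYS.values()}
    # Assumption: log_type selects the log type and is not treated as a filter here.
    unexposed = sorted(available - used - {"log_type"})
    return broken, unexposed


# labelKeys taken from the ClusterLogForwarder example in this report.
label_keys = [
    "log_type",
    "kubernetes.namespace_name",
    "kubernetes.pod_name",
    "openshift.cluster_id",
]
broken, unexposed = check_filters(label_keys)
print("Filters that can never match:", broken)
print("Labels without a filter:", unexposed)
```

A dynamically loaded filter list would, in effect, be driven by the labels Loki actually reports and not by a fixed table like `UI_FILTER_LABEL_KEYS`.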

Second way

The second way to hit the same problems is to use the pruning option to prune the default `labelKeys`, as documented in [0]. In that case, the OpenShift Console won't show any application logs when the prune filter is:

    apiVersion: logging.openshift.io/v1
    kind: ClusterLogForwarder
    metadata:
    # ...
    spec:
      filters:
      - name: prune-filter
        prune:
          notIn:
          - .log_type
          - .message
          - .timestamp
        type: prune
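
A rough simulation of such a `notIn` prune may help show why the console has nothing to filter on. It assumes that `notIn` drops every field whose path is not listed, handles only top-level paths, and uses a made-up log record; `prune_not_in` is a hypothetical helper, not the collector's implementation.

```python
def prune_not_in(record: dict, keep_paths: list) -> dict:
    """Keep only the listed top-level paths (e.g. ".log_type"); drop the rest."""
    keep = {path.lstrip(".") for path in keep_paths}
    return {key: value for key, value in record.items() if key in keep}


# Made-up record for illustration only.
record = {
    "log_type": "application",
    "message": "hello",
    "timestamp": "2024-07-02T12:14:15Z",
    "kubernetes": {
        "namespace_name": "demo",
        "pod_name": "demo-pod",
        "container_name": "app",
    },
}

pruned = prune_not_in(record, [".log_type", ".message", ".timestamp"])
print(sorted(pruned))
print("kubernetes fields kept:", "kubernetes" in pruned)
```

Under these assumptions the `kubernetes` fields behind the default `labelKeys` no longer exist after pruning, so the namespace, pod and container labels that the console filters depend on cannot be produced.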

- is related to: OBSDOCS-2359 Adding a section on fields that cannot be pruned in OpenShift Logging (New)