- Bug
- Resolution: Done-Errata
- Major
- rhos-18.0.7
- No Docs Impact
- edpm-image-builder-0.1.1-18.0.20241101131714.el9ost
- Bug Fix
- Done
- Approved
- HardProv Sprint 3
- Important

Hello,

We are deploying RHOSO 18 for a customer and have an issue while provisioning EDPM nodes.

After we create the BareMetalHosts (BMH) and the OpenStackDataPlaneNodeSet (osdpns), the nodes are provisioned and marked as such at the BMH level. The osdpns status is also as expected (waiting for deployment).

However, the nodes never actually boot: they fail with the error `/dev/disk/by-label/img-rootfs does not exist` and drop into the emergency shell.

The nodes after applying the osdpns:

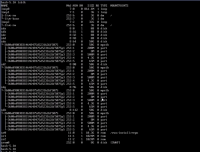

The BMH status:

    oc get bmh
    NAME                 STATE         CONSUMER                     ONLINE   ERROR   AGE
    vel1-nfv12-kvm0106   provisioned   openstack-data-plane-vradi   true             54m
    vel1-nfv12-kvm0107   provisioned   openstack-data-plane-vradi   true             54m

The osdpns status:

    oc get osdpns
    NAME                         STATUS   MESSAGE
    openstack-data-plane-vradi   False    NodeSet setup ready, waiting for Deployment...

After launching the deployment, the ansibleee job is stuck waiting for a connection, which never becomes available because the node is in the dracut emergency shell:

    oc logs -l app=openstackansibleee -f --max-log-requests 10
    Skipping callback 'minimal', as we already have a stdout callback.
    Skipping callback 'oneline', as we already have a stdout callback.

    PLAYBOOK: bootstrap.yml ********************************************************
    2 plays in /usr/share/ansible/collections/ansible_collections/osp/edpm/playbooks/bootstrap.yml

    PLAY [Check remote connections] ************************************************

    TASK [Wait for connection] *****************************************************
    task path: /usr/share/ansible/collections/ansible_collections/osp/edpm/playbooks/bootstrap.yml:7

# To Reproduce

We are not sure whether the issue can be reproduced.

# Expected behavior

The nodes should be provisioned and boot successfully, then receive their configuration and OpenStack services once an OpenStackDataPlaneDeployment is launched.

# Screenshots

Screenshot of the device path error on the node console.

# Device Info

Hardware specs: Cisco UCS B200 M6 2-socket blade server

Disks are exposed to the nodes over SAN, and multipath is used.

OS version: The image set at the BMH level is RHEL 9.4, served from a local web server, and the image details are specified in the BMH definition.

# Bug impact

The customer's RHOSO deployment is blocked.

# Additional context

The image `edpm-hardened-uefi.qcow2` is used even though we specified other image information in the BMH file.

- We tested `rootDeviceHints` both with the device name `/dev/dm-0` and with the disk WWN:

      rootDeviceHints:
        wwn: "0x600a098038314648475d5231626f5067"
        # deviceName: "/dev/dm-0"

- Regarding the image:

We tested both without specifying the `image` parameter in the BMH file and with `url: 'http://<local repos ip>/rhel-9.4-x86_64-kvm.qcow2'`. Because our environment is disconnected, `edpm-hardened-uefi.qcow2` is used by default.
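For reference, a BareMetalHost carrying both settings tested above might look roughly like the minimal sketch below. It assumes the upstream metal3 `BareMetalHost` schema (`spec.rootDeviceHints`, `spec.image.url`, `spec.image.checksum`); the labels mirror the `bmhLabelSelector` used in this report, the checksum value is a placeholder that is not from the report, and the BMC and boot MAC fields a real host needs are omitted. As reported above, once the node set consumes the host, the node still boots `edpm-hardened-uefi.qcow2`, so the `image` setting did not take effect in these tests.

```yaml
# Sketch only: BMC credentials, bootMACAddress and other required fields are omitted.
apiVersion: metal3.io/v1alpha1
kind: BareMetalHost
metadata:
  name: vel1-nfv12-kvm0106
  namespace: openstack
  labels:
    app: openstack
    workload: compute
    vnf: vradi
spec:
  online: true
  rootDeviceHints:
    # Variant 1 (tested): select the SAN multipath disk by its WWN
    wwn: "0x600a098038314648475d5231626f5067"
    # Variant 2 (tested): select the device by name instead
    # deviceName: "/dev/dm-0"
  image:
    # Image served from the local web server in the disconnected environment
    url: "http://<local repos ip>/rhel-9.4-x86_64-kvm.qcow2"
    # Placeholder (assumption, not from the report): metal3 normally expects a checksum for disk images
    checksum: "<checksum or checksum file URL>"
```

Only one of `wwn` or `deviceName` was active in each test, which is why the second hint is commented out.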

We also tried setting `osImage` in the osdpns, but the image is not downloaded: there is no URL attribute for pointing at the local repository, so when only the image name is given, the image is not found during provisioning. The relevant part of our osdpns:

    baremetalSetTemplate:
      deploymentSSHSecret: dataplane-ansible-ssh-private-key-secret
      bmhNamespace: openstack
      cloudUserName: ansible
      # osImage: rhel-9.4-x86_64-kvm.qcow2
      bmhLabelSelector:
        app: openstack
        workload: compute
        vnf: vradi
      ctlplaneInterface: eno10.1023
      dnsSearchDomains:
        - <domain>

- We also tried passing the kernel parameter `edpm_kernel_args: "rd.multipath=default"` in the osdpns file, as suggested in another discussion, but it did not resolve the issue.
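The placement of `edpm_kernel_args` in the node set could look like the fragment below. This is a sketch assuming the `dataplane.openstack.org/v1beta1` schema, where Ansible variables live under `spec.nodeTemplate.ansible.ansibleVars`; all other node set fields are omitted. Because these variables are consumed by Ansible during an OpenStackDataPlaneDeployment, they presumably cannot influence the first boot of the provisioned image, which would be consistent with this setting not helping while the node is stuck in dracut.

```yaml
# Sketch only: all other OpenStackDataPlaneNodeSet fields are omitted.
apiVersion: dataplane.openstack.org/v1beta1
kind: OpenStackDataPlaneNodeSet
metadata:
  name: openstack-data-plane-vradi
  namespace: openstack
spec:
  nodeTemplate:
    ansible:
      ansibleVars:
        # Kernel argument suggested in other discussions for multipath root disks;
        # applied by Ansible at deployment time, not during BMH provisioning
        edpm_kernel_args: "rd.multipath=default"
```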

- Links to: RHBA-2025:148670, Release of components for RHOSO 18.0
