Feature

Resolution: Won't Do

Normal

Use Case

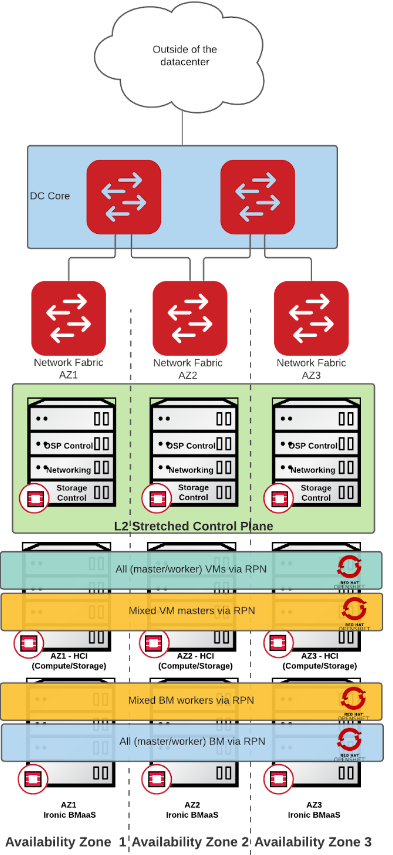

As a ShiftOnStack cloud admin for a large enterprise, my RHOSP deployment is distributed across multiple network fabrics (AZs) in a single datacenter, using either a spine/leaf or a DCN design. Each availability zone can contain anywhere from tens to hundreds of compute/HCI nodes. Latency is not an issue because the cluster is not geographically distributed.

I run an OCP cluster stretched across multiple AZs over Routed Provider Networks. This maximizes network throughput and performance, and it removes the need for Kuryr as a way to avoid double encapsulation.

Goal

Today, OCP workloads are not supported on stretched spine/leaf or DCN deployments and have never been tested by QE. We therefore want to design a Reference Architecture covering what can be tested now, in the OSP 16.2 timeframe (and can therefore be supported at some point), and potentially delivered to customers.

The next step will be to evolve this architecture based on the OSP and OCP roadmaps, and to find the overlap between what customers need and what we will be able to deliver in the OSP 17 timeframe.

Why is this important

Customers are looking at OpenStack Platform either as a replacement for public clouds (AWS, GCP, and Azure) or as a way to augment their existing off-premises deployments, and they want to match the capabilities and resiliency of public cloud offerings. Today, all of these public clouds support deploying OCP across multiple AZs. Here is an example install-config.yaml excerpt from AWS:

```yaml
apiVersion: v1
baseDomain: lab.uc2.io
compute:
- architecture: amd64
  hyperthreading: Enabled
  name: worker
  platform:
    aws:
      zones:
      - us-east-1a
      - us-east-1b
      rootVolume:
        iops: 4000
        size: 100
        type: io1
      type: m5.xlarge
  replicas: 3
...
platform:
  aws:
    region: us-east-1
    subnets:
    - subnet-02dda9e8fd9317ea0
    - subnet-0b768178e3286ce5e
    - subnet-08b492b781df30773
    - subnet-0643f973ed471a20f
    - subnet-0c169245f0a58ad10
    - subnet-0272ab642a3086432
...
```

There are some caveats for this type of deployment in AWS:

- DNS resolution must be enabled in the VPC, and some AWS-specific endpoints should be added (for S3, for instance).

- If you publish externally, you need both a public and a private subnet for each AZ.

- Private subnets use a NAT gateway and public subnets use an internet gateway (IGW), with the route tables updated accordingly.
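The per-AZ subnet caveat can be checked mechanically before running the installer. The following is a minimal sketch, not part of any AWS or OpenShift tooling: the `Subnet` type, the `zones_missing_subnets` helper, and the subnet IDs are hypothetical, and it assumes you already know which AZ each subnet lives in and whether it routes through an IGW (public) or a NAT gateway (private).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Subnet:
    """Hypothetical description of a VPC subnet, for illustration only."""
    subnet_id: str
    zone: str
    public: bool  # True if routed through an IGW, False if through a NAT gateway


def zones_missing_subnets(zones: list[str], subnets: list[Subnet]) -> dict[str, list[str]]:
    """Return {zone: [missing kinds]} for every AZ lacking a public or a private subnet."""
    missing: dict[str, list[str]] = {}
    for zone in zones:
        # Collect which kinds (public=True / private=False) exist in this zone.
        kinds = {s.public for s in subnets if s.zone == zone}
        gaps = [name for flag, name in ((True, "public"), (False, "private")) if flag not in kinds]
        if gaps:
            missing[zone] = gaps
    return missing


# Illustrative input: us-east-1b has only a private subnet.
subnets = [
    Subnet("subnet-a-public", "us-east-1a", True),
    Subnet("subnet-a-private", "us-east-1a", False),
    Subnet("subnet-b-private", "us-east-1b", False),
]
print(zones_missing_subnets(["us-east-1a", "us-east-1b"], subnets))  # {'us-east-1b': ['public']}
```

An empty result means every listed AZ has both subnet kinds; an internal-only (unpublished) cluster would only need the private check.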

Example playbooks:

https://github.com/nasx/openshift-vpc

High Level Requirements

- The plan would be Tech Preview in OCP 4.11 and GA in OCP 4.12.

- Deliverables:

  - Reference architecture of OCP at the Edge for Enterprise that we can test and support

  - Documentation

  - QE testing

  - Features involving code (TBD):

    - Ability to support multiple subnets and/or Routed Provider Networks inside install-config.yaml for both Master and Worker nodes (today only a single subnet can be provided).

- Networking:

  - Support for IPv6 provisioning

  - Provider networks

  - Dual stack (IPv4/IPv6) for VMs and pods
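Why a single subnet in install-config.yaml is not enough: with Neutron routed provider networks, each segment (typically one per leaf or AZ) has its own subnet, so nodes placed in different AZs get addresses from different subnets. The sketch below illustrates this under a hypothetical layout; the AZ names `az-leaf1`..`az-leaf3`, the CIDRs, and the helper functions are illustrative assumptions, not installer or Neutron APIs.

```python
import ipaddress

# Hypothetical layout: one routed provider network with one segment per AZ.
# AZ names and CIDRs are illustrative only.
SEGMENTS = {
    "az-leaf1": ipaddress.ip_network("192.168.10.0/24"),
    "az-leaf2": ipaddress.ip_network("192.168.20.0/24"),
    "az-leaf3": ipaddress.ip_network("192.168.30.0/24"),
}


def subnet_for_zone(zone: str) -> ipaddress.IPv4Network:
    """Return the segment subnet a node in `zone` would get its address from."""
    return SEGMENTS[zone]


def subnets_needed(node_zones: list[str]) -> set[ipaddress.IPv4Network]:
    """Distinct subnets the installer would need to know about for these nodes."""
    return {subnet_for_zone(z) for z in node_zones}


# Three masters spread across three AZs draw from three different subnets.
masters = ["az-leaf1", "az-leaf2", "az-leaf3"]
print(len(subnets_needed(masters)))  # 3
```

Collapsing all masters into one AZ would bring this back to a single subnet, but at the cost of the AZ resiliency described in the use case.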

Enterprise and IoT/Retail Edge Requirements

Potential customer(s):

- ADP

- Telus

- One very large customer I cannot name at the moment