Bug

Resolution: Done-Errata

Moving this issue to OCPBUGS for better visibility: https://issues.redhat.com/browse/OCPBUGS-74173

I have included the original bug report below. The issue involves LeaderWorkerSet (LWS) pods entering a CrashLoopBackOff state when an incorrect LocalQueue label is applied. However, this behavior was observed only when the Kueue Operand CR lists just BatchJob and LeaderWorkerSet under Integration / Framework.

When the Kueue Operand CR lists all Integration / Framework options (BatchJob, Pod, Deployment, StatefulSet and LeaderWorkerSet), this bug does not happen.

Problems with preemption (a separate test) were also seen when only the default Integration / Framework options (BatchJob + LeaderWorkerSet) were used.

So, can we add Pod, Deployment and StatefulSet to the Operand CR to avoid further problems?
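
For reference, a minimal sketch of what an Operand CR with all five frameworks enabled might look like. The `apiVersion`, resource name, namespace, and `managementState` value are assumptions based on the usual operator layout and may differ between operator versions; only the list of frameworks comes from this report.

```yaml
# Sketch only: apiVersion, name, and namespace are assumptions,
# not taken from the cluster used in this report.
apiVersion: kueue.openshift.io/v1
kind: Kueue
metadata:
  name: cluster
  namespace: openshift-kueue-operator
spec:
  managementState: Managed
  config:
    integrations:
      frameworks:
      # Default set that reproduces the bug:
      - BatchJob
      - LeaderWorkerSet
      # Additional frameworks with which the bug was not observed:
      - Pod
      - Deployment
      - StatefulSet
```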

-------------

Original bug:

The behavior seems inconsistent: a misconfigured LocalQueue reference causes Jobs to suspend, but causes LWS pods to CrashLoopBackOff. LWS should ideally mirror the Job behavior by suspending until the queue configuration is corrected.

Steps to reproduce:

- Install LWS Operator and Operand

- Install Kueue Operator and Operand

- Add LeaderWorkerSet to Operand CR

- Create a ResourceFlavor and ClusterQueue

- Create a Namespace and LocalQueue

- e.g. the LocalQueue name is local-queue

- Create a Job, but label it with a wrong LocalQueue name

- Example:

labels: kueue.x-k8s.io/queue-name: user-queue

- Check that the Job is Suspended

- Do the same with LWS: apply a template using the same wrong label

- Check the behavior
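
The reproduction steps above can be sketched with manifests along these lines. The namespace `demo-ns`, the ClusterQueue name `cluster-queue`, the workload names, the container images, and the resource requests are hypothetical placeholders; only the LocalQueue name `local-queue` and the wrong label value `user-queue` come from the report.

```yaml
# LocalQueue that actually exists in the namespace.
apiVersion: kueue.x-k8s.io/v1beta1
kind: LocalQueue
metadata:
  name: local-queue
  namespace: demo-ns            # hypothetical namespace
spec:
  clusterQueue: cluster-queue   # hypothetical ClusterQueue name
---
# Job labeled with a queue name that does not exist (user-queue).
apiVersion: batch/v1
kind: Job
metadata:
  name: wrong-queue-job         # hypothetical name
  namespace: demo-ns
  labels:
    kueue.x-k8s.io/queue-name: user-queue
spec:
  template:
    spec:
      restartPolicy: Never
      containers:
      - name: main
        image: registry.access.redhat.com/ubi9/ubi-minimal   # placeholder image
        command: ["sleep", "30"]
        resources:
          requests:
            cpu: 100m
---
# LeaderWorkerSet labeled with the same wrong queue name.
apiVersion: leaderworkerset.x-k8s.io/v1
kind: LeaderWorkerSet
metadata:
  name: wrong-queue-lws         # hypothetical name
  namespace: demo-ns
  labels:
    kueue.x-k8s.io/queue-name: user-queue
spec:
  replicas: 1
  leaderWorkerTemplate:
    size: 2
    workerTemplate:
      spec:
        containers:
        - name: main
          image: registry.access.redhat.com/ubi9/ubi-minimal   # placeholder image
          command: ["sleep", "infinity"]
          resources:
            requests:
              cpu: 100m
```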

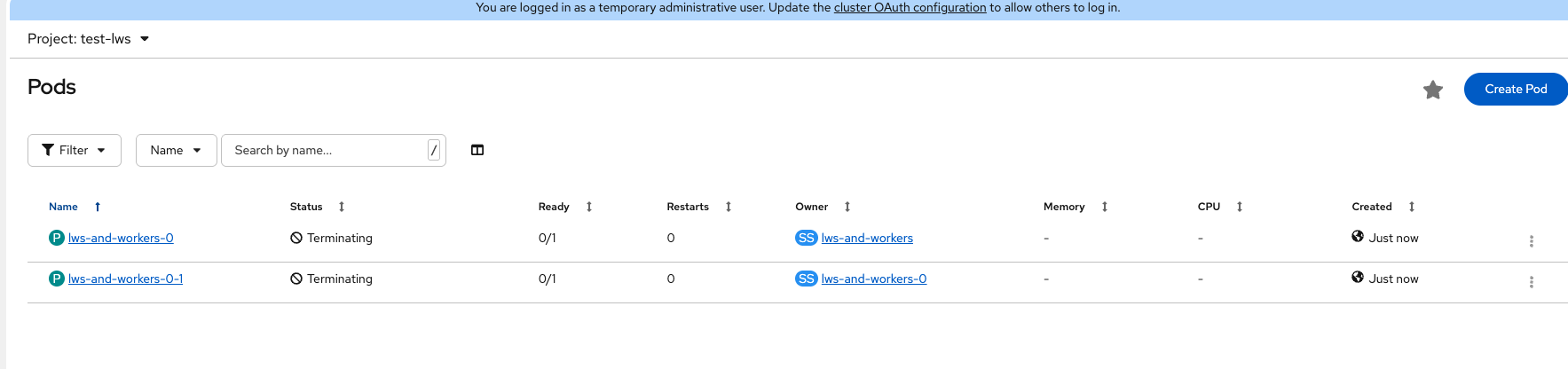

Actual: LWS gets stuck in a loop of terminating the pod and starting it again.

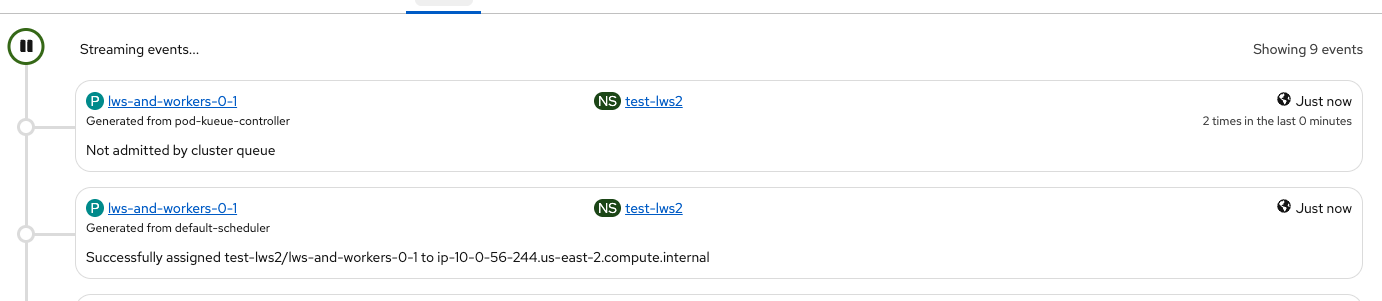

In the LWS events, it is possible to see that the workload was not admitted by the ClusterQueue (because the LocalQueue label is wrong).

Expected: LWS should follow the same behavior as a Job and get Suspended, or the same behavior as a Pod and stay Pending, instead of crashing.

Video - LWS LocalQueue Misconfiguration: https://www.loom.com/share/d5fbf21c80bb4c5b9917a172e5af60c7

- relates to

- OCPKUEUE-363 Feature Verification for LeaderWorkerSet (Closed)

- OCPBUGS-74173 [Kueue] - Operand CR missing configuration makes LWS to fail (Closed)