Bug

Resolution: Not a Bug

4.14, 4.15, 4.16, 4.17

Quality / Stability / Reliability

Description of problem:

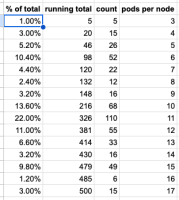

In some scenarios, the Kubernetes scheduler does not spread pods evenly across the cluster. In large clusters (more than 200 nodes), when many pods with identical resource requests (and no scheduling constraints or affinity rules) are scaled up quickly, we observed a scheduling spread of about 20 pods between the most and least utilized nodes. We tested this scenario by raising the HPA min replicas of hundreds of deployments at the same time, scaling up around 7000 pods. When pods have different resource requests, the nodes are not balanced either, as measured by the per-node sum of CPU requests (`sum by (node) (kube_pod_container_resource_requests_cpu_cores)`).

In smaller clusters, or when deployments are scaled up slowly (a few at a time, taking some hours to reach 7000 pods), we have not observed this issue.

Debugging is difficult: it requires a large cluster, and running the scheduler at log verbosity 10 while thousands of pods are scheduled within seconds generates a huge volume of logs, including a score list of more than 100 nodes for each of thousands of scheduling cycles.
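To compare nodes when pods have different requests, the per-node sum of CPU requests can also be computed offline from a pod list. The sketch below is a hypothetical helper, not part of any tool: the `(node, [cpu_request, ...])` input shape and the function names are assumptions, and only the plain-decimal and `m` (millicore) CPU quantity forms are parsed. It reports millicores rather than the cores returned by the PromQL query, and nodes without pods do not appear in the result.

```python
from collections import defaultdict


def cpu_to_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "250m", "0.5" or "2" to millicores.

    Simplified on purpose: other Kubernetes quantity forms
    (e.g. "n"/"u" suffixes or exponents) are not handled.
    """
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def cpu_requests_by_node(pods):
    """Sum container CPU requests per node, in millicores.

    `pods` is an iterable of (node_name, [cpu_request, ...]) tuples,
    one tuple per scheduled pod (hypothetical input shape).
    """
    totals = defaultdict(int)
    for node, requests in pods:
        totals[node] += sum(cpu_to_millicores(q) for q in requests)
    return dict(totals)


if __name__ == "__main__":
    pods = [
        ("node-a", ["500m", "250m"]),
        ("node-a", ["1"]),
        ("node-b", ["0.5"]),
    ]
    by_node = cpu_requests_by_node(pods)
    print(by_node)  # {'node-a': 1750, 'node-b': 500}
    print(max(by_node.values()) - min(by_node.values()))  # 1250
```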

Version-Release number of selected component (if applicable):

4.17 (Kubernetes 1.30)

How reproducible:

In a large cluster (> 200 nodes), scale hundreds of deployments at the same time (~7000 pods in total) and check the pod distribution across the nodes.
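One detail that may be relevant to the > 200 node threshold (an assumption, not a confirmed cause): when `percentageOfNodesToScore` is left unset, kube-scheduler stops looking for feasible nodes once it has found an adaptive number of them and scores only those, so a single scheduling cycle does not necessarily compare every node. The Python transcription below is a sketch of that documented default (50% minus 1% per 125 nodes, floored at 5%, and never fewer than 100 nodes); the function and constant names are illustrative, not the upstream Go identifiers.

```python
# Lower bounds used by the adaptive default (illustrative names).
MIN_FEASIBLE_NODES_TO_FIND = 100
MIN_FEASIBLE_NODES_PERCENTAGE_TO_FIND = 5


def num_feasible_nodes_to_find(num_all_nodes: int, percentage_of_nodes_to_score: int = 0) -> int:
    """Number of feasible nodes the scheduler looks for before scoring.

    A percentage of 0 means "unset", which selects the adaptive default.
    """
    # Small clusters: every node is considered.
    if num_all_nodes < MIN_FEASIBLE_NODES_TO_FIND:
        return num_all_nodes
    percentage = percentage_of_nodes_to_score
    if percentage == 0:
        # Adaptive default: 50% shrinking by 1% per 125 nodes, floored at 5%.
        percentage = 50 - num_all_nodes // 125
        if percentage < MIN_FEASIBLE_NODES_PERCENTAGE_TO_FIND:
            percentage = MIN_FEASIBLE_NODES_PERCENTAGE_TO_FIND
    num_nodes = num_all_nodes * percentage // 100
    # Never look for fewer than 100 nodes.
    return max(num_nodes, MIN_FEASIBLE_NODES_TO_FIND)


if __name__ == "__main__":
    for n in (50, 100, 280, 5000):
        print(n, num_feasible_nodes_to_find(n))
```

Under this default, a 280-node cluster would consider 134 nodes per cycle rather than all 280.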

Steps to Reproduce:

1. Scale a Kubernetes cluster to more than 200 nodes, for example 280 nodes.

2. Create hundreds of deployments (constraints and affinity rules are not needed; leaving them out keeps the reproduction simpler).

3. Scale all the deployments at the same time (~7000 new pods), for example by raising the HPA min replicas.

4. Check the pod distribution across the nodes.
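Step 4 can be scripted. The sketch below assumes the node of every pod and the list of all nodes have been dumped one name per line, for example with `kubectl get pods -A --no-headers -o custom-columns=NODE:.spec.nodeName` and `kubectl get nodes --no-headers -o custom-columns=NAME:.metadata.name`; the helper name `pod_distribution` is hypothetical. Nodes with no pods are kept at 0 and unscheduled pods (shown as `<none>`) are skipped, so the spread reflects the gap between the most and least utilized nodes. DaemonSet and system pods are counted too unless the pod listing is filtered.

```python
from collections import Counter


def pod_distribution(pod_node_lines, node_names):
    """Count pods per node and return (counts, spread).

    pod_node_lines: one node name per pod (kubectl custom-columns output).
    node_names: one name per cluster node, so empty nodes count as 0.
    """
    counts = Counter()
    for name in node_names:
        name = name.strip()
        if name:
            counts[name] = 0  # make sure empty nodes are included
    for line in pod_node_lines:
        node = line.strip()
        if node and node != "<none>":  # "<none>" = pod not scheduled yet
            counts[node] += 1
    spread = max(counts.values()) - min(counts.values()) if counts else 0
    return dict(counts), spread


if __name__ == "__main__":
    nodes = ["node-a", "node-b", "node-c"]
    pods = ["node-a", "node-a", "node-b", "<none>", "node-a"]
    counts, spread = pod_distribution(pods, nodes)
    print(counts)  # {'node-a': 3, 'node-b': 1, 'node-c': 0}
    print(spread)  # 3
```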

Actual results:

With pods that have similar resource requests, the scheduling spread is ~20 pods between the most and least utilized nodes.

Expected results:

All nodes should have the same number of pods or, at least, the scheduling spread between the most and least utilized nodes should be only a few pods.

Additional info:

Issue opened in the Kubernetes repository [1].