-

Bug

-

Resolution: Can't Do

-

Undefined

-

None

-

4.12

-

None

-

Quality / Stability / Reliability

-

False

-

-

None

-

Important

-

Rejected

-

Storage Sprint 228

Description of problem:

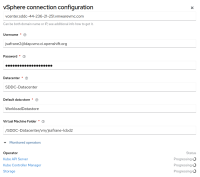

Install a cluster from a pre-merged payload that includes PR https://github.com/openshift/console/pull/12068, then update the vSphere platform parameters through the static vSphere connection plugin on the console dashboard. The update generates a new MachineConfig (MC) and triggers a node reboot. A control plane node then stays stuck in status "Ready,SchedulingDisabled".

The machine-config-controller log shows that draining the node failed: the vmware-vsphere-csi-driver-controller pod could not be evicted because eviction would violate the pod's PodDisruptionBudget (PDB).

    $ oc logs machine-config-controller-bdcdfc88d-p5bbq -n openshift-machine-config-operator -c machine-config-controller
    ...
    I1014 08:02:33.137138 1 drain_controller.go:110] evicting pod openshift-cluster-csi-drivers/vmware-vsphere-csi-driver-controller-756554dfb6-kzvhh
    E1014 08:02:33.147025 1 drain_controller.go:110] error when evicting pods/"vmware-vsphere-csi-driver-controller-756554dfb6-kzvhh" -n "openshift-cluster-csi-drivers" (will retry after 5s): Cannot evict pod as it would violate the pod's disruption budget.
    I1014 08:02:38.147737 1 drain_controller.go:110] evicting pod openshift-cluster-csi-drivers/vmware-vsphere-csi-driver-controller-756554dfb6-kzvhh
    I1014 08:02:38.147903 1 drain_controller.go:139] node jimadummy01-bqv6h-control-plane-0: Drain failed. Drain has been failing for more than 10 minutes. Waiting 5 minutes then retrying. Error message from drain: error when evicting pods/"vmware-vsphere-csi-driver-controller-756554dfb6-kzvhh" -n "openshift-cluster-csi-drivers": global timeout reached: 1m30s

    $ oc get nodes
    NAME                                STATUS                     ROLES                  AGE     VERSION
    jimadummy01-bqv6h-compute-0         Ready                      worker                 7h29m   v1.25.0+3ef6ef3
    jimadummy01-bqv6h-compute-1         Ready                      worker                 7h29m   v1.25.0+3ef6ef3
    jimadummy01-bqv6h-control-plane-0   Ready,SchedulingDisabled   control-plane,master   7h47m   v1.25.0+3ef6ef3
    jimadummy01-bqv6h-control-plane-1   Ready                      control-plane,master   7h47m   v1.25.0+3ef6ef3
    jimadummy01-bqv6h-control-plane-2   Ready                      control-plane,master   7h46m   v1.25.0+3ef6ef3

    $ oc get deployment.apps/vmware-vsphere-csi-driver-controller -n openshift-cluster-csi-drivers
    NAME                                   READY   UP-TO-DATE   AVAILABLE   AGE
    vmware-vsphere-csi-driver-controller   0/2     2            0           95m

    $ oc get pdb -n openshift-cluster-csi-drivers
    NAME                                       MIN AVAILABLE   MAX UNAVAILABLE   ALLOWED DISRUPTIONS   AGE
    vmware-vsphere-csi-driver-controller-pdb   N/A             1                 0                     94m
    vmware-vsphere-csi-driver-webhook-pdb      N/A             1                 1                     94m

    $ oc get pod -n openshift-cluster-csi-drivers | grep controller
    vmware-vsphere-csi-driver-controller-756554dfb6-9nxnz   11/13   CrashLoopBackOff   27 (2m24s ago)   50m
    vmware-vsphere-csi-driver-controller-756554dfb6-kzvhh   11/13   CrashLoopBackOff   27 (3m3s ago)    50m
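The "ALLOWED DISRUPTIONS 0" value for the controller PDB follows from the other numbers in the output above. A minimal sketch of that arithmetic, assuming the integer form of `maxUnavailable` and the usual Kubernetes rule that the budget allows `healthy - (expected - maxUnavailable)` evictions, floored at zero (the function name `allowed_disruptions` is hypothetical, not part of any tool):

```python
def allowed_disruptions(expected_pods: int, healthy_pods: int, max_unavailable: int) -> int:
    """Evictions a PDB with an integer maxUnavailable permits right now."""
    # Number of pods that must stay healthy to honor the budget.
    desired_healthy = max(expected_pods - max_unavailable, 0)
    # Only healthy pods above that floor can be evicted; never negative.
    return max(healthy_pods - desired_healthy, 0)


# Controller deployment from the report: 2 replicas, 0 ready, maxUnavailable 1.
print(allowed_disruptions(expected_pods=2, healthy_pods=0, max_unavailable=1))  # 0

# Hypothetical healthy case for comparison: both replicas ready.
print(allowed_disruptions(expected_pods=2, healthy_pods=2, max_unavailable=1))  # 1
```

Because both controller pods are in CrashLoopBackOff, the healthy count never rises above the floor, so every eviction attempt by the drain controller is refused and the drain keeps timing out.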

In pod vmware-vsphere-csi-driver-controller log:

{"level":"error","time":"2022-10-14T08:22:15.11976544Z","caller":"service/driver.go:138","msg":"failed to init controller. Error: ServerFaultCode: Cannot complete login due to an incorrect user name or password.","TraceId":"fe46aef8-e7a4-4045-91b9-566a58e605ea","TraceId":"efd0a8fe-de31-4c01-aeec-b817bbb710b6","stacktrace":"sigs.k8s.io/vsphere-csi-driver/v2/pkg/csi/service.(*vsphereCSIDriver).BeforeServe\n\t/go/src/github.com/kubernetes-sigs/vsphere-csi-driver/pkg/csi/service/driver.go:138\nsigs.k8s.io/vsphere-csi-driver/v2/pkg/csi/service.(*vsphereCSIDriver).Run\n\t/go/src/github.com/kubernetes-sigs/vsphere-csi-driver/pkg/csi/service/driver.go:151\nmain.main\n\t/go/src/github.com/kubernetes-sigs/vsphere-csi-driver/cmd/vsphere-csi/main.go:71\nruntime.main\n\t/usr/lib/golang/src/runtime/proc.go:250"}

{"level":"info","time":"2022-10-14T08:22:15.119814564Z","caller":"service/driver.go:104","msg":"Configured: \"csi.vsphere.vmware.com\" with clusterFlavor: \"VANILLA\" and mode: \"controller\"","TraceId":"fe46aef8-e7a4-4045-91b9-566a58e605ea","TraceId":"efd0a8fe-de31-4c01-aeec-b817bbb710b6"}

{"level":"error","time":"2022-10-14T08:22:15.119832373Z","caller":"service/driver.go:152","msg":"failed to run the driver. Err: +ServerFaultCode: Cannot complete login due to an incorrect user name or password.","TraceId":"fe46aef8-e7a4-4045-91b9-566a58e605ea","stacktrace":"sigs.k8s.io/vsphere-csi-driver/v2/pkg/csi/service.(*vsphereCSIDriver).Run\n\t/go/src/github.com/kubernetes-sigs/vsphere-csi-driver/pkg/csi/service/driver.go:152\nmain.main\n\t/go/src/github.com/kubernetes-sigs/vsphere-csi-driver/cmd/vsphere-csi/main.go:71\nruntime.main\n\t/usr/lib/golang/src/runtime/proc.go:250"}
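As a rough aid for spotting this failure in a longer log, the sketch below filters JSON log lines for error entries that report a login failure. It assumes one JSON object per line with `level`, `time`, and `msg` keys, as in the entries above; the helper name `login_failures` is hypothetical, and the sample lines are abridged copies of the entries above (stack traces and trace IDs dropped).

```python
import json


def login_failures(log_lines):
    """Yield (time, msg) for error entries that report a vCenter login failure."""
    for line in log_lines:
        line = line.strip()
        if not line:
            continue
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip lines that are not JSON (e.g. klog-style output)
        if not isinstance(entry, dict):
            continue
        msg = entry.get("msg", "")
        if entry.get("level") == "error" and "Cannot complete login" in msg:
            yield entry.get("time"), msg


# Abridged sample taken from the log entries in this report.
sample = [
    r'{"level":"error","time":"2022-10-14T08:22:15.11976544Z","caller":"service/driver.go:138","msg":"failed to init controller. Error: ServerFaultCode: Cannot complete login due to an incorrect user name or password."}',
    r'{"level":"info","time":"2022-10-14T08:22:15.119814564Z","caller":"service/driver.go:104","msg":"Configured: \"csi.vsphere.vmware.com\" with clusterFlavor: \"VANILLA\" and mode: \"controller\""}',
]

for when, msg in login_failures(sample):
    print(when, msg)
```

Saved output of `oc logs` for the failing container could be passed in as an iterable of lines in the same way.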

Once PR https://github.com/openshift/console/pull/12068 is merged, users will be able to update vSphere platform parameters easily from the console dashboard, and an update with invalid credentials will break the cluster as described in this issue.

Version-Release number of selected component (if applicable):

4.12 pre-merged payload with PR console#12068

How reproducible:

Always, when an invalid username is entered and a datacenter/datastore/folder... parameter is also updated.

Steps to Reproduce:

1. Install a cluster against the 4.12 pre-merged payload with PR console#12068
2. Update the vSphere platform parameters through the vSphere connection on the console dashboard

Actual results:

One master node is stuck in status "Ready,SchedulingDisabled".

Expected results:

The cluster applies the new machine config successfully.

Additional info:

- is blocked by RFE-1367 Forcefully remove unhealthy pods controlled by PDB during Machine Update (Waiting)