Bug

Resolution: Done

Normal

OADP 1.5.0

Quality / Stability / Reliability

16-MMSDOCS 2025, 17-MMSDOCS 2025, 18-MMSDOCS 2025, 19-MMSDOCS 2025

Important

Very Likely

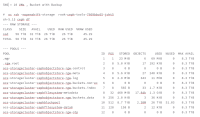

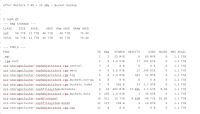

Description of problem:

After a KubeVirt restore completes, Ceph reports a higher used capacity than expected.

The reported usage appears to reflect the full size of the PVs rather than the actual data stored on them.

Bucket size: 5 TB

VM with 2 disks:

volumeMode: Block

Disk #1: 45G data, 60G PV size

Disk #2: 30G data, 150G PV size

- After the 50 VMs were created, Ceph capacity was ~8.7TB

- After the backup of the 50 VMs completed, Ceph capacity was ~5.0TB

- After the restore of the 50 VMs completed, Ceph capacity was ~15TB

Version-Release number of selected component (if applicable):

OCP: 4.19.0-ec.4

ODF: 4.18.4

CNV: 4.18.3

OADP: 1.5.0-108

How reproducible:

Running DataMover backup

Steps to Reproduce:

1. Create VMs and check Ceph capacity

2. Back up all VMs and check Ceph capacity after the backup is completed

3. Delete all VMs and check Ceph capacity

4. Restore the VMs and check Ceph capacity

Actual results:

Ceph capacity is higher than expected

Expected results:

Additional info:

It looks like, after the restore, Ceph calculates the used capacity from the PV sizes rather than from the data written:

60GB(PV1) + 150GB(PV2) = 210GB disks size per VM.

210GB * 50 VMs = Total size 10.5TB
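The arithmetic above can be reproduced with a short Python sketch that also computes the total for the data actually written (45G + 30G per VM), for comparison. The disk and VM figures come from this report; the variable and function names are illustrative, decimal units (1 TB = 1000 GB) are assumed to match the 10.5TB figure, and Ceph replication or other overhead is not modelled, so the results are not expected to match the reported capacity values exactly.

```python
# Figures taken from this report. Names are illustrative only.
# Assumption: decimal units (1 TB = 1000 GB), matching the 10.5TB figure.
VM_COUNT = 50
DISKS = [
    {"data_gb": 45, "pv_gb": 60},   # Disk #1
    {"data_gb": 30, "pv_gb": 150},  # Disk #2
]


def total_tb(key, disks=DISKS, vm_count=VM_COUNT):
    """Sum one per-disk figure over all disks and all VMs, in TB."""
    per_vm_gb = sum(disk[key] for disk in disks)
    return per_vm_gb * vm_count / 1000


data_tb = total_tb("data_gb")        # data actually written to the disks
provisioned_tb = total_tb("pv_gb")   # full PV size, as in the sum above

print(f"data written: {data_tb} TB")
print(f"provisioned:  {provisioned_tb} TB")
print(f"difference:   {provisioned_tb - data_tb} TB")
```

Under these assumptions, the gap between the two totals is the amount by which usage would grow if every restored block volume were fully allocated instead of holding only the written data.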

See the "ocs-storagecluster-cephblockpool" value in the attachments.