- Bug
- Resolution: Unresolved
- Critical
- OADP 1.4.1
- Incidents & Support


Description of problem:

Restorer pods are scheduled on the wrong node, which causes the DataDownload to get stuck until the timeout is hit. The issue is intermittent: sometimes the restore works and sometimes it does not. It is seen only with restore. Details below:

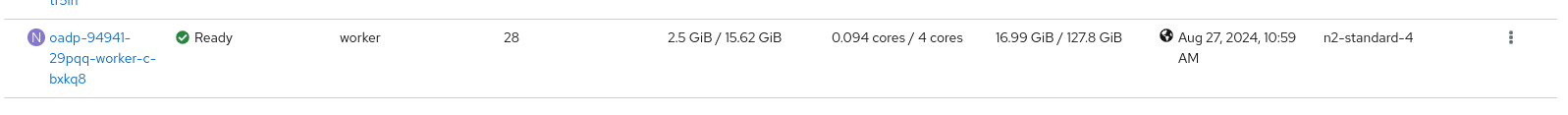

$ oc get node -l test=node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

oadp-94941-29pqq-worker-c-bxkq8 Ready worker 109m v1.29.7+4510e9c 10.0.128.4 <none> Red Hat Enterprise Linux CoreOS 416.94.202408200132-0 5.14.0-427.33.1.el9_4.x86_64 cri-o://1.29.7-5.rhaos4.16.gitb130ec5.el9

$ oc get pod -l velero.io/data-download -o wide

NAME                  READY   STATUS    RESTARTS   AGE     IP            NODE                              NOMINATED NODE   READINESS GATES

test-restore2-7m87f   1/1     Running   0          9m59s   10.128.2.28   oadp-94941-29pqq-worker-b-tr5lh   <none>           <none>

test-restore2-95j98   1/1     Running   0          10m     10.128.2.26   oadp-94941-29pqq-worker-b-tr5lh   <none>           <none>

test-restore2-fmxln   1/1     Running   0          10m     10.128.2.23   oadp-94941-29pqq-worker-b-tr5lh   <none>           <none>

test-restore2-frzdz   1/1     Running   0          10m     10.128.2.25   oadp-94941-29pqq-worker-b-tr5lh   <none>           <none>

test-restore2-jdnrn   1/1     Running   0          10m     10.128.2.27   oadp-94941-29pqq-worker-b-tr5lh   <none>           <none>

test-restore2-khdqf   1/1     Running   0          9m59s   10.128.2.29   oadp-94941-29pqq-worker-b-tr5lh   <none>           <none>

test-restore2-nmwfj   1/1     Running   0          10m     10.128.2.24   oadp-94941-29pqq-worker-b-tr5lh   <none>           <none>

test-restore2-tr4ts   1/1     Running   0          9m58s   10.128.2.30   oadp-94941-29pqq-worker-b-tr5lh   <none>           <none>

I also verified that the node has sufficient memory and CPU.

Version-Release number of selected component (if applicable):

OADP 1.4.1-20

How reproducible:

Intermittent (sometimes the test passes as usual)

Steps to Reproduce:

1. Added a label to one of the worker nodes.

$ oc get node -l test=node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

oadp-94941-29pqq-worker-c-bxkq8 Ready worker 114m v1.29.7+4510e9c 10.0.128.4 <none> Red Hat Enterprise Linux CoreOS 416.94.202408200132-0 5.14.0-427.33.1.el9_4.x86_64 cri-o://1.29.7-5.rhaos4.16.gitb130ec5.el9

2. Created a ConfigMap with the following spec.

$ cat node-agent-config.json

{
  "loadAffinity": [
    {
      "nodeSelector": {
        "matchLabels": {
          "test": "node"
        }
      }
    }
  ]
}

$ oc create cm node-agent-config -n openshift-adp-2 --from-file=node-agent-config.json

3. Created a DPA with the nodeAgent nodeSelector field set.

$ oc get dpa ts-dpa -o yaml
apiVersion: oadp.openshift.io/v1alpha1
kind: DataProtectionApplication
metadata:
  creationTimestamp: "2024-08-27T06:35:02Z"
  generation: 2
  name: ts-dpa
  namespace: openshift-adp
  resourceVersion: "48062"
  uid: 5d8765c5-6ac0-454f-91d0-cd1709e1f044
spec:
  backupLocations:
  - velero:
      credential:
        key: cloud
        name: cloud-credentials-gcp
      default: true
      objectStorage:
        bucket: oadp9494129pqq
        prefix: velero
      provider: gcp
  configuration:
    nodeAgent:
      enable: true
      podConfig:
        nodeSelector:
          test: node
      uploaderType: kopia
    velero:
      defaultPlugins:
      - gcp
      - openshift
      - csi
status:
  conditions:
  - lastTransitionTime: "2024-08-27T06:35:23Z"
    message: Reconcile complete
    reason: Complete
    status: "True"
    type: Reconciled

4. Deployed an application with 8 PVCs.

$ appm deploy ocp-8pvc-app

5. Triggered a Data Mover backup. The backup completed successfully.

$ oc get backup test-backup-2 -o yaml
apiVersion: velero.io/v1
kind: Backup
metadata:
  annotations:
    velero.io/resource-timeout: 10m0s
    velero.io/source-cluster-k8s-gitversion: v1.29.7+4510e9c
    velero.io/source-cluster-k8s-major-version: "1"
    velero.io/source-cluster-k8s-minor-version: "29"
  creationTimestamp: "2024-08-27T07:04:14Z"
  generation: 14
  labels:
    velero.io/storage-location: ts-dpa-1
  name: test-backup-2
  namespace: openshift-adp
  resourceVersion: "62170"
  uid: 37dac656-dee5-4f01-a807-2891ca927d60
spec:
  csiSnapshotTimeout: 10m0s
  defaultVolumesToFsBackup: false
  includedNamespaces:
  - ocp-8pvc-app
  itemOperationTimeout: 4h0m0s
  snapshotMoveData: true
  storageLocation: ts-dpa-1
  ttl: 720h0m0s
status:
  backupItemOperationsAttempted: 8
  backupItemOperationsCompleted: 8
  completionTimestamp: "2024-08-27T07:07:49Z"
  expiration: "2024-09-26T07:04:14Z"
  formatVersion: 1.1.0
  hookStatus: {}
  phase: Completed
  progress:
    itemsBackedUp: 84
    totalItems: 84
  startTimestamp: "2024-08-27T07:04:14Z"
  version: 1

$ oc get dataupload

NAME                  STATUS      STARTED   BYTES DONE   TOTAL BYTES   STORAGE LOCATION   AGE   NODE

test-backup-2-ckt4p   Completed   25m       104857654    104857654     ts-dpa-1           27m   oadp-94941-29pqq-worker-c-bxkq8

test-backup-2-csvz2   Completed   25m       104857654    104857654     ts-dpa-1           27m   oadp-94941-29pqq-worker-c-bxkq8

test-backup-2-jbrnp   Completed   26m       104857654    104857654     ts-dpa-1           28m   oadp-94941-29pqq-worker-c-bxkq8

test-backup-2-jmbk9   Completed   27m       104857654    104857654     ts-dpa-1           28m   oadp-94941-29pqq-worker-c-bxkq8

test-backup-2-lzwlb   Completed   25m       104857654    104857654     ts-dpa-1           27m   oadp-94941-29pqq-worker-c-bxkq8

test-backup-2-r946f   Completed   26m       104857654    104857654     ts-dpa-1           27m   oadp-94941-29pqq-worker-c-bxkq8

test-backup-2-tq2lz   Completed   26m       104857654    104857654     ts-dpa-1           28m   oadp-94941-29pqq-worker-c-bxkq8

test-backup-2-wjmvv   Completed   25m       104857654    104857654     ts-dpa-1           28m   oadp-94941-29pqq-worker-c-bxkq8

6. Deleted the app namespace.

$ oc delete ns ocp-8pvc-app

7. Triggered a restore with itemOperationTimeout set to 10m; otherwise the restore waits for around 4 hours.

$ oc get restore test-restore2 -o yaml
apiVersion: velero.io/v1
kind: Restore
metadata:
  name: test-restore2
  namespace: openshift-adp
spec:
  backupName: test-backup-2
  itemOperationTimeout: 0h10m0s

Actual results:

The restore partially failed because the restorer pods were scheduled on the wrong node.

$ oc get restore test-restore2 -o yaml
apiVersion: velero.io/v1
kind: Restore
metadata:
  creationTimestamp: "2024-08-27T09:46:19Z"
  finalizers:
  - restores.velero.io/external-resources-finalizer
  generation: 9
  name: test-restore2
  namespace: openshift-adp
  resourceVersion: "123227"
  uid: 30b581e9-f7a6-4763-ad68-a32925953400
spec:
  backupName: test-backup-2
  excludedResources:
  - nodes
  - events
  - events.events.k8s.io
  - backups.velero.io
  - restores.velero.io
  - resticrepositories.velero.io
  - csinodes.storage.k8s.io
  - volumeattachments.storage.k8s.io
  - backuprepositories.velero.io
  itemOperationTimeout: 0h10m0s
status:
  completionTimestamp: "2024-08-27T09:56:30Z"
  errors: 8
  hookStatus: {}
  phase: PartiallyFailed
  progress:
    itemsRestored: 44
    totalItems: 44
  restoreItemOperationsAttempted: 8
  restoreItemOperationsFailed: 8
  startTimestamp: "2024-08-27T09:46:19Z"
  warnings: 6

The DataDownload CR has the correct node name in the velero.io/accepted-by label, but the pod was scheduled on the wrong node.

$ oc get datadownload test-restore2-4s24f -o yaml
apiVersion: velero.io/v2alpha1
kind: DataDownload
metadata:
  creationTimestamp: "2024-08-27T09:46:21Z"
  generateName: test-restore2-
  generation: 4
  labels:
    velero.io/accepted-by: oadp-94941-29pqq-worker-c-jhkvw
    velero.io/async-operation-id: dd-30b581e9-f7a6-4763-ad68-a32925953400.bc96517c-e2a7-48c320af0
    velero.io/restore-name: test-restore2
    velero.io/restore-uid: 30b581e9-f7a6-4763-ad68-a32925953400
  name: test-restore2-4s24f
  namespace: openshift-adp
  ownerReferences:
  - apiVersion: velero.io/v1
    controller: true
    kind: Restore
    name: test-restore2
    uid: 30b581e9-f7a6-4763-ad68-a32925953400
  resourceVersion: "123293"
  uid: afd10eb7-5a9c-4ba2-8621-3c75922add63
spec:
  backupStorageLocation: ts-dpa-1
  cancel: true
  operationTimeout: 10m0s
  snapshotID: 3a11833f1e76eae14fd73de3c6a60963
  sourceNamespace: ocp-8pvc-app
  targetVolume:
    namespace: ocp-8pvc-app
    pv: ""
    pvc: volume6
status:
  completionTimestamp: "2024-08-27T09:56:30Z"
  phase: Canceled
  progress: {}
  startTimestamp: "2024-08-27T09:56:30Z"
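For anyone trying to reproduce this, the placement check can be scripted rather than read off the pod listing by eye. The following is only a rough sketch: the helper name find_misplaced_pods is hypothetical (not part of OADP or Velero), and the commented usage assumes the openshift-adp namespace and the test=node label from this report.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of OADP/Velero): print every pod whose node
# is not in the list of allowed nodes.
#   stdin: one "<pod-name> <node-name>" pair per line
#   args:  the allowed node names
find_misplaced_pods() {
  local allowed=" $* "   # pad with spaces so whole names can be matched
  local pod node
  while read -r pod node; do
    # Skip blank lines and pods that have not been assigned a node yet.
    { [ -z "$pod" ] || [ -z "$node" ]; } && continue
    case "$allowed" in
      *" $node "*) ;;                        # node is in the allowed list
      *) printf '%s %s\n' "$pod" "$node" ;;  # pod landed somewhere else
    esac
  done
}

# Usage against a live cluster (assumes the namespace and label from this report):
# nodes=$(oc get node -l test=node -o jsonpath='{.items[*].metadata.name}')
# oc get pod -n openshift-adp -l velero.io/data-download \
#   -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.spec.nodeName}{"\n"}{end}' \
#   | find_misplaced_pods $nodes
```

Any output means at least one restorer pod is running on a node that does not carry the test=node label; empty output means all pods were placed as expected.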

Expected results:

Restore should complete successfully.

Additional info:

Attached datadownload yaml below.