-

Bug

-

Resolution: Done

-

Critical

-

mto-1.1

-

None

-

Quality / Stability / Reliability

-

False

-

-

2

-

None

-

s390x

-

None

-

MTO Sprint 272, MTO Sprint 273, MTO Sprint 274

-

None

-

Bug Fix

-

Improved handling of operator bundle images: they are now treated as supporting multiple architectures even when they are not manifest lists.
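
As a rough illustration of the behavior this note describes, here is a minimal Python sketch. It is not the operator's code. The `image` dictionary layout, the `ALL_ARCHES` set, and the idea of recognizing a bundle by the OLM label `operators.operatorframework.io.bundle.manifests.v1` are assumptions made for illustration; the ticket does not say how the operator detects bundle images.

```python
# Illustrative sketch only; names and data layout are hypothetical.
ALL_ARCHES = frozenset({"amd64", "arm64", "ppc64le", "s390x"})

# Label normally present on OLM bundle images (assumed detection rule).
BUNDLE_LABEL = "operators.operatorframework.io.bundle.manifests.v1"


def supported_architectures(image):
    """Return the set of architectures a pod using this image may run on.

    `image` is a hypothetical dict with:
      - "manifest_list_arches": list of arches if the image is a manifest list, else None
      - "config_arch": architecture recorded in a single-arch image config
      - "labels": image labels
    """
    # Manifest list: trust the platforms it enumerates.
    if image.get("manifest_list_arches"):
        return frozenset(image["manifest_list_arches"])
    # Bundle images carry only manifests and metadata, so the architecture
    # recorded at build time should not restrict scheduling.
    if BUNDLE_LABEL in image.get("labels", {}):
        return ALL_ARCHES
    # Ordinary single-arch image: only its own architecture is supported.
    return frozenset({image["config_arch"]})


bundle = {
    "manifest_list_arches": None,
    "config_arch": "amd64",
    "labels": {BUNDLE_LABEL: "manifests/"},
}
plain = {"manifest_list_arches": None, "config_arch": "amd64", "labels": {}}

print(sorted(supported_architectures(bundle)))
print(sorted(supported_architectures(plain)))
```

Under these assumptions, a bundle image built on amd64 would no longer pin its unpack Pod to amd64 nodes, while an ordinary single-arch image still would.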

(copied from https://github.com/outrigger-project/multiarch-tuning-operator/issues/645)

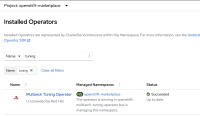

Environment: OpenShift 4.18.14 on s390x architecture (z16 generation), with multiarch-tuning-operator v1.1.1.

The problem occurs only when multiarch-tuning-operator is installed; it does NOT occur without the operator.

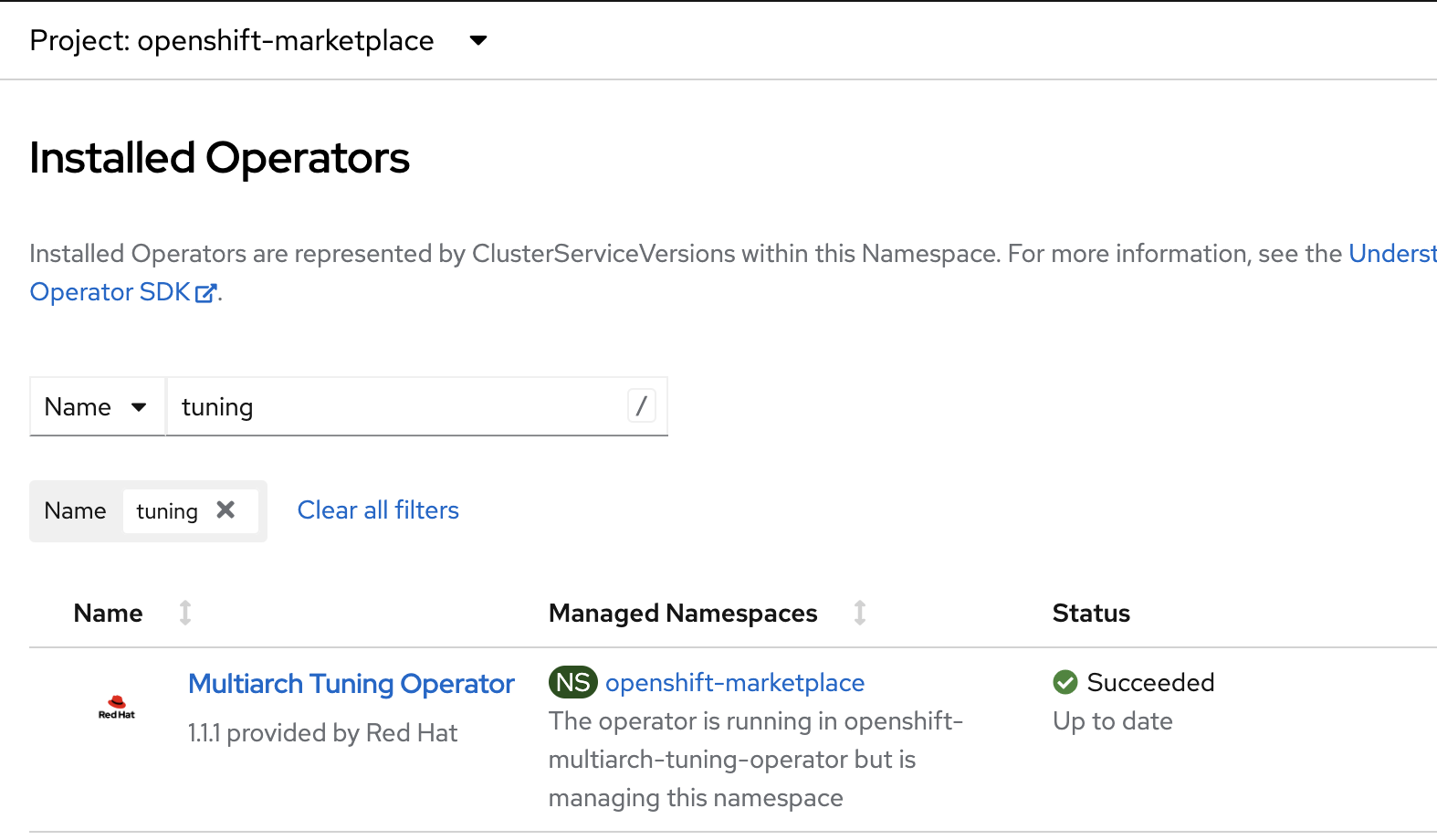

When installing an OLM operator (e.g. Streams for Apache Kafka) into an arbitrary namespace, the installation fails because the OLM operator bundle unpack Job fails (OLM auto-generates this Job in the openshift-marketplace namespace). The Job's Pod cannot be scheduled because no node matches its node affinity.

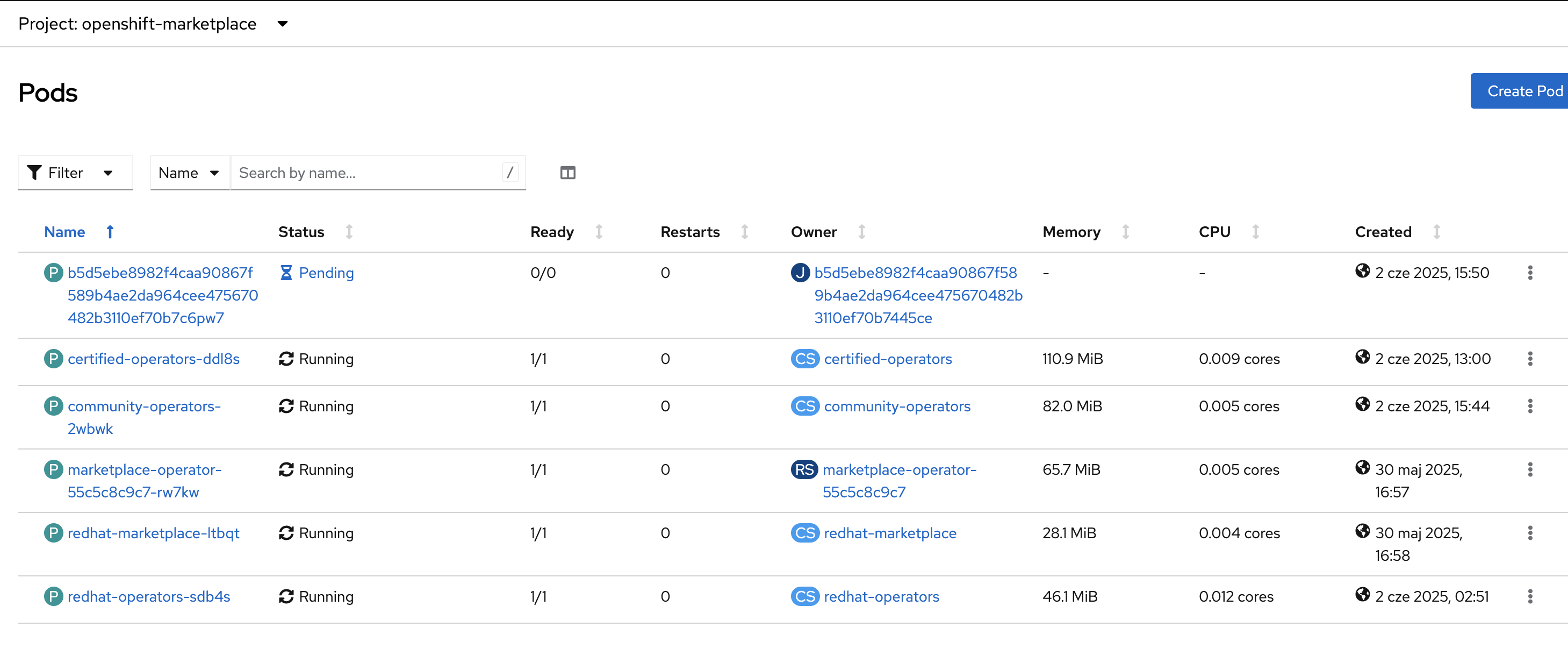

Warning FailedScheduling 5m55s default-scheduler 0/11 nodes are available: 3 node(s) had untolerated taint {node-role.kubernetes.io/master: }, 3 node(s) had untolerated taint {node.ocs.openshift.io/storage: true}, 5 node(s) didn't match Pod's node affinity/selector. preemption: 0/11 nodes are available: 11 Preemption is not helpful for scheduling.

Warning FailedScheduling 50s default-scheduler 0/11 nodes are available: 3 node(s) had untolerated taint {node-role.kubernetes.io/master: }, 3 node(s) had untolerated taint {node.ocs.openshift.io/storage: true}, 5 node(s) didn't match Pod's node affinity/selector. preemption: 0/11 nodes are available: 11 Preemption is not helpful for scheduling.

Normal ArchAwarePredicateSet 5m56s multiarch-tuning-operator Set the supported architectures to {amd64}

Normal ArchAwareSchedGateRemovalSuccess 5m56s multiarch-tuning-operator Successfully removed the multiarch.openshift.io/scheduling-gate scheduling gate

Based on this event:

Set the supported architectures to {amd64}

it looks like multiarch-tuning-operator is defaulting the Pod architecture to amd64, even though the cluster runs on s390x. I also see the following nodeAffinity being auto-injected into the Pod:

    affinity:
      nodeAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          nodeSelectorTerms:
            - matchExpressions:
                - key: kubernetes.io/arch
                  operator: In
                  values:
                    - amd64
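
To make the scheduling failure concrete, here is a small Python sketch of how a required `In` match expression is evaluated against node labels. It is a simplified model, not the Kubernetes scheduler. The five worker nodes come from the `5 node(s) didn't match` count in the events above; their `kubernetes.io/arch: s390x` label is assumed from the stated environment.

```python
# Simplified model of required node affinity matching (illustration only).
def node_matches(node_labels, match_expressions):
    """Return True if the node labels satisfy every match expression."""
    for expr in match_expressions:
        value = node_labels.get(expr["key"])
        op = expr["operator"]
        if op == "In":
            # A missing label never satisfies "In".
            if value is None or value not in expr["values"]:
                return False
        elif op == "NotIn":
            if value is not None and value in expr["values"]:
                return False
        elif op == "Exists":
            if value is None:
                return False
        elif op == "DoesNotExist":
            if value is not None:
                return False
        else:
            raise ValueError(f"unsupported operator: {op}")
    return True


# Affinity injected by the operator, as shown in the issue.
injected = [{"key": "kubernetes.io/arch", "operator": "In", "values": ["amd64"]}]

# Assumed labels for the five schedulable worker nodes of the s390x cluster.
workers = [{"kubernetes.io/arch": "s390x"} for _ in range(5)]

schedulable = [n for n in workers if node_matches(n, injected)]
print(f"{len(schedulable)} of {len(workers)} worker nodes match")
```

With every worker labeled s390x, no node satisfies the injected term, which is consistent with the FailedScheduling events reported above.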

Here is the ClusterPodPlacementConfig CR:

    apiVersion: multiarch.openshift.io/v1beta1
    kind: ClusterPodPlacementConfig
    metadata:
      finalizers:
        - finalizers.multiarch.openshift.io/pod-placement
      generation: 1
      name: cluster
    spec:
      logVerbosity: Normal
      namespaceSelector:
        matchExpressions:
          - key: multiarch.openshift.io/exclude-pod-placement
            operator: DoesNotExist
    status:
      conditions:
        - lastTransitionTime: '2025-06-02T13:45:11Z'
          message: The cluster pod placement config operand is ready. We can gate and reconcile pods.
          reason: AllComponentsReady
          status: 'True'
          type: Available
        - lastTransitionTime: '2025-06-02T13:45:12Z'
          message: The cluster pod placement config operand is not progressing.
          reason: AllComponentsReady
          status: 'False'
          type: Progressing
        - lastTransitionTime: '2025-06-02T13:45:11Z'
          message: The cluster pod placement config operand is not degraded.
          reason: NotDegraded
          status: 'False'
          type: Degraded
        - lastTransitionTime: '2024-12-26T16:04:50Z'
          message: 'The cluster pod placement config operand is not being deprovisioned. '
          reason: NotDeprovisioning
          status: 'False'
          type: Deprovisioning
        - lastTransitionTime: '2025-06-02T13:45:04Z'
          message: The pod placement controller is fully rolled out.
          reason: PodPlacementControllerReady
          status: 'False'
          type: PodPlacementControllerNotRolledOut
        - lastTransitionTime: '2025-06-02T13:45:12Z'
          message: The pod placement webhook is fully rolled out.
          reason: PodPlacementWebhookReady
          status: 'False'
          type: PodPlacementWebhookNotRolledOut
        - lastTransitionTime: '2025-06-02T13:45:11Z'
          message: The mutating webhook configuration is ready.
          reason: AllComponentsReady
          status: 'False'
          type: MutatingWebhookConfigurationNotAvailable