-

Bug

-

Resolution: Unresolved

-

Major

-

None

-

2.8.0

-

None

-

Quality / Stability / Reliability

-

False

-

True

Description of problem:

Cold migration of a VM over an OVN localnet transfer network intermittently fails with a connection error:

----------------------------------------------------------
[ 0.0] Setting up the source: -i libvirt -ic vpx://administrator%40vsphere.local@10.***/data/host/10.***?no_verify=1 -it vddk mtv-rhel7-9-staticip
virt-v2v: error: exception: libvirt: VIR_ERR_INTERNAL_ERROR: VIR_FROM_ESX: internal error: curl_easy_perform() returned an error: Couldn't connect to server (7) : Failed to connect to 10.*** port 443: No route to host
-----------------------------------------------------------

Rerunning the plan without any change succeeds.

A pod that uses the OVN localnet as its default route shows the same behavior: it cannot connect to outside hosts right after the pod is created, but after waiting a while it connects successfully.

Version-Release number of selected component (if applicable):

MTV 2.8.0

How reproducible:

30%

Steps to Reproduce:

1) Create a NodeNetworkConfigurationPolicy that uses a second interface (eno8403) to create the OVS bridge br-withvlan and maps the bridge to the localnet tenantblue:

apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: br-withvlan
spec:
  desiredState:
    interfaces:
    - name: br-withvlan
      type: ovs-bridge
      state: up
      bridge:
        options:
          stp: true
        port:
        - name: eno8403
          vlan:
            mode: trunk
            trunk-tags:
            - id: 20
    ovn:
      bridge-mappings:
      - localnet: tenantblue
        bridge: br-withvlan
        state: present

2) Create the localnet network tenantblue with a NetworkAttachmentDefinition and set the annotation forklift.konveyor.io/route: "192.168.20.1":

apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: tenantblue
  namespace: test
  annotations:
    forklift.konveyor.io/route: 192.168.20.1
spec:
  config: |2
    {
            "cniVersion": "0.4.0",
            "name": "tenantblue",
            "type": "ovn-k8s-cni-overlay",
            "topology":"localnet",
            "subnets": "192.168.20.0/24",
            "excludeSubnets": "192.168.20.1/32",
            "vlanID": 20,
            "netAttachDefName": "test/tenantblue"
    }
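As a sanity check on the configuration in step 2, the following sketch confirms that the address in the forklift.konveyor.io/route annotation lies inside the NAD subnet and is excluded from IP allocation. The helper name check_route is hypothetical and not part of MTV or OVN-Kubernetes; the values are copied from the definition above, and the comma-separated handling of subnets is an assumption.

```python
import ipaddress
import json

# Values copied from the NetworkAttachmentDefinition in step 2.
NAD_CONFIG = """
{
  "cniVersion": "0.4.0",
  "name": "tenantblue",
  "type": "ovn-k8s-cni-overlay",
  "topology": "localnet",
  "subnets": "192.168.20.0/24",
  "excludeSubnets": "192.168.20.1/32",
  "vlanID": 20,
  "netAttachDefName": "test/tenantblue"
}
"""
ROUTE_ANNOTATION = "192.168.20.1"


def _parse_networks(value):
    """Split a comma-separated CIDR string into ip_network objects (assumed format)."""
    return [ipaddress.ip_network(part.strip()) for part in value.split(",") if part.strip()]


def check_route(config_text, route):
    """Hypothetical helper: check the route address against the NAD subnets."""
    config = json.loads(config_text)
    gateway = ipaddress.ip_address(route)
    subnets = _parse_networks(config["subnets"])
    excluded = _parse_networks(config.get("excludeSubnets", ""))
    return {
        "in_subnet": any(gateway in net for net in subnets),
        "excluded_from_ipam": any(gateway in net for net in excluded),
    }


print(check_route(NAD_CONFIG, ROUTE_ANNOTATION))
```

Both checks pass for the values in this report, so the intermittent failure does not appear to come from a mismatch between the route annotation and the subnet definition.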

3) Create a cold migration plan, cold-ovn-br-vlan, to migrate the VM mtv-rhel7-9-staticip from vSphere 7, and select "Transfer Network: test/tenantblue"

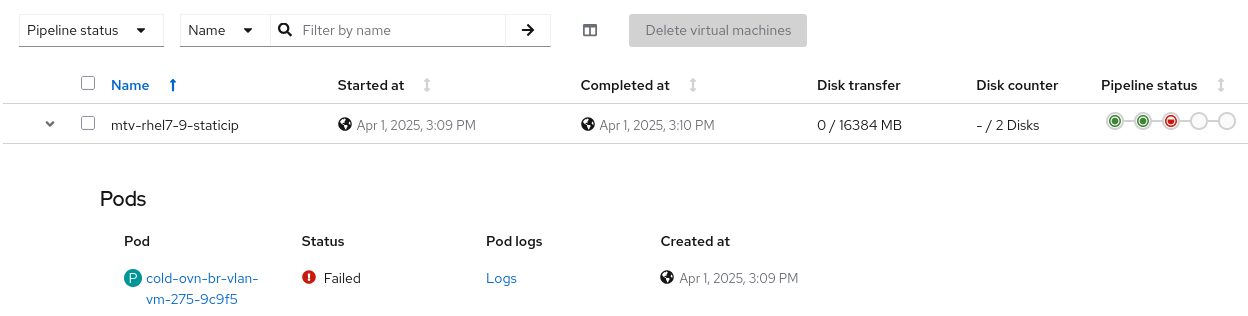

4) Observe that the ImageConversion phase fails

5) Check the log of the conversion pod cold-ovn-br-vlan-vm-275-9c9f5; more details are in the attached file cold-ovn-br-vlan-vm-275-9c9f5-virt-v2v.log

[ 0.0] Setting up the source: -i libvirt -ic vpx://administrator%40vsphere.local@10.***/data/host/10.***?no_verify=1 -it vddk mtv-rhel7-9-staticip

virt-v2v: error: exception: libvirt: VIR_ERR_INTERNAL_ERROR: VIR_FROM_ESX: internal error: curl_easy_perform() returned an error: Couldn't connect to server (7) : Failed to connect to 10.*** port 443: No route to host

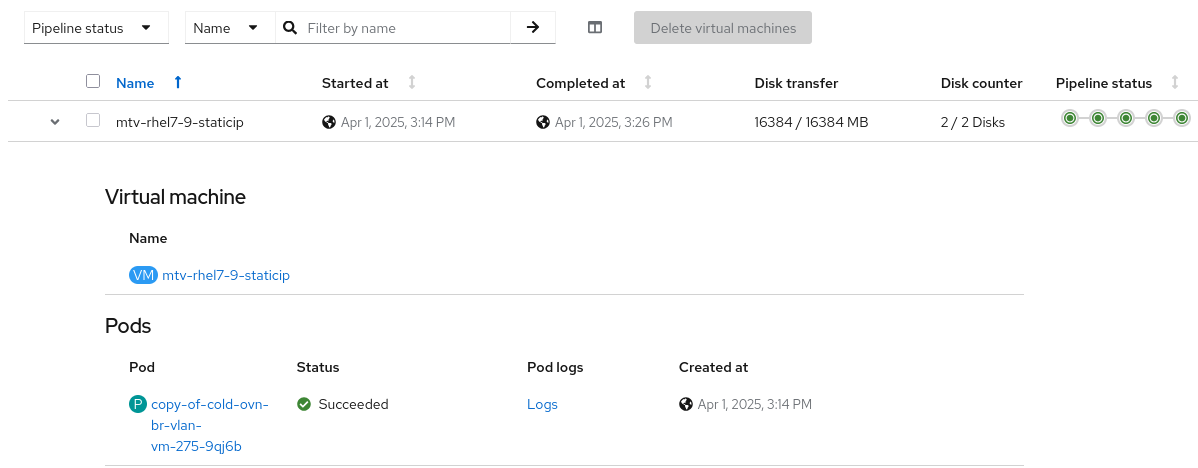

6) Copy the plan to copy-of-cold-ovn-br-vlan without any change and execute the new plan; it completes successfully

7) Create a new pod from the attached file POD1-chhu.yaml, using test/tenantblue as the default route. Log in to the pod immediately after it is created: pinging the gateway fails. After waiting a while in the pod, pinging the gateway succeeds

~ $ ip addr

......

3: net1@if264: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1400 qdisc noqueue state UP group default

link/ether 0a:58:c0:a8:14:23 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.20.35/24 brd 192.168.20.255 scope global net1......

~ $ ping 192.168.20.1

PING 192.168.20.1 (192.168.20.1) 56(84) bytes of data.

From 192.168.20.35 icmp_seq=1 Destination Host Unreachable

~ $ date

Tue Apr 1 09:28:09 UTC 2025

~ $ date

Tue Apr 1 09:28:18 UTC 2025

~ $ ping 192.168.20.1

PING 192.168.20.1 (192.168.20.1) 56(84) bytes of data.

64 bytes from 192.168.20.1: icmp_seq=1 ttl=64 time=1.20 ms
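To measure how long the localnet attachment takes to become usable, a probe along the following lines could be run inside the pod right after it starts. This is a minimal sketch, not part of MTV or virt-v2v: the function name wait_for_connect, the 60-second timeout, and the 1-second interval are assumptions chosen for illustration.

```python
import socket
import time


def wait_for_connect(host, port, timeout=60.0, interval=1.0):
    """Poll a TCP connect until it succeeds.

    Returns the elapsed seconds at the first successful connect,
    or None if no connect succeeded within `timeout` seconds.
    """
    start = time.monotonic()
    while time.monotonic() - start < timeout:
        try:
            # "No route to host" and "connection refused" both raise OSError.
            with socket.create_connection((host, port), timeout=interval):
                return time.monotonic() - start
        except OSError:
            time.sleep(interval)
    return None


# Example (hypothetical target; substitute the vCenter address from the plan):
# delay = wait_for_connect("vcenter.example.com", 443)
# print("reachable after", delay, "seconds" if delay is not None else "(timed out)")
```

Running the same loop before virt-v2v starts would be one way to test whether the ImageConversion failure in step 4 is only a startup race, since the rerun in step 6 succeeds without any change.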

Actual results:

In steps 4-6: the migration plan failed in step 4; rerunning the plan without any change succeeded.

In step 7: pinging the gateway from the pod failed right after the pod was created; after waiting a while, the ping succeeded.

Expected results:

In step 4, the migration plan executes successfully on the first run.

Additional info:

- cold-ovn-br-vlan-vm-275-9c9f5-virt-v2v.log
- POD1-chhu.yaml