Bug

Resolution: Unresolved

Major

MTC 1.8.10

None

None

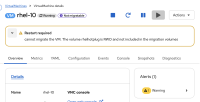

The migration fails with:

Failed cannot migrate VMI: PVC blank-dv-for-hotplug is not shared, live migration requires that all PVCs must be shared (using ReadWriteMany access mode) (11s)

However, according to the VMI and VMIM, the migration actually succeeded: the VMIM reached phase `Succeeded` and the VMI's `migrationState` shows `completed: true`.
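A test could flag this disagreement explicitly instead of only reporting an MTC failure. The following is a minimal sketch, assuming the MigMigration and VMIM have already been fetched as plain Python dicts. The helper names are hypothetical and are not part of MTC, KubeVirt, or ocp_resources. The sketch reads the fields visible in the dumps in this ticket (`status.itinerary` and `status.conditions` on the MigMigration, `status.phase` and `status.migrationState` on the VMIM). It also checks `migrationState.failed`, which KubeVirt sets when a migration fails.

```python
from __future__ import annotations

from typing import Any


def mtc_reports_failure(migmigration: dict[str, Any]) -> bool:
    """True if the MigMigration ended on the Failed itinerary or has a Failed=True condition."""
    status = migmigration.get("status", {})
    if status.get("itinerary") == "Failed":
        return True
    return any(
        cond.get("type") == "Failed" and cond.get("status") == "True"
        for cond in status.get("conditions", [])
    )


def kubevirt_reports_success(vmim: dict[str, Any]) -> bool:
    """True if the VMIM reached phase Succeeded and its migrationState is completed and not failed."""
    status = vmim.get("status", {})
    state = status.get("migrationState", {})
    return (
        status.get("phase") == "Succeeded"
        and state.get("completed") is True
        and not state.get("failed", False)
    )


def verdicts_disagree(migmigration: dict[str, Any], vmim: dict[str, Any]) -> bool:
    """True when MTC reports a failure while KubeVirt reports a successful VMIM."""
    return mtc_reports_failure(migmigration) and kubevirt_reports_success(vmim)


# Trimmed copies of the relevant fields from this ticket.
migmigration = {
    "status": {
        "itinerary": "Failed",
        "phase": "Completed",
        "conditions": [{"type": "Failed", "status": "True", "category": "Advisory"}],
    }
}
vmim = {"status": {"phase": "Succeeded", "migrationState": {"completed": True}}}

print(verdicts_disagree(migmigration, vmim))
```

Note that MTC's `status.phase` is `Completed` even on failure. The sketch therefore keys off `itinerary` and the `Failed` condition instead of `phase`.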

Version:

MTC 1.8.10, CNV 4.20.0 (the failure has not been seen in CNV 4.19 runs)

How reproducible:

Intermittent. In Jenkins, one test passes and one fails. Locally, 1 run failed and 5 passed.

MigMigration resource:

```
13:50:29 2025-09-16T10:50:27.330233 ocp_resources MigMigration INFO Deleting {
  'apiVersion': 'migration.openshift.io/v1alpha1',
  'kind': 'MigMigration',
  'metadata': {
    'annotations': {'openshift.io/touch': 'c881366d-92e9-11f0-a3c8-0a580a800032'},
    'creationTimestamp': '2025-09-16T10:40:24Z',
    'generation': 24,
    'labels': {
      'migration.openshift.io/migplan-name': 'storage-mig-plan',
      'migration.openshift.io/migration-uid': 'bde61954-6989-4bf5-bdaf-5fe0b6dad331'
    },
    'managedFields': [
      {
        'apiVersion': 'migration.openshift.io/v1alpha1',
        'fieldsType': 'FieldsV1',
        'fieldsV1': {'f:spec': {'.': {}, 'f:migPlanRef': {}, 'f:migrateState': {}, 'f:quiescePods': {}, 'f:stage': {}}},
        'manager': 'OpenAPI-Generator',
        'operation': 'Update',
        'time': '2025-09-16T10:40:24Z'
      },
      {
        'apiVersion': 'migration.openshift.io/v1alpha1',
        'fieldsType': 'FieldsV1',
        'fieldsV1': {
          'f:metadata': {
            'f:annotations': {'.': {}, 'f:openshift.io/touch': {}},
            'f:labels': {'.': {}, 'f:migration.openshift.io/migplan-name': {}, 'f:migration.openshift.io/migration-uid': {}},
            'f:ownerReferences': {'.': {}, 'k:{"uid":"09427ddb-a014-4125-9846-e6b164ae2aa7"}': {}}
          },
          'f:status': {'.': {}, 'f:conditions': {}, 'f:errors': {}, 'f:itinerary': {}, 'f:observedDigest': {}, 'f:phase': {}, 'f:pipeline': {}, 'f:startTimestamp': {}}
        },
        'manager': 'manager',
        'operation': 'Update',
        'time': '2025-09-16T10:42:03Z'
      }
    ],
    'name': 'mig-migration-storage',
    'namespace': 'openshift-migration',
    'ownerReferences': [
      {'apiVersion': 'migration.openshift.io/v1alpha1', 'kind': 'MigPlan', 'name': 'storage-mig-plan', 'uid': '09427ddb-a014-4125-9846-e6b164ae2aa7'}
    ],
    'resourceVersion': '907868',
    'uid': 'bde61954-6989-4bf5-bdaf-5fe0b6dad331'
  },
  'spec': {
    'migPlanRef': {'name': 'storage-mig-plan', 'namespace': 'openshift-migration'},
    'migrateState': True,
    'quiescePods': True,
    'stage': False
  },
  'status': {
    'conditions': [
      {
        'category': 'Warn',
        'durable': True,
        'lastTransitionTime': '2025-09-16T10:42:03Z',
        'message': 'DirectVolumeMigration (dvm): openshift-migration/mig-migration-storage-hk9wn failed. See in dvm status.Errors',
        'status': 'True',
        'type': 'DirectVolumeMigrationFailed'
      },
      {
        'category': 'Advisory',
        'durable': True,
        'lastTransitionTime': '2025-09-16T10:42:03Z',
        'message': 'The migration has failed. See: Errors.',
        'reason': 'WaitForDirectVolumeMigrationToComplete',
        'status': 'True',
        'type': 'Failed'
      }
    ],
    'errors': ['DVM failed: cannot migrate VMI: PVC blank-dv-for-hotplug is not shared, live migration requires that all PVCs must be shared (using ReadWriteMany access mode)'],
    'itinerary': 'Failed',
    'observedDigest': '458005d0e00f41ae25c448db4999139312412b60b9781415cdfb5e88fba3dd04',
    'phase': 'Completed',
    'pipeline': [
      {'completed': '2025-09-16T10:41:52Z', 'message': 'Completed', 'name': 'Prepare', 'started': '2025-09-16T10:40:24Z'},
      {'completed': '2025-09-16T10:41:53Z', 'message': 'Completed', 'name': 'StageBackup', 'started': '2025-09-16T10:41:52Z'},
      {
        'completed': '2025-09-16T10:42:03Z',
        'failed': True,
        'message': 'Failed',
        'name': 'DirectVolume',
        'progress': ['[blank-dv-for-hotplug] Live Migration storage-migration-test-mtc-storage-class-migration/fedora-volume-hotplug-vm-1758018926-4210007: Failed cannot migrate VMI: PVC blank-dv-for-hotplug is not shared, live migration requires that all PVCs must be shared (using ReadWriteMany access mode) (11s)'],
        'started': '2025-09-16T10:41:53Z'
      },
      {'completed': '2025-09-16T10:42:03Z', 'message': 'Completed', 'name': 'Cleanup', 'started': '2025-09-16T10:42:03Z'},
      {'completed': '2025-09-16T10:42:03Z', 'message': 'Completed', 'name': 'CleanupHelpers', 'started': '2025-09-16T10:42:03Z'}
    ],
    'startTimestamp': '2025-09-16T10:40:24Z'
  }
}
```
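The failing step can be pulled out of a MigMigration like the one above without reading the whole object. The following sketch walks `status.pipeline` and returns each step flagged `failed`, together with its `progress` messages. The function name is hypothetical. The input is a trimmed copy of this ticket's `status`, assumed to be available as a Python dict.

```python
from __future__ import annotations

from typing import Any


def failed_steps(migmigration: dict[str, Any]) -> list[tuple[str, list[str]]]:
    """Return (step name, progress messages) for every pipeline step flagged as failed."""
    pipeline = migmigration.get("status", {}).get("pipeline", [])
    return [
        (step.get("name", "<unnamed>"), list(step.get("progress", [])))
        for step in pipeline
        if step.get("failed")
    ]


# Trimmed copy of status.errors and status.pipeline from the MigMigration in this ticket.
migmigration = {
    "status": {
        "errors": [
            "DVM failed: cannot migrate VMI: PVC blank-dv-for-hotplug is not shared, "
            "live migration requires that all PVCs must be shared (using ReadWriteMany access mode)"
        ],
        "pipeline": [
            {"name": "Prepare", "message": "Completed"},
            {"name": "StageBackup", "message": "Completed"},
            {
                "name": "DirectVolume",
                "message": "Failed",
                "failed": True,
                "started": "2025-09-16T10:41:53Z",
                "completed": "2025-09-16T10:42:03Z",
                "progress": [
                    "[blank-dv-for-hotplug] Live Migration "
                    "storage-migration-test-mtc-storage-class-migration/"
                    "fedora-volume-hotplug-vm-1758018926-4210007: Failed cannot migrate VMI: "
                    "PVC blank-dv-for-hotplug is not shared, live migration requires that all "
                    "PVCs must be shared (using ReadWriteMany access mode) (11s)"
                ],
            },
            {"name": "Cleanup", "message": "Completed"},
            {"name": "CleanupHelpers", "message": "Completed"},
        ],
    }
}

for name, progress in failed_steps(migmigration):
    print(f"step {name} failed")
    for line in progress:
        print(f"  {line}")
for err in migmigration["status"]["errors"]:
    print(f"error: {err}")
```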

VM:

```yaml
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  annotations:
    kubemacpool.io/transaction-timestamp: "2025-09-16T10:42:03.323951613Z"
    kubevirt.io/latest-observed-api-version: v1
    kubevirt.io/storage-observed-api-version: v1
  creationTimestamp: "2025-09-16T10:35:26Z"
  finalizers:
  - kubevirt.io/virtualMachineControllerFinalize
  generation: 4
  labels:
    kubevirt.io/vm: fedora-volume-hotplug-vm
  name: fedora-volume-hotplug-vm-1758018926-4210007
  namespace: storage-migration-test-mtc-storage-class-migration
  resourceVersion: "908726"
  uid: e947626a-6e16-4f3e-baec-804fb912650e
spec:
  runStrategy: Always
  template:
    metadata:
      creationTimestamp: null
      labels:
        debugLogs: "true"
        kubevirt.io/domain: fedora-volume-hotplug-vm-1758018926-4210007
        kubevirt.io/vm: fedora-volume-hotplug-vm-1758018926-4210007
    spec:
      architecture: amd64
      domain:
        cpu:
          cores: 1
        devices:
          disks:
          - disk:
              bus: virtio
            name: containerdisk
          - disk:
              bus: virtio
            name: cloudinitdisk
          - disk:
              bus: scsi
            name: blank-dv-for-hotplug
            serial: blank-dv-for-hotplug
          interfaces:
          - macAddress: 02:de:4e:7d:71:16
            masquerade: {}
            name: default
          rng: {}
        firmware:
          uuid: ca4a9a76-69e3-4502-b9e2-896d298ef7b5
        machine:
          type: pc-q35-rhel9.6.0
        memory:
          guest: 1Gi
        resources: {}
      networks:
      - name: default
        pod: {}
      terminationGracePeriodSeconds: 30
      volumes:
      - containerDisk:
          image: quay.io/openshift-cnv/qe-cnv-tests-fedora:41@sha256:a91659fba4e0258dda1be076dd6e56e34d9bf3dce082e57f939994ce0f124348
        name: containerdisk
      - cloudInitNoCloud:
          userData: |-
            #cloud-config
            chpasswd:
              expire: false
            password: password
            user: fedora
            ssh_pwauth: true
            ssh_authorized_keys: [ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC7Acf5EbS8Tmz/0nEPVzv71upmfa5DrMgj4gKXqZYt6JSDT5CMZwFh6bwqhGhsFqWPdJpTRuEZ/7ZtCzfmi3cxxZHXOAmaYtVqKsRzKA2MmzYA3Xl105OJlcYSe6whuYRktedu2OJ6FH3k5pFxELjtJhNzuEopoymYAl7vB2yyORKFRCYacs0jO15kS3CXJ4TGgSlU8GdrZGOe5a6L6+2lZyzYgX+tn+cBZI/bfj7P1vZdou7ran+iTi2TxUlU7Rk/2jGnv9Bu9pGn2rtGeBvqx/LNhGlgoQa4j5KTEtPjueJdm+yhTHKSF7jnDxD99TSs6YwbKrIOww7g5MebCtWt root@exec1.rdocloud]
            runcmd: ['grep ssh-rsa /etc/crypto-policies/back-ends/opensshserver.config || sudo update-crypto-policies --set LEGACY || true', "sudo sed -i 's/^#\\?PasswordAuthentication no/PasswordAuthentication yes/g' /etc/ssh/sshd_config", 'sudo systemctl enable sshd', 'sudo systemctl restart sshd']
        name: cloudinitdisk
      - dataVolume:
          hotpluggable: true
          name: blank-dv-for-hotplug-mig-fph6
        name: blank-dv-for-hotplug
  updateVolumesStrategy: Migration
status:
  conditions:
  - lastProbeTime: null
    lastTransitionTime: "2025-09-16T10:42:38Z"
    status: "True"
    type: Ready
  - lastProbeTime: null
    lastTransitionTime: null
    status: "True"
    type: LiveMigratable
  - lastProbeTime: null
    lastTransitionTime: null
    status: "True"
    type: StorageLiveMigratable
  - lastProbeTime: "2025-09-16T10:35:55Z"
    lastTransitionTime: null
    status: "True"
    type: AgentConnected
  - lastProbeTime: null
    lastTransitionTime: null
    message: All of the VMI's DVs are bound and ready
    reason: AllDVsReady
    status: "True"
    type: DataVolumesReady
  created: true
  desiredGeneration: 4
  observedGeneration: 2
  printableStatus: Running
  ready: true
  runStrategy: Always
  volumeSnapshotStatuses:
  - enabled: false
    name: containerdisk
    reason: Snapshot is not supported for this volumeSource type [containerdisk]
  - enabled: false
    name: cloudinitdisk
    reason: Snapshot is not supported for this volumeSource type [cloudinitdisk]
  - enabled: true
    name: blank-dv-for-hotplug
  volumeUpdateState:
    volumeMigrationState:
      migratedVolumes:
      - destinationPVCInfo:
          claimName: blank-dv-for-hotplug-mig-fph6
          volumeMode: Block
        sourcePVCInfo:
          claimName: blank-dv-for-hotplug
          volumeMode: Filesystem
        volumeName: blank-dv-for-hotplug
```
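The VM status records which volume was being migrated and where it was going. The following small sketch uses hypothetical helper names and assumes the VM is available as a Python dict. It flattens `status.volumeUpdateState.volumeMigrationState.migratedVolumes` and reports the gap between `status.desiredGeneration` and `status.observedGeneration`. For this VM, it shows the following:

- The source claim `blank-dv-for-hotplug` (Filesystem) maps to `blank-dv-for-hotplug-mig-fph6` (Block).
- The generation gap is 2 (4 vs. 2).

The sketch only surfaces these values. It does not establish that either one is related to the failure.

```python
from __future__ import annotations

from typing import Any


def migrated_volumes(vm: dict[str, Any]) -> list[dict[str, Any]]:
    """Flatten status.volumeUpdateState.volumeMigrationState.migratedVolumes of a VM."""
    state = (
        vm.get("status", {})
        .get("volumeUpdateState", {})
        .get("volumeMigrationState", {})
    )
    rows = []
    for vol in state.get("migratedVolumes", []):
        src = vol.get("sourcePVCInfo", {})
        dst = vol.get("destinationPVCInfo", {})
        rows.append(
            {
                "volume": vol.get("volumeName"),
                "source_claim": src.get("claimName"),
                "destination_claim": dst.get("claimName"),
                # (source volumeMode, destination volumeMode)
                "volume_mode": (src.get("volumeMode"), dst.get("volumeMode")),
            }
        )
    return rows


def generation_gap(vm: dict[str, Any]) -> int:
    """desiredGeneration minus observedGeneration, as reported in the VM status."""
    status = vm.get("status", {})
    return status.get("desiredGeneration", 0) - status.get("observedGeneration", 0)


# Trimmed copy of the VM status from this ticket.
vm = {
    "status": {
        "desiredGeneration": 4,
        "observedGeneration": 2,
        "volumeUpdateState": {
            "volumeMigrationState": {
                "migratedVolumes": [
                    {
                        "destinationPVCInfo": {"claimName": "blank-dv-for-hotplug-mig-fph6", "volumeMode": "Block"},
                        "sourcePVCInfo": {"claimName": "blank-dv-for-hotplug", "volumeMode": "Filesystem"},
                        "volumeName": "blank-dv-for-hotplug",
                    }
                ]
            }
        },
    }
}

for row in migrated_volumes(vm):
    print(row)
print("generation gap:", generation_gap(vm))
```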

VMI:

```yaml
apiVersion: kubevirt.io/v1
kind: VirtualMachineInstance
metadata:
  annotations:
    kubevirt.io/latest-observed-api-version: v1
    kubevirt.io/nonroot: "true"
    kubevirt.io/storage-observed-api-version: v1
    kubevirt.io/vm-generation: "2"
  creationTimestamp: "2025-09-16T10:35:27Z"
  finalizers:
  - kubevirt.io/virtualMachineControllerFinalize
  - foregroundDeleteVirtualMachine
  generation: 38
  labels:
    debugLogs: "true"
    kubevirt.io/domain: fedora-volume-hotplug-vm-1758018926-4210007
    kubevirt.io/nodeName: cnvqe-038.lab.eng.tlv2.redhat.com
    kubevirt.io/vm: fedora-volume-hotplug-vm-1758018926-4210007
  name: fedora-volume-hotplug-vm-1758018926-4210007
  namespace: storage-migration-test-mtc-storage-class-migration
  ownerReferences:
  - apiVersion: kubevirt.io/v1
    blockOwnerDeletion: true
    controller: true
    kind: VirtualMachine
    name: fedora-volume-hotplug-vm-1758018926-4210007
    uid: e947626a-6e16-4f3e-baec-804fb912650e
  resourceVersion: "908738"
  uid: 5f26eff8-84ba-42fa-9ec0-098ab1283539
spec:
  architecture: amd64
  domain:
    cpu:
      cores: 1
      model: host-model
    devices:
      disks:
      - disk:
          bus: virtio
        name: containerdisk
      - disk:
          bus: virtio
        name: cloudinitdisk
      - disk:
          bus: scsi
        name: blank-dv-for-hotplug
        serial: blank-dv-for-hotplug
      interfaces:
      - macAddress: 02:de:4e:7d:71:16
        masquerade: {}
        name: default
      rng: {}
    features:
      acpi:
        enabled: true
    firmware:
      uuid: ca4a9a76-69e3-4502-b9e2-896d298ef7b5
    machine:
      type: pc-q35-rhel9.6.0
    memory:
      guest: 1Gi
      maxGuest: 4Gi
    resources:
      requests:
        memory: 1Gi
  evictionStrategy: LiveMigrate
  networks:
  - name: default
    pod: {}
  terminationGracePeriodSeconds: 30
  volumes:
  - containerDisk:
      image: quay.io/openshift-cnv/qe-cnv-tests-fedora:41@sha256:a91659fba4e0258dda1be076dd6e56e34d9bf3dce082e57f939994ce0f124348
      imagePullPolicy: IfNotPresent
    name: containerdisk
  - cloudInitNoCloud:
      userData: |-
        #cloud-config
        chpasswd:
          expire: false
        password: password
        user: fedora
        ssh_pwauth: true
        ssh_authorized_keys: [ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC7Acf5EbS8Tmz/0nEPVzv71upmfa5DrMgj4gKXqZYt6JSDT5CMZwFh6bwqhGhsFqWPdJpTRuEZ/7ZtCzfmi3cxxZHXOAmaYtVqKsRzKA2MmzYA3Xl105OJlcYSe6whuYRktedu2OJ6FH3k5pFxELjtJhNzuEopoymYAl7vB2yyORKFRCYacs0jO15kS3CXJ4TGgSlU8GdrZGOe5a6L6+2lZyzYgX+tn+cBZI/bfj7P1vZdou7ran+iTi2TxUlU7Rk/2jGnv9Bu9pGn2rtGeBvqx/LNhGlgoQa4j5KTEtPjueJdm+yhTHKSF7jnDxD99TSs6YwbKrIOww7g5MebCtWt root@exec1.rdocloud]
        runcmd: ['grep ssh-rsa /etc/crypto-policies/back-ends/opensshserver.config || sudo update-crypto-policies --set LEGACY || true', "sudo sed -i 's/^#\\?PasswordAuthentication no/PasswordAuthentication yes/g' /etc/ssh/sshd_config", 'sudo systemctl enable sshd', 'sudo systemctl restart sshd']
    name: cloudinitdisk
  - dataVolume:
      hotpluggable: true
      name: blank-dv-for-hotplug-mig-fph6
    name: blank-dv-for-hotplug
status:
  activePods:
    a719203a-b7b4-4bf5-a5e4-e49472597dc3: cnvqe-038.lab.eng.tlv2.redhat.com
    fbf5cc44-8fdc-4cf6-af1e-dc824eb0292e: cnvqe-037.lab.eng.tlv2.redhat.com
  conditions:
  - lastProbeTime: null
    lastTransitionTime: "2025-09-16T10:42:38Z"
    status: "True"
    type: Ready
  - lastProbeTime: null
    lastTransitionTime: null
    status: "True"
    type: StorageLiveMigratable
  - lastProbeTime: "2025-09-16T10:35:55Z"
    lastTransitionTime: null
    status: "True"
    type: AgentConnected
  - lastProbeTime: null
    lastTransitionTime: null
    message: All of the VMI's DVs are bound and ready
    reason: AllDVsReady
    status: "True"
    type: DataVolumesReady
  - lastProbeTime: null
    lastTransitionTime: null
    status: "True"
    type: LiveMigratable
  currentCPUTopology:
    cores: 1
  guestOSInfo:
    id: fedora
    kernelRelease: 6.13.6-200.fc41.x86_64
    kernelVersion: '#1 SMP PREEMPT_DYNAMIC Fri Mar 7 21:33:48 UTC 2025'
    machine: x86_64
    name: Fedora Linux
    prettyName: Fedora Linux 41 (Cloud Edition)
    version: 41 (Cloud Edition)
    versionId: "41"
  interfaces:
  - infoSource: domain, guest-agent
    interfaceName: eth0
    ipAddress: 10.129.0.254
    ipAddresses:
    - 10.129.0.254
    - fd02:0:0:2::2e7
    linkState: up
    mac: 02:de:4e:7d:71:16
    name: default
    podInterfaceName: eth0
    queueCount: 1
  launcherContainerImageVersion: registry.redhat.io/container-native-virtualization/virt-launcher-rhel9@sha256:999c47180c12246dccb495c816bf6d4bc9232680615e5475dcfcaac94977737f
  machine:
    type: pc-q35-rhel9.6.0
  memory:
    guestAtBoot: 1Gi
    guestCurrent: 1Gi
    guestRequested: 1Gi
  migrationMethod: BlockMigration
  migrationState:
    completed: true
    endTimestamp: "2025-09-16T10:42:38Z"
    migrationConfiguration:
      allowAutoConverge: false
      allowPostCopy: false
      allowWorkloadDisruption: false
      bandwidthPerMigration: "0"
      completionTimeoutPerGiB: 150
      nodeDrainTaintKey: kubevirt.io/drain
      parallelMigrationsPerCluster: 5
      parallelOutboundMigrationsPerNode: 2
      progressTimeout: 150
      unsafeMigrationOverride: false
    migrationUid: 1fd8d768-782d-4313-a47f-8c7cbc1e7951
    mode: PreCopy
    sourceNode: cnvqe-037.lab.eng.tlv2.redhat.com
    sourcePod: virt-launcher-fedora-volume-hotplug-vm-1758018926-4210007-prnhl
    startTimestamp: "2025-09-16T10:42:35Z"
    targetAttachmentPodUID: ae8a2977-d25a-417d-97de-12940159a9a5
    targetDirectMigrationNodePorts:
      "40561": 0
      "41055": 49152
      "46833": 49153
    targetNode: cnvqe-038.lab.eng.tlv2.redhat.com
    targetNodeAddress: 10.129.0.121
    targetNodeDomainDetected: true
    targetNodeDomainReadyTimestamp: "2025-09-16T10:42:38Z"
    targetPod: virt-launcher-fedora-volume-hotplug-vm-1758018926-4210007-mvs77
  migrationTransport: Unix
  nodeName: cnvqe-038.lab.eng.tlv2.redhat.com
  phase: Running
  phaseTransitionTimestamps:
  - phase: Pending
    phaseTransitionTimestamp: "2025-09-16T10:35:27Z"
  - phase: Scheduling
    phaseTransitionTimestamp: "2025-09-16T10:35:27Z"
  - phase: Scheduled
    phaseTransitionTimestamp: "2025-09-16T10:35:37Z"
  - phase: Running
    phaseTransitionTimestamp: "2025-09-16T10:35:39Z"
  qosClass: Burstable
  runtimeUser: 107
  selinuxContext: system_u:object_r:container_file_t:s0:c18,c480
  virtualMachineRevisionName: revision-start-vm-e947626a-6e16-4f3e-baec-804fb912650e-2
  volumeStatus:
  - hotplugVolume:
      attachPodName: hp-volume-6gpsf
      attachPodUID: ae8a2977-d25a-417d-97de-12940159a9a5
    message: Successfully attach hotplugged volume blank-dv-for-hotplug to VM
    name: blank-dv-for-hotplug
    persistentVolumeClaimInfo:
      accessModes:
      - ReadWriteMany
      capacity:
        storage: 1Gi
      claimName: blank-dv-for-hotplug-mig-fph6
      filesystemOverhead: "0"
      requests:
        storage: "1073741824"
      volumeMode: Block
    phase: Ready
    reason: VolumeReady
    target: sda
  - name: cloudinitdisk
    size: 1048576
    target: vdb
  - containerDiskVolume:
      checksum: 643904771
    name: containerdisk
    target: vda
```
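The error names PVC `blank-dv-for-hotplug`. However, the VMI's `status.volumeStatus` at dump time lists only the destination claim `blank-dv-for-hotplug-mig-fph6`, with `accessModes: [ReadWriteMany]`. The following sketch maps each claim in `volumeStatus` to its reported access modes and lists the claims without ReadWriteMany. It uses hypothetical helper names and assumes the VMI is available as a Python dict. The ticket does not include the source PVC itself, so this check cannot show whether `blank-dv-for-hotplug` was RWO.

```python
from __future__ import annotations

from typing import Any


def claim_access_modes(vmi: dict[str, Any]) -> dict[str, list[str]]:
    """Map each PVC claim listed in status.volumeStatus to its reported access modes."""
    modes: dict[str, list[str]] = {}
    for vs in vmi.get("status", {}).get("volumeStatus", []):
        pvc = vs.get("persistentVolumeClaimInfo")
        # Volumes such as cloudinitdisk or containerdisk have no PVC info; skip them.
        if pvc and "claimName" in pvc:
            modes[pvc["claimName"]] = list(pvc.get("accessModes", []))
    return modes


def claims_without_rwx(vmi: dict[str, Any]) -> list[str]:
    """Claims whose reported access modes do not include ReadWriteMany."""
    return [
        name
        for name, access in claim_access_modes(vmi).items()
        if "ReadWriteMany" not in access
    ]


# Trimmed copy of status.volumeStatus from the VMI in this ticket.
vmi = {
    "status": {
        "volumeStatus": [
            {
                "name": "blank-dv-for-hotplug",
                "persistentVolumeClaimInfo": {
                    "accessModes": ["ReadWriteMany"],
                    "claimName": "blank-dv-for-hotplug-mig-fph6",
                    "volumeMode": "Block",
                },
                "phase": "Ready",
                "target": "sda",
            },
            {"name": "cloudinitdisk", "size": 1048576, "target": "vdb"},
            {"name": "containerdisk", "containerDiskVolume": {"checksum": 643904771}, "target": "vda"},
        ]
    }
}

print(claim_access_modes(vmi))
print(claims_without_rwx(vmi))
```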

VMIM:

```yaml
apiVersion: kubevirt.io/v1
kind: VirtualMachineInstanceMigration
metadata:
  annotations:
    kubevirt.io/latest-observed-api-version: v1
    kubevirt.io/storage-observed-api-version: v1
    kubevirt.io/workloadUpdateMigration: ""
  creationTimestamp: "2025-09-16T10:42:08Z"
  generateName: kubevirt-workload-update-
  generation: 1
  labels:
    kubevirt.io/vmi-name: fedora-volume-hotplug-vm-1758018926-4210007
    kubevirt.io/volume-update-migration: fedora-volume-hotplug-vm-1758018926-4210007
  name: kubevirt-workload-update-64jzf
  namespace: storage-migration-test-mtc-storage-class-migration
  resourceVersion: "908732"
  uid: 1fd8d768-782d-4313-a47f-8c7cbc1e7951
spec:
  vmiName: fedora-volume-hotplug-vm-1758018926-4210007
status:
  migrationState:
    completed: true
    endTimestamp: "2025-09-16T10:42:38Z"
    migrationConfiguration:
      allowAutoConverge: false
      allowPostCopy: false
      allowWorkloadDisruption: false
      bandwidthPerMigration: "0"
      completionTimeoutPerGiB: 150
      nodeDrainTaintKey: kubevirt.io/drain
      parallelMigrationsPerCluster: 5
      parallelOutboundMigrationsPerNode: 2
      progressTimeout: 150
      unsafeMigrationOverride: false
    migrationUid: 1fd8d768-782d-4313-a47f-8c7cbc1e7951
    mode: PreCopy
    sourceNode: cnvqe-037.lab.eng.tlv2.redhat.com
    sourcePod: virt-launcher-fedora-volume-hotplug-vm-1758018926-4210007-prnhl
    startTimestamp: "2025-09-16T10:42:35Z"
    targetAttachmentPodUID: ae8a2977-d25a-417d-97de-12940159a9a5
    targetDirectMigrationNodePorts:
      "40561": 0
      "41055": 49152
      "46833": 49153
    targetNode: cnvqe-038.lab.eng.tlv2.redhat.com
    targetNodeAddress: 10.129.0.121
    targetNodeDomainDetected: true
    targetNodeDomainReadyTimestamp: "2025-09-16T10:42:38Z"
    targetPod: virt-launcher-fedora-volume-hotplug-vm-1758018926-4210007-mvs77
  phase: Succeeded
  phaseTransitionTimestamps:
  - phase: Pending
    phaseTransitionTimestamp: "2025-09-16T10:42:08Z"
  - phase: Scheduling
    phaseTransitionTimestamp: "2025-09-16T10:42:34Z"
  - phase: Scheduled
    phaseTransitionTimestamp: "2025-09-16T10:42:34Z"
  - phase: PreparingTarget
    phaseTransitionTimestamp: "2025-09-16T10:42:34Z"
  - phase: TargetReady
    phaseTransitionTimestamp: "2025-09-16T10:42:35Z"
  - phase: Running
    phaseTransitionTimestamp: "2025-09-16T10:42:35Z"
  - phase: Succeeded
    phaseTransitionTimestamp: "2025-09-16T10:42:38Z"
```