Bug

Resolution: Won't Do

Major

WHAT:

KafkaTopicPartitionReplicaSpreadMin is an alert rule that identifies partitions whose replicas are not spread across all AZs. The rule calculates the expected number of replicas in each AZ, then checks the actual replica count in each AZ to see whether any AZ has fewer than expected. For example, for a topic with replication factor 3 across 3 AZs, the expected replica count in each AZ is 1, and we check whether the actual replica count in any AZ is less than 1. If so, the alert fires.

However, I found that the way we calculate the actual replica counts never yields a count of 0 for an AZ.

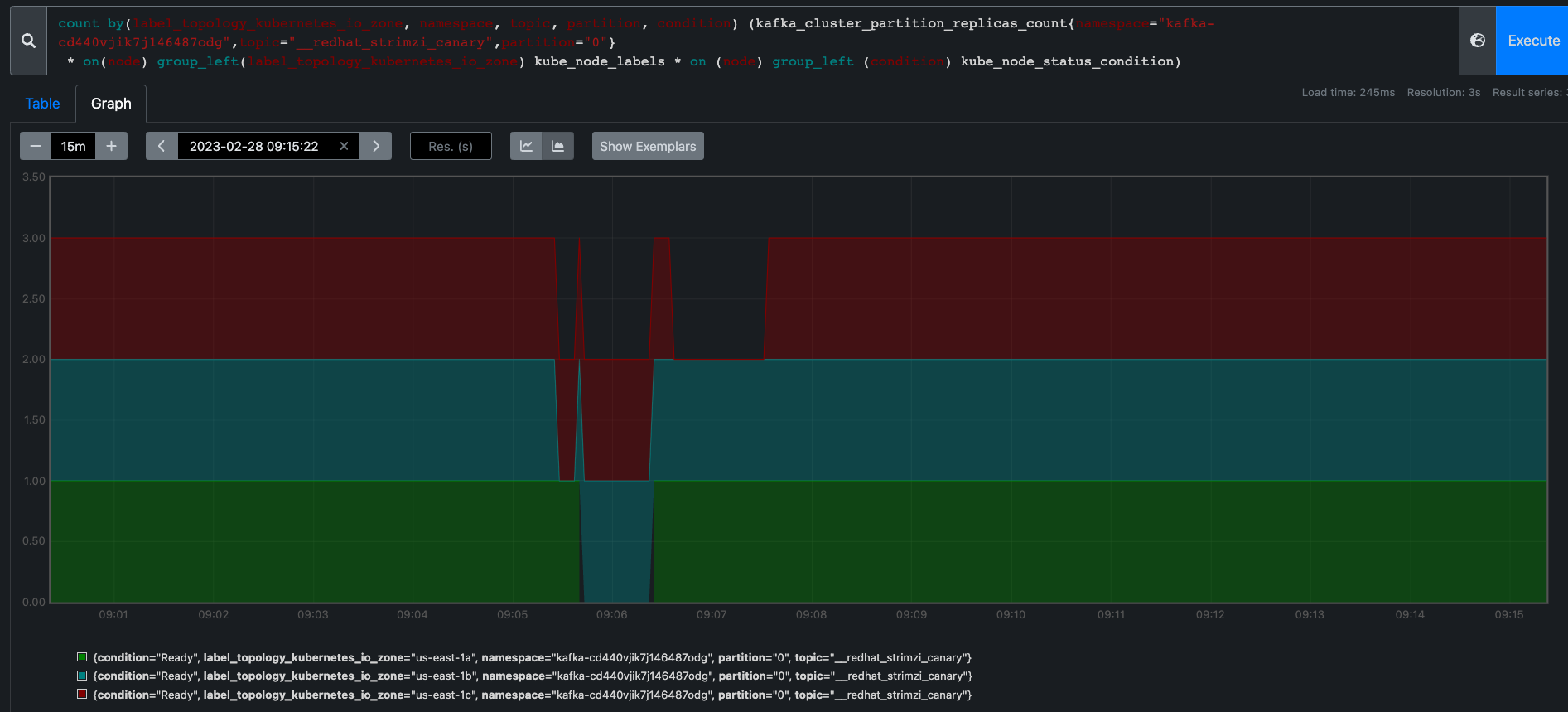

In the graph below, we can see that during the pod rolling at 09:06, only 2 replicas are reported.

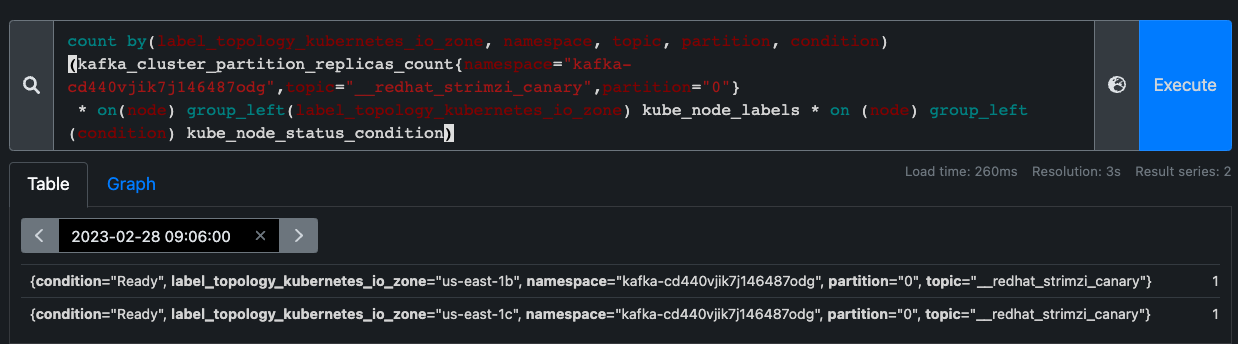

The table view makes it clearer: at 09:06, our current alert rule reports only 2 replicas.

The reason is that the base metric we use, `kafka_cluster_partition_replicas_count`, only reports replicas that exist for a partition. If no replica exists, no value is reported. That means that if one AZ has no replica in the example above, the alert will not be triggered.
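The effect can be reproduced outside Prometheus. The following is a minimal Python sketch, not the actual rule: the sample data, the topic name `orders`, and the zone names `az-a`, `az-b`, `az-c` are hypothetical. It models `count by (...)` as a grouped count, which only produces groups for label combinations that have at least one sample.

```python
from collections import Counter

# Hypothetical samples: one entry per replica that is currently reported,
# labelled with the AZ of the node hosting it. The replica that should be
# in "az-c" is not reported, e.g. because its pod is being rolled.
reported_replicas = [
    {"topic": "orders", "partition": 0, "zone": "az-a"},
    {"topic": "orders", "partition": 0, "zone": "az-b"},
]
expected_per_zone = 1  # replication factor 3 spread over 3 AZs

# Rough equivalent of `count by (zone, topic, partition)`: a group exists
# only if at least one sample carries that label combination.
counts = Counter(
    (r["zone"], r["topic"], r["partition"]) for r in reported_replicas
)

# The alert condition is evaluated per existing group only, so the empty
# AZ is never compared against the expected value.
firing = [key for key, n in counts.items() if n < expected_per_zone]

print(counts)  # two groups (az-a and az-b), each with a count of 1
print(firing)  # empty list: nothing fires although az-c has no replica
```

Because there is no group for `az-c` at all, the comparison against the expected value never runs for that AZ.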

WHY:

KafkaTopicPartitionReplicaSpreadMin cannot fire when an AZ has 0 replicas.

HOW:

a. Include all AZs in the left-hand side of the rule, so that an AZ with no replicas shows a value of 0.

b. Alternatively, delete the KafkaTopicPartitionReplicaSpreadMin rule. The reasons are:

- We already have the KafkaTopicPartitionReplicaSpreadMax rule, which fires when a specific AZ holds more replicas than expected (which already implies that another AZ holds fewer than expected).

- If the problem is that a partition does not have enough replicas, the "UnderReplicatedPartitions" alert fires (number of under-replicated partitions, i.e. |ISR| < |all replicas|).

`count by (label_topology_kubernetes_io_zone, namespace, topic, partition, condition) (kafka_cluster_partition_replicas_count * on (node) group_left (label_topology_kubernetes_io_zone) kube_node_labels * on (node) group_left (condition) kube_node_status_condition)`
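Option (a) amounts to zero-filling the counts over a known list of AZs before comparing them with the expected value. The following is a minimal Python sketch of that idea, not a PromQL implementation: the `ALL_ZONES` list, the helper names `zone_counts` and `zones_below_expected`, and the sample data are all hypothetical.

```python
from collections import Counter

# Assumption: the full list of AZs is known independently of the replica metric.
ALL_ZONES = ["az-a", "az-b", "az-c"]


def zone_counts(reported, all_zones):
    """Count replicas per (zone, topic, partition), filling missing zones with 0."""
    counts = Counter((r["zone"], r["topic"], r["partition"]) for r in reported)
    partitions = {(r["topic"], r["partition"]) for r in reported}
    # Note: a partition with no reported replica in any zone is still invisible.
    return {
        (zone, topic, partition): counts.get((zone, topic, partition), 0)
        for (topic, partition) in partitions
        for zone in all_zones
    }


def zones_below_expected(reported, all_zones, expected_per_zone):
    """Return the (zone, topic, partition) groups with fewer replicas than expected."""
    filled = zone_counts(reported, all_zones)
    return sorted(key for key, n in filled.items() if n < expected_per_zone)


# Hypothetical samples: the replica in "az-c" is not reported.
reported_replicas = [
    {"topic": "orders", "partition": 0, "zone": "az-a"},
    {"topic": "orders", "partition": 0, "zone": "az-b"},
]

print(zones_below_expected(reported_replicas, ALL_ZONES, expected_per_zone=1))
```

With the zero-fill in place, the empty AZ appears as a group with a count of 0, so the "less than expected" comparison can match it. In the real rule, the list of AZs would have to come from a source that does not depend on `kafka_cluster_partition_replicas_count`.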