- Bug
- Resolution: Done
- Critical
- 4.4.1.AM3
- None

In OpenShift Explorer, when scaling a service that has 2 deployments, the scale operation deploys pods from the oldest deployment instead of the latest one. Eventually, these pods are killed by OpenShift.

The workaround is to open the Properties view and issue a scale command on a deployment directly.

However, because scaling is such a front-and-center feature of the explorer, I believe it's critical that we fix it ASAP.

(copied from JBIDE-23412)

The following steps can be seen in this screencast:

scale-to-shows-old-rc.ogv

- ASSERT: have an app running (e.g. nodejs-example)

- ASSERT: in OpenShift Explorer, make sure the app has at least 1 pod: pick "Scale To" in the context menu of the service. The dialog shows that there is currently at least 1 pod.

- ASSERT: in OpenShift Explorer, there is 1 pod shown as a child of the service.

- ASSERT: in the Properties view, pick the "Deployments" tab and see that there is at least 1 deployment (aka replication controller).

- EXEC: in OpenShift Explorer, select the service and pick "Deploy Latest".

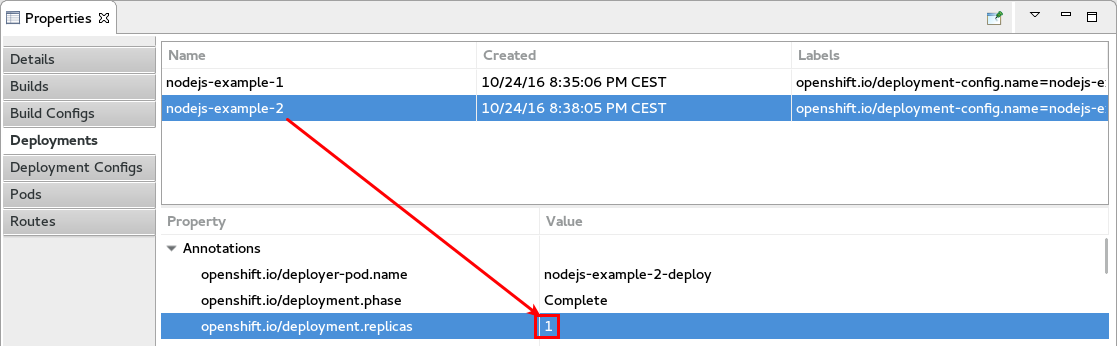

- ASSERT: in the Properties view, the "Deployments" tab now shows 2 deployments.

- ASSERT: in OpenShift Explorer, you now see 2 children (pods) under the service.

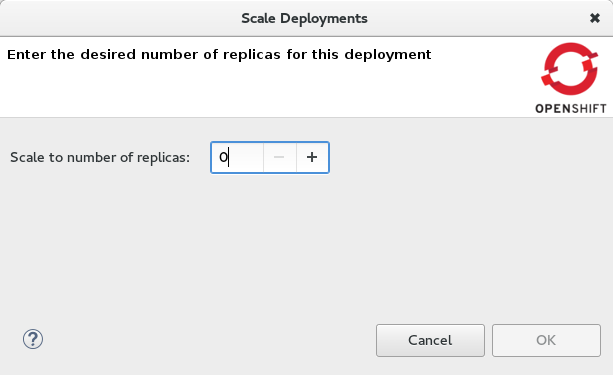

- EXEC: in OpenShift Explorer: pick "Scale To..." in the context menu of the service

Result:

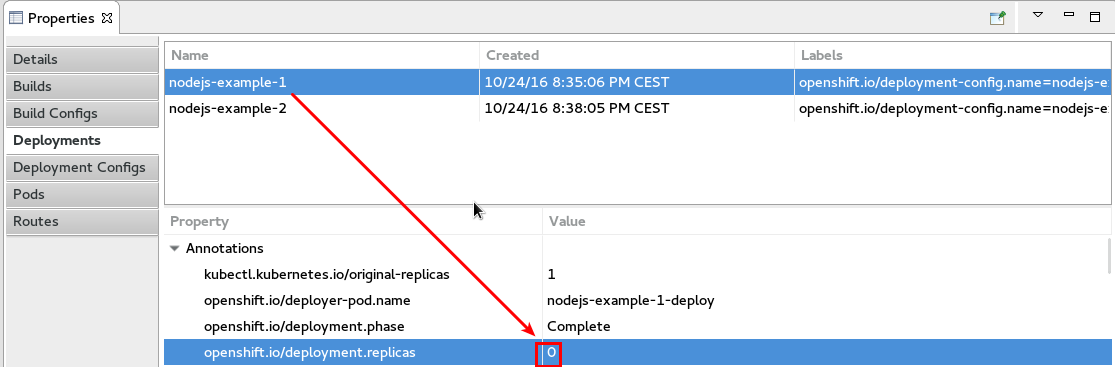

The current number of pods is shown as 0.

But this is certainly not true. Behind the scenes, a new replication controller was created, which deployed a new pod.

The old replication controller was scaled down to 0 pods. The "Scale To" dialog shows the number of replicas of the old replication controller instead of the new one.
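The mismatch described above comes down to which replication controller the dialog reads. The following is a minimal, hypothetical sketch (not the JBoss Tools code) of picking the controller of the most recent deployment rather than the oldest one. It assumes each replication controller exposes a numeric deployment version; OpenShift records this on controllers created by a deployment config, e.g. in the `openshift.io/deployment-config.latest-version` annotation. The `ReplicationController` record and the `latest` helper are illustrative names only.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

public class LatestDeploymentSketch {

    // Hypothetical stand-in for a replication controller: its name, the
    // deployment version it belongs to, and its current replica count.
    record ReplicationController(String name, int deploymentVersion, int replicas) {}

    // Returns the controller with the highest deployment version, i.e. the
    // latest deployment, or empty if the service has no controllers.
    static Optional<ReplicationController> latest(List<ReplicationController> rcs) {
        return rcs.stream()
                .max(Comparator.comparingInt(ReplicationController::deploymentVersion));
    }

    public static void main(String[] args) {
        // State after "Deploy Latest": the old controller was scaled down
        // to 0 and the new one runs the pod. The list is assumed to be
        // ordered oldest first.
        List<ReplicationController> rcs = List.of(
                new ReplicationController("nodejs-example-1", 1, 0),
                new ReplicationController("nodejs-example-2", 2, 1));

        // Reading the oldest controller reproduces the wrong count.
        System.out.println("oldest: " + rcs.get(0).replicas());
        // Reading the latest controller gives the count the dialog should show.
        System.out.println("latest: "
                + latest(rcs).map(ReplicationController::replicas).orElse(0));
    }
}
```

Running `main` prints `oldest: 0` followed by `latest: 1`, mirroring the dialog's wrong value and the expected one. Selecting by version rather than by list position avoids depending on the order in which controllers are returned.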

- duplicates: JBIDE-23412 Scale To: wrong number of replicas is shown if invoked right after "Deploy latest" (Closed)
- is caused by: JBIDE-22259 Server Adapter: Debugging of OS 3 application creates a new replication controller (Closed)
- relates to: JBIDE-22362 Server Adapter: Static changes done to nodejs application are not visible (Closed)