- Type: Story
- Resolution: Done
- Priority: Blocker

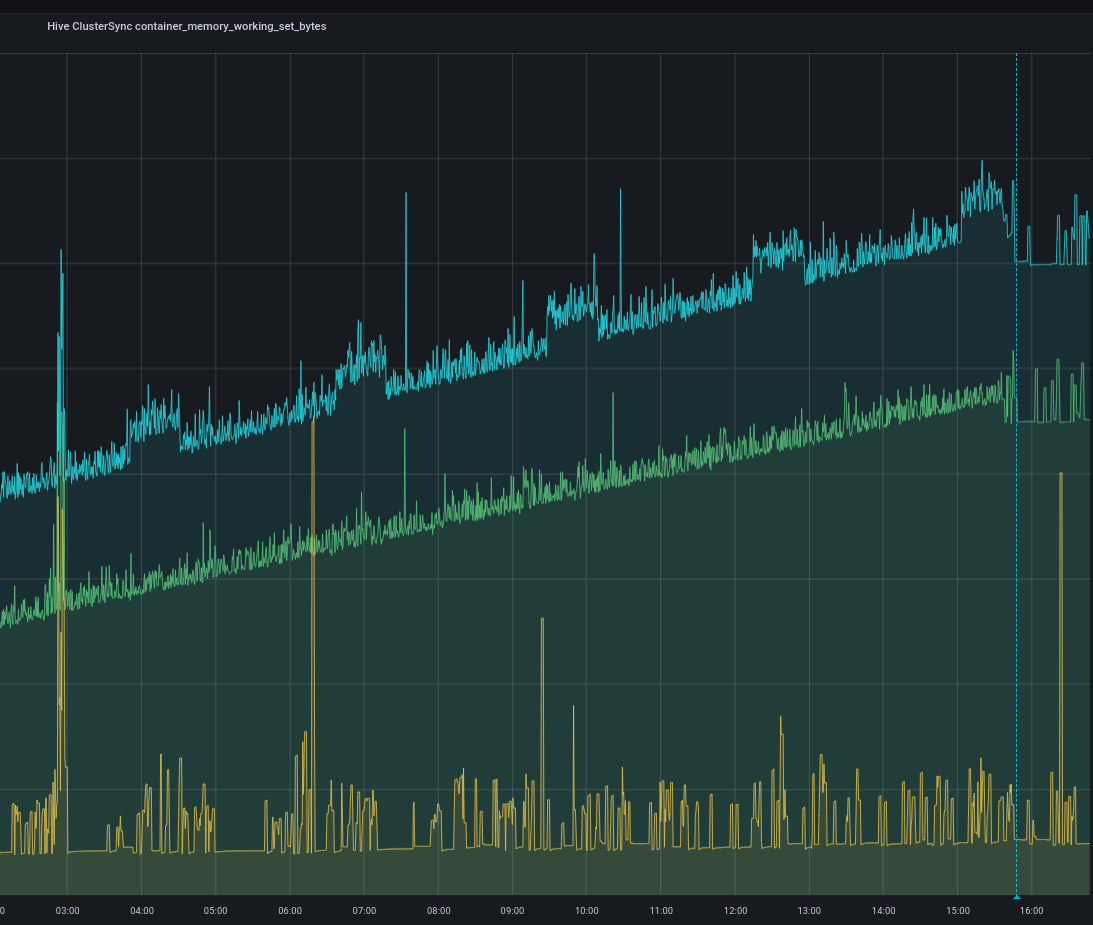

So two things here:

- The green and aqua graphs (replicas 0 and 2) show a clear and serious problem. These replicas were handling syncsets that were failing consistently, but that's no excuse to leak memory like that. The vertical blue line marks where we paused syncsets for the offending clusters (see OHSS-18481), and you can see the graphs level off nicely. However, the excess memory is not reclaimed, and it ought to be.

- The overall trend of the yellow graph (replica 1, no erroring syncsets) is still upward, albeit relatively slowly. So there's leakage even without failing syncsets.

If code inspection doesn't reveal the problem (I looked through and couldn't see anything obvious), we need to profile the thing and dig deeper.
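
Hive is written in Go, so one way to do that profiling is Go's built-in pprof support. The following is a minimal sketch, not Hive's actual wiring: it assumes the clustersync controller binary can blank-import `net/http/pprof`, and the helper names `startPprofServer` and `heapProfileBytes`, the port 6060 and the output file name are all hypothetical choices for illustration.

```go
package main

import (
	"bytes"
	"fmt"
	"log"
	"net/http"
	_ "net/http/pprof" // registers the /debug/pprof/* handlers on http.DefaultServeMux
	"os"
	"path/filepath"
	"runtime"
	"runtime/pprof"
)

// startPprofServer (hypothetical helper) serves the standard pprof endpoints
// on addr from a background goroutine so a running replica can be profiled.
func startPprofServer(addr string) {
	go func() {
		// ListenAndServe only returns on error; log it rather than crash.
		log.Println(http.ListenAndServe(addr, nil))
	}()
}

// heapProfileBytes (hypothetical helper) forces a GC so the profile reflects
// currently live objects, then returns the heap profile in pprof's
// gzip-compressed protobuf format.
func heapProfileBytes() ([]byte, error) {
	runtime.GC()
	var buf bytes.Buffer
	if err := pprof.WriteHeapProfile(&buf); err != nil {
		return nil, err
	}
	return buf.Bytes(), nil
}

func main() {
	// In a long-running controller, startPprofServer("localhost:6060") would
	// be called once at startup; this demo only takes a single snapshot.
	b, err := heapProfileBytes()
	if err != nil {
		log.Fatal(err)
	}
	path := filepath.Join(os.TempDir(), "heap.pb.gz")
	if err := os.WriteFile(path, b, 0o644); err != nil {
		log.Fatal(err)
	}
	fmt.Printf("wrote %d-byte heap profile to %s\n", len(b), path)
}
```

With the server running, `go tool pprof http://localhost:6060/debug/pprof/heap` fetches a live profile (in-use space is the default view). Taking two snapshots some hours apart on a leaking replica and comparing them with `go tool pprof -base heap1.pb.gz heap2.pb.gz` shows which allocation sites grew in between, which is the kind of evidence code inspection alone didn't turn up.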

- is related to
  - HIVE-2038 clustersync controller should watch clustersyncs (Closed)
- relates to
  - HIVE-2399 [itn-2024-00005-followups] Clustersync pods intermittently have memory spikes (In Progress)
  - HIVE-2137 ClusterSync: Detect bogus syncsets and avoid repeatedly applying them (To Do)
  - HIVE-1461 memory leak observed in ACM 2.2 version of Hive (Closed)
