When transaction failure happens, ovn-northd may wait up to duration of a probe interval, which is usually high in high scale environments, before it re-computes the content of the Sb DB. This may cause a significant delay for resources to be created in the Southbound DB and subsequently on the nodes. Resources may also potentially be never propagated if all the probes are disabled and no new database changes happen (unlikely).

In the example below from 500-node cluster-density ovn-heater test from 24.08.2024:

- A new instance of ovn-northd becomes active after Sb leadership transfer.

- It performs a first re-compute.

- Transaction fails, presumably because the previously active northd had an incomplete transaction in-flight before reconnecting to a new leader. (that's kind of OK)

- New active northd waits for 16-ish seconds before starting a new re-compute that succeeds.

The 16 seconds value is likely related to the northd_probe_interval set to 16 seconds.

As a result, a group of ports added to Nb around 15:49:59 was not propagated to Sb Db until 15:50:25 causing the scale test failure.

2024-08-24T15:50:00.070Z|00243|reconnect|INFO|ssl:192.16.0.2:6642: connecting... 2024-08-24T15:50:00.075Z|00244|reconnect|INFO|ssl:192.16.0.2:6642: connected 2024-08-24T15:50:00.077Z|00245|ovn_northd|INFO|ovn-northd lock acquired. This ovn-northd instance is now active. 2024-08-24T15:50:00.077Z|00246|memory_trim|INFO|Detected inactivity (last active 633242 ms ago): trimming memory 2024-08-24T15:50:01.058Z|00247|inc_proc_eng|INFO|node: northd, recompute (forced) took 592ms 2024-08-24T15:50:02.105Z|00248|inc_proc_eng|INFO|node: lr_stateful, recompute (forced) took 1016ms 2024-08-24T15:50:04.287Z|00249|inc_proc_eng|INFO|node: lflow, recompute (forced) took 1682ms 2024-08-24T15:50:04.290Z|00250|timeval|WARN|Unreasonably long 4211ms poll interval (2657ms user, 668ms system) 2024-08-24T15:50:04.294Z|00276|poll_loop|INFO|wakeup due to [POLLIN] on fd 19 (192.16.0.2:45474<->192.16.0.2:6642) at lib/stream-ssl.c:842 (55% CPU usage) 2024-08-24T15:50:04.294Z|00277|ovsdb_idl|WARN|transaction error: {"details":"Transaction causes multiple rows in \"Address_Set\" table to have identical values (pg_deny_igr_NS_density_7823_ip6) for index on column \"name\". First row, with UUID b2c4d455-e575-484c-a43e-e4325d0f1b5d, existed in the database before this transaction and was not modified by the transaction. Second row, with UUID 5663ca4c-9cc4-45c6-9ffd-c632b9cafff4, was inserted by this transaction.","error":"constraint violation"} 2024-08-24T15:50:04.294Z|00278|ovn_northd|INFO|OVNSB commit failed, force recompute next time. <-- Failure detected, marking for re-compute. 2024-08-24T15:50:21.159Z|00279|inc_proc_eng|INFO|node: northd, recompute (forced) took 575ms <-- Actual recompute 16-ish seconds later. 2024-08-24T15:50:22.414Z|00280|inc_proc_eng|INFO|node: lr_stateful, recompute (forced) took 1230ms 2024-08-24T15:50:25.129Z|00281|inc_proc_eng|INFO|node: lflow, recompute (forced) took 2235ms 2024-08-24T15:50:25.132Z|00282|timeval|WARN|Unreasonably long 4826ms poll interval (3518ms user, 527ms system) 2024-08-24T15:50:25.332Z|00315|poll_loop|INFO|wakeup due to [POLLIN] on fd 19 (192.16.0.2:45474<->192.16.0.2:6642) at lib/stream-ssl.c:842 (84% CPU usage) 2024-08-24T15:50:30.687Z|00316|inc_proc_eng|INFO|node: lflow, recompute (failed handler for input port_group) took 2137ms 2024-08-24T15:50:30.690Z|00317|timeval|WARN|Unreasonably long 2233ms poll interval (1447ms user, 144ms system)

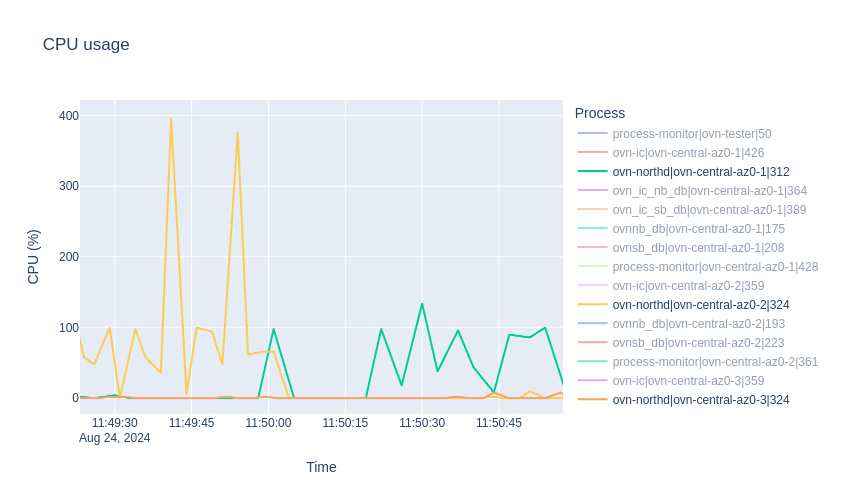

CPU usage graph confirms that the process was sleeping:

ovn-northd should try to resolve the inconsistency by re-computing and re-trying the transaction right away without waiting for some other (external) events to happen.

On a quick glance through the code, there might be a similar issue with ovn-controller as well.

- links to

-

RHBA-2024:140398

ovn24.09 bug fix and enhancement update

RHBA-2024:140398

ovn24.09 bug fix and enhancement update