- Feature Request
- Resolution: Not a Bug
- Major


We have recently encountered some issues with our Debezium service:

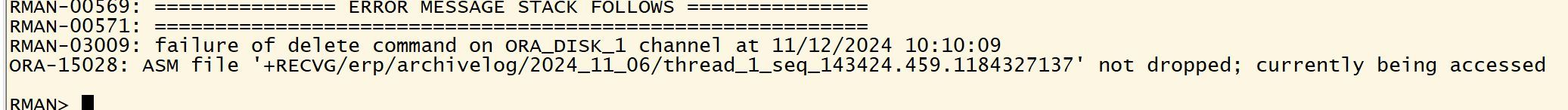

- Oracle database archive log deletion failed
- `java.lang.OutOfMemoryError: Java heap space`

While looking for solutions to the issues above, we found the following relevant configuration options in the documentation.

Other information: we have 3 servers, each with 4 cores and 8 GB of memory. The JVM is given 5 GB, and the servers run 100+ collection tasks in total. Debezium version: 2.7.0.Final.
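As a rough estimate of how much heap each task can use, the sketch below divides one server's heap by the number of tasks on the busiest server. It assumes (our assumption, not a measurement) that each collection task is one Kafka Connect task, that "100+" is rounded down to 100, and that tasks are spread as evenly as possible across the 3 servers. It ignores the heap the Kafka Connect worker itself needs, so the real per-task budget is smaller still. The class and method names are hypothetical.

```java
public class HeapBudget {
    // Approximate heap (MB) available to each task on the busiest worker,
    // assuming tasks are distributed as evenly as possible across workers.
    // Worker overhead is ignored, so this is an upper bound.
    static long heapPerTaskMb(long heapPerWorkerMb, int workers, int tasks) {
        int tasksOnBusiestWorker = (tasks + workers - 1) / workers; // ceiling division
        return heapPerWorkerMb / tasksOnBusiestWorker;              // integer MB, rounded down
    }

    public static void main(String[] args) {
        long heapPerWorkerMb = 5 * 1024; // 5 GB JVM heap per server (from the report)
        int workers = 3;                 // 3 servers
        int tasks = 100;                 // "100+" collection tasks, rounded down (assumption)
        System.out.println(heapPerTaskMb(heapPerWorkerMb, workers, tasks) + " MB per task");
        // prints "150 MB per task"
    }
}
```

With 34 tasks on the busiest server, each task would have roughly 150 MB of heap on average under these assumptions.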

`log.mining.archive.log.hours` controls how many hours back from the current database time (SYSDATE) the connector mines archive logs.

`log.mining.batch.size.min`, `log.mining.batch.size.max`, and `log.mining.batch.size.default` control the size of the SCN range that the connector reads from the redo/archive logs in each mining batch.
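To show how the options above would be set together, here is a minimal sketch that collects them into a `java.util.Properties` object, which mirrors the flat key/value shape of a connector configuration. The numeric values are illustrative placeholders, not tuned recommendations. The check that `min <= default <= max` and that the hour count is non-negative is our own sanity check and is not necessarily validation that Debezium itself performs. The class and method names are hypothetical.

```java
import java.util.Properties;

public class LogMiningConfigSketch {
    // Collects the LogMiner options discussed above into a Properties object.
    // The ordering check is our own assumption about sensible values,
    // not a documented Debezium rule.
    static Properties logMiningProperties(long archiveLogHours, long batchMin,
                                          long batchDefault, long batchMax) {
        if (archiveLogHours < 0) {
            throw new IllegalArgumentException("archive log hours must be >= 0");
        }
        if (batchMin > batchDefault || batchDefault > batchMax) {
            throw new IllegalArgumentException("expected min <= default <= max");
        }
        Properties props = new Properties();
        props.setProperty("log.mining.archive.log.hours", Long.toString(archiveLogHours));
        props.setProperty("log.mining.batch.size.min", Long.toString(batchMin));
        props.setProperty("log.mining.batch.size.default", Long.toString(batchDefault));
        props.setProperty("log.mining.batch.size.max", Long.toString(batchMax));
        return props;
    }

    public static void main(String[] args) {
        // Placeholder values for illustration only.
        Properties props = logMiningProperties(4, 1000, 10000, 50000);
        // Print the keys in sorted order so the output is stable.
        props.stringPropertyNames().stream().sorted()
             .forEach(key -> System.out.println(key + "=" + props.getProperty(key)));
    }
}
```

In a Kafka Connect deployment, the same keys and values would go into the connector's JSON configuration submitted through the REST API.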

My question is: are the settings above helpful for our problem? We want to control the reading speed and traffic volume of the collection tasks so that they fit our server resources. Are there any other related settings that need to be adjusted, for example, limiting the number of tasks run by a single service instance, or other means?

We look forward to your answer.

The error log is as follows:

```
2024-11-04 20:13:34,056 ERROR Oracle|relation_cdc_server_1851185398195687426|streaming Mining session stopped due to error. [io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource]
java.lang.OutOfMemoryError: Java heap space
2024-11-04 20:43:30,518 ERROR Oracle|relation_cdc_server_1851185398195687426|streaming Producer failure [io.debezium.pipeline.ErrorHandler]
java.lang.OutOfMemoryError: Java heap space
2024-11-04 20:43:30,518 ERROR || WorkerSourceTask{id=relation_cdc_server_1851573122545602561_1808443932259332097-0} Task threw an uncaught and unrecoverable exception. Task is being killed and will not recover until manually restarted [org.apache.kafka.connect.runtime.WorkerTask]
org.apache.kafka.connect.errors.ConnectException: An exception occurred in the change event producer. This connector will be stopped.
    at io.debezium.pipeline.ErrorHandler.setProducerThrowable(ErrorHandler.java:67)
    at io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:267)
    at io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:61)
    at io.debezium.pipeline.ChangeEventSourceCoordinator.streamEvents(ChangeEventSourceCoordinator.java:312)
    at io.debezium.pipeline.ChangeEventSourceCoordinator.executeChangeEventSources(ChangeEventSourceCoordinator.java:203)
    at io.debezium.pipeline.ChangeEventSourceCoordinator.lambda$start$0(ChangeEventSourceCoordinator.java:143)
    at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:572)
    at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:317)
    at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1144)
    at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:642)
    at java.base/java.lang.Thread.run(Thread.java:1583)
Caused by: java.lang.OutOfMemoryError: Java heap space
```