Feature Request

Resolution: Obsolete

Major

2.1.4.Final

What Debezium connector do you use and what version?

2.1.4.Final

What is the connector configuration?

<Your answer>

What is the captured database version and mode of deployment?

Oracle 19c

What behavior do you expect?

The connector should either discard or persist the records of transactions that have remained uncommitted for a long time.

If such records are discarded, the time threshold should be configurable.

If persistence is required, the data should be saved to a database or Kafka.
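The discard-or-persist behavior requested above could be modeled as a buffer of open transactions with a configurable retention. The following is a minimal sketch under stated assumptions, not Debezium's implementation or API: `RetentionBuffer`, `OpenTxn`, and the `onExpire` callback are hypothetical names. A no-op callback discards expired transactions (with possible data loss), while a callback that writes to a database or Kafka would persist them instead.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

/**
 * Hypothetical sketch (not Debezium's API): buffers events of open transactions
 * and expires any transaction that stays uncommitted longer than a configurable retention.
 */
final class RetentionBuffer {

    /** An open (not yet committed) transaction and its buffered events. */
    static final class OpenTxn {
        final String id;
        final long startScn;
        final Instant startTime;
        final List<String> events = new ArrayList<>();

        OpenTxn(String id, long startScn, Instant startTime) {
            this.id = id;
            this.startScn = startScn;
            this.startTime = startTime;
        }
    }

    private final Duration retention;
    // Decides what happens to an expired transaction: drop it (no-op) or persist it elsewhere.
    private final Consumer<OpenTxn> onExpire;
    // Insertion-ordered, so iteration visits transactions in the order they were first seen.
    private final Map<String, OpenTxn> open = new LinkedHashMap<>();

    RetentionBuffer(Duration retention, Consumer<OpenTxn> onExpire) {
        this.retention = retention;
        this.onExpire = onExpire;
    }

    /** Buffers an event, registering the transaction on its first event. */
    void add(String txId, long scn, Instant time, String event) {
        open.computeIfAbsent(txId, id -> new OpenTxn(id, scn, time)).events.add(event);
    }

    /** Removes a committed transaction and returns its buffered events. */
    List<String> commit(String txId) {
        OpenTxn txn = open.remove(txId);
        return txn == null ? List.of() : txn.events;
    }

    /** Hands every transaction older than the retention to onExpire and returns how many expired. */
    int expire(Instant now) {
        int expired = 0;
        Iterator<OpenTxn> it = open.values().iterator();
        while (it.hasNext()) {
            OpenTxn txn = it.next();
            if (Duration.between(txn.startTime, now).compareTo(retention) > 0) {
                it.remove();
                onExpire.accept(txn);
                expired++;
            }
        }
        return expired;
    }

    int openCount() {
        return open.size();
    }

    public static void main(String[] args) {
        List<String> persisted = new ArrayList<>();
        // One-hour retention; the callback "persists" by recording the transaction id (illustrative only).
        RetentionBuffer buffer = new RetentionBuffer(Duration.ofHours(1), txn -> persisted.add(txn.id));
        Instant t0 = Instant.parse("2024-10-11T08:00:00Z");
        buffer.add("tx1", 100L, t0, "INSERT ...");
        buffer.add("tx2", 105L, t0.plus(Duration.ofMinutes(50)), "UPDATE ...");
        int expired = buffer.expire(t0.plus(Duration.ofMinutes(90)));
        System.out.println(expired + " expired: " + persisted); // 1 expired: [tx1]
    }
}
```

Before building something like this, it may be worth checking whether the `log.mining.transaction.retention.hours` option described in the Oracle connector documentation for your release already covers the discard case.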

Do you see the same behaviour using the latest released Debezium version?

yes

Do you have the connector logs, ideally from start till finish?

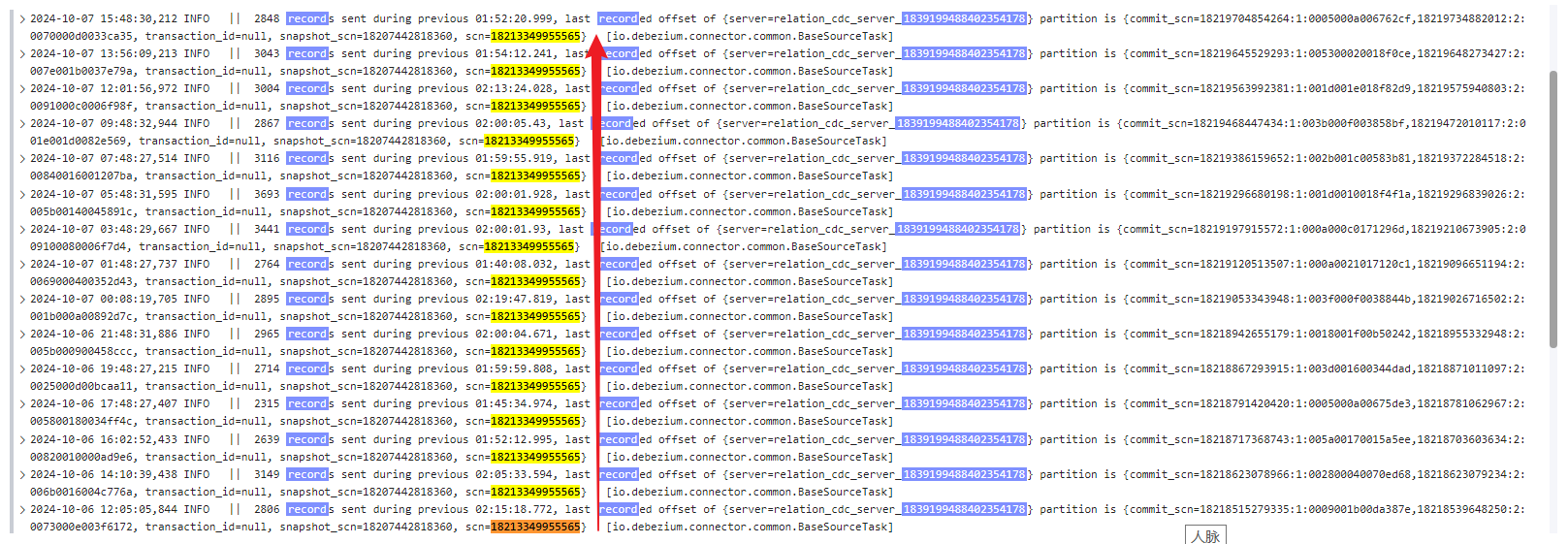

Log detail showing that the SCN was not updated for a long time:

Then, after the connector restarts, it fails because the offset SCN is no longer in the logs:

    org.apache.kafka.connect.errors.ConnectException: An exception occurred in the change event producer. This connector will be stopped.
        at io.debezium.pipeline.ErrorHandler.setProducerThrowable(ErrorHandler.java:53)
        at io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:225)
        at io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:59)
        at io.debezium.pipeline.ChangeEventSourceCoordinator.streamEvents(ChangeEventSourceCoordinator.java:174)
        at io.debezium.pipeline.ChangeEventSourceCoordinator.executeChangeEventSources(ChangeEventSourceCoordinator.java:141)
        at io.debezium.pipeline.ChangeEventSourceCoordinator.lambda$start$0(ChangeEventSourceCoordinator.java:109)
        at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:515)
        at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)
        at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)
        at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)
        at java.base/java.lang.Thread.run(Thread.java:829)
    Caused by: io.debezium.DebeziumException: Online REDO LOG files or archive log files do not contain the offset scn 18162207253753. Please perform a new snapshot.
        at io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:142)
        ... 9 more
    2024-10-11 17:55:28,169 INFO || Stopping down connector [io.debezium.connector.common.BaseSourceTask]

How to reproduce the issue using our tutorial deployment?

Keep a database connection open (do not release it), write to the database, and do not commit the transaction.

Feature request or enhancement

Discard or persist the records of transactions that have remained uncommitted for a long time.

If they are discarded, the time threshold should be configurable; note that discarding may cause data loss.

If persistence is required, save the data to a database or Kafka.

In the reported case, the minimum SCN that the connector needs to resume from is no longer present in the redo or archive logs. If data must not be lost, the buffered transaction data has to be stored persistently, for example in a database or Kafka.
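Why a long-running transaction leads to the restart error can be illustrated with a simplified model. This sketch assumes, without claiming it is Debezium's exact logic, that the resume point must not be later than the start SCN of the oldest still-open transaction, since that transaction's earlier changes would otherwise never be re-read. `ResumeScnSketch`, `resumeScn`, and `canResume` are hypothetical names, and the SCN values are illustrative.

```java
import java.util.Map;

/** Hypothetical, simplified model of why an open transaction pins the resume SCN. */
final class ResumeScnSketch {

    /**
     * The resume point is the smaller of the last mined SCN and the start SCN of
     * every open transaction; a transaction that never commits keeps it from advancing.
     */
    static long resumeScn(long lastMinedScn, Map<String, Long> openTxnStartScns) {
        long resume = lastMinedScn;
        for (long start : openTxnStartScns.values()) {
            resume = Math.min(resume, start);
        }
        return resume;
    }

    /** A restart only works if the oldest SCN still available in redo/archive logs is not after the resume point. */
    static boolean canResume(long resumeScn, long oldestAvailableScn) {
        return resumeScn >= oldestAvailableScn;
    }

    public static void main(String[] args) {
        // Illustrative values: one transaction opened at SCN 100 and was never committed.
        long resume = resumeScn(500L, Map.of("tx-long-running", 100L));
        // Archive logs older than SCN 300 have been removed in this scenario.
        System.out.println("resume from SCN " + resume + ", logs available: " + canResume(resume, 300L));
        // prints: resume from SCN 100, logs available: false
    }
}
```

In this model, once logs older than the pinned SCN are purged, the only ways to avoid the error are to stop pinning (discard the transaction) or to keep its data somewhere other than the logs (persist it), which are the two options this request describes.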

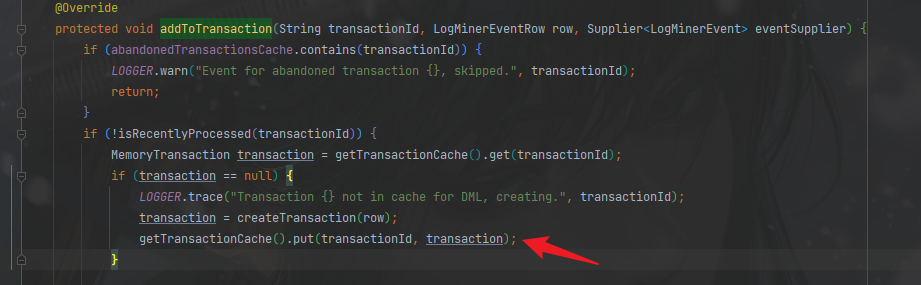

In addition, buffering unprocessed data in memory is risky. A limit on the amount of buffered data should be added, and when it is exceeded, the data could overflow to Kafka, a database, or files. This matters especially in scenarios with a large number of data operations where transactions remain uncommitted, even for a short period of time.

io.debezium.connector.oracle.logminer.processor.memory.MemoryLogMinerEventProcessor#addToTransaction

io.debezium.connector.oracle.logminer.processor.memory.MemoryLogMinerEventProcessor#transactionCache
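A bounded in-memory buffer with overflow, as suggested above, could look roughly like the following. This is a hypothetical sketch, not a change to `MemoryLogMinerEventProcessor`: `SpillingTransactionBuffer`, `OverflowStore`, and `InMemoryOverflowStore` are illustrative names, and a real `OverflowStore` would be backed by Kafka, a database, or files. Once a transaction has spilled, its later events keep spilling so that concatenating the in-memory part with the overflow part preserves event order. Separately, the Oracle connector documentation describes a `log.mining.buffer.type` option with Infinispan-based alternatives to the memory buffer, which may be worth evaluating first.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical sketch: caps in-memory events and spills the rest to a secondary store. */
final class SpillingTransactionBuffer {

    /** Hypothetical secondary store; could be backed by Kafka, a database, or files. */
    interface OverflowStore {
        void append(String txId, String event);

        /** Returns and removes all overflowed events of a transaction, in append order. */
        List<String> drain(String txId);
    }

    /** In-memory stand-in for an OverflowStore, used only to demonstrate the buffer. */
    static final class InMemoryOverflowStore implements OverflowStore {
        private final Map<String, List<String>> data = new HashMap<>();

        public void append(String txId, String event) {
            data.computeIfAbsent(txId, k -> new ArrayList<>()).add(event);
        }

        public List<String> drain(String txId) {
            List<String> events = data.remove(txId);
            return events == null ? new ArrayList<>() : events;
        }
    }

    private final int maxEventsInMemory;
    private final OverflowStore overflow;
    private final Map<String, List<String>> memory = new HashMap<>();
    private final Set<String> spilled = new HashSet<>();
    private int eventsInMemory = 0;

    SpillingTransactionBuffer(int maxEventsInMemory, OverflowStore overflow) {
        this.maxEventsInMemory = maxEventsInMemory;
        this.overflow = overflow;
    }

    void add(String txId, String event) {
        // Spill when the memory limit is reached, or if this transaction already spilled (keeps order).
        if (spilled.contains(txId) || eventsInMemory >= maxEventsInMemory) {
            spilled.add(txId);
            overflow.append(txId, event);
            return;
        }
        memory.computeIfAbsent(txId, k -> new ArrayList<>()).add(event);
        eventsInMemory++;
    }

    /** Returns all events of a committed transaction: in-memory part first, then overflowed part. */
    List<String> commit(String txId) {
        List<String> inMemory = memory.remove(txId);
        List<String> result = inMemory == null ? new ArrayList<>() : new ArrayList<>(inMemory);
        eventsInMemory -= result.size();
        if (spilled.remove(txId)) {
            result.addAll(overflow.drain(txId));
        }
        return result;
    }

    int eventsInMemory() {
        return eventsInMemory;
    }

    public static void main(String[] args) {
        SpillingTransactionBuffer buffer = new SpillingTransactionBuffer(2, new InMemoryOverflowStore());
        buffer.add("tx1", "e1");
        buffer.add("tx1", "e2");
        buffer.add("tx1", "e3"); // memory limit reached, so this event is spilled
        System.out.println(buffer.eventsInMemory()); // 2
        System.out.println(buffer.commit("tx1")); // [e1, e2, e3]
    }
}
```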

Which use case/requirement will be addressed by the proposed feature?

Workloads with a large number of data operations in which transactions remain uncommitted, even for a short period of time.

Implementation ideas (optional)

<Your answer>