Bug

Resolution: Obsolete

Critical

In order to make your issue reports as actionable as possible, please provide the following information, depending on the issue type.

Bug report

For bug reports, provide this information, please:

What Debezium connector do you use and what version?

I am using the Debezium Informix connector, version 2.6.1.Final.

What is the connector configuration?

{
  "connector.class": "io.debezium.connector.informix.InformixConnector",
  "errors.log.include.messages": "true",
  "topic.creation.default.partitions": "1",
  "value.converter.schema.registry.subject.name.strategy": "io.confluent.kafka.serializers.subject.TopicNameStrategy",
  "key.converter.schema.registry.subject.name.strategy": "io.confluent.kafka.serializers.subject.TopicNameStrategy",
  "transforms": "unwrap,TSF1",
  "signal.enabled.channels": "source",
  "errors.deadletterqueue.context.headers.enable": "true",
  "queue.buffering.max.ms": "90000",
  "transforms.unwrap.drop.tombstones": "false",
  "signal.data.collection": "cards_1952.mcp.dbz_signal",
  "topic.creation.default.replication.factor": "1",
  "errors.deadletterqueue.topic.replication.factor": "1",
  "transforms.unwrap.type": "io.debezium.transforms.ExtractNewRecordState",
  "errors.log.enable": "true",
  "key.converter": "io.confluent.connect.avro.AvroConverter",
  "database.user": "kafka",
  "database.dbname": "cards_1952",
  "topic.creation.default.compression.type": "lz4",
  "schema.history.internal.kafka.bootstrap.servers": ".....",
  "value.converter.schema.registry.url": "....",
  "transforms.TSF1.static.value": "150",
  "errors.max.retries": "-1",
  "errors.deadletterqueue.topic.name": "informix-gpdb-source-errors",
  "database.password": "******",
  "name": "new_source",
  "errors.tolerance": "all",
  "send.buffer.bytes": "1000000",
  "pk.mode": "primary_key",
  "snapshot.mode": "schema_only",
  "compression.type": "lz4",
  "incremental.snapshot.chunk.size": "50000",
  "tasks.max": "1",
  "retriable.restart.connector.wait.ms": "60000",
  "transforms.TSF1.static.field": "instance_id",
  "schema.history.internal.store.only.captured.tables.ddl": "true",
  "schema.history.internal.store.only.captured.databases.ddl": "true",
  "transforms.TSF1.type": "org.apache.kafka.connect.transforms.InsertField$Value",
  "tombstones.on.delete": "true",
  "topic.prefix": "....",
  "decimal.handling.mode": "double",
  "schema.history.internal.kafka.topic": "informixschemahistory",
  "value.converter": "io.confluent.connect.avro.AvroConverter",
  "topic.creation.default.cleanup.policy": "compact",
  "skipped.operations": "d",
  "time.precision.mode": "connect",
  "database.server.name": "....",
  "snapshot.isolation.mode": "read_committed",
  "database.port": "...",
  "debug.logs": "true",
  "column.exclude.list": "mcp.kafkatable.value1",
  "database.hostname": "....",
  "table.include.list": "cards_1952.mcp.kafkatable,cards_1952.mcp.dbz_signal",
  "key.converter.schema.registry.url": "...."
}

What is the captured database version and mode of deployment?

I am using the following versions of Informix, and I deploy the Debezium connector through the Kafka Connect UI.

Informix Dynamic Server: 14.10.FC10W1X2

Informix JDBC Driver for Informix Dynamic Server: 4.50.JC10

Kafka version: 7.4.1-ce

What behaviour do you expect?

After adding the "skipped.operations": "d" setting to the Informix source connector, I expect the connector to ignore all delete operations executed on CDC-enabled tables and to stream only insert and update operations.
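To make the expected behaviour concrete, here is a minimal Java sketch of the filtering that "skipped.operations" is expected to apply to emitted change events. It assumes Debezium's op codes (c for insert, u for update, d for delete) and treats "none" as an empty skip list. The class and method names are hypothetical, and this is not the connector's actual implementation.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/**
 * Hypothetical sketch of the behaviour expected from "skipped.operations":
 * change events whose op code is in the skip list are not emitted.
 * This is NOT Debezium's implementation.
 */
public class SkippedOperationsSketch {

    /** Parses a value such as "d" or "u, d" into a set of op codes; "none" yields an empty set. */
    static Set<String> parseSkipped(String value) {
        Set<String> ops = new HashSet<>();
        for (String part : value.split(",")) {
            String op = part.trim();
            if (!op.isEmpty() && !op.equals("none")) {
                ops.add(op);
            }
        }
        return ops;
    }

    /** Keeps only the events whose "op" field is not in the skip set. */
    static List<Map<String, String>> filter(List<Map<String, String>> events, Set<String> skipped) {
        List<Map<String, String>> emitted = new ArrayList<>();
        for (Map<String, String> event : events) {
            if (!skipped.contains(event.get("op"))) {
                emitted.add(event);
            }
        }
        return emitted;
    }

    public static void main(String[] args) {
        // Hypothetical change stream: one insert, two deletes, one update.
        List<Map<String, String>> events = List.of(
                Map.of("op", "c", "id", "1"),
                Map.of("op", "d", "id", "2"),
                Map.of("op", "d", "id", "3"),
                Map.of("op", "u", "id", "1"));
        List<Map<String, String>> emitted = filter(events, parseSkipped("d"));
        // Prints "2 of 4 events emitted": both deletes are dropped.
        System.out.println(emitted.size() + " of " + events.size() + " events emitted");
    }
}
```

The sketch models only which events are emitted. It does not show how much memory the connector uses while it reads changes from the Informix CDC stream before any such filter runs.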

What behaviour do you see?

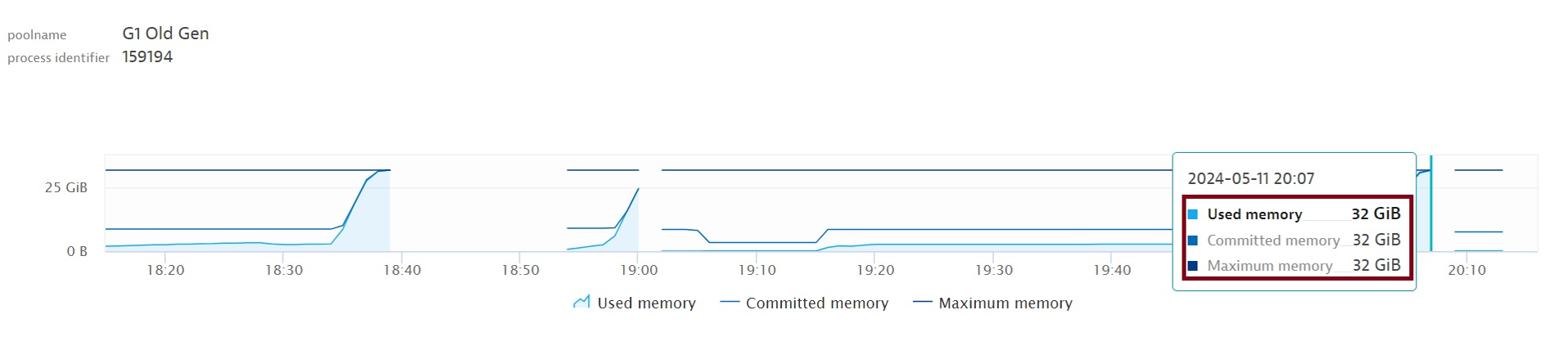

During a cleanup process in which a large amount of data is deleted in a single session from an Informix table that the source connector captures through CDC, the connector fails with java.lang.OutOfMemoryError: Java heap space, even though Kafka is assigned 32 GB of heap memory. The issue has been observed only with deletes, not with inserts or updates. Please advise on how to prevent it.

[2024-04-26 03:29:12,999] ERROR WorkerSourceTask{id=Source Informix-0} Task threw an uncaught and unrecoverable exception. Task is being killed and will not recover until manually restarted (org.apache.kafka.connect.runtime.WorkerTask:210)
java.lang.OutOfMemoryError: Java heap space
[2024-04-26 03:29:12,999] ERROR Uncaught exception in thread 'kafka-producer-network-thread | connect-cluster--offsets': (org.apache.kafka.common.utils.KafkaThread:49)
java.lang.OutOfMemoryError: Java heap space
    at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:567)
    at org.apache.kafka.clients.producer.internals.Sender.runOnce(Sender.java:328)
    at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:243)
    at java.base/java.lang.Thread.run(Thread.java:829)
Exception in thread "IfxSqliConnectCleaner-Thread-1" [2024-04-26 03:29:12,999] INFO [Sink Destination|task-0] Received 0 records (io.confluent.connect.jdbc.sink.JdbcSinkTask:93)