- Bug

- Resolution: Unresolved

- Major

In order to make your issue reports as actionable as possible, please provide the following information, depending on the issue type.

Bug report

For bug reports, provide this information, please:

What Debezium connector do you use and what version?

2.6.0.Final, PostgresConnector

What is the connector configuration?

    {
      "name": "orders-cloud-v1-connector",
      "config": {
        "connector.class": "io.debezium.connector.postgresql.PostgresConnector",
        "plugin.name": "pgoutput",
        "snapshot.mode": "initial",
        "topic.prefix": "orders-cloud-v1",
        "topic.heartbeat.prefix": "sys_debezium-heartbeat",
        "table.include.list": "public.orders",
        "message.key.columns": "public.orders:id",
        "slot.name": "orders_slot",
        "publication.name": "orders_publication",
        "publication.autocreate.mode": "disabled",
        "database.dbname": "productsdb",
        "database.hostname": "localhost",
        "database.port": "5432",
        "database.user": "postgres",
        "database.password": "postgres",
        "key.converter": "org.apache.kafka.connect.storage.StringConverter",
        "key.converter.schemas.enable": false,
        "value.converter": "org.apache.kafka.connect.json.JsonConverter",
        "value.converter.schemas.enable": false,
        "decimal.handling.mode": "precise",
        "tombstones.on.delete": false,
        "null.handling.mode": "keep",
        "heartbeat.interval.ms": "15000",
        "transforms": "filterSignalEvents,tracing,unwrap,ReplaceField,moveHeadersToValue,dropPrefix",
        "transforms.addMetadataHeaders.type": "org.apache.kafka.connect.transforms.HeaderFrom$Value",
        "transforms.addMetadataHeaders.fields": "source,op,transaction",
        "transforms.addMetadataHeaders.headers": "source,op,transaction",
        "transforms.addMetadataHeaders.operation": "copy",
        "transforms.addMetadataHeaders.predicate": "isHeartbeat",
        "transforms.addMetadataHeaders.negate": true,
        "transforms.unwrap.type": "io.debezium.transforms.ExtractNewRecordState",
        "transforms.unwrap.add.fields": "op,lsn",
        "transforms.unwrap.delete.handling.mode": "rewrite",
        "transforms.unwrap.drop.tombstones": "true",
        "transforms.setSchemaMetadata.type": "org.apache.kafka.connect.transforms.SetSchemaMetadata$Value",
        "transforms.setSchemaMetadata.schema.name": "music.Order",
        "transforms.setSchemaMetadata.predicate": "isHeartbeat",
        "transforms.setSchemaMetadata.negate": true,
        "transforms.dropPrefix.type": "org.apache.kafka.connect.transforms.RegexRouter",
        "transforms.dropPrefix.regex": "orders-cloud-v1\\.public\\.(.*)",
        "transforms.dropPrefix.replacement": "music.subscriptions.order.events",
        "transforms.dropPrefix.predicate": "isHeartbeat",
        "transforms.dropPrefix.negate": true,
        "transforms.filterSignalEvents.type": "org.apache.kafka.connect.transforms.Filter",
        "transforms.filterSignalEvents.predicate": "isDebeziumSignal",
        "transforms.tracing.type": "io.debezium.transforms.tracing.ActivateTracingSpan",
        "transforms.tracing.tracing.with.context.field.only": "true",
        "transforms.ReplaceField.type": "org.apache.kafka.connect.transforms.ReplaceField$Value",
        "transforms.ReplaceField.exclude": "tracingspancontext",
        "transforms.moveHeadersToValue.type": "io.debezium.transforms.HeaderToValue",
        "transforms.moveHeadersToValue.operation": "copy",
        "transforms.moveHeadersToValue.headers": "traceparent",
        "transforms.moveHeadersToValue.fields": "traceparent",
        "predicates": "isHeartbeat,isDebeziumSignal",
        "predicates.isHeartbeat.type": "org.apache.kafka.connect.transforms.predicates.TopicNameMatches",
        "predicates.isHeartbeat.pattern": "sys_debezium-heartbeat.*",
        "predicates.isDebeziumSignal.type": "org.apache.kafka.connect.transforms.predicates.TopicNameMatches",
        "predicates.isDebeziumSignal.pattern": "orders-cloud-v1\\.public\\.debezium_signal",
        "signal.enabled.channels": "source",
        "signal.data.collection": "public.debezium_signal"
      }
    }

What is the captured database version and mode of deployment?

(E.g. on-premises, with a specific cloud provider, etc.)

Postgres 15

What behaviour do you expect?

The Debezium ActivateTracingSpan SMT should insert a traceparent header that carries the debezium-read operation's span, as described in the documentation.

What behaviour do you see?

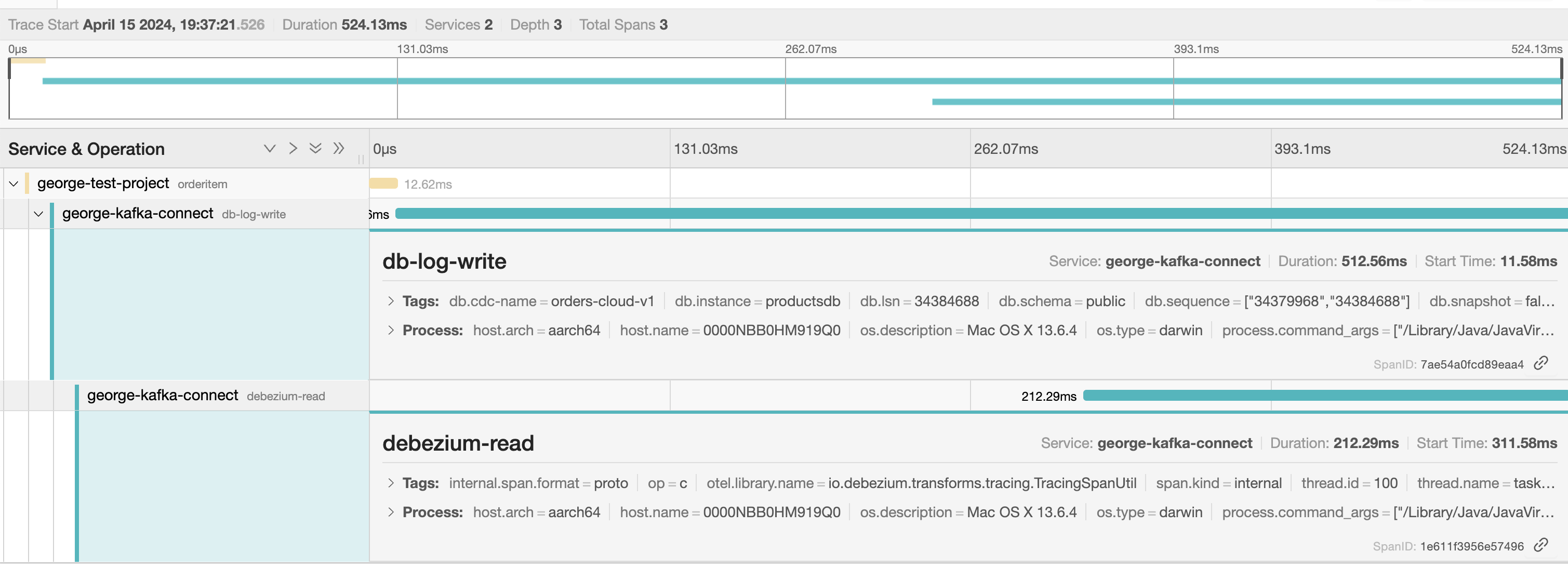

The Debezium ActivateTracingSpan SMT instead inserts a traceparent header that carries the db-log-write operation's span.

As the trace shows, the debezium-read span is 1e611f3956e57496.

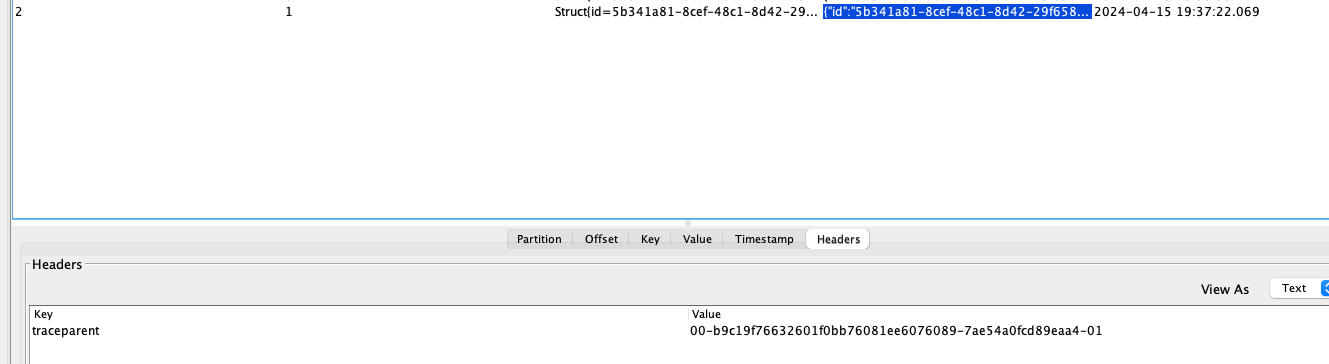

In Offset Explorer, however, the record shows span 7ae54a0fcd89eaa4.

I see the same behaviour with the EventRouter SMT. If I add the Debezium interceptor, it adds the onSend span as a child of the db-log-write span and still sends a traceparent with the db-log-write span to Kafka.
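To check which span a record actually carries, the traceparent header can be split according to the W3C Trace Context layout (`version-traceid-parentid-flags`). The following is only a minimal sketch: `span_id_of` is a hypothetical helper, and the trace ID in the sample header is a placeholder. Only the two span IDs come from this report.

```python
import re

# W3C Trace Context layout:
# version (2 hex) - trace-id (32 hex) - parent-id (16 hex) - flags (2 hex)
TRACEPARENT = re.compile(
    r"^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$"
)


def span_id_of(traceparent: str) -> str:
    """Return the parent-id (span ID) field of a traceparent header value."""
    match = TRACEPARENT.match(traceparent.strip())
    if match is None:
        raise ValueError(f"not a valid traceparent value: {traceparent!r}")
    return match.group(3)


# Span IDs taken from this report.
DEBEZIUM_READ_SPAN = "1e611f3956e57496"
SPAN_SEEN_IN_KAFKA = "7ae54a0fcd89eaa4"

# Assumption: the trace ID below is a placeholder, not the real one.
header = "00-" + "0" * 31 + "1" + "-" + SPAN_SEEN_IN_KAFKA + "-01"

carried = span_id_of(header)
print("span in header:", carried)
print("matches debezium-read:", carried == DEBEZIUM_READ_SPAN)
```

With the values observed here, the comparison is false, which is the mismatch this report describes.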

Do you see the same behaviour using the latest released Debezium version?

(Ideally, also verify with latest Alpha/Beta/CR version)

<Your answer>

Do you have the connector logs, ideally from start till finish?

(You might be asked later to provide DEBUG/TRACE level log)

How to reproduce the issue using our tutorial deployment?

Create table orders:

    create table orders (
        id uuid not null constraint order_pk primary key,
        client_id uuid not null,
        tracingspancontext varchar(255)
    );

    -- create publication and replication slot
    select pg_create_logical_replication_slot('orders_slot', 'pgoutput');
    create publication orders_publication for table orders;

Start Kafka Connect with the OpenTelemetry JARs on the classpath.

Register the connector with the configuration given above via the Kafka Connect REST API.
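The registration step can be scripted roughly as follows. This is a sketch under assumptions: Kafka Connect is taken to listen on its default REST port 8083 on localhost, the helper names `build_register_request` and `register` are made up for illustration, and the payload is abbreviated (the full configuration from this report should be used instead).

```python
import json
import urllib.request

# Assumption: Kafka Connect's REST API is reachable on localhost:8083.
CONNECT_URL = "http://localhost:8083"


def build_register_request(connector: dict, connect_url: str = CONNECT_URL) -> urllib.request.Request:
    """Build the POST /connectors request that registers a connector."""
    return urllib.request.Request(
        url=f"{connect_url}/connectors",
        data=json.dumps(connector).encode("utf-8"),
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )


def register(connector: dict, connect_url: str = CONNECT_URL) -> dict:
    """Send the request; requires a running Kafka Connect instance."""
    with urllib.request.urlopen(build_register_request(connector, connect_url)) as resp:
        return json.load(resp)


# Abbreviated payload for illustration only.
connector = {
    "name": "orders-cloud-v1-connector",
    "config": {
        "connector.class": "io.debezium.connector.postgresql.PostgresConnector",
        "slot.name": "orders_slot",
        "publication.name": "orders_publication",
    },
}

request = build_register_request(connector)
print(request.get_method(), request.full_url)

# To actually register the connector against a running Kafka Connect:
# register(connector)
```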

Feature request or enhancement

For feature requests or enhancements, provide this information, please:

Which use case/requirement will be addressed by the proposed feature?

OpenTelemetry Integration

Implementation ideas (optional)

This issue probably appears because the debezium-read span is started and then closed in a finally block inside the db-log-write operation. The context is put into the record headers only after debezium-read has been closed, so the db-log-write span is written to traceparent. Perhaps the context should be added to the headers before debezium-read is closed.
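The suspected ordering problem can be illustrated with a toy model. This is not Debezium or OpenTelemetry code: `ToyTracer` is a hypothetical stand-in that only tracks which span is currently active, and the header value is the span name rather than a real traceparent string.

```python
from contextlib import contextmanager


class ToyTracer:
    """Toy stand-in for a tracer: keeps a stack of active span names."""

    def __init__(self):
        self._stack = []

    @contextmanager
    def span(self, name):
        self._stack.append(name)
        try:
            yield
        finally:
            # Mirrors a span being closed in a finally block.
            self._stack.pop()

    def current(self):
        return self._stack[-1] if self._stack else None


def inject_after_close(tracer):
    """Suspected current behaviour: headers are written after debezium-read ends."""
    headers = {}
    with tracer.span("db-log-write"):
        with tracer.span("debezium-read"):
            pass  # record processing would happen here
        headers["traceparent"] = tracer.current()
    return headers


def inject_before_close(tracer):
    """Proposed behaviour: headers are written while debezium-read is still active."""
    headers = {}
    with tracer.span("db-log-write"):
        with tracer.span("debezium-read"):
            headers["traceparent"] = tracer.current()
    return headers


print(inject_after_close(ToyTracer()))   # {'traceparent': 'db-log-write'}
print(inject_before_close(ToyTracer()))  # {'traceparent': 'debezium-read'}
```

In this model, moving the header injection inside the inner span is enough to make the header point at debezium-read. Whether the same change is sufficient in the SMT itself would need to be confirmed against the actual code.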