Type: Bug

Resolution: Obsolete

Priority: Major


Bug report


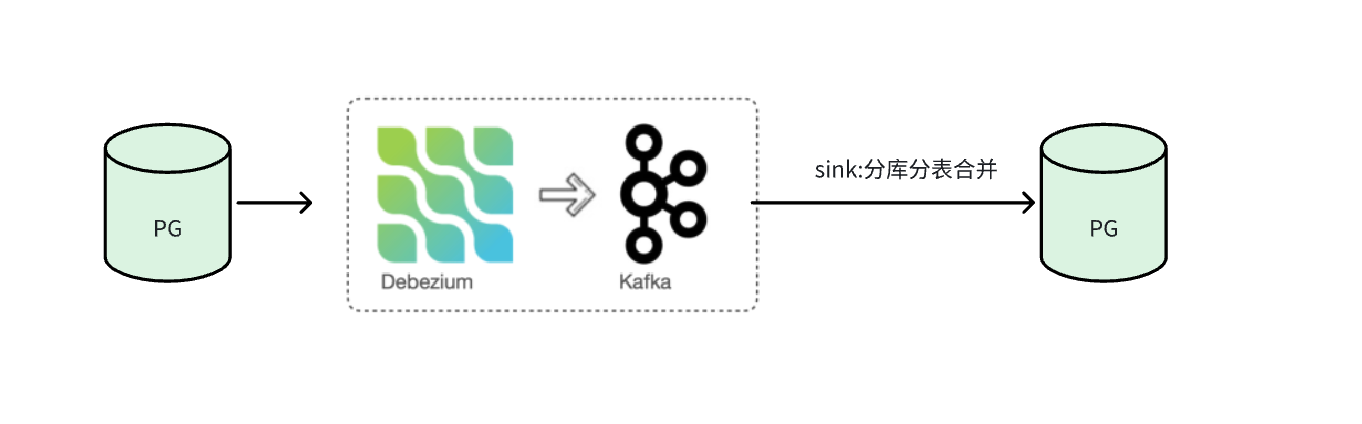

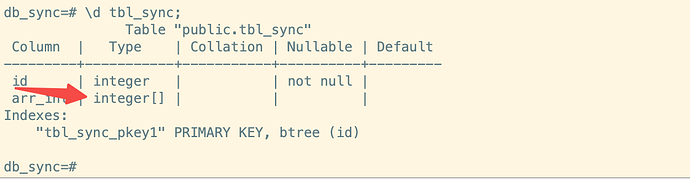

I am using Debezium to synchronize data from PostgreSQL to PostgreSQL, including database and table merging. Tables with array-typed columns cannot be consumed by the JDBC sink connector.

### Source connector config (PostgreSQL to Kafka):

{
  "connector.class": "io.debezium.connector.postgresql.PostgresConnector",
  "database.user": "postgres",
  "database.dbname": "db_sync",
  "topic.creation.default.partitions": "1",
  "slot.name": "pg_233_db_sync",
  "tasks.max": "1",
  "publication.name": "pub_db_sync",
  "transforms": "1",
  "time.precision.mode": "connect",
  "database.port": "5432",
  "plugin.name": "pgoutput",
  "transforms.1.topic.replacement": "$1tbl_sync",
  "transforms.1.key.field.name": "source_table",
  "transforms.1.topic.regex": "(.*)tbl_sync",
  "topic.prefix": "source_pg_233_1",
  "decimal.handling.mode": "string",
  "database.hostname": "host-ip",
  "database.password": "**********",
  "topic.creation.default.replication.factor": "1",
  "name": "source_pg_233_1",
  "transforms.1.type": "io.debezium.transforms.ByLogicalTableRouter",
  "table.include.list": "public.tbl_sync"
}

### Kafka data:

{
  "schema": {
    "type": "struct",
    "fields": [
      {
        "type": "struct",
        "fields": [
          { ... },
          {
            "type": "array",
            "items": { ... },
            "optional": false,
            "field": "arr_int"
          }
        ],
        "optional": true,
        "name": "source_pg_233_1.public.tbl_sync.Value",
        "field": "before"
      },
      {
        "type": "struct",
        "fields": [
          { ... },
          {
            "type": "array",
            "items": { ... },
            "optional": false,
            "field": "arr_int"
          }
        ],
        "optional": true,
        "name": "source_pg_233_1.public.tbl_sync.Value",
        "field": "after"
      },
      {
        "type": "struct",
        "fields": [
          { ... },
          { ... },
          { ... },
          { ... },
          {
            "type": "string",
            "optional": true,
            "name": "io.debezium.data.Enum",
            "version": 1,
            "parameters": { ... },
            "default": "false",
            "field": "snapshot"
          },
          { ... },
          { ... },
          { ... },
          { ... },
          { ... },
          { ... },
          { ... }
        ],
        "optional": false,
        "name": "io.debezium.connector.postgresql.Source",
        "field": "source"
      },
      { ... },
      { ... },
      {
        "type": "struct",
        "fields": [
          { ... },
          { ... },
          { ... }
        ],
        "optional": true,
        "name": "event.block",
        "version": 1,
        "field": "transaction"
      }
    ],
    "optional": false,
    "name": "source_pg_233_1.public.tbl_sync.Envelope",
    "version": 1
  },
  "payload": {
    "before": null,
    "after": { ... },
    "source": { ... },
    "op": "c",
    "ts_ms": 1711378788960,
    "transaction": null
  }
}
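In the schema above, `arr_int` is declared with `"type": "array"` and carries no `"name"`, which lines up with the `ARRAY (null)` reported by the sink. A minimal Python sketch, assuming the record is serialized by the JSON converter with schemas enabled (as shown); `find_unnamed_arrays` is a hypothetical helper, not part of Debezium:

```python
import json


def find_unnamed_arrays(envelope: dict, struct_field: str = "after") -> list:
    """List columns of the `before`/`after` struct whose Connect schema type is
    "array" and that have no logical-type "name"."""
    for field in envelope["schema"]["fields"]:
        if field.get("field") == struct_field and field.get("type") == "struct":
            return [
                col["field"]
                for col in field.get("fields", [])
                if col.get("type") == "array" and not col.get("name")
            ]
    return []


# Trimmed version of the record above. The "id" column is taken from
# primary.key.fields in the sink config; its int32 type is an assumption.
# The arr_int item schema is elided in the report, so it is left out here.
record = json.loads("""
{
  "schema": {
    "type": "struct",
    "fields": [
      {
        "type": "struct",
        "fields": [
          {"type": "int32", "optional": false, "field": "id"},
          {"type": "array", "optional": false, "field": "arr_int"}
        ],
        "optional": true,
        "name": "source_pg_233_1.public.tbl_sync.Value",
        "field": "after"
      }
    ],
    "optional": false,
    "name": "source_pg_233_1.public.tbl_sync.Envelope"
  },
  "payload": {"before": null, "op": "c"}
}
""")

print(find_unnamed_arrays(record))  # ['arr_int']
```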

### Sink connector config (Kafka to PostgreSQL):

{
  "connector.class": "io.debezium.connector.jdbc.JdbcSinkConnector",
  "connection.password": "*******",
  "primary.key.mode": "record_key",
  "tasks.max": "1",
  "batch.size": "3000",
  "connection.username": "postgres",
  "transforms": "unwrap,route",
  "topics.regex": "source_pg_233(.*)public.tbl_(.*)",
  "transforms.route.type": "org.apache.kafka.connect.transforms.RegexRouter",
  "transforms.route.regex": "([^.]+)\\.([^.]+)\\.([^.]+)",
  "delete.enabled": "true",
  "auto.evolve": "true",
  "schema.evolution": "basic",
  "name": "p1",
  "auto.create": "true",
  "primary.key.fields": "id",
  "transforms.unwrap.type": "io.debezium.transforms.ExtractNewRecordState",
  "connection.url": "jdbc:postgresql://ip:5432/db_sync",
  "errors.log.enable": "true",
  "transforms.route.replacement": "$3",
  "insert.mode": "upsert"
}
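Both the source and sink configs rename topics with regular expressions. The following is a minimal Python sketch of the resulting topic path. It assumes Debezium's default `<topic.prefix>.<schema>.<table>` topic naming and that both SMTs, like Kafka's `RegexRouter`, rewrite a topic only when the regex matches the whole name. Python's `re` stands in for Java regex, which is adequate for these simple patterns. `java_style_replace` is a hypothetical helper:

```python
import re


def java_style_replace(pattern: str, replacement: str, topic: str) -> str:
    """Approximate a regex topic router: if `pattern` matches the WHOLE topic,
    substitute Java-style $n group references; otherwise keep the topic."""
    m = re.fullmatch(pattern, topic)
    if m is None:
        return topic
    # Translate Java "$1" group references into Python "\g<1>" syntax.
    py_repl = re.sub(r"\$(\d+)", r"\\g<\1>", replacement)
    return m.expand(py_repl)


# Default Debezium PostgreSQL topic name: <topic.prefix>.<schema>.<table>
topic = "source_pg_233_1.public.tbl_sync"

# Source side: ByLogicalTableRouter (transforms.1.*)
routed = java_style_replace(r"(.*)tbl_sync", "$1tbl_sync", topic)

# Sink side: topics.regex subscription, then RegexRouter (transforms.route.*)
subscribed = re.fullmatch(r"source_pg_233(.*)public.tbl_(.*)", routed) is not None
table = java_style_replace(r"([^.]+)\.([^.]+)\.([^.]+)", "$3", routed)

print(routed)      # source_pg_233_1.public.tbl_sync
print(subscribed)  # True
print(table)       # tbl_sync
```

Under these assumptions, the source-side router leaves `source_pg_233_1.public.tbl_sync` unchanged, the sink subscribes to it, and rows are written to a table named `tbl_sync`. Topic routing therefore does not appear to be involved in the `ARRAY (null)` failure.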

What Debezium connector do you use and what version?

2.5.0

What is the connector configuration?

See the source and sink connector configurations above.

What is the captured database version and mode of deployment?

PostgreSQL 15

What behaviour do you expect?

The JDBC sink connector should consume the change events from Kafka and write array-typed columns (such as `arr_int`) to the target PostgreSQL database.

What behaviour do you see?

The sink task fails. Connector status:

{
  "name": "p1",
  "connector": { ... },
  "tasks": [ ... ],
  "type": "sink"
}
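The status object above has the shape returned by the Kafka Connect REST endpoint `GET /connectors/{name}/status`, but its `connector` and `tasks` entries did not survive in the report. The following minimal Python sketch uses only the standard library to fetch that status and extract the trace of any `FAILED` task. The worker address and the sample values are assumptions:

```python
import json
import urllib.request


def fetch_status(base_url: str, name: str) -> dict:
    """GET <base_url>/connectors/<name>/status from a Kafka Connect worker."""
    with urllib.request.urlopen(f"{base_url}/connectors/{name}/status") as resp:
        return json.load(resp)


def failed_tasks(status: dict) -> list:
    """Return (task id, first line of trace) for every task in state FAILED."""
    result = []
    for task in status.get("tasks", []):
        if task.get("state") == "FAILED":
            trace = task.get("trace", "")
            result.append((task["id"], trace.splitlines()[0] if trace else ""))
    return result


# Illustrative status, not captured from the reporter's cluster; the trace's
# first line is copied from the log below.
sample = {
    "name": "p1",
    "connector": {"state": "RUNNING", "worker_id": "worker:8083"},
    "tasks": [{
        "id": 0,
        "state": "FAILED",
        "worker_id": "worker:8083",
        "trace": "org.apache.kafka.connect.errors.ConnectException: "
                 "Exiting WorkerSinkTask due to unrecoverable exception.\n\tat ...",
    }],
    "type": "sink",
}
print(failed_tasks(sample))
```

Against a live worker this would be called as `failed_tasks(fetch_status("http://localhost:8083", "p1"))`, where the address is hypothetical. The log states that the task "will not recover until manually restarted", so once the cause is fixed, the task can be restarted with `POST /connectors/p1/tasks/0/restart`.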

Do you see the same behaviour using the latest released Debezium version?

(Ideally, also verify with latest Alpha/Beta/CR version)

Not tested with other versions.

Do you have the connector logs, ideally from start till finish?

(You might be asked later to provide DEBUG/TRACE level log)

[2024-03-23 14:39:35,098] INFO [Consumer clientId=connector-consumer-test-db-marketing-0, groupId=connect-test-db-marketing] Resetting offset for partition test_db_marketing.public.tbl_coupon_template-0 to position FetchPosition{offset=0, offsetEpoch=Optional.empty, currentLeader=LeaderAndEpoch{leader=Optional[1:9092 (id: 0 rack: null)], epoch=0}}. (org.apache.kafka.clients.consumer.internals.SubscriptionState:398)

[2024-03-23 14:39:35,115] ERROR Failed to process record: Failed to process a sink record (io.debezium.connector.jdbc.JdbcSinkConnectorTask:112)

org.apache.kafka.connect.errors.ConnectException: Failed to process a sink record

at io.debezium.connector.jdbc.JdbcChangeEventSink.buildRecordSinkDescriptor(JdbcChangeEventSink.java:181)

at io.debezium.connector.jdbc.JdbcChangeEventSink.execute(JdbcChangeEventSink.java:85)

at io.debezium.connector.jdbc.JdbcSinkConnectorTask.put(JdbcSinkConnectorTask.java:103)

at org.apache.kafka.connect.runtime.WorkerSinkTask.deliverMessages(WorkerSinkTask.java:582)

at org.apache.kafka.connect.runtime.WorkerSinkTask.poll(WorkerSinkTask.java:330)

at org.apache.kafka.connect.runtime.WorkerSinkTask.iteration(WorkerSinkTask.java:232)

at org.apache.kafka.connect.runtime.WorkerSinkTask.execute(WorkerSinkTask.java:201)

at org.apache.kafka.connect.runtime.WorkerTask.doRun(WorkerTask.java:188)

at org.apache.kafka.connect.runtime.WorkerTask.run(WorkerTask.java:237)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:572)

at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:317)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1144)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:642)

at java.base/java.lang.Thread.run(Thread.java:1583)

Caused by: org.apache.kafka.connect.errors.ConnectException: Failed to resolve column type for schema: ARRAY (null)

at io.debezium.connector.jdbc.dialect.GeneralDatabaseDialect.getSchemaType(GeneralDatabaseDialect.java:481)

at io.debezium.connector.jdbc.SinkRecordDescriptor$FieldDescriptor.<init>(SinkRecordDescriptor.java:195)

at io.debezium.connector.jdbc.SinkRecordDescriptor$Builder.applyNonKeyField(SinkRecordDescriptor.java:483)

at io.debezium.connector.jdbc.SinkRecordDescriptor$Builder.applyNonKeyFields(SinkRecordDescriptor.java:476)

at io.debezium.connector.jdbc.SinkRecordDescriptor$Builder.readSinkRecordNonKeyData(SinkRecordDescriptor.java:458)

at io.debezium.connector.jdbc.SinkRecordDescriptor$Builder.build(SinkRecordDescriptor.java:313)

at io.debezium.connector.jdbc.JdbcChangeEventSink.buildRecordSinkDescriptor(JdbcChangeEventSink.java:178)

... 13 more

[2024-03-23 14:39:35,688] INFO WorkerSourceTask{id=test_db_payment_001-0} flushing 0 outstanding messages for offset commit (org.apache.kafka.connect.runtime.WorkerSourceTask:510)

[2024-03-23 14:39:37,352] INFO WorkerSourceTask{id=test_db_bo_shop-0} flushing 0 outstanding messages for offset commit (org.apache.kafka.connect.runtime.WorkerSourceTask:510)

[2024-03-23 14:39:39,380] INFO WorkerSourceTask{id=source_pg_233_1-0} flushing 0 outstanding messages for offset commit (org.apache.kafka.connect.runtime.WorkerSourceTask:510)

[2024-03-23 14:39:39,808] INFO WorkerSourceTask{id=test_db_payment-0} flushing 0 outstanding messages for offset commit (org.apache.kafka.connect.runtime.WorkerSourceTask:510)

[2024-03-23 14:39:39,808] INFO WorkerSourceTask{id=thanos_db_payment-0} flushing 0 outstanding messages for offset commit (org.apache.kafka.connect.runtime.WorkerSourceTask:510)

[2024-03-23 14:39:39,808] INFO WorkerSourceTask{id=test_db_vulcan-0} flushing 0 outstanding messages for offset commit (org.apache.kafka.connect.runtime.WorkerSourceTask:510)

[2024-03-23 14:39:41,665] INFO WorkerSourceTask{id=source_tbl_international_language_data_version-0} flushing 0 outstanding messages for offset commit (org.apache.kafka.connect.runtime.WorkerSourceTask:510)

[2024-03-23 14:39:42,377] ERROR JDBC sink connector failure (io.debezium.connector.jdbc.JdbcSinkConnectorTask:95)

org.apache.kafka.connect.errors.ConnectException: Failed to process a sink record

at io.debezium.connector.jdbc.JdbcChangeEventSink.buildRecordSinkDescriptor(JdbcChangeEventSink.java:181)

at io.debezium.connector.jdbc.JdbcChangeEventSink.execute(JdbcChangeEventSink.java:85)

at io.debezium.connector.jdbc.JdbcSinkConnectorTask.put(JdbcSinkConnectorTask.java:103)

at org.apache.kafka.connect.runtime.WorkerSinkTask.deliverMessages(WorkerSinkTask.java:582)

at org.apache.kafka.connect.runtime.WorkerSinkTask.poll(WorkerSinkTask.java:330)

at org.apache.kafka.connect.runtime.WorkerSinkTask.iteration(WorkerSinkTask.java:232)

at org.apache.kafka.connect.runtime.WorkerSinkTask.execute(WorkerSinkTask.java:201)

at org.apache.kafka.connect.runtime.WorkerTask.doRun(WorkerTask.java:188)

at org.apache.kafka.connect.runtime.WorkerTask.run(WorkerTask.java:237)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:572)

at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:317)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1144)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:642)

at java.base/java.lang.Thread.run(Thread.java:1583)

Caused by: org.apache.kafka.connect.errors.ConnectException: Failed to resolve column type for schema: ARRAY (null)

at io.debezium.connector.jdbc.dialect.GeneralDatabaseDialect.getSchemaType(GeneralDatabaseDialect.java:481)

at io.debezium.connector.jdbc.SinkRecordDescriptor$FieldDescriptor.<init>(SinkRecordDescriptor.java:195)

at io.debezium.connector.jdbc.SinkRecordDescriptor$Builder.applyNonKeyField(SinkRecordDescriptor.java:483)

at io.debezium.connector.jdbc.SinkRecordDescriptor$Builder.applyNonKeyFields(SinkRecordDescriptor.java:476)

at io.debezium.connector.jdbc.SinkRecordDescriptor$Builder.readSinkRecordNonKeyData(SinkRecordDescriptor.java:458)

at io.debezium.connector.jdbc.SinkRecordDescriptor$Builder.build(SinkRecordDescriptor.java:313)

at io.debezium.connector.jdbc.JdbcChangeEventSink.buildRecordSinkDescriptor(JdbcChangeEventSink.java:178)

... 13 more

[2024-03-23 14:39:42,378] ERROR WorkerSinkTask{id=test-db-marketing-0} Task threw an uncaught and unrecoverable exception. Task is being killed and will not recover until manually restarted. Error: JDBC sink connector failure (org.apache.kafka.connect.runtime.WorkerSinkTask:608)

org.apache.kafka.connect.errors.ConnectException: JDBC sink connector failure

at io.debezium.connector.jdbc.JdbcSinkConnectorTask.put(JdbcSinkConnectorTask.java:96)

at org.apache.kafka.connect.runtime.WorkerSinkTask.deliverMessages(WorkerSinkTask.java:582)

at org.apache.kafka.connect.runtime.WorkerSinkTask.poll(WorkerSinkTask.java:330)

at org.apache.kafka.connect.runtime.WorkerSinkTask.iteration(WorkerSinkTask.java:232)

at org.apache.kafka.connect.runtime.WorkerSinkTask.execute(WorkerSinkTask.java:201)

at org.apache.kafka.connect.runtime.WorkerTask.doRun(WorkerTask.java:188)

at org.apache.kafka.connect.runtime.WorkerTask.run(WorkerTask.java:237)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:572)

at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:317)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1144)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:642)

at java.base/java.lang.Thread.run(Thread.java:1583)

Caused by: org.apache.kafka.connect.errors.ConnectException: Failed to process a sink record

at io.debezium.connector.jdbc.JdbcChangeEventSink.buildRecordSinkDescriptor(JdbcChangeEventSink.java:181)

at io.debezium.connector.jdbc.JdbcChangeEventSink.execute(JdbcChangeEventSink.java:85)

at io.debezium.connector.jdbc.JdbcSinkConnectorTask.put(JdbcSinkConnectorTask.java:103)

... 11 more

Caused by: org.apache.kafka.connect.errors.ConnectException: Failed to resolve column type for schema: ARRAY (null)

at io.debezium.connector.jdbc.dialect.GeneralDatabaseDialect.getSchemaType(GeneralDatabaseDialect.java:481)

at io.debezium.connector.jdbc.SinkRecordDescriptor$FieldDescriptor.<init>(SinkRecordDescriptor.java:195)

at io.debezium.connector.jdbc.SinkRecordDescriptor$Builder.applyNonKeyField(SinkRecordDescriptor.java:483)

at io.debezium.connector.jdbc.SinkRecordDescriptor$Builder.applyNonKeyFields(SinkRecordDescriptor.java:476)

at io.debezium.connector.jdbc.SinkRecordDescriptor$Builder.readSinkRecordNonKeyData(SinkRecordDescriptor.java:458)

at io.debezium.connector.jdbc.SinkRecordDescriptor$Builder.build(SinkRecordDescriptor.java:313)

at io.debezium.connector.jdbc.JdbcChangeEventSink.buildRecordSinkDescriptor(JdbcChangeEventSink.java:178)

... 13 more

[2024-03-23 14:39:42,378] ERROR WorkerSinkTask{id=test-db-marketing-0} Task threw an uncaught and unrecoverable exception. Task is being killed and will not recover until manually restarted (org.apache.kafka.connect.runtime.WorkerTask:190)

org.apache.kafka.connect.errors.ConnectException: Exiting WorkerSinkTask due to unrecoverable exception.

at org.apache.kafka.connect.runtime.WorkerSinkTask.deliverMessages(WorkerSinkTask.java:610)

at org.apache.kafka.connect.runtime.WorkerSinkTask.poll(WorkerSinkTask.java:330)

at org.apache.kafka.connect.runtime.WorkerSinkTask.iteration(WorkerSinkTask.java:232)

at org.apache.kafka.connect.runtime.WorkerSinkTask.execute(WorkerSinkTask.java:201)

at org.apache.kafka.connect.runtime.WorkerTask.doRun(WorkerTask.java:188)

at org.apache.kafka.connect.runtime.WorkerTask.run(WorkerTask.java:237)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:572)

at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:317)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1144)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:642)

at java.base/java.lang.Thread.run(Thread.java:1583)

Caused by: org.apache.kafka.connect.errors.ConnectException: JDBC sink connector failure

at io.debezium.connector.jdbc.JdbcSinkConnectorTask.put(JdbcSinkConnectorTask.java:96)

at org.apache.kafka.connect.runtime.WorkerSinkTask.deliverMessages(WorkerSinkTask.java:582)

... 10 more

Caused by: org.apache.kafka.connect.errors.ConnectException: Failed to process a sink record

at io.debezium.connector.jdbc.JdbcChangeEventSink.buildRecordSinkDescriptor(JdbcChangeEventSink.java:181)

at io.debezium.connector.jdbc.JdbcChangeEventSink.execute(JdbcChangeEventSink.java:85)

at io.debezium.connector.jdbc.JdbcSinkConnectorTask.put(JdbcSinkConnectorTask.java:103)

... 11 more

Caused by: org.apache.kafka.connect.errors.ConnectException: Failed to resolve column type for schema: ARRAY (null)

at io.debezium.connector.jdbc.dialect.GeneralDatabaseDialect.getSchemaType(GeneralDatabaseDialect.java:481)

at io.debezium.connector.jdbc.SinkRecordDescriptor$FieldDescriptor.<init>(SinkRecordDescriptor.java:195)

at io.debezium.connector.jdbc.SinkRecordDescriptor$Builder.applyNonKeyField(SinkRecordDescriptor.java:483)

at io.debezium.connector.jdbc.SinkRecordDescriptor$Builder.applyNonKeyFields(SinkRecordDescriptor.java:476)

at io.debezium.connector.jdbc.SinkRecordDescriptor$Builder.readSinkRecordNonKeyData(SinkRecordDescriptor.java:458)

at io.debezium.connector.jdbc.SinkRecordDescriptor$Builder.build(SinkRecordDescriptor.java:313)

at io.debezium.connector.jdbc.JdbcChangeEventSink.buildRecordSinkDescriptor(JdbcChangeEventSink.java:178)

... 13 more

[2024-03-23 14:39:42,378] INFO Closing session. (io.debezium.connector.jdbc.JdbcChangeEventSink:228)

[2024-03-23 14:39:42,378] INFO Closing the session factory (io.debezium.connector.jdbc.JdbcSinkConnectorTask:143)
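Every trace above bottoms out in `GeneralDatabaseDialect.getSchemaType` with `ARRAY (null)`, which reads as the Connect schema type (`ARRAY`) followed by the schema name (`null`). The toy Python model below is not Debezium's code. It assumes only that the sink resolves a column type by logical schema name first and by primitive Connect type second, and that no `ARRAY` entry exists in this version. Both assumptions are consistent with the error. `REGISTRY` and `resolve_column_type` are hypothetical:

```python
from typing import Optional


class UnresolvedTypeError(Exception):
    """Raised when no column type is registered for a Connect schema."""


# Hypothetical registry keyed by logical schema name or primitive Connect type.
# It intentionally has no "ARRAY" entry, to mirror the failure in the log.
REGISTRY = {
    "io.debezium.data.Enum": "text",
    "INT32": "integer",
    "STRING": "text",
}


def resolve_column_type(schema_type: str, schema_name: Optional[str]) -> str:
    # 1) Prefer the logical type, identified by the schema name.
    if schema_name is not None and schema_name in REGISTRY:
        return REGISTRY[schema_name]
    # 2) Fall back to the primitive Connect schema type.
    if schema_type in REGISTRY:
        return REGISTRY[schema_type]
    # Same "<type> (<name>)" shape as the logged message; a missing name prints as null.
    shown = "null" if schema_name is None else schema_name
    raise UnresolvedTypeError(
        f"Failed to resolve column type for schema: {schema_type} ({shown})"
    )


print(resolve_column_type("STRING", "io.debezium.data.Enum"))  # text
try:
    resolve_column_type("ARRAY", None)  # arr_int: type array, no name
except UnresolvedTypeError as err:
    print(err)  # Failed to resolve column type for schema: ARRAY (null)
```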
