Bug

Resolution: Obsolete

Critical

1.9.0.Final

Bug report

What Debezium connector do you use and what version?

MySQL connector with version 1.9.0.Final.

What is the connector configuration?

class: io.debezium.connector.mysql.MySqlConnector

config:

database.characterEncoding: UTF-8

database.history.consumer.sasl.jaas.config: ${file:<path_to_msk_jaas>}

database.history.consumer.sasl.mechanism: SCRAM-SHA-512

database.history.consumer.security.protocol: SASL_SSL

database.history.kafka.bootstrap.servers: bootstrap_servers_in_AWS

database.history.kafka.topic: db-schema-changes.history

database.history.producer.sasl.jaas.config: ${file:<path_to_msk_jaas>}

database.history.producer.sasl.mechanism: SCRAM-SHA-512

database.history.producer.security.protocol: SASL_SSL

database.history.store.only.captured.tables.ddl: true

database.hostname: source_database_host

database.password: ${file:<path_to_source_db_password>}

database.port: "3306"

database.server.id: "184054"

database.server.name: <database_name>

database.user: ${file:<path_to_source_db_username>}

event.deserialization.failure.handling.mode: warn

include.schema.changes: "true"

inconsistent.schema.handling.mode: warn

internal.key.converter: org.apache.kafka.connect.json.JsonConverter

internal.key.converter.schemas.enable: false

internal.value.converter: org.apache.kafka.connect.json.JsonConverter

internal.value.converter.schemas.enable: false

key.converter: com.amazonaws.services.schemaregistry.kafkaconnect.AWSKafkaAvroConverter

key.converter.avroRecordType: GENERIC_RECORD

key.converter.region: eu-central-1

key.converter.registry.name: <registry_name>

key.converter.schemaAutoRegistrationEnabled: true

key.converter.schemaNameGenerationClass: full.path.to.CustomerProvidedSchemaNamingStrategy

key.converter.schemas.enable: true

signal.data.collection: schema.debezium_signal

table.include.list: schema.debezium_signal, schema.testtable1, schema.testtable2, schema.testtable3

time.precision.mode: connect

tombstones.on.delete: true

topic.creation.default.partitions: 3

topic.creation.default.replication.factor: 3

value.converter: com.amazonaws.services.schemaregistry.kafkaconnect.AWSKafkaAvroConverter

value.converter.avroRecordType: GENERIC_RECORD

value.converter.region: eu-central-1

value.converter.registry.name: <registry_name>

value.converter.schemaAutoRegistrationEnabled: true

value.converter.schemaNameGenerationClass: full.path.to.CustomerProvidedSchemaNamingStrategy

value.converter.schemas.enable: true

tasksMax: 1
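As a quick sanity check on the configuration above, the following minimal sketch confirms that both the signalling table and the newly added table appear in `table.include.list`. It assumes the entries are literal table names; Debezium actually treats them as regular expressions, so this is only an approximation. The helper names are hypothetical and not part of Debezium.

```python
def parse_include_list(value: str) -> list[str]:
    """Split a comma-separated table.include.list value into trimmed entries."""
    return [item.strip() for item in value.split(",") if item.strip()]


def missing_from_include_list(include_list: str, required: list[str]) -> list[str]:
    """Return the required tables that are not listed literally in include_list.

    Assumption: entries are compared as plain strings, not as regexes.
    """
    included = set(parse_include_list(include_list))
    return [table for table in required if table not in included]


# Value copied from the connector configuration in this report.
include_list = (
    "schema.debezium_signal, schema.testtable1, "
    "schema.testtable2, schema.testtable3"
)

missing = missing_from_include_list(
    include_list, ["schema.debezium_signal", "schema.testtable3"]
)
print(missing)
```

An empty result means both tables are listed, which matches the configuration shown here after testtable3 was added.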

What is the captured database version and mode of deployment?

(E.g. on-premises, with a specific cloud provider, etc.)

We've tested with both RDS and an on-prem database, both running MySQL 5.7.

What behaviour do you expect?

We start the source connector with schema.testtable1, schema.testtable2, and the signalling table configured. After running the connector for a while, we want to add an additional table, schema.testtable3. We add testtable3 to table.include.list and restart the connector. Once the connector is running again, we signal a snapshot. We expect the signal to trigger an incremental snapshot and start populating the topic in Kafka.
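For reference, the signal in the last step could be built roughly as in the sketch below. It assumes the signalling table has the `id`, `type`, and `data` columns described in the Debezium signalling documentation, and that the statement is executed with a MySQL driver that uses `%s` placeholders (for example PyMySQL). The function name `build_snapshot_signal` is hypothetical, and the table names are the placeholders used in this report.

```python
import json
import uuid


def build_snapshot_signal(signal_table, data_collections, signal_id=None):
    """Build a parameterized INSERT for an ad-hoc incremental snapshot signal.

    Returns (sql, params). Nothing is executed here; pass both to a DB-API
    cursor, e.g. cursor.execute(sql, params).
    """
    # A random UUID string (36 characters) is used when no id is supplied.
    signal_id = signal_id or str(uuid.uuid4())
    payload = json.dumps(
        {"data-collections": list(data_collections), "type": "incremental"}
    )
    # The table name is interpolated directly, so it must come from trusted config.
    sql = f"INSERT INTO {signal_table} (id, type, data) VALUES (%s, %s, %s)"
    return sql, (signal_id, "execute-snapshot", payload)


sql, params = build_snapshot_signal(
    "schema.debezium_signal", ["schema.testtable3"], signal_id="adhoc-1"
)
print(sql)
print(params)
```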

What behaviour do you see?

Following the case described above, we do not see testtable3 being created. Instead, we see this message, and no snapshot happens.

2022-04-26 11:21:27,920 WARN [mysql-connector|task-0] Schema not found for table 'schema.testtable3', known tables [schema.testtable1, schema.debezium_signal, schema.testtable2] (io.debezium.pipeline.source.snapshot.incremental.AbstractIncrementalSnapshotChangeEventSource) [blc-<RDS mysql instance>]
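When collecting more logs, a small parser like the sketch below can pull the missing table and the list of known tables out of this warning. The regular expression is written against the single log line quoted above; it is an assumption that other Debezium versions format the message the same way. The function name is hypothetical.

```python
import re

# Matches the message text of the warning quoted in this report.
SCHEMA_NOT_FOUND = re.compile(
    r"Schema not found for table '(?P<table>[^']+)', "
    r"known tables \[(?P<known>[^\]]*)\]"
)


def parse_schema_not_found(line):
    """Return (missing_table, known_tables) or None if the line does not match."""
    match = SCHEMA_NOT_FOUND.search(line)
    if match is None:
        return None
    known = [t.strip() for t in match.group("known").split(",") if t.strip()]
    return match.group("table"), known


log_line = (
    "2022-04-26 11:21:27,920 WARN [mysql-connector|task-0] "
    "Schema not found for table 'schema.testtable3', known tables "
    "[schema.testtable1, schema.debezium_signal, schema.testtable2] "
    "(io.debezium.pipeline.source.snapshot.incremental."
    "AbstractIncrementalSnapshotChangeEventSource)"
)

result = parse_schema_not_found(log_line)
print(result)
```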

Do you see the same behaviour using the latest released Debezium version?

(Ideally, also verify with latest Alpha/Beta/CR version)

We have only targeted 1.9.0.Final on our local setup. We do not see this error in the tutorial, which targets 1.9.1.Final (but the tutorial doesn't rely on schemas).

Do you have the connector logs, ideally from start till finish?

(You might be asked later to provide DEBUG/TRACE level log)

I don't have more logs right now, but we can try to provide more if needed.

How to reproduce the issue using our tutorial deployment?

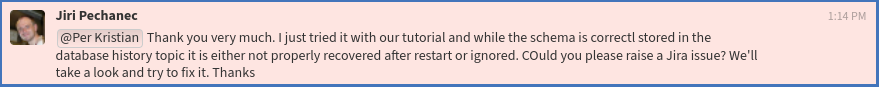

We have not been able to reproduce the issue by following https://debezium.io/blog/2021/10/07/incremental-snapshots/ (adapted to MySQL). This suggests that the problem might be related to the use of a Schema Registry. This Zulip chat thread also contains some information: https://debezium.zulipchat.com/#narrow/stream/302529-users/topic/MySQL.20signalling

Additional information: