- Type: Bug
- Resolution: Done
- Priority: Major
- Version: 1.4.0.Final


First of all, thank you very much to the Debezium team. As a developer, I have been following Debezium since 2019. Recently, I ran into several problems when using the Oracle connector, as follows:

1. The `database.schema` property is not described in the official documentation, yet if it is missing, the connector fails immediately with this error:

```
ERROR The 'database.schema' value is invalid: The 'database.schema' be provided when using the LogMiner connection adapter
```
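Until the documentation covers this, a pre-flight check along these lines could catch the missing property before the connector is deployed. This is a minimal sketch with a hypothetical helper name (`check_logminer_schema`), not part of Debezium; it only mirrors the rule stated in the error above and assumes the adapter is set explicitly through `database.connection.adapter`.

```python
from typing import Mapping, Optional


def check_logminer_schema(config: Mapping[str, str]) -> Optional[str]:
    """Return an error message if database.schema is missing for LogMiner, else None.

    Hypothetical pre-flight helper: it only mirrors the connector error quoted
    in this report and only fires when the LogMiner adapter is set explicitly.
    """
    adapter = config.get("database.connection.adapter", "").strip().lower()
    schema = config.get("database.schema", "").strip()
    if adapter == "logminer" and not schema:
        return "database.schema must be provided when using the LogMiner connection adapter"
    return None


# The reported configuration sets both properties, so the check passes.
print(check_logminer_schema({"database.connection.adapter": "logminer", "database.schema": "INVENTORY"}))  # → None
# Without database.schema, the check flags the same problem the connector reports.
print(check_logminer_schema({"database.connection.adapter": "logminer"}))
```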

2. Once `database.schema` is set, the next problem is that the documentation does not describe the required format of `table.include.list`. Many users run into this error:

```
ERROR Cannot parse statement : update "HR"."JOBS" set "MAX_SALARY" = '9002' where "JOB_ID" = 'MK_REP' and "JOB_TITLE" = 'Marketing Representative' and "MIN_SALARY" = '4000' and "MAX_SALARY" = '9001';, transaction: 0A000C00DB040000, due to the {} (io.debezium.connector.oracle.jsqlparser.SimpleDmlParser)
io.debezium.text.ParsingException: Trying to parse a table 'ORCLDB.HR.JOBS', which does not exist.
    at io.debezium.connector.oracle.jsqlparser.SimpleDmlParser.initColumns(SimpleDmlParser.java:147)
```

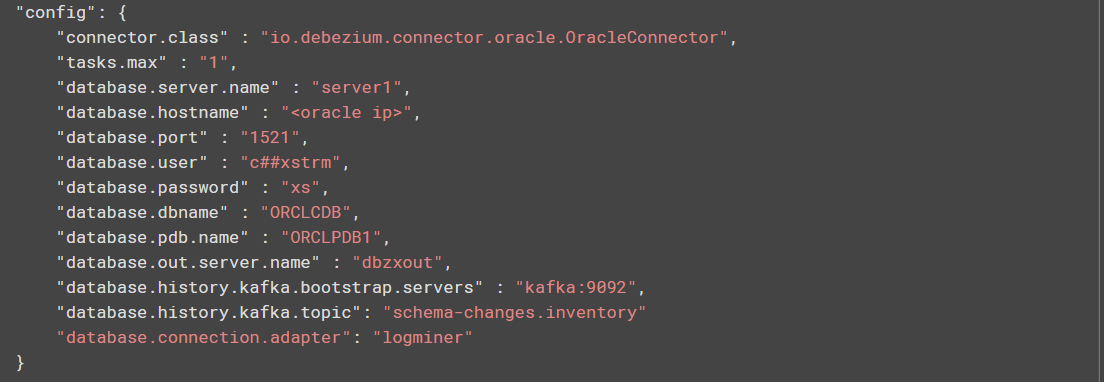

According to this issue, https://issues.redhat.com/projects/DBZ/issues/DBZ-2691?filter=doneissues, Naros suggested the following:

- Correct format: `schema.table`
- Wrong format: `table`
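A rough pre-flight check for the `schema.table` format could look like the sketch below. `unqualified_entries` is a hypothetical helper, not Debezium code. Because include-list entries may be regular expressions, it is only a heuristic: it treats an escaped dot (`\.`) like a plain one and flags any entry that is not exactly two dot-separated parts.

```python
from typing import List


def unqualified_entries(table_include_list: str) -> List[str]:
    """Return include-list entries that are not written as schema.table.

    Hypothetical heuristic: entries are split on commas, an escaped dot is
    treated like a plain dot, and anything other than two non-empty parts
    (SCHEMA and TABLE) is reported.
    """
    bad = []
    for entry in table_include_list.split(","):
        entry = entry.strip()
        if not entry:
            continue
        parts = entry.replace("\\.", ".").split(".")
        # Expect exactly two non-empty parts: SCHEMA and TABLE.
        if len(parts) != 2 or not all(parts):
            bad.append(entry)
    return bad


print(unqualified_entries("INVENTORY.PRODUCT"))        # → []
print(unqualified_entries("PRODUCT"))                  # → ['PRODUCT']
print(unqualified_entries("INVENTORY.PRODUCT, JOBS"))  # → ['JOBS']
```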

Other similar issues:

- https://issues.redhat.com/projects/DBZ/issues/DBZ-2969?filter=doneissues
- https://issues.redhat.com/projects/DBZ/issues/DBZ-2691?filter=doneissues
- https://issues.redhat.com/browse/DBZ-2946

3. Most importantly, once the errors above are corrected, the Oracle connector reports no further errors and keeps running. However, only the database history topic is created in Kafka, which does not match what the documentation describes.

My configuration:

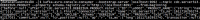

```json
{
  "name": "server312",
  "config": {
    "connector.class": "io.debezium.connector.oracle.OracleConnector",
    "tasks.max": "1",
    "database.server.name": "cdc.server312",
    "database.hostname": "172.20.3.229",
    "database.port": "1521",
    "database.user": "c##logminer",
    "database.password": "lm",
    "database.dbname": "ORCL",
    "database.pdb.name": "ORCLDB",
    "database.schema": "INVENTORY",
    "table.include.list": "INVENTORY.PRODUCT",
    "database.history.kafka.bootstrap.servers": "172.20.3.180:9092",
    "database.history.kafka.topic": "server312.history",
    "database.out.server.name": "dbzxout",
    "database.connection.adapter": "logminer"
  }
}
```
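To compare against what Kafka actually shows, the sketch below derives the data topic name this configuration would be expected to produce alongside `server312.history`. It assumes the `<database.server.name>.<schemaName>.<tableName>` topic naming described for the Debezium Oracle connector and a plain (non-regex) include list; `expected_data_topics` is a hypothetical helper, not Debezium code.

```python
from typing import List, Mapping


def expected_data_topics(config: Mapping[str, str]) -> List[str]:
    """Build the data topic names one would expect for the included tables.

    Assumes <database.server.name>.<schema>.<table> topic naming and a
    comma-separated include list of plain schema.table entries.
    """
    server = config["database.server.name"]
    tables = [t.strip() for t in config.get("table.include.list", "").split(",") if t.strip()]
    return [f"{server}.{table}" for table in tables]


# Relevant values copied from the reported configuration.
config = {
    "database.server.name": "cdc.server312",
    "table.include.list": "INVENTORY.PRODUCT",
    "database.history.kafka.topic": "server312.history",
}
print(expected_data_topics(config))  # → ['cdc.server312.INVENTORY.PRODUCT']
```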

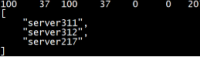

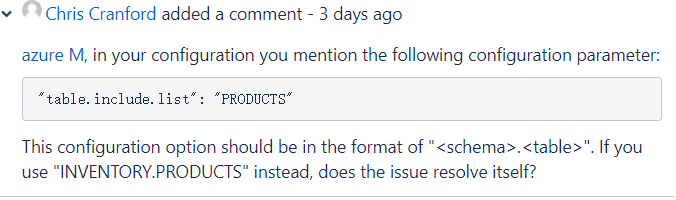

All of my connectors; server312 is the one I just created:

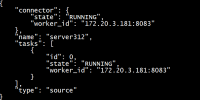

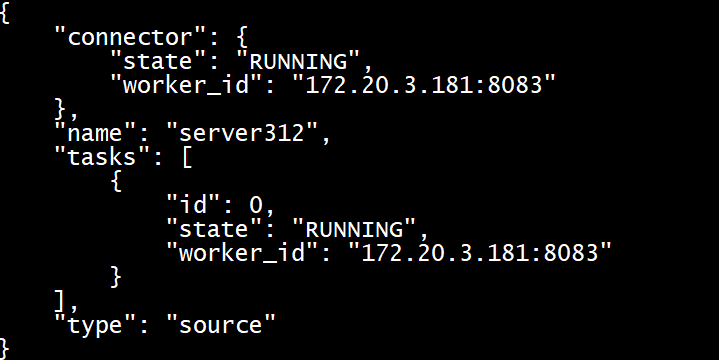

The connector is clearly running:

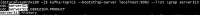

Only the history topic exists:

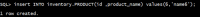

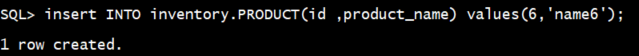

When I insert records into the INVENTORY.PRODUCT table, Kafka receives no data, and the connector still does not throw any exception.

I don't know whether one of my steps is wrong or whether there is a problem with the Debezium Oracle connector itself.

Related to: DBZ-3009 Support multiple schemas with Oracle LogMiner (Closed)