-

Bug

-

Resolution: Done

-

Major

-

None

-

1.3.0.Final

-

None

-

False

-

False

-

Undefined

-

-

Debezium Version: 1.3

Oracle Instant version: 12.2

Oracle DB version: 12c release 2.

Name of DB: SLC15ALL

Captured Table: HIVE.DBS

When testing with Oracle NON-CDB, debezium throws that no metadata registered for captured table when committing a new transaction in the captured table.

At first, it has successfully captured the snapshot of the table (see attached successful-snapshot.txt) but doing some updates in the table throw exceptions (see attached exception-no-metadata-registered.txt)

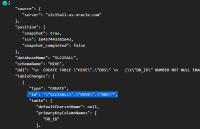

Upon investigation, seems like this happens if the SOURCE_DATABASE from XStream is different from the schema ID that was generated by Debezium. Debezium generated a schema with ID: SLC15ALL.HIVE.DBS (see attached initial-schema-generated-by-debezium.txt)

but the LCR contains the schema as SLC15ALL.US.ORACLE.COM.HIVE.DBS.

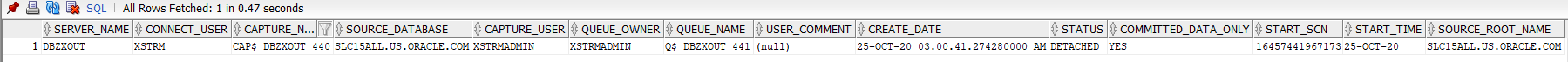

Here's the result of the query, SELECT * FROM ALL_XSTREAM_OUTBOUND;

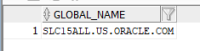

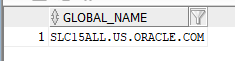

Reading from the documentation of XStream, the SOURCE_DATABASE comes from the Global DB Name. Here's the result of query, SELECT * FROM GLOBAL_NAME;

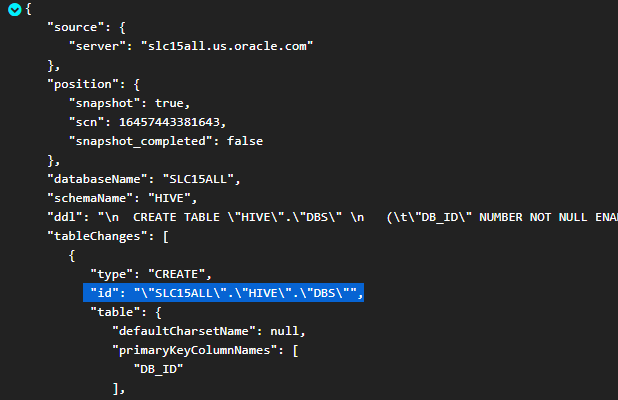

To verify that the issue lies with the "id" attribute of the schema, I produced a new message schema with the same content but changing the "id" to "SLC15ALL.US.ORACLE.COM.HIVE.DBS" and it is now working and not throwing the exception.

Below is the sample payload submitted in connect REST API:

{{ "name": "hive", "config": { "connector.class": "io.debezium.connector.oracle.OracleConnector", "tasks.max": "1", "database.server.name": "slc15all.us.oracle.com", "database.hostname": "slc15all.us.oracle.com", "database.port": "1521", "database.user": "xstrm", "database.password": "xs", "database.dbname": "slc15all", "database.out.server.name": "dbzxout", "database.history.kafka.bootstrap.servers": "localhost:9092", "database.history.kafka.topic": "schema-hive", "table.include.list": "hive.dbs", "include.schema.changes": "true", "database.oracle.version": "11", "database.schema": "hive", "poll.interval.ms": "500" }}

Note that I set the "database.oracle.version" to 11 as it is throwing an error if I didn't.

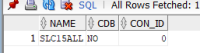

And using a NON-CDB Oracle DB:

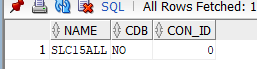

SELECT NAME, CDB, CON_ID FROM V$DATABASE;

Suggestion:

- Can we change the implementation of how it generated the ID in schema message such that it will use the SOURCE_DATABASE field in XSTREAM Outbound Server?

- Or can we add a new property field in REST like "database.xstream.sourcedb" and set the SOURCE_DATABASE so that if it's existing, it will use that field as the ID in schema?

- relates to

-

DBZ-2679 ChangeRecord informations don't connect with the TableSchema

-

- Closed

-