Feature Request

Resolution: Done

Major

3.12.0.GA, DWO 0.26.0

Release Notes

Enhancement

Done

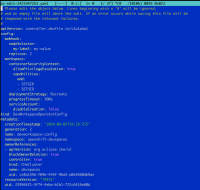

While pod placement for devspaces-operator and devworkspace-controller-manager can be managed via the Subscription object, those settings are not applied to devworkspace-webhook-server, so pod placement for devworkspace-webhook-server cannot be controlled.
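For reference, the existing Subscription-based placement can be sketched as below. This is a minimal illustration, assuming the standard OLM `spec.config.nodeSelector` and `spec.config.tolerations` fields. The Subscription name, namespace, package, channel, catalog source and the infra node label are hypothetical values chosen for the example, not taken from this issue.

```python
import json


def subscription_with_placement(name, namespace, package, node_selector, tolerations):
    """Build an OLM Subscription manifest that carries pod placement settings.

    OLM applies spec.config to the Deployments it creates for the operator;
    as described above, devworkspace-webhook-server does not pick them up.
    """
    return {
        "apiVersion": "operators.coreos.com/v1alpha1",
        "kind": "Subscription",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "name": package,
            # Channel and catalog source are assumptions for illustration.
            "channel": "stable",
            "source": "redhat-operators",
            "sourceNamespace": "openshift-marketplace",
            "config": {
                "nodeSelector": node_selector,
                "tolerations": tolerations,
            },
        },
    }


# Hypothetical placement: run on nodes labeled and tainted as infra nodes.
manifest = subscription_with_placement(
    name="devspaces",
    namespace="openshift-operators",
    package="devspaces",
    node_selector={"node-role.kubernetes.io/infra": ""},
    tolerations=[
        {
            "key": "node-role.kubernetes.io/infra",
            "operator": "Exists",
            "effect": "NoSchedule",
        }
    ],
)

# JSON is accepted wherever a YAML manifest is, so no YAML library is needed.
print(json.dumps(manifest, indent=2))
```

The printed manifest could be saved to a file and applied with `oc apply -f`, after adjusting the assumed names to the actual installation.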

Enterprise customers want to run certain Operator and Controller pods on specific OpenShift Container Platform 4 - Node(s), selected by labels and taints. They cannot do this for devworkspace-webhook-server, so the pod can be scheduled on essentially any OpenShift Container Platform 4 - Node that Kubernetes considers feasible.

It is therefore requested to provide a way to control pod placement for devworkspace-webhook-server, so that enterprise customers can place the pod on the desired OpenShift Container Platform 4 - Node(s).

Acceptance criteria should be the following:

- Possibility to specify nodeSelector for devworkspace-webhook-server

- Possibility to specify tolerations for devworkspace-webhook-server

- Replica count for devworkspace-webhook-server should be set to 2

Given that devworkspace-webhook-server is critical for the functionality of the entire OpenShift Container Platform 4 - Cluster (it is triggered whenever exec or rsh is run), the devworkspace-webhook-server Deployment should run with at least 2 replicas spread across different OpenShift Container Platform 4 - Nodes, in order to prevent OpenShift Container Platform 4 - API disruption when one of the pods is restarting.
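The target state described by the acceptance criteria can be sketched as a set of standard Kubernetes Deployment fields. This is only an illustration of the desired result, not the mechanism by which the operator exposes these settings. The pod label used in the anti-affinity selector and the example node label and taint are assumptions.

```python
import json

# Assumed pod label for the webhook server; verify against the real Deployment.
WEBHOOK_LABELS = {"app.kubernetes.io/name": "devworkspace-webhook-server"}


def desired_webhook_spec(node_selector, tolerations, replicas=2):
    """Return the Deployment fields the request asks to be configurable."""
    return {
        "spec": {
            "replicas": replicas,
            "template": {
                "spec": {
                    "nodeSelector": node_selector,
                    "tolerations": tolerations,
                    "affinity": {
                        "podAntiAffinity": {
                            # Require each replica to land on a different node,
                            # so one node restart cannot take down all replicas.
                            "requiredDuringSchedulingIgnoredDuringExecution": [
                                {
                                    "labelSelector": {"matchLabels": WEBHOOK_LABELS},
                                    "topologyKey": "kubernetes.io/hostname",
                                }
                            ]
                        }
                    },
                }
            },
        }
    }


# Hypothetical placement values, matching an infra-node setup.
desired = desired_webhook_spec(
    node_selector={"node-role.kubernetes.io/infra": ""},
    tolerations=[
        {
            "key": "node-role.kubernetes.io/infra",
            "operator": "Exists",
            "effect": "NoSchedule",
        }
    ],
)

print(json.dumps(desired, indent=2))
```

A required anti-affinity rule is the strict choice: if fewer than two matching nodes are available, the second replica stays Pending. A `preferredDuringSchedulingIgnoredDuringExecution` rule would trade that guarantee for schedulability.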

Currently, disruption is seen whenever the pod is restarted or rescheduled, which is not acceptable given the SLA that enterprise customers provide for the OpenShift Container Platform 4 - API.

- is duplicated by CRW-7128 [RN] Add pod placement capabilities for devworkspace-webhook-server and make it more robust (Closed)

- links to