I deployed Cluster Observability Operator (COO) v1.2.2 on an OpenShift 4.18 cluster and deployed a UI plugin for the troubleshooting panel.

I can see the nodes for a platform alert:

korrel8rcli -o json neighbours -u https://korrel8r-openshift-cluster-observability-operator.apps.tsisodia-dev.51ty.p1.openshiftapps.com --query 'alert:alert:{"alertname":"PodDisruptionBudgetLimit"}'

WARNING: partial result, search timed out

,{"goal":"metric:metric","start":"alert:alert"},{"goal":"k8s:LokiStack.v1.loki.grafana.com","start":"k8s:Deployment.v1.apps"},{"goal":"k8s:LokiStack.v1.loki.grafana.com","start":"k8s:Pod.v1"},{"goal":"k8s:LokiStack.v1.loki.grafana.com","start":"k8s:PodDisruptionBudget.v1.policy"},{"goal":"k8s:LokiStack.v1.loki.grafana.com","start":"k8s:StatefulSet.v1.apps"},{"goal":"k8s:LokiStack.v1.loki.grafana.com","start":"k8s:DaemonSet.v1.apps"}],"nodes":[{"class":"k8s:LokiStack.v1.loki.grafana.com","count":2,"queries":[{"count":1,"query":"k8s:LokiStack.v1.loki.grafana.com:{\"namespace\":\"observability-hub\",\"name\":\"logging-loki\"}"},{"count":1,"query":"k8s:LokiStack.v1.loki.grafana.com:{\"namespace\":\"openshift-logging\",\"name\":\"logging-loki\"}"}]},{"class":"k8s:PodDisruptionBudget.v1.policy","count":2,"queries":[{"count":1,"query":"k8s:PodDisruptionBudget.v1.policy:{\"namespace\":\"observability-hub\",\"name\":\"logging-loki-ingester\"}"},{"count":1,"query":"k8s:PodDisruptionBudget.v1.policy:{\"namespace\":\"openshift-logging\",\"name\":\"logging-loki-ingester\"}"}]},{"class":"alert:alert","count":2,"queries":[{"count":2,"query":"alert:alert:{\"alertname\":\"PodDisruptionBudgetLimit\"}"}]},{"class":"metric:metric","count":80,"queries":[{"count":80,"query":"metric:metric:max by (namespace, poddisruptionbudget) (kube_poddisruptionbudget_status_current_healthy \u003c kube_poddisruptionbudget_status_desired_healthy)"}]}]}
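The raw graph is hard to read at a glance. As a rough sketch (not part of korrel8rcli), the node classes and counts can be pulled out of the JSON like this. The helper name `summarize_nodes` is hypothetical, and the sample below is an abbreviated copy of the `nodes` array from the output above with the `queries` fields left out.

```python
import json


def summarize_nodes(graph: dict) -> dict:
    """Map each node class to its count; treats "nodes": null as empty."""
    nodes = graph.get("nodes") or []
    return {node["class"]: node["count"] for node in nodes}


# Abbreviated sample shaped like the output above (queries omitted).
sample = json.loads("""
{"nodes": [
  {"class": "k8s:LokiStack.v1.loki.grafana.com", "count": 2},
  {"class": "k8s:PodDisruptionBudget.v1.policy", "count": 2},
  {"class": "alert:alert", "count": 2},
  {"class": "metric:metric", "count": 80}
]}
""")

for node_class, count in summarize_nodes(sample).items():
    print(f"{node_class}: {count}")

# A graph with null nodes yields an empty summary instead of an error.
print(summarize_nodes({"edges": None, "nodes": None}))
```

For the platform alert this lists four classes, including the two `alert:alert` matches and the 80 related metrics.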

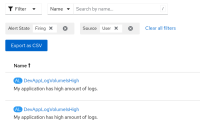

However, the same query for a custom alert fails:

korrel8rcli -o json neighbours -u https://korrel8r-openshift-cluster-observability-operator.apps.tsisodia-dev.51ty.p1.openshiftapps.com --query 'alert:alert:{"alertname":"AlertExampleContainerCrashing"}'

The ConfigMap created by the troubleshooting panel contains the following:

oc get cm korrel8r -o yaml

apiVersion: v1
data:
  korrel8r.yaml: |
    # Default configuration for deploying Korrel8r as a service in an OpenShift cluster.
    # Store service URLs assume that stores are installed in their default locations.
    stores:
      - domain: k8s
      - domain: alert
        metrics: https://thanos-querier.openshift-monitoring.svc:9091
        alertmanager: https://alertmanager-main.openshift-monitoring.svc:9094
        certificateAuthority: ./run/secrets/kubernetes.io/serviceaccount/service-ca.crt
      - domain: log
        lokiStack: https://logging-loki-gateway-http.openshift-logging.svc:8080
        certificateAuthority: ./run/secrets/kubernetes.io/serviceaccount/service-ca.crt
      - domain: metric
        metric: https://thanos-querier.openshift-monitoring.svc:9091
        certificateAuthority: ./run/secrets/kubernetes.io/serviceaccount/service-ca.crt
      - domain: netflow
        lokiStack: https://loki-gateway-http.netobserv.svc:8080
        certificateAuthority: ./run/secrets/kubernetes.io/serviceaccount/service-ca.crt
      - domain: trace
        tempoStack: https://tempo-platform-gateway.openshift-tracing.svc.cluster.local:8080/api/traces/v1/platform/tempo/api/search
        certificateAuthority: ./run/secrets/kubernetes.io/serviceaccount/service-ca.crt
    include:
      - /etc/korrel8r/rules/all.yaml
kind: ConfigMap

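It looks like Korrel8r does not have access to openshift-user-workload-monitoring: every alert and metric store URL in this configuration is in the openshift-monitoring namespace.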
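To make that observation concrete, here is a small sketch that extracts the namespace from each monitoring store URL in the ConfigMap. The URLs are copied from the configuration above; the `service_namespace` helper and the dictionary keys are hypothetical names used only for this illustration. This only shows which namespaces the stores point at. It does not by itself prove that user workload alerts are unreachable through those endpoints.

```python
from urllib.parse import urlparse

# Monitoring store URLs copied from the korrel8r ConfigMap above.
STORE_URLS = {
    "alert.metrics": "https://thanos-querier.openshift-monitoring.svc:9091",
    "alert.alertmanager": "https://alertmanager-main.openshift-monitoring.svc:9094",
    "metric.metric": "https://thanos-querier.openshift-monitoring.svc:9091",
}


def service_namespace(url: str) -> str:
    """Return the namespace from a <service>.<namespace>.svc[...] host name."""
    host = urlparse(url).hostname or ""
    parts = host.split(".")
    if len(parts) < 3 or parts[2] != "svc":
        raise ValueError(f"not an in-cluster service URL: {url}")
    return parts[1]


namespaces = {name: service_namespace(url) for name, url in STORE_URLS.items()}
for name, namespace in namespaces.items():
    print(f"{name}: {namespace}")
```

None of the three entries resolves to openshift-user-workload-monitoring, which is consistent with the suspicion above but should be confirmed against the Korrel8r logs or documentation.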

The custom alert query above returns an empty result:

{"edges":null,"nodes":null}