- Bug
- Resolution: Done-Errata
- Blocker
- CNV v4.20.0
- None
- Quality / Stability / Reliability
- 3
- False
- False
- CNV v4.99.0.rhel9-2400, CNV v4.20.0.rhel9-165, CNV v4.99.0.rhel9-2405
- CNV Storage 276, CNV Storage 277
- Critical
- None

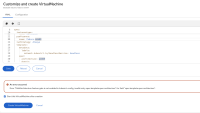

Description of problem:

While creating a VM from the fedora-arm64 DataSource:

1. The DataSource points to the fedora preference and not to the fedora.arm64 preference. The equivalent labels are correct for rhel10 and rhel9.

$ oc get datasources fedora-arm64 -n openshift-virtualization-os-images -o yaml

apiVersion: cdi.kubevirt.io/v1beta1
kind: DataSource
labels:
  app.kubernetes.io/component: storage
  app.kubernetes.io/managed-by: cdi-controller
  app.kubernetes.io/part-of: hyperconverged-cluster
  app.kubernetes.io/version: 4.20.0
  cdi.kubevirt.io/dataImportCron: fedora-image-cron-arm64
  instancetype.kubevirt.io/default-instancetype: u1.medium
  instancetype.kubevirt.io/default-preference: fedora
  kubevirt.io/dynamic-credentials-support: "true"
  template.kubevirt.io/architecture: arm64

$ oc get datasources rhel10-arm64 -n openshift-virtualization-os-images -o yaml

apiVersion: cdi.kubevirt.io/v1beta1

kind: DataSource

app.kubernetes.io/component: storage

app.kubernetes.io/managed-by: cdi-controller

app.kubernetes.io/part-of: hyperconverged-cluster

app.kubernetes.io/version: 4.20.0

cdi.kubevirt.io/dataImportCron: rhel10-image-cron-arm64

instancetype.kubevirt.io/default-instancetype: u1.medium

instancetype.kubevirt.io/default-preference: rhel.10.arm64

kubevirt.io/dynamic-credentials-support: "true"

template.kubevirt.io/architecture: arm64

2. When I pick the fedora.arm64 preference manually, the VM gets configured on a non-ARM cluster, which doesn't look right.

If I set the architecture explicitly in the VM config under spec.template.spec.architecture: arm64, it throws:

Error "MultiArchitecture feature gate is not enabled in kubevirt-config, invalid entry spec.template.spec.architecture" for field "spec.template.spec.architecture".

I don't see MultiArchitecture in the KubeVirt feature gates (FG):

$ oc get kubevirt kubevirt-kubevirt-hyperconverged -n openshift-cnv -o json | jq .spec.configuration.developerConfiguration.featureGates

[ "CPUManager", "Snapshot", "HotplugVolumes", "ExpandDisks", "HostDevices", "VMExport", "KubevirtSeccompProfile", "VMPersistentState", "InstancetypeReferencePolicy", "WithHostModelCPU", "HypervStrictCheck"]

The HCO has enableMultiArchBootImageImport: true set:

$ oc get hco kubevirt-hyperconverged -n openshift-cnv -o json | jq .spec.featureGates

{
  "alignCPUs": false,
  "decentralizedLiveMigration": false,
  "deployKubeSecondaryDNS": false,
  "disableMDevConfiguration": false,
  "downwardMetrics": false,
  "enableMultiArchBootImageImport": true,
  "persistentReservation": false
}
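
The two observations above can be checked mechanically. The following is a minimal Python sketch, not part of the product: it uses label and feature-gate values copied from the outputs in this ticket and flags (a) an arm64 DataSource whose default preference name does not carry the architecture, and (b) a KubeVirt feature-gate list without MultiArchitecture. The `<name>.<arch>` naming rule is an assumption inferred from `rhel.10.arm64`, and the function names are hypothetical.

```python
ARCH_LABEL = "template.kubevirt.io/architecture"
PREF_LABEL = "instancetype.kubevirt.io/default-preference"


def preference_matches_arch(labels: dict) -> bool:
    """Return True when the default preference name ends with '.<arch>'.

    Assumption: arch-specific preferences are named '<name>.<arch>', as seen
    with 'rhel.10.arm64'. This is inferred from the ticket, not from a spec,
    and is only intended for the arm64 DataSources shown above.
    """
    arch = labels.get(ARCH_LABEL, "")
    pref = labels.get(PREF_LABEL, "")
    return bool(arch) and pref.endswith("." + arch)


def has_feature_gate(gates: list, name: str = "MultiArchitecture") -> bool:
    """Return True when the named gate appears in the KubeVirt gate list."""
    return name in gates


# Label values copied from the two `oc get datasources` outputs.
fedora_arm64 = {ARCH_LABEL: "arm64", PREF_LABEL: "fedora"}
rhel10_arm64 = {ARCH_LABEL: "arm64", PREF_LABEL: "rhel.10.arm64"}

# Gate list copied from the `oc get kubevirt ... | jq` output.
kubevirt_gates = [
    "CPUManager", "Snapshot", "HotplugVolumes", "ExpandDisks", "HostDevices",
    "VMExport", "KubevirtSeccompProfile", "VMPersistentState",
    "InstancetypeReferencePolicy", "WithHostModelCPU", "HypervStrictCheck",
]

if __name__ == "__main__":
    print("fedora-arm64 preference ok:", preference_matches_arch(fedora_arm64))
    print("rhel10-arm64 preference ok:", preference_matches_arch(rhel10_arm64))
    print("MultiArchitecture enabled:", has_feature_gate(kubevirt_gates))
```

On a live cluster the same dictionaries could be filled from `oc get ... -o json` output instead of being typed in by hand.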

Version-Release number of selected component (if applicable):

4.20

Deployed: OCP-4.20.0-ec.6

Deployed: CNV-v4.20.0.rhel9-117

How reproducible:

always

Steps to Reproduce:

1. 2. 3.

Actual results:

Expected results:

Additional info:

Spawning a VM with the fedora.arm64 preference still produces an amd64 VMI that runs on an amd64 node:

$ oc get vm -A

NAMESPACE NAME AGE STATUS READY

default fedora-blush-guan-47 11m Running True

$ oc get vmi -A -o yaml

apiVersion: v1
items:
- apiVersion: kubevirt.io/v1
  kind: VirtualMachineInstance
  metadata:
    annotations:
      kubevirt.io/cluster-instancetype-name: u1.large
      kubevirt.io/cluster-preference-name: fedora.arm64
      kubevirt.io/latest-observed-api-version: v1
      kubevirt.io/storage-observed-api-version: v1
      kubevirt.io/vm-generation: "2"
      vm.kubevirt.io/os: linux
    creationTimestamp: "2025-09-04T16:03:15Z"
    finalizers:
    - kubevirt.io/virtualMachineControllerFinalize
    - foregroundDeleteVirtualMachine
    generation: 19
    labels:
      kubevirt.io/nodeName: ip-10-0-59-221.us-east-2.compute.internal
      network.kubevirt.io/headlessService: headless
    name: fedora-blush-guan-47
    namespace: default
    ownerReferences:
    - apiVersion: kubevirt.io/v1
      blockOwnerDeletion: true
      controller: true
      kind: VirtualMachine
      name: fedora-blush-guan-47
      uid: afbab5b6-764a-4e89-808e-0c41fc7e7562
    resourceVersion: "313781"
    uid: 8cc36eb8-84fe-49d0-8210-7395f767d59e
  spec:
    architecture: amd64
    domain:
      cpu:
        cores: 1
        maxSockets: 8
        model: host-model
        sockets: 2
        threads: 1
      devices:
        autoattachPodInterface: false
        disks:
        - disk:
            bus: virtio
          name: rootdisk
        - disk:
            bus: virtio
          name: cloudinitdisk
        interfaces:
        - macAddress: 02:e5:d3:5b:82:19
          masquerade: {}
          model: virtio
          name: default
        rng: {}
      features:
        acpi:
          enabled: true
      firmware:
        uuid: f91e4d04-20a2-4890-91d8-139238312e51
      machine:
        type: pc-q35-rhel9.6.0
      memory:
        guest: 8Gi
        maxGuest: 32Gi
      resources:
        requests:
          memory: 8Gi
    evictionStrategy: LiveMigrate
    networks:
    - name: default
      pod: {}
    subdomain: headless
    volumes:
    - dataVolume:
        name: fedora-blush-guan-47-volume
      name: rootdisk
    - cloudInitNoCloud:
        userData: |
          #cloud-config
          chpasswd:
            expire: false
          password: uja9-rcq4-rt2l
          user: fedora
      name: cloudinitdisk
  status:
    activePods:
      ed47ffa7-5dd9-483d-899d-91fe7d115d75: ip-10-0-59-221.us-east-2.compute.internal
    conditions:
    - lastProbeTime: null
      lastTransitionTime: "2025-09-04T16:06:08Z"
      status: "True"
      type: Ready
    - lastProbeTime: null
      lastTransitionTime: null
      message: All of the VMI's DVs are bound and ready
      reason: AllDVsReady
      status: "True"
      type: DataVolumesReady
    - lastProbeTime: null
      lastTransitionTime: null
      message: 'cannot migrate VMI: PVC fedora-blush-guan-47-volume is not shared,
        live migration requires that all PVCs must be shared (using ReadWriteMany
        access mode)'
      reason: DisksNotLiveMigratable
      status: "False"
      type: LiveMigratable
    - lastProbeTime: null
      lastTransitionTime: null
      status: "True"
      type: StorageLiveMigratable
    - lastProbeTime: "2025-09-04T16:06:29Z"
      lastTransitionTime: null
      status: "True"
      type: AgentConnected
    currentCPUTopology:
      cores: 1
      sockets: 2
      threads: 1
    guestOSInfo:
      id: fedora
      kernelRelease: 6.14.0-63.fc42.x86_64
      kernelVersion: '#1 SMP PREEMPT_DYNAMIC Mon Mar 24 19:53:37 UTC 2025'
      machine: x86_64
      name: Fedora Linux
      prettyName: Fedora Linux 42 (Cloud Edition)
      version: 42 (Cloud Edition)
      versionId: "42"
    interfaces:
    - infoSource: domain, guest-agent
      interfaceName: enp1s0
      ipAddress: 10.131.0.96
      ipAddresses:
      - 10.131.0.96
      - fe80::e5:d3ff:fe5b:8219
      linkState: up
      mac: 02:e5:d3:5b:82:19
      name: default
      podInterfaceName: eth0
      queueCount: 1
    launcherContainerImageVersion: registry.redhat.io/container-native-virtualization/virt-launcher-rhel9@sha256:3d619787d670d9b026aae66c8ad0b31a87389ee33165e3e189b71a2b62878e3b
    machine:
      type: pc-q35-rhel9.6.0
    memory:
      guestAtBoot: 8Gi
      guestCurrent: 8Gi
      guestRequested: 8Gi
    migrationMethod: BlockMigration
    migrationTransport: Unix
    nodeName: ip-10-0-59-221.us-east-2.compute.internal
    phase: Running
    phaseTransitionTimestamps:
    - phase: Pending
      phaseTransitionTimestamp: "2025-09-04T16:03:15Z"
    - phase: Scheduling
      phaseTransitionTimestamp: "2025-09-04T16:03:15Z"
    - phase: Scheduled
      phaseTransitionTimestamp: "2025-09-04T16:06:08Z"
    - phase: Running
      phaseTransitionTimestamp: "2025-09-04T16:06:10Z"
    qosClass: Burstable
    runtimeUser: 107
    selinuxContext: system_u:object_r:container_file_t:s0:c378,c609
    virtualMachineRevisionName: revision-start-vm-afbab5b6-764a-4e89-808e-0c41fc7e7562-2
    volumeStatus:
    - name: cloudinitdisk
      size: 1048576
      target: vdb
    - name: rootdisk
      persistentVolumeClaimInfo:
        accessModes:
        - ReadWriteOnce
        capacity:
          storage: 30Gi
        claimName: fedora-blush-guan-47-volume
        filesystemOverhead: "0"
        requests:
          storage: "32212254720"
        volumeMode: Block
      target: vda
kind: List
metadata:
  resourceVersion: ""

$ oc get nodes ip-10-0-59-221.us-east-2.compute.internal -o yaml | grep arch

beta.kubernetes.io/arch: amd64

cpu-feature.node.kubevirt.io/arch-capabilities: "true"

host-model-required-features.node.kubevirt.io/arch-capabilities: "true"

kubernetes.io/arch: amd64

architecture: amd64
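
The mismatch visible in the outputs above (an arm64 preference on a VMI whose spec, guest, and node are all amd64) can be expressed as a small consistency check. This is a hedged Python sketch under stated assumptions: the helper names are hypothetical, the preference architecture is guessed from a `.<arch>` name suffix, and the input dictionaries hold only the few fields copied from this ticket rather than full API objects.

```python
KNOWN_ARCHES = {"amd64", "arm64", "s390x"}
# Kernel machine names (as reported by the guest agent) mapped to the
# architecture names used in Kubernetes labels.
UNAME_TO_K8S_ARCH = {"x86_64": "amd64", "aarch64": "arm64", "s390x": "s390x"}
PREF_ANNOTATION = "kubevirt.io/cluster-preference-name"


def preference_arch(preference: str) -> str:
    """Guess the target architecture from a '<name>.<arch>' preference name.

    Assumption: the suffix convention is inferred from 'fedora.arm64' and
    'rhel.10.arm64'. Returns '' when the name carries no known suffix.
    """
    suffix = preference.rsplit(".", 1)[-1]
    return suffix if suffix in KNOWN_ARCHES else ""


def arch_mismatches(vmi: dict, node_labels: dict) -> list:
    """List inconsistencies between preference, VMI spec, guest and node."""
    pref = vmi["metadata"]["annotations"].get(PREF_ANNOTATION, "")
    wanted = preference_arch(pref)
    vmi_arch = vmi["spec"].get("architecture", "")
    machine = vmi.get("status", {}).get("guestOSInfo", {}).get("machine", "")
    guest_arch = UNAME_TO_K8S_ARCH.get(machine, "")
    node_arch = node_labels.get("kubernetes.io/arch", "")

    problems = []
    if wanted and vmi_arch and wanted != vmi_arch:
        problems.append(
            f"preference {pref} targets {wanted}, spec.architecture is {vmi_arch}")
    if wanted and guest_arch and wanted != guest_arch:
        problems.append(
            f"preference {pref} targets {wanted}, guest reports {guest_arch}")
    if vmi_arch and node_arch and vmi_arch != node_arch:
        problems.append(
            f"spec.architecture is {vmi_arch}, node is {node_arch}")
    return problems


# Fields copied from the `oc get vmi` and `oc get nodes` outputs above.
vmi = {
    "metadata": {"annotations": {PREF_ANNOTATION: "fedora.arm64"}},
    "spec": {"architecture": "amd64"},
    "status": {"guestOSInfo": {"machine": "x86_64"}},
}
node_labels = {"kubernetes.io/arch": "amd64"}

if __name__ == "__main__":
    for problem in arch_mismatches(vmi, node_labels):
        print(problem)
```

With the values from this ticket, the sketch reports the preference-versus-spec and preference-versus-guest mismatches, while the VMI and its node agree on amd64.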

- blocks
  - CNV-76714 GA: Support for heterogeneous multi-arch clusters (golden images support) - Infra (New)
  - CNV-76732 GA: Support for heterogeneous multi-arch clusters (golden images support) - Storage (New)
  - CNV-76741 GA: Support for heterogeneous multi-arch clusters (golden images support) - Network (New)
  - CNV-67900 GA: HCO support for heterogeneous multi-arch clusters (golden images support) (In Progress)
  - CNV-76723 GA: Support for heterogeneous multi-arch clusters (golden images support) - QE Infra (In Progress)
  - CNV-32960 TP: HCO support for heterogeneous multi-arch clusters (golden images support) (Closed)
  - CNV-64577 [QE] update tier2/3 tests to work with ssp/hco changes and add upgrade marker (In Progress)
- is cloned by
  - CNV-69422 [4.20] Multiarchcluster : fedora-arm64 datasources don't point to right preference and VM doesn't boot (Closed)
- links to
  - RHEA-2025:150257 OpenShift Virtualization 4.20.0 Images
