- Bug
- Resolution: Done-Errata
- Normal
- None
- Quality / Stability / Reliability
- 0.42
- False
- False
- CNV v4.99.0.rhel9-2435
- None

Description of problem:

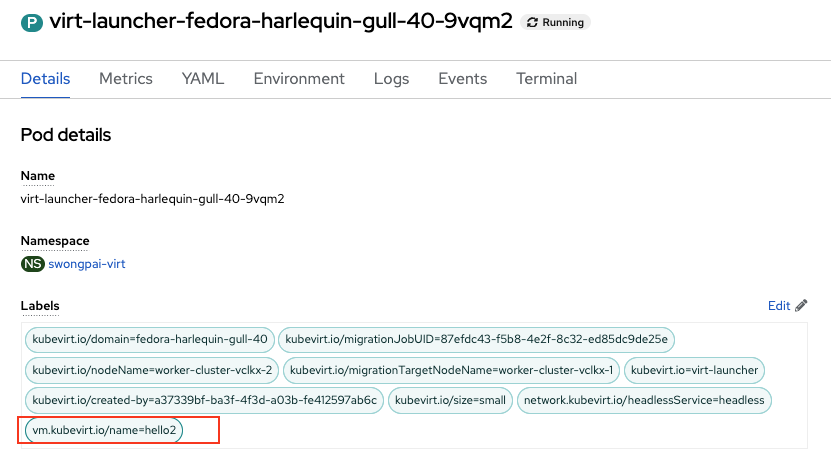

When a VM is created with a hostname that differs from its name, the corresponding pod is created with the label `vm.kubevirt.io/name=<VM_HOSTNAME>`. However, when `virtctl expose vm` is used to create a service, the service uses the selector `vm.kubevirt.io/name=<VM_NAME>`, which does not match the pod label. As a result, the service selects no pods and is not accessible.
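The mismatch can be illustrated with a minimal sketch of equality-based label selection, where a pod is selected only if every key/value pair in the selector is present in its labels. The function name `selector_matches` is hypothetical and not part of KubeVirt or virtctl; the label values are taken from the example manifests in this report, and the pod label value follows the behavior described above rather than a captured pod manifest.

```python
from typing import Mapping


def selector_matches(selector: Mapping[str, str], labels: Mapping[str, str]) -> bool:
    """Return True if every selector key/value pair is present in labels."""
    # A Service without a selector gets no automatically managed endpoints,
    # so an empty selector is treated as "selects nothing" here.
    if not selector:
        return False
    return all(labels.get(key) == value for key, value in selector.items())


# Selector written by `virtctl expose` in this report: it uses the VM name.
service_selector = {"vm.kubevirt.io/name": "fedora-harlequin-gull-40"}

# Pod label as described in this report: it carries the hostname instead.
pod_labels = {"vm.kubevirt.io/name": "hello2"}

print(selector_matches(service_selector, pod_labels))
```

With the values from this report the check evaluates to false, which corresponds to the service having no pods behind it. When the hostname equals the VM name, the same check succeeds.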

Version-Release number of selected component (if applicable):

OCP 4.18.18, CNV 4.18.3

How reproducible:

Steps to Reproduce:

1. Create a Fedora VM.
2. Change the hostname so that it differs from the VM name, then restart the VM.
3. Check the pod label.
4. Expose the VM with virtctl: `virtctl expose vmi <VMI_NAME> --port=80 --target-port=80 --name=testxxx`
5. Check the service `testxxx`; it does not select any pod.
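The checks in steps 3 and 5 can be scripted roughly as follows. This is a sketch under stated assumptions: it assumes `kubectl` is on the PATH and logged in to the cluster, and the helper names (`pods_query`, `service_query`, `selected_pod_names`) are hypothetical. It reads the service's selector and then lists the pods that the selector actually matches.

```python
import json
import subprocess
from typing import List, Mapping


def pods_query(namespace: str, selector: Mapping[str, str]) -> List[str]:
    """Build a kubectl command that lists pods matching an equality selector."""
    label_arg = ",".join(f"{key}={value}" for key, value in sorted(selector.items()))
    return ["kubectl", "get", "pods", "-n", namespace, "-l", label_arg, "-o", "json"]


def service_query(namespace: str, service: str) -> List[str]:
    """Build a kubectl command that fetches a Service as JSON."""
    return ["kubectl", "get", "service", service, "-n", namespace, "-o", "json"]


def run_json(command: List[str]) -> dict:
    """Run a command and parse its standard output as JSON."""
    result = subprocess.run(command, check=True, capture_output=True, text=True)
    return json.loads(result.stdout)


def selected_pod_names(namespace: str, service: str) -> List[str]:
    """Return the names of the pods matched by the Service's selector."""
    selector = run_json(service_query(namespace, service))["spec"].get("selector") or {}
    if not selector:
        return []  # no selector, so nothing is selected
    pods = run_json(pods_query(namespace, selector))
    return [item["metadata"]["name"] for item in pods["items"]]
```

Calling `selected_pod_names("swongpai-virt", "testxxx")` against the cluster from this report would be expected to return an empty list while the hostname and VM name differ.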

Actual results:

The service is inaccessible and no pod is associated with it.

Expected results:

The service is accessible and has the correct pod selector.

Additional info:

An example VM:

    apiVersion: kubevirt.io/v1
    kind: VirtualMachine
    metadata:
      annotations:
        kubemacpool.io/transaction-timestamp: '2025-07-31T04:42:46.951761204Z'
        kubevirt.io/latest-observed-api-version: v1
        kubevirt.io/storage-observed-api-version: v1
        vm.kubevirt.io/validations: |
          [
            {
              "name": "minimal-required-memory",
              "path": "jsonpath::.spec.domain.memory.guest",
              "rule": "integer",
              "message": "This VM requires more memory.",
              "min": 2147483648
            }
          ]
      resourceVersion: '8306434'
      name: fedora-harlequin-gull-40
      uid: 02f851fd-28c9-435f-85a5-0db99a3c9d54
      creationTimestamp: '2025-07-30T15:09:24Z'
      generation: 10
      managedFields:
        - apiVersion: kubevirt.io/v1
          fieldsType: FieldsV1
          fieldsV1:
            'f:metadata':
              'f:annotations':
                'f:kubevirt.io/latest-observed-api-version': {}
                'f:kubevirt.io/storage-observed-api-version': {}
              'f:finalizers':
                .: {}
                'v:"kubevirt.io/virtualMachineControllerFinalize"': {}
          manager: virt-controller
          operation: Update
          time: '2025-07-30T15:09:24Z'
        - apiVersion: kubevirt.io/v1
          fieldsType: FieldsV1
          fieldsV1:
            'f:spec':
              'f:template':
                'f:spec':
                  'f:subdomain': {}
          manager: kubectl-patch
          operation: Update
          time: '2025-07-31T04:06:24Z'
        - apiVersion: kubevirt.io/v1
          fieldsType: FieldsV1
          fieldsV1:
            'f:metadata':
              'f:annotations':
                .: {}
                'f:vm.kubevirt.io/validations': {}
              'f:labels':
                .: {}
                'f:app': {}
                'f:kubevirt.io/dynamic-credentials-support': {}
                'f:vm.kubevirt.io/template': {}
                'f:vm.kubevirt.io/template.namespace': {}
                'f:vm.kubevirt.io/template.revision': {}
                'f:vm.kubevirt.io/template.version': {}
            'f:spec':
              .: {}
              'f:dataVolumeTemplates': {}
              'f:runStrategy': {}
              'f:template':
                .: {}
                'f:metadata':
                  .: {}
                  'f:annotations':
                    .: {}
                    'f:vm.kubevirt.io/flavor': {}
                    'f:vm.kubevirt.io/os': {}
                    'f:vm.kubevirt.io/workload': {}
                  'f:labels':
                    .: {}
                    'f:kubevirt.io/domain': {}
                    'f:kubevirt.io/size': {}
                    'f:network.kubevirt.io/headlessService': {}
                'f:spec':
                  .: {}
                  'f:architecture': {}
                  'f:domain':
                    .: {}
                    'f:cpu':
                      .: {}
                      'f:cores': {}
                      'f:sockets': {}
                      'f:threads': {}
                    'f:devices':
                      .: {}
                      'f:disks': {}
                      'f:interfaces': {}
                      'f:logSerialConsole': {}
                      'f:rng': {}
                    'f:features':
                      .: {}
                      'f:smm':
                        .: {}
                        'f:enabled': {}
                    'f:firmware':
                      .: {}
                      'f:bootloader':
                        .: {}
                        'f:efi': {}
                    'f:memory':
                      .: {}
                      'f:guest': {}
                  'f:hostname': {}
                  'f:networks': {}
                  'f:terminationGracePeriodSeconds': {}
                  'f:volumes': {}
          manager: Mozilla
          operation: Update
          time: '2025-07-31T04:42:46Z'
        - apiVersion: kubevirt.io/v1
          fieldsType: FieldsV1
          fieldsV1:
            'f:status':
              'f:printableStatus': {}
              'f:runStrategy': {}
              'f:conditions': {}
              .: {}
              'f:ready': {}
              'f:volumeSnapshotStatuses': {}
              'f:observedGeneration': {}
              'f:created': {}
              'f:desiredGeneration': {}
          manager: virt-controller
          operation: Update
          subresource: status
          time: '2025-08-01T06:43:03Z'
      namespace: swongpai-virt
      finalizers:
        - kubevirt.io/virtualMachineControllerFinalize
      labels:
        app: myvmi
        kubevirt.io/dynamic-credentials-support: 'true'
        vm.kubevirt.io/template: fedora-server-small
        vm.kubevirt.io/template.namespace: openshift
        vm.kubevirt.io/template.revision: '1'
        vm.kubevirt.io/template.version: v0.32.2
    spec:
      dataVolumeTemplates:
        - apiVersion: cdi.kubevirt.io/v1beta1
          kind: DataVolume
          metadata:
            creationTimestamp: null
            name: fedora-harlequin-gull-40
          spec:
            sourceRef:
              kind: DataSource
              name: fedora
              namespace: openshift-virtualization-os-images
            storage:
              resources:
                requests:
                  storage: 30Gi
      runStrategy: RerunOnFailure
      template:
        metadata:
          annotations:
            vm.kubevirt.io/flavor: small
            vm.kubevirt.io/os: fedora
            vm.kubevirt.io/workload: server
          creationTimestamp: null
          labels:
            kubevirt.io/domain: fedora-harlequin-gull-40
            kubevirt.io/size: small
            network.kubevirt.io/headlessService: headless
        spec:
          architecture: amd64
          domain:
            cpu:
              cores: 1
              sockets: 1
              threads: 1
            devices:
              disks:
                - disk:
                    bus: virtio
                  name: rootdisk
                - disk:
                    bus: virtio
                  name: cloudinitdisk
              interfaces:
                - macAddress: '02:46:b8:00:00:0a'
                  masquerade: {}
                  model: virtio
                  name: default
              logSerialConsole: false
              rng: {}
            features:
              acpi: {}
              smm:
                enabled: true
            firmware:
              bootloader:
                efi: {}
            machine:
              type: pc-q35-rhel9.4.0
            memory:
              guest: 2Gi
            resources: {}
          hostname: hello2
          networks:
            - name: default
              pod: {}
          subdomain: headless
          terminationGracePeriodSeconds: 180
          volumes:
            - dataVolume:
                name: fedora-harlequin-gull-40
              name: rootdisk
            - cloudInitNoCloud:
                userData: |-
                  #cloud-config
                  user: fedora
                  password: 713b-d5pc-ne7j
                  chpasswd: { expire: False }
              name: cloudinitdisk
    status:
      conditions:
        - lastProbeTime: null
          lastTransitionTime: '2025-08-01T06:43:03Z'
          status: 'True'
          type: Ready
        - lastProbeTime: null
          lastTransitionTime: null
          message: All of the VMI's DVs are bound and not running
          reason: AllDVsReady
          status: 'True'
          type: DataVolumesReady
        - lastProbeTime: null
          lastTransitionTime: null
          status: 'True'
          type: LiveMigratable
        - lastProbeTime: null
          lastTransitionTime: null
          status: 'True'
          type: StorageLiveMigratable
        - lastProbeTime: '2025-07-31T04:43:21Z'
          lastTransitionTime: null
          status: 'True'
          type: AgentConnected
      created: true
      desiredGeneration: 10
      observedGeneration: 10
      printableStatus: Running
      ready: true
      runStrategy: RerunOnFailure
      volumeSnapshotStatuses:
        - enabled: true
          name: rootdisk
        - enabled: false
          name: cloudinitdisk
          reason: 'Snapshot is not supported for this volumeSource type [cloudinitdisk]'

The service created by the `virtctl expose` command:

    kind: Service
    apiVersion: v1
    metadata:
      name: testxxx
      namespace: swongpai-virt
      uid: bf87d16e-bdb9-432b-8e30-2eb733682a6c
      resourceVersion: '7028190'
      creationTimestamp: '2025-07-31T04:31:05Z'
      managedFields:
        - manager: virtctl
          operation: Update
          apiVersion: v1
          time: '2025-07-31T04:31:05Z'
          fieldsType: FieldsV1
          fieldsV1:
            'f:spec':
              'f:internalTrafficPolicy': {}
              'f:ports':
                .: {}
                'k:{"port":80,"protocol":"TCP"}':
                  .: {}
                  'f:port': {}
                  'f:protocol': {}
                  'f:targetPort': {}
              'f:selector': {}
              'f:sessionAffinity': {}
              'f:type': {}
    spec:
      clusterIP: 172.31.151.110
      ipFamilies:
        - IPv4
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80
      internalTrafficPolicy: Cluster
      clusterIPs:
        - 172.31.151.110
      type: ClusterIP
      ipFamilyPolicy: SingleStack
      sessionAffinity: None
      selector:
        vm.kubevirt.io/name: fedora-harlequin-gull-40
    status:
      loadBalancer: {}

- is related to: CNV-71151 Update service label selectors to use vmi.kubevirt.io/id for KubeVirt v1.7+ compatibility (In Progress)
- links to: RHEA-2025:155516 OpenShift Virtualization 4.21.0 Images