- Bug
- Resolution: Done
- Critical
- MCE 2.2.0, ACM 2.7.0
- False
- False
- Critical
- Customer Facing
- No

Description of problem:

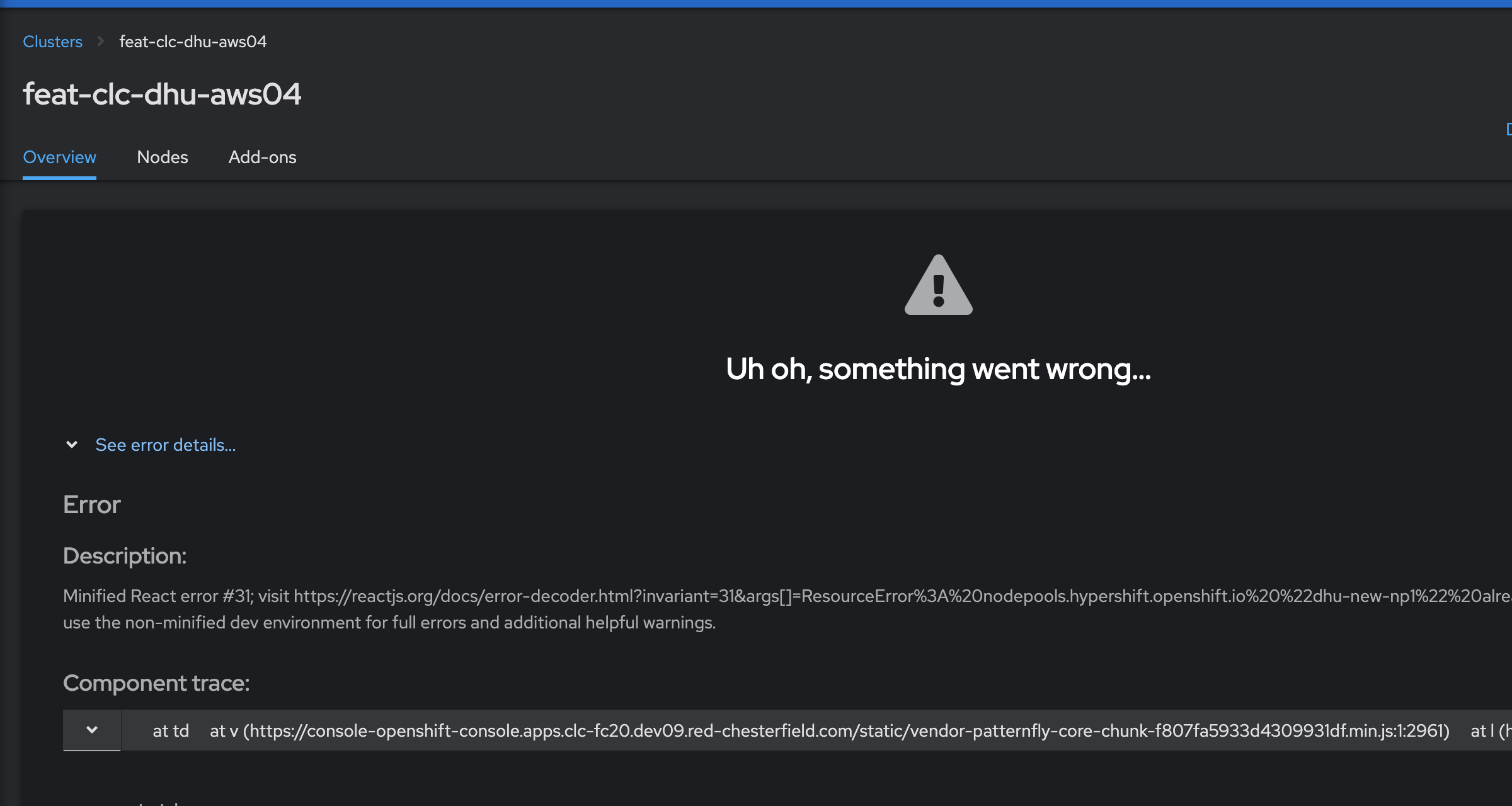

Given two hosted clusters on the same hosting cluster, where:

- Hosted cluster A has node pool "np0"
- Hosted cluster B has node pool "np1"

creating a new node pool named "np1" on HC A, or one named "np0" on HC B, causes a React error to be thrown in the UI.
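Kubernetes requires object names to be unique per resource kind within a namespace. Assuming the NodePool resources of both hosted clusters are created in the same namespace on the hosting cluster (the CLI output in this report shows a NodePool in `local-cluster`), the clash can be illustrated with the TypeScript sketch below. `NodePoolRef` and `findNodePoolConflict` are hypothetical names, and the short pool names mirror the scenario above rather than the real resource names.

```typescript
// Hypothetical sketch: names are unique per kind within a namespace, so two
// hosted clusters whose NodePools share a namespace cannot reuse a pool name.
interface NodePoolRef {
  namespace: string;
  name: string;
  clusterName: string; // hosted cluster that owns the pool (assumed field)
}

// Returns the existing NodePool that a new pool with this name would clash with.
function findNodePoolConflict(
  existing: NodePoolRef[],
  namespace: string,
  name: string,
): NodePoolRef | undefined {
  return existing.find((np) => np.namespace === namespace && np.name === name);
}

// Scenario from this report; the shared "local-cluster" namespace is an assumption.
const pools: NodePoolRef[] = [
  { namespace: "local-cluster", name: "np0", clusterName: "A" },
  { namespace: "local-cluster", name: "np1", clusterName: "B" },
];

// Creating "np1" for HC A clashes with the pool owned by HC B.
const clash = findNodePoolConflict(pools, "local-cluster", "np1");
console.log(clash?.clusterName); // prints B
```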

Error Description:

```
Error: Minified React error #31; visit https://reactjs.org/docs/error-decoder.html?invariant=31&args[]=ResourceError%3A%20nodepools.hypershift.openshift.io%20%22dhu-new-np1%22%20already%20exists for the full message or use the non-minified dev environment for full errors and additional helpful warnings.
    at _o (https://console-openshift-console.apps.clc-fc20.dev09.red-chesterfield.com/static/vendors~main-chunk-f976f8f46920cb701c89.min.js:254:51096)
    at https://console-openshift-console.apps.clc-fc20.dev09.red-chesterfield.com/static/vendors~main-chunk-f976f8f46920cb701c89.min.js:254:55933
    at Da (https://console-openshift-console.apps.clc-fc20.dev09.red-chesterfield.com/static/vendors~main-chunk-f976f8f46920cb701c89.min.js:254:66927)
    at Hs (https://console-openshift-console.apps.clc-fc20.dev09.red-chesterfield.com/static/vendors~main-chunk-f976f8f46920cb701c89.min.js:254:113413)
    at xc (https://console-openshift-console.apps.clc-fc20.dev09.red-chesterfield.com/static/vendors~main-chunk-f976f8f46920cb701c89.min.js:254:98327)
    at Cc (https://console-openshift-console.apps.clc-fc20.dev09.red-chesterfield.com/static/vendors~main-chunk-f976f8f46920cb701c89.min.js:254:98255)
    at _c (https://console-openshift-console.apps.clc-fc20.dev09.red-chesterfield.com/static/vendors~main-chunk-f976f8f46920cb701c89.min.js:254:98118)
    at pc (https://console-openshift-console.apps.clc-fc20.dev09.red-chesterfield.com/static/vendors~main-chunk-f976f8f46920cb701c89.min.js:254:95105)
    at https://console-openshift-console.apps.clc-fc20.dev09.red-chesterfield.com/static/vendors~main-chunk-f976f8f46920cb701c89.min.js:254:44774
    at t.unstable_runWithPriority (https://console-openshift-console.apps.clc-fc20.dev09.red-chesterfield.com/static/vendors~main-chunk-f976f8f46920cb701c89.min.js:262:3768)
```
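React error #31 decodes to "Objects are not valid as a React child", and the decoder argument here is the string form of a `ResourceError`. That suggests the UI rendered the error object itself rather than its message. The following is a minimal TypeScript sketch of the usual guard, assuming the error reaches the component as an `Error`-like object; `toErrorText` and the stand-in `ResourceError` class are hypothetical, not the console's actual code.

```typescript
// Hypothetical helper: convert any thrown value to a string so that it can be
// rendered as a React child without triggering error #31.
function toErrorText(err: unknown): string {
  if (typeof err === "string") return err;
  if (err instanceof Error) return err.message;
  // Some clients reject with plain objects that carry a message field.
  if (typeof err === "object" && err !== null && "message" in err) {
    const message = (err as { message: unknown }).message;
    if (typeof message === "string") return message;
  }
  return String(err);
}

// Stand-in for the console's ResourceError type (assumed shape).
class ResourceError extends Error {
  constructor(message: string) {
    super(message);
    this.name = "ResourceError";
  }
}

const err = new ResourceError('nodepools.hypershift.openshift.io "dhu-new-np1" already exists');
// Rendering {toErrorText(err)} instead of {err} passes a string, a valid child.
console.log(toErrorText(err));
```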

Version-Release number of selected component (if applicable):

How reproducible:

Steps to Reproduce:

- ...

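Actual results:

A minified React error (#31) is thrown in the UI instead of an "already exists" error for the node pool.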

Expected results:

An error is reported stating that the node pool already exists.
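When a create request collides with an existing name, the Kubernetes API server rejects it with a `Status` object whose `reason` is `AlreadyExists` (HTTP 409). The sketch below shows how the UI might turn that into the expected error, assuming the failed response body is available as a `Status`. `KubeStatus` covers only a subset of the real fields, and `describeCreateFailure` and its wording are hypothetical.

```typescript
// Minimal subset of the Kubernetes metav1.Status fields used here.
interface KubeStatus {
  kind: "Status";
  status: "Failure" | "Success";
  message?: string;
  reason?: string;
  code?: number;
}

// Hypothetical helper: build a user-facing message for a failed node pool create.
function describeCreateFailure(status: KubeStatus, name: string): string {
  if (status.reason === "AlreadyExists") {
    return `A node pool named "${name}" already exists in this namespace. Choose a different name.`;
  }
  // Fall back to the server's own message for any other failure.
  return status.message ?? `Failed to create node pool "${name}".`;
}

// Example response body for the name collision in this report (assumed shape).
const status: KubeStatus = {
  kind: "Status",
  status: "Failure",
  message: 'nodepools.hypershift.openshift.io "dhu-new-np1" already exists',
  reason: "AlreadyExists",
  code: 409,
};
console.log(describeCreateFailure(status, "dhu-new-np1"));
```

Matching on `reason` rather than on `code` alone avoids confusing this case with other 409 responses, such as update conflicts on a stale `resourceVersion`.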

Additional info:

Refreshing the page, or navigating away from the hosted cluster page and back, clears the error.

When the same operation is performed with the CLI, it is handled as expected:

```
2023-01-07T01:47:37-08:00 ERROR Failed to create nodepool {"error": "NodePool local-cluster/feat-clc-dhu-aws04-us-east-1a already exists"}
github.com/spf13/cobra.(*Command).execute
	/remote-source/app/vendor/github.com/spf13/cobra/command.go:872
github.com/spf13/cobra.(*Command).ExecuteC
	/remote-source/app/vendor/github.com/spf13/cobra/command.go:990
github.com/spf13/cobra.(*Command).Execute
	/remote-source/app/vendor/github.com/spf13/cobra/command.go:918
github.com/spf13/cobra.(*Command).ExecuteContext
	/remote-source/app/vendor/github.com/spf13/cobra/command.go:911
main.main
	/remote-source/app/main.go:70
runtime.main
	/usr/lib/golang/src/runtime/proc.go:250
Error: NodePool local-cluster/feat-clc-dhu-aws04-us-east-1a already exists
NodePool local-cluster/feat-clc-dhu-aws04-us-east-1a already exists
```