Epic

Resolution: Done

Critical

ACM 2.14.0

Enhance ClusterPermission API to show RoleBinding/ClusterRoleBinding status

Quality / Stability / Reliability

3

False

False

Green

Done

ACM-26601 - Fine-Grained RBAC experience at the hub (Phase 1)

0% To Do, 0% In Progress, 100% Done

Workload Mgmt Train 31 - 1, Workload Mgmt Train 31 - 2, Workload Mgmt Train 32 - 1, Workload Mgmt Train 32 - 2, Workload Mgmt Train 33 - 1

Critical

Description of problem:

When a ClusterPermission is created, the status looks like this:

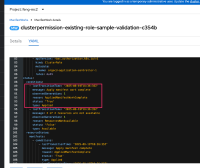

    ubuntu@ubuntu2404:~/REPOS/console_mshort55$ oc get clusterpermission -n sno-2-b9657 sno-2-b9657-kubevirt-admin -oyaml
    ...
    status:
      conditions:
      - lastTransitionTime: "2025-08-05T20:29:24Z"
        message: |-
          Run the following command to check the ManifestWork status:
          kubectl -n sno-2-b9657 get ManifestWork sno-2-b9657-kubevirt-admin-5a886 -o yaml
        reason: AppliedRBACManifestWork
        status: "True"
        type: AppliedRBACManifestWork

Relying on this status alone, it would appear that the ClusterPermission was applied successfully. However, if there is an underlying problem with the ManifestWork, it is not reported in the ClusterPermission status:

    ubuntu@ubuntu2404:~/REPOS/console_mshort55$ kubectl -n sno-2-b9657 get ManifestWork sno-2-b9657-kubevirt-admin-5a886 -o yaml
    ...
    status:
      conditions:
      - lastTransitionTime: "2025-08-14T17:46:11Z"
        message: Failed to apply manifest work
        observedGeneration: 2
        reason: AppliedManifestWorkFailed
        status: "False"
        type: Applied
      - lastTransitionTime: "2025-08-10T10:29:02Z"
        message: All resources are available
        observedGeneration: 2
        reason: ResourcesAvailable
        status: "True"
        type: Available
      resourceStatus:
        manifests:
        - conditions:
          - lastTransitionTime: "2025-08-14T17:46:11Z"
            message: 'Failed to apply manifest: ClusterRoleBinding.rbac.authorization.k8s.io "sno-2-b9657-kubevirt-admin" is invalid: roleRef: Invalid value: rbac.RoleRef{APIGroup:"rbac.authorization.k8s.io", Kind:"ClusterRole", Name:"kubevirt.io:adminn"}: cannot change roleRef'
            reason: AppliedManifestFailed
            status: "False"
            type: Applied
          - lastTransitionTime: "2025-08-10T10:29:02Z"
            message: Resource is available
            reason: ResourceAvailable
            status: "True"
            type: Available
          - lastTransitionTime: "2025-08-05T20:29:24Z"
            message: ""
            reason: NoStatusFeedbackSynced
            status: "True"
            type: StatusFeedbackSynced
          resourceMeta:
            group: rbac.authorization.k8s.io
            kind: ClusterRoleBinding
            name: sno-2-b9657-kubevirt-admin
            namespace: ""
            ordinal: 0
            resource: clusterrolebindings
            version: v1

This bug tracks fixing the ClusterPermission status so that it reports errors from the underlying ManifestWork. Clients such as the UI should be able to rely on the ClusterPermission status alone, without having to check underlying resources.
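As an illustration of the status roll-up requested above, here is a minimal Python sketch that derives a ClusterPermission-style condition from a parsed ManifestWork `status` (as a plain dict, in the shape of the output shown earlier). It is not the controller's actual implementation. The function names `failed_manifests` and `derive_condition` and the reason string `FailedRBACManifestWork` are hypothetical; only the `AppliedRBACManifestWork` type and reason come from the output above.

```python
from typing import Any, Dict, List


def failed_manifests(mw_status: Dict[str, Any]) -> List[str]:
    """Collect messages from per-manifest conditions where Applied is "False"."""
    failures = []
    manifests = mw_status.get("resourceStatus", {}).get("manifests", [])
    for manifest in manifests:
        meta = manifest.get("resourceMeta", {})
        for cond in manifest.get("conditions", []):
            if cond.get("type") == "Applied" and cond.get("status") == "False":
                kind = meta.get("kind", "?")
                name = meta.get("name", "?")
                failures.append(f"{kind}/{name}: {cond.get('message', '')}")
    return failures


def derive_condition(mw_status: Dict[str, Any]) -> Dict[str, str]:
    """Build a single condition summarizing whether the ManifestWork applied.

    The failure reason "FailedRBACManifestWork" is a hypothetical name
    chosen for this sketch, not a value confirmed by the product.
    """
    failures = failed_manifests(mw_status)
    # Fall back to the top-level Applied condition when no per-manifest
    # detail is available.
    top_level = [
        c.get("message", "")
        for c in mw_status.get("conditions", [])
        if c.get("type") == "Applied" and c.get("status") == "False"
    ]
    if failures or top_level:
        return {
            "type": "AppliedRBACManifestWork",
            "status": "False",
            "reason": "FailedRBACManifestWork",  # hypothetical reason
            "message": "; ".join(failures or top_level),
        }
    return {
        "type": "AppliedRBACManifestWork",
        "status": "True",
        "reason": "AppliedRBACManifestWork",
        "message": "",
    }
```

Given the failing ManifestWork status shown above, `derive_condition` would return a condition with `status: "False"` whose message names the ClusterRoleBinding and includes the `cannot change roleRef` error, so a client would not need to read the ManifestWork itself.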

Version-Release number of selected component (if applicable): ACM 2.14

How reproducible:

Every time.

Steps to Reproduce:

- Create a ClusterPermission successfully

- Edit the ClusterPermission and change the role name in roleRef

- The ClusterPermission will not show the ManifestWork error

Actual results:

ClusterPermission does not show an error status, even though ManifestWork has failed.

Expected results:

ClusterPermission should report ManifestWork failures in its status.

Additional info:

- blocks

  - ACM-25557 MulticlusterRoleAssignment - Role assignment status updates based on ClusterPermission status changes (Closed)

- is depended on by

  - ACM-23469 MulticlusterRoleAssignment - watchers mechanism [2.15] (Closed)

  - ACM-23009 MulticlusterRoleAssignment - CR Implementation [2.15] (Closed)

- relates to

  - ACM-20640 RBAC UI Implementation [2.15] - multiple sub tasks (Closed)