Bug

Resolution: Done

Undefined

None

ACM 2.7.0

False

False

Critical

No
Description of the problem:

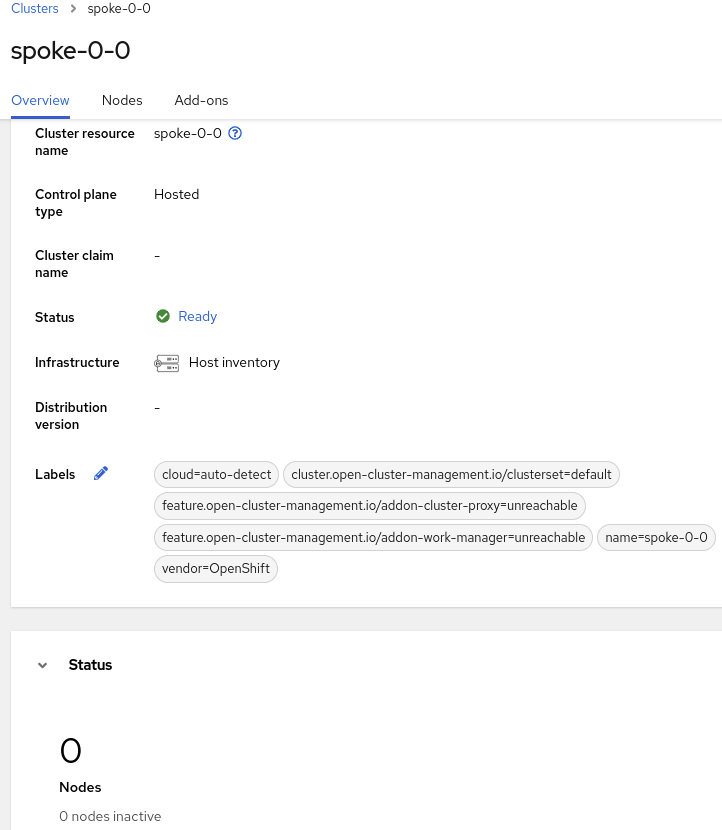

After a spoke cluster is installed on hypershift and imported via the UI, the cluster is reported as having 0 nodes.

In the Infrastructure tab I can see that 3 nodes are installed and are part of the cluster.

In the machine-approver-controller log I can see the error 'failed to get kubelet CA: ConfigMap "csr-controller-ca" not found'.

In the hypershift operator log:

{"level":"error","ts":"2022-12-05T11:27:56Z","msg":"Reconciler error","controller":"hostedcluster","controllerGroup":"hypershift.openshift.io","controllerKind":"HostedCluster","hostedCluster":{"name":"spoke-0-0","namespace":"clusters"},"namespace":"clusters","name":"spoke-0-0","reconcileID":"366cf617-8d62-445f-9d39-da4a3148e81d","error":"Operation cannot be fulfilled on hostedclusters.hypershift.openshift.io \"spoke-0-0\": the object has been modified; please apply your changes to the latest version and try again","errorCauses":[{"error":"Operation cannot be fulfilled on hostedclusters.hypershift.openshift.io \"spoke-0-0\": the object has been modified; please apply your changes to the latest version and try again"}],"stacktrace":"sigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).processNextWorkItem\n\t/remote-source/app/vendor/sigs.k8s.io/controller-runtime/pkg/internal/controller/controller.go:273\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).Start.func2.2\n\t/remote-source/app/vendor/sigs.k8s.io/controller-runtime/pkg/internal/controller/controller.go:234"}

{"level":"error","ts":"2022-12-05T11:27:53Z","msg":"Invalid infraID, waiting.","controller":"nodepool","controllerGroup":"hypershift.openshift.io","controllerKind":"NodePool","nodePool":{"name":"spoke-0-0","namespace":"clusters"},"namespace":"clusters","name":"spoke-0-0","reconcileID":"d448e2b1-194f-4f29-8600-5ca65a27fe10","error":"infraID can't be empty","stacktrace":"github.com/openshift/hypershift/hypershift-operator/controllers/nodepool.(*NodePoolReconciler).Reconcile\n\t/remote-source/app/hypershift-operator/controllers/nodepool/nodepool_controller.go:182\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).Reconcile\n\t/remote-source/app/vendor/sigs.k8s.io/controller-runtime/pkg/internal/controller/controller.go:121\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).reconcileHandler\n\t/remote-source/app/vendor/sigs.k8s.io/controller-runtime/pkg/internal/controller/controller.go:320\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).processNextWorkItem\n\t/remote-source/app/vendor/sigs.k8s.io/controller-runtime/pkg/internal/controller/controller.go:273\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).Start.func2.2\n\t/remote-source/app/vendor/sigs.k8s.io/controller-runtime/pkg/internal/controller/controller.go:234"}
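For triage, the operator log entries can be grouped by controller and error message. The following is a minimal Python sketch, assuming the log is available as one JSON object per line. The function name `summarize_errors` and the sample list `SAMPLE_LINES` are hypothetical and only for illustration; the sample entries are abbreviated copies of the two log lines quoted in this report (stack traces and several fields omitted).

```python
import json
from collections import Counter


def summarize_errors(lines):
    """Count error-level log entries per (controller, error) pair.

    Hypothetical helper: assumes each line is a single JSON object,
    as in the hypershift operator log lines quoted in this report.
    """
    counts = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip lines that are not valid JSON
        if not isinstance(entry, dict) or entry.get("level") != "error":
            continue
        controller = entry.get("controller", "unknown")
        # Fall back to the message when no "error" field is present.
        error = entry.get("error", entry.get("msg", "unknown"))
        counts[(controller, error)] += 1
    return counts


# Abbreviated copies of the two entries from this report (assumption:
# the omitted fields are not needed for the summary).
SAMPLE_LINES = [
    json.dumps({
        "level": "error",
        "ts": "2022-12-05T11:27:56Z",
        "msg": "Reconciler error",
        "controller": "hostedcluster",
        "name": "spoke-0-0",
        "error": "Operation cannot be fulfilled on "
                 "hostedclusters.hypershift.openshift.io \"spoke-0-0\": "
                 "the object has been modified; please apply your changes "
                 "to the latest version and try again",
    }),
    json.dumps({
        "level": "error",
        "ts": "2022-12-05T11:27:53Z",
        "msg": "Invalid infraID, waiting.",
        "controller": "nodepool",
        "name": "spoke-0-0",
        "error": "infraID can't be empty",
    }),
]

if __name__ == "__main__":
    for (controller, error), n in summarize_errors(SAMPLE_LINES).items():
        print(f"{n}x [{controller}] {error}")
```

Run against a full log, a summary like this may help show whether the nodepool "infraID can't be empty" error keeps recurring or was only transient while the HostedCluster was being reconciled.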

How reproducible:

Tried 2 times, with the same result both times.

Steps to reproduce:

1. Deploy hypershift with 3 masters

2. Deploy spoke cluster with 3 workers

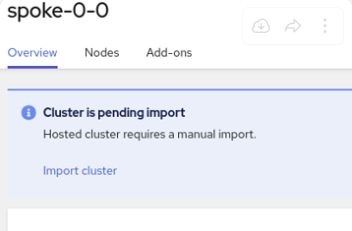

3. Import the cluster using the UI

4. Ensure the IP for application is set correctly in /etc/NetworkManager/dnsmasq.d/openshift.conf on the hypervisor (the correct IP can be found by running "oc get pods -n openshift-ingress -o wide" on the spoke). Restart NetworkManager if the IP was changed.

Actual results:

0 nodes (see the problem description)

Expected results:

The spoke cluster is Ready and 3 workers are attached to it

4.12.0-0.nightly-2022-12-04-160656

MCE 2.2.0-DOWNANDBACK-2022-12-01-11-02-43