Details

- Bug
- Resolution: Done
- Minor
- 5.0.0.Final
- None
- False
- False
- Undefined

Description

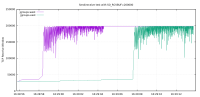

I recently finished troubleshooting a unidirectional throughput bottleneck involving a JGroups application (Infinispan) communicating over a high-latency (~45 milliseconds) TCP connection.

The root cause was JGroups improperly configuring the receive/send buffers on the listening socket. According to the tcp(7) man page:

"On individual connections, the socket buffer size must be set prior to the listen(2) or connect(2) calls in order to have it take effect."

However, JGroups does not set the buffer size on the listening side until after accept().
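For illustration, here is a minimal sketch of the ordering the man page asks for, written against the plain `java.net` API rather than JGroups' own code. The class name `ListenBufferSketch`, the helper `openListener`, and the 1 MiB buffer size are hypothetical and chosen only for this example. The key point is that the `ServerSocket` is created unbound, so the receive buffer can be requested before `bind()` (which, in Java, also puts the socket into the listening state).

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.ServerSocket;

public class ListenBufferSketch {

    // Hypothetical helper, not part of JGroups: requests the receive buffer
    // size on an unbound ServerSocket and only then binds it.
    static ServerSocket openListener(int port, int recvBufBytes) throws IOException {
        ServerSocket server = new ServerSocket();    // no-arg constructor: not yet bound
        server.setReceiveBufferSize(recvBufBytes);   // must happen before bind()/listen
        server.bind(new InetSocketAddress(port));    // bind + listen
        return server;
    }

    public static void main(String[] args) throws IOException {
        // Port 0 asks the OS for any free port; 1 MiB is an arbitrary example value.
        try (ServerSocket server = openListener(0, 1 << 20)) {
            // The OS may clamp or adjust the requested size, so print what was granted.
            System.out.println("listening on port " + server.getLocalPort()
                    + ", SO_RCVBUF=" + server.getReceiveBufferSize());
        }
    }
}
```

Sockets returned by `accept()` on such a listener inherit the buffer size requested here. The operating system may still cap the value (on Linux, via `net.core.rmem_max`), so the size actually granted is worth checking.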

The result is poor throughput when sending data from the client (connecting side) to the server (listening side). Because the TCP receive window is too small, at most one window of data can be in flight per round trip, so throughput is bounded by latency rather than by link bandwidth.
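To give a feel for the size of this bound, the sketch below computes window size divided by round-trip time. The 45 ms figure comes from the report above; the 65,535-byte window is an assumption (the largest window TCP can advertise without window scaling), since the actual window observed is not stated here. The class and method names are hypothetical.

```java
public class WindowBoundSketch {

    // Upper bound on single-connection throughput: at most one receive
    // window of data can be delivered per round trip.
    static double maxBytesPerSecond(long windowBytes, double rttSeconds) {
        return windowBytes / rttSeconds;
    }

    public static void main(String[] args) {
        double rttSeconds = 0.045;   // ~45 ms, from the report
        long windowBytes = 65_535;   // assumption: maximum window without window scaling

        double bytesPerSecond = maxBytesPerSecond(windowBytes, rttSeconds);
        System.out.printf("%.2f MB/s (%.1f Mbit/s)%n",
                bytesPerSecond / 1e6, bytesPerSecond * 8 / 1e6);
    }
}
```

Under these assumptions the ceiling is roughly 1.5 MB/s (about 12 Mbit/s), regardless of how much bandwidth the link itself offers.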